
Portable CAD Automation: Cross-Platform Scripting, Deterministic IR, and Secure Headless Workflows

November 09, 2025 12 min read

Why portable CAD automation matters now

Fragmented ecosystems make automation brittle and expensive

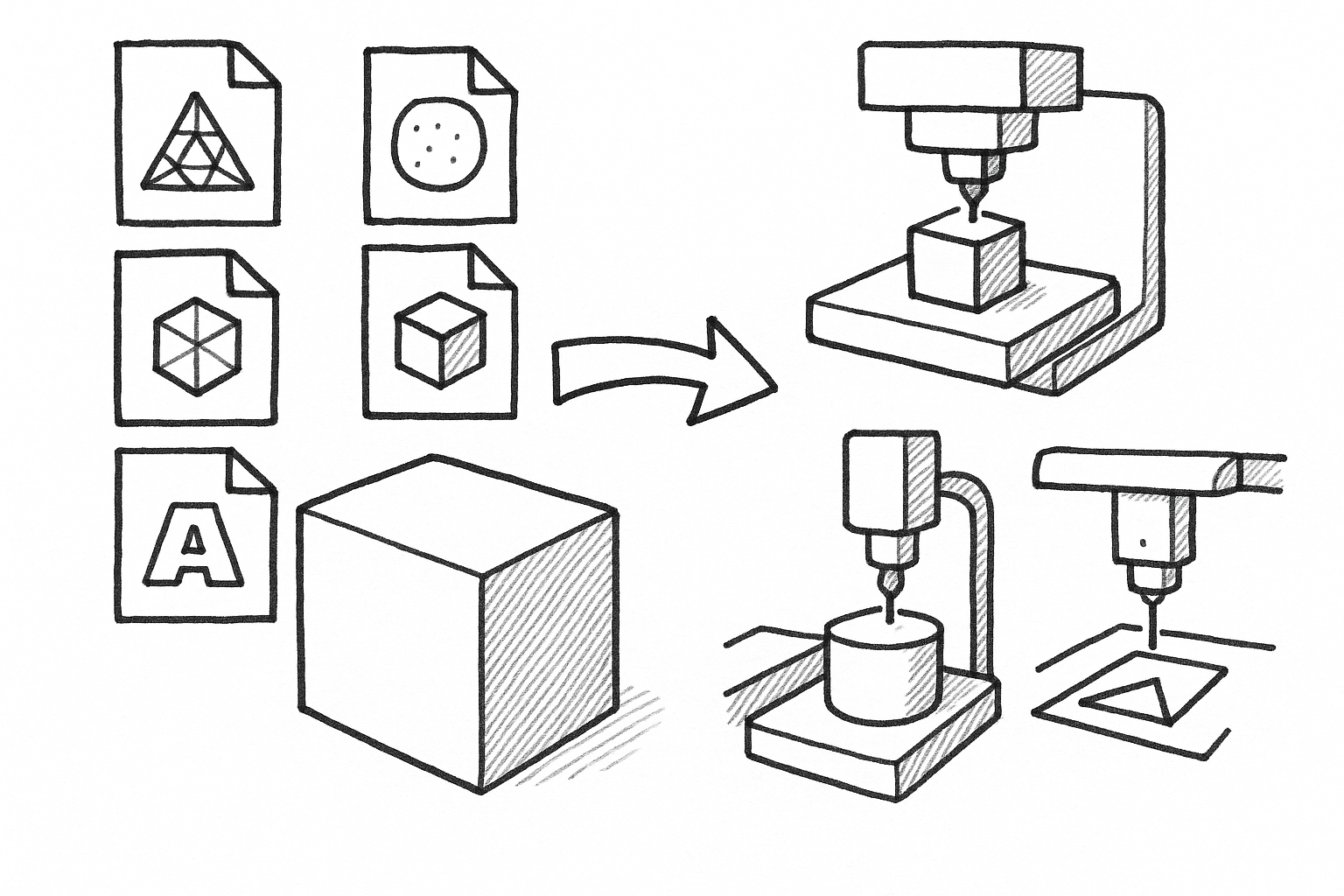

Modern product teams operate across a mosaic of CAD platforms and programming models. One group scripts part generation through NXOpen in C#, another maintains SolidWorks API macros in VBA, a supplier contributes Inventor automations in C++, and a visualization specialist prototypes geometry fixes through RhinoCommon in C#. Meanwhile, cloud-forward teams automate features in Onshape with FeatureScript or use Fusion’s Python APIs for data prep. This heterogeneity multiplies cost and risk: each automation must be reimplemented per tool, validated per kernel, and re-tuned when vendors change versions or deprecate calls. Even simple intents—like “create a sketch on the front datum, apply constraints, extrude to a blind depth, and fillet the edges”—become divergent code paths. Configuration control suffers because bug fixes and enhancements must propagate across disjoint repositories and languages. The result is a backlog of fragile scripts that are hard to test, harder to audit, and easy to break when models evolve. A **portable CAD automation** layer collapses this duplication by standardizing operations and reference semantics across NXOpen, SolidWorks API, Inventor, CATIA CAA, Fusion/Autodesk Platform Services, Onshape, and RhinoCommon. With a shared vocabulary and execution model, teams can invest in one script that runs anywhere, while vendor-specific adapters handle the messy differences under the hood. The outcome is faster delivery, fewer regressions, and a foundation for unified governance.

- One intent, many hosts: “extrude feature” written once, executed across multiple CAD kernels.

- Single source of truth for automation logic; adapters localize host differences.

- Lower onboarding burden; engineers learn an abstraction, not every vendor API.

Divergent abstractions block “write once, run anywhere”

Even when two systems share feature names, their semantics often diverge. Parasolid, CGM, and ACIS are distinct geometric kernels with different tolerance models, and they surface subtly incompatible behaviors: sketch solvers differ in constraint priority and degree-of-freedom counting; fillet engines vary in corner patch strategies; booleans disagree on sliver face cleanup; persistent identifiers mean one thing in one host and something else in another. Selection mechanics further complicate portability: some APIs bind to transient indices, others expose robust persistent IDs, and still others require interactive picks. What looks like a trivial “select all vertical edges and apply a constant-radius fillet” becomes a forest of host-specific filters and topology-walking heuristics. Without a normalized way to declare intent (feature types, reference roles, dimensions with units and tolerances), automation remains entangled with implementation quirks. A cross-platform layer must handle the ontology gap by defining stable verbs (create_sketch, apply_constraint, extrude, revolve, pattern, shell, draft, boolean), typed parameters, and reference semantics that survive regeneration. This is not about dumbing everything down; it’s about providing **graceful degradation** and capability negotiation so advanced hosts can do more while simpler hosts fall back predictably. Only with a shared abstraction can teams compose durable automation that stays true to design intent across kernels.

- Kernel-aware tolerances: explicit model units and numeric tolerances per operation.

- Persistent reference semantics that resist topology renumbering.

- Declared operation outcomes with host-provided fallbacks.

Scale pressures demand deterministic, headless pipelines

Design automation has expanded from convenience macros into production infrastructure. Continuous integration for CAD validates parameter changes, regenerates models headlessly, and flags regressions through visual and semantic diffs. Variant generation pipelines build thousands of options for sales configurators or platform architectures. Multidisciplinary design optimization (MDO) loops run sweeps across geometry, materials, and constraints, feeding simulation and costing tools. These workloads require deterministic and auditable scripts that produce the same geometry given the same inputs, enforce time budgets, and emit traceable logs for compliance. They also need stable, headless execution with predictable dependencies, not interactive sessions that rely on GUI state. The key is a **deterministic operation IR**—a declarative graph that sequences feature creation, constraints, and modifications with explicit dependencies, idempotence guarantees, and transaction semantics for commit/rollback. Built into this model are numeric robustness controls (tolerances, unit systems, and solver seeds) to minimize stochastic outcomes. With such an IR, teams can scale from laptops to clusters and clouds, version-lock dependencies, and reproduce builds as reliably as software. Automation stops being a set of ad hoc scripts and becomes a tested, governed pipeline artifact.

- Headless regeneration with bounded runtime and deterministic outputs.

- Reproducible builds with pinned package versions and captured seeds.

- Audit logs linking input parameter sets to generated geometry and metadata.

Hybrid cloud requires sandboxed, network-aware automation

CAD is no longer confined to desktop binaries. Teams increasingly blend desktop tools with cloud-native systems that expose REST or graph APIs, real-time collaboration, and server-side modeling. Automation must span this hybrid reality. Scripts need to respect identity boundaries, network policies, and **IP protection** while still orchestrating operations across desktops and services. That means sandboxed runtimes that default to “network off,” data-scoped permissions (e.g., read-geometry, write-features), and explicit egress policies for derived artifacts like meshes or drawings. Event-driven integrations (document open, regen complete) need throttling and debouncing so that automations neither overwhelm cloud backends nor exceed their rate limits. Meanwhile, the standard must preserve design intent across online/offline transitions: recorded sessions should replay deterministically whether they run locally or in a cloud worker. By normalizing packaging, permissions, and event semantics, cross-platform automation becomes deployable in regulated environments and scalable in elastic ones. The result is a consistent contract for hybrid execution that shields teams from vendor-specific sandbox quirks and provides predictable behavior under enterprise security controls.

- Default-deny permissions with least privilege for geometry and metadata access.

- Deterministic playback for recorded actions across desktop and cloud hosts.

- Configurable rate limiting and backoff to comply with service constraints.
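
The throttling and backoff behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not part of any published standard: the function names `backoff_delays` and `debounce`, their parameters, and the default values are all assumptions chosen for the example. Timestamps are passed in explicitly so the logic stays deterministic and testable without a real clock.

```python
import random


def backoff_delays(attempts, base=0.5, factor=2.0, cap=30.0, seed=None):
    """Return retry delays in seconds: exponential growth, capped at `cap`.

    With seed=None no jitter is applied, so the schedule is fully fixed.
    With a seed, each delay is drawn uniformly from [0, ceiling] using a
    seeded generator, so the schedule can be reproduced from the seed.
    """
    rng = random.Random(seed) if seed is not None else None
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap, base * factor ** attempt)
        delays.append(rng.uniform(0.0, ceiling) if rng is not None else ceiling)
    return delays


def debounce(timestamps, quiet):
    """Trailing-edge debounce over sorted event timestamps (seconds).

    An event is kept only if no later event arrives within `quiet`
    seconds of it, so a burst collapses to its final event.
    """
    kept = []
    for i, t in enumerate(timestamps):
        is_last = i == len(timestamps) - 1
        if is_last or timestamps[i + 1] - t > quiet:
            kept.append(t)
    return kept
```

With the defaults, `backoff_delays(5)` yields `[0.5, 1.0, 2.0, 4.0, 8.0]`, and a burst of "regen complete" events at 0.0, 0.1, and 0.2 seconds collapses to the single event at 0.2 when `quiet` is 0.5. Capturing the jitter seed keeps even randomized retry schedules reproducible, in line with the determinism goals of the earlier section.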

Portability is a strategic hedge against business risk

Beyond engineering elegance, portability reduces exposure to vendor churn, licensing shifts, and compliance mandates. Lock-in inflates switching costs and slows down mergers, supplier onboarding, and regional expansion. Regulations increasingly require traceable lineage from requirements to geometry, with persistent proofs of who changed what, when, and with which tools. If your automation is bound to one vendor’s API or one kernel’s quirks, every audit, migration, or disaster recovery scenario becomes an unfunded liability. A **cross-platform scripting standard** acts as a hedge: you can move workloads to alternate hosts, distribute operations across partners’ systems without sharing proprietary macros, and prove design provenance independent of any single application. It also creates hiring leverage—engineers can be productive without memorizing every API variant. Most importantly, it reframes procurement: you can evaluate vendors on capability and performance rather than on the sunk cost of bespoke automations. Portability is not just convenience; it is risk management, compliance posture, and negotiating power distilled into technical form.

- Traceable automation with provenance that survives vendor transitions.

- Reduced switching costs through adapter-based portability.

- Broader talent pool, lower training costs, and faster onboarding.

What a cross-platform scripting standard must define

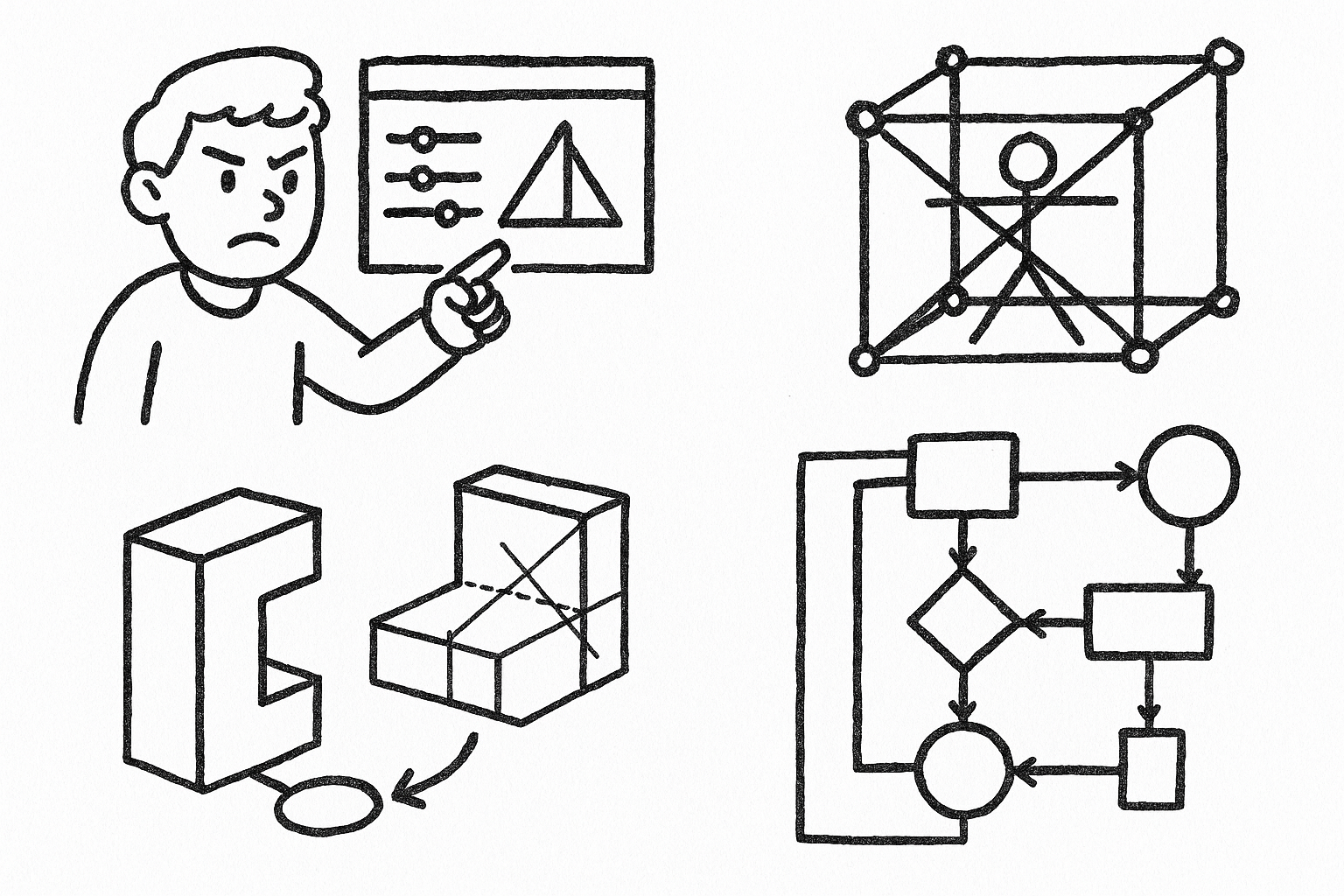

A normalized feature and operation ontology

At the heart of portability is a common vocabulary. The standard should define verbs that are both familiar and precise—create_sketch, apply_constraint, extrude, revolve, fillet, chamfer, shell, pattern, boolean, draft, and datum operations (planes, axes, points). Each verb accepts typed parameters with units, optional tolerances, and reference roles (e.g., profile, direction, limit face) that make intent explicit. References must resolve by persistent identifiers and semantic selectors, not transient screen picks or array indices. Because hosts vary, the ontology needs a capability matrix with declared minimums and **graceful degradation**. For example, a variable-radius fillet can fall back to constant-radius if unsupported, and a symmetric extrude can degrade to two blind limits. Scripts should communicate expectations upfront: which features are required, which are optional, and what fallbacks are acceptable. This promotes predictable outcomes and simplifies conformance testing. Finally, the ontology should encode units and default tolerances at both model and operation levels so numerics remain explicit, not implicit. By building on this shared language, teams can compose complex workflows that read like intent rather than API plumbing, and adapters can translate intent into host-native calls while honoring declared capabilities and fallbacks.

- Explicit param types: length, angle, pattern count, pattern spacing, with units and tolerances.

- Reference semantics: by role and persistent ID, not by GUI pick order.

- Declared fallbacks: per-verb capability negotiation and acceptable degradations.
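
To make the idea of typed parameters with explicit units concrete, here is a hedged Python sketch. The class names `Length` and `Extrude`, the reference ID strings, and the dictionary layout returned by `to_ir` are hypothetical; the article does not define a concrete schema, so treat this as one possible shape rather than the standard's actual format.

```python
from dataclasses import dataclass

# Conversion factors to millimetres; the unit set is an assumption.
_MM_PER_UNIT = {"mm": 1.0, "cm": 10.0, "m": 1000.0, "in": 25.4}


@dataclass(frozen=True)
class Length:
    value: float
    unit: str
    tolerance: float = 0.0  # expressed in the same unit as `value`

    def __post_init__(self):
        # Reject unknown units up front instead of guessing a default.
        if self.unit not in _MM_PER_UNIT:
            raise ValueError(f"unknown length unit: {self.unit!r}")

    def to_mm(self):
        return self.value * _MM_PER_UNIT[self.unit]

    def tolerance_mm(self):
        return self.tolerance * _MM_PER_UNIT[self.unit]


@dataclass(frozen=True)
class Extrude:
    profile: str    # reference role: persistent ID of the sketch profile
    direction: str  # reference role: persistent ID of a direction datum
    depth: Length

    def to_ir(self):
        """Emit a plain dict with units and tolerance spelled out."""
        return {
            "verb": "extrude",
            "refs": {"profile": self.profile, "direction": self.direction},
            "params": {
                "depth": {
                    "type": "length",
                    "value": self.depth.to_mm(),
                    "unit": "mm",
                    "tolerance": self.depth.tolerance_mm(),
                }
            },
        }
```

For example, `Extrude("sketch:base-profile", "datum:front-normal", Length(1.0, "in", 0.001)).to_ir()` produces a depth normalized to millimetres with its tolerance converted alongside it. Normalizing at the boundary means an adapter never has to infer what unit a bare number was meant to carry.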

An operation IR with transactions, determinism, and provenance

The execution substrate should be a declarative operation graph rather than an opaque script. Nodes represent operations with inputs, outputs, and side effects; edges represent dependencies and reference bindings. Transactions allow grouping operations into atomic units with commit/rollback semantics, improving error recovery and enabling partial retries. Idempotence rules and deterministic ordering eliminate flakes: given the same graph, inputs, and seeds, geometry must be identical within stated tolerances. Numeric robustness is first-class—unit systems are declared, tolerances are explicit, and any stochastic solvers accept captured random seeds. Because traceability matters, the IR carries provenance blocks: author identities, timestamps, tooling versions, source hashes, and environment fingerprints. This makes automation verifiable and audits straightforward. The IR should also support annotations for expected invariants (e.g., number of features, mass properties within a tolerance) that hosts can validate after execution. Together, these elements turn automation into a reproducible artifact similar to a build manifest in software engineering, enabling confident distribution across teams, suppliers, and compute environments while maintaining **determinism** and explainability.

- Declarative DAG of operations with explicit dependencies and reference bindings.

- Transaction scopes for atomic commits and safe rollbacks.

- Provenance metadata and seeds for reproducibility and audits.
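
A small Python sketch can illustrate two of the properties listed above: deterministic ordering of a dependency graph and a source hash suitable for a provenance block. The graph encoding (a dict mapping each operation ID to the IDs it depends on) and the function names are assumptions made for this example. The ordering uses Kahn's algorithm with a min-heap so that ties are always broken alphabetically, independent of insertion order.

```python
import hashlib
import heapq
import json


def deterministic_order(deps):
    """Topologically sort `deps` (node -> list of nodes it depends on).

    Nodes that are ready at the same time are emitted in sorted order,
    so the same graph always yields the same sequence.
    """
    indegree = {node: len(parents) for node, parents in deps.items()}
    dependents = {node: [] for node in deps}
    for node, parents in deps.items():
        for parent in parents:
            dependents[parent].append(node)  # KeyError if parent is undeclared
    ready = [node for node, degree in indegree.items() if degree == 0]
    heapq.heapify(ready)
    order = []
    while ready:
        node = heapq.heappop(ready)
        order.append(node)
        for child in dependents[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                heapq.heappush(ready, child)
    if len(order) != len(deps):
        raise ValueError("operation graph contains a cycle")
    return order


def graph_hash(graph):
    """SHA-256 over canonical JSON (sorted keys, fixed separators)."""
    canonical = json.dumps(graph, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Given `{"fillet": ["extrude"], "extrude": ["sketch"], "sketch": [], "datum": []}`, the order is `["datum", "sketch", "extrude", "fillet"]` regardless of how the dict was built. The hash is likewise insensitive to key order, which makes it usable as a stable fingerprint in audit records.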

Stable model queries and intent-aware selectors

Portability fails when selections drift after regeneration. The standard must confront the topological naming problem head-on with intent-aware selectors that bind to semantics, not ephemeral IDs. Select by feature type and role (e.g., “edges generated by the Extrude ‘Boss’ side face”), by tags and name patterns, or by topology queries (“all edges on faces with normal parallel to datum ‘Front’ within tolerance”). Prefer binding to features and construction geometry instead of raw topology when possible, and encode fallback strategies when exact matches fail. In addition to geometry, selectors should span product metadata: MBD/GD&T, materials, appearances, and PMI. Query expressions ought to be declarative, composable, and stable across rebuilds and across hosts with different kernel behaviors. To support automation at scale, queries should be cacheable and verifiable—hosts can emit diagnostics showing which entities matched and why, improving debuggability. With **intent-aware selectors**, scripts survive changes in tessellation density, face splitting, or solver tie-breakers, preserving design intent even when topology shifts. Combined with the IR, queries form the connective tissue that lets portable operations act on the right entities, consistently and explainably.

- Selectors by role, feature lineage, tags, and semantic filters.

- Topology queries with geometric predicates and tolerances.

- PMI/MBD-aware queries for GD&T-driven automation.
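
The topology query quoted above ("all edges on faces with normal parallel to datum 'Front' within tolerance") can be sketched as a geometric predicate in Python. The face records here are a made-up in-memory structure (a dict with `normal` and `edges` keys), not any host's real API, and treating anti-parallel normals as a match is a design assumption of this example.

```python
import math


def _unit(vector):
    """Normalize a 3D vector; a zero vector has no direction."""
    norm = math.sqrt(sum(c * c for c in vector))
    if norm == 0.0:
        raise ValueError("zero-length vector has no direction")
    return tuple(c / norm for c in vector)


def is_parallel(a, b, angle_tol_deg=0.5):
    """True if a and b are parallel or anti-parallel within the tolerance."""
    ua, ub = _unit(a), _unit(b)
    # abs() folds anti-parallel onto parallel; min() guards acos against
    # values a hair above 1.0 caused by floating-point rounding.
    cosine = min(1.0, abs(sum(x * y for x, y in zip(ua, ub))))
    return math.degrees(math.acos(cosine)) <= angle_tol_deg


def select_edges(faces, datum_normal, angle_tol_deg=0.5):
    """Return sorted IDs of edges on faces whose normal matches the datum."""
    hits = set()
    for face in faces:
        if is_parallel(face["normal"], datum_normal, angle_tol_deg):
            hits.update(face["edges"])
    return sorted(hits)
```

Returning a sorted list rather than a set keeps the result order stable across runs, which matters when downstream operations consume the selection. The explicit angular tolerance is what lets the same query behave consistently on hosts whose kernels report slightly different normals.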

Language-neutral runtime, secure packaging, and coherent events

Developers should write in the languages they know while targeting a portable core. The IR becomes the lingua franca, with reference bindings provided for Python and WebAssembly (WASI) to enable **sandboxed runtimes** that run the same automation on desktops, servers, or cloud workers. Packaging must be strict: signed bundles with dependency locking, verified hashes for IR and assets, and data-scoped permissions—e.g., read-geometry, write-features, read/write metadata—so enterprises can enforce least privilege. Network access should default to off, with explicit per-endpoint egress policies. An event and interaction model rounds out the runtime: standard events such as document open/close, feature regeneration started/completed, selection changed, and solve completed, all with throttling/debouncing to prevent storms in collaborative sessions. Deterministic playback from recorded sessions allows teams to capture expert workflows and turn them into portable scripts without reimplementation. The runtime also needs well-defined headless and interactive modes so the same automation can power CI pipelines or assist designers live. With these ingredients—language neutrality, secure packages, and consistent events—automation becomes deployable anywhere without sacrificing security or ergonomics.

- Python and WASI bindings targeting a single IR for cross-host execution.

- Signed, permission-scoped packages with offline-by-default networking.

- Standardized events and deterministic session replay.
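
As an illustration of default-deny permissions and offline-by-default networking, the following Python sketch validates a package manifest against an enterprise policy. The manifest layout is hypothetical. The scope names `read-geometry` and `write-features` come from the text above, while `read-metadata` and `write-metadata` are assumed spellings of the "read/write metadata" permission.

```python
# Scope vocabulary; the two metadata names are assumptions for this sketch.
KNOWN_SCOPES = frozenset(
    {"read-geometry", "write-features", "read-metadata", "write-metadata"}
)


def check_manifest(manifest, granted_scopes):
    """Return a list of violations; an empty list means the package may run.

    Anything not explicitly granted is denied, and enabling the network
    without listing egress endpoints is itself a violation.
    """
    violations = []
    requested = set(manifest.get("permissions", []))
    for scope in sorted(requested - KNOWN_SCOPES):
        violations.append(f"unknown scope: {scope}")
    for scope in sorted((requested & KNOWN_SCOPES) - set(granted_scopes)):
        violations.append(f"scope not granted: {scope}")
    network = manifest.get("network", {})
    if network.get("enabled", False) and not network.get("egress", []):
        violations.append("network enabled without explicit egress endpoints")
    return violations


def may_connect(manifest, host):
    """Network is off unless enabled AND the host is an allowed endpoint."""
    network = manifest.get("network", {})
    return bool(network.get("enabled", False)) and host in network.get("egress", [])
```

A manifest that omits the `network` key entirely is treated as network-off, so forgetting to declare something never widens access. In a real runtime these checks would sit alongside signature and hash verification, which this sketch leaves out.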

Testing, conformance levels, and security guarantees

Portability must be measured, not assumed. A conformance program should define golden-model test suites with semantic visual diffs, mass/center-of-gravity tolerances, and regeneration time budgets. Vendors and integrators can certify at levels that reflect capability: L1 (read/query), L2 (feature create/modify), L3 (sketch constraint edit), and L4 (assembly and cross-part references). Each level specifies required verbs, selectors, and performance envelopes, with transparent reports. On the security front, enterprise adoption depends on robust guarantees: sandboxed execution isolating scripts from host file systems, explicit data egress rules, and privacy-preserving telemetry with redaction hooks. Audit logs must capture who ran what, where, and against which data, producing an immutable trail for regulated contexts. Combined, these measures turn the standard into a contract: if a host claims L3, your parameterized sketch edits will behave predictably and safely; if a package declares “network-off,” it will be enforced. Security and testing are not afterthoughts—they are the scaffolding that makes **cross-platform scripting** credible in production, enabling shared automation to flow between organizations without compromising IP, compliance, or uptime.

- Golden-model corpus with semantic and numeric tolerances.

- Tiered conformance (L1–L4) mapped to explicit capabilities.

- Sandboxing, telemetry redaction, and immutable audit logging.
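
A golden-model check of the kind described above might look like the following Python sketch. The record layout, tolerance defaults, and function names are assumptions for illustration. The sketch also assumes conformance levels are cumulative (a host at L3 satisfies an L2 requirement), which the text above does not state explicitly.

```python
import math
from enum import IntEnum


class Level(IntEnum):
    L1 = 1  # read/query
    L2 = 2  # feature create/modify
    L3 = 3  # sketch constraint edit
    L4 = 4  # assembly and cross-part references


def meets(host_level, required_level):
    """Assumes levels are cumulative: a higher level implies the lower ones."""
    return host_level >= required_level


def compare_to_golden(measured, golden, mass_rel_tol=1e-4,
                      cog_abs_tol=1e-3, time_budget_s=None):
    """Return the names of failed checks; an empty list means a pass.

    `measured` and `golden` carry "mass" and "cog" (an x, y, z tuple);
    `measured` must also carry "regen_s" when a time budget is given.
    """
    failures = []
    if not math.isclose(measured["mass"], golden["mass"], rel_tol=mass_rel_tol):
        failures.append("mass")
    if math.dist(measured["cog"], golden["cog"]) > cog_abs_tol:
        failures.append("cog")
    if time_budget_s is not None and measured["regen_s"] > time_budget_s:
        failures.append("regen_time")
    return failures
```

Reporting every failed check, rather than stopping at the first, gives conformance reports enough detail to distinguish a numeric drift from a performance regression.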

Reference architecture and adoption path

Host adapters and kernel-aware bridges

The practical road to portability runs through thin adapters that map the standard IR to native APIs. Each adapter knows how to translate verbs and selectors into host-specific calls—NXOpen for Siemens NX, SolidWorks API for SolidWorks, Inventor’s API for Autodesk Inventor, CATIA CAA for CATIA, Fusion/Autodesk Platform Services for Fusion, FeatureScript for Onshape, FreeCAD’s Python API, and RhinoCommon/Grasshopper for Rhino. Because kernels behave differently, adapters also include kernel-aware bridges that choose numeric strategies and tolerances suited to Parasolid, CGM, or ACIS. For example, if a host’s fillet engine requires larger minimum radii due to curvature limits, the adapter can negotiate fallbacks or adjust inputs within declared tolerances. Adapters expose capability metadata so scripts can probe for features at runtime and degrade gracefully where needed. Importantly, adapters remain thin: they avoid adding new semantics, focusing instead on faithful translation and robust error mapping back to the IR’s transaction model. This architecture lets you introduce new hosts or versions by upgrading adapters rather than rewriting automation, turning vendor diversity from a liability into an extensible surface you can plan for.

- Verb-to-API translation with precise error mapping and recovery.

- Kernel-tuned tolerances and numeric strategies per host.

- Capability discovery for runtime negotiation and fallbacks.
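
Capability discovery with declared fallbacks can be sketched as a short negotiation routine. The variant names such as `fillet.variable_radius` and the shape of the fallback table are hypothetical; the example follows the variable-radius to constant-radius fillet degradation mentioned earlier in the article.

```python
def negotiate(requested, host_capabilities, fallbacks, accepted):
    """Pick the strongest operation variant the host can run.

    requested:         variant the script prefers
    host_capabilities: set of variants the adapter reports as supported
    fallbacks:         dict mapping a variant to its next weaker variant
    accepted:          set of degradations the script declared acceptable

    Raises LookupError when neither the requested variant nor any
    acceptable fallback is supported, so failures are never silent.
    """
    variant = requested
    seen = set()
    while variant is not None and variant not in seen:
        seen.add(variant)  # guards against a cyclic fallback table
        allowed = variant == requested or variant in accepted
        if allowed and variant in host_capabilities:
            return variant
        variant = fallbacks.get(variant)
    raise LookupError(f"host cannot satisfy {requested!r} or any accepted fallback")
```

The key design choice is that a fallback is used only when the script has opted into it. A host that supports only constant-radius fillets will not quietly downgrade a script that declared variable radius as mandatory.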

Tooling: recorder, linter, fuzzer, and diff close the loop

A thriving ecosystem surrounds the core standard. A recorder captures native user actions and emits normalized IR with stable selectors, transforming expert workflows into reusable automation. A linter evaluates scripts against portability rules: reject implicit unit assumptions, flag kernel-specific edge cases without fallbacks, and warn about selectors tied to volatile topology. A fuzzer stress-tests regenerations with parameter sweeps, solver seeds, and tolerance jitter to detect non-determinism and performance cliffs before they hit production. Visual and semantic diff tools compare feature trees, PMI, mass properties, and bounding boxes across hosts, producing concise reports that are meaningful to engineers and quality teams. Together, these tools operationalize portability: you can capture, normalize, validate, and continuously test automations like any other software artifact. Integrated into CI, they enforce baselines and prevent regressions. Embedded in IDEs, they shorten feedback loops for authors. And when combined with signed packages and audit logs, they deliver end-to-end governance from authoring through deployment and monitoring, making **portable CAD automation** practical at scale.

- Capture to IR with robust selectors; no manual re-coding required.

- Policy-as-code lint rules to enforce portability and security.

- Automated diffs that communicate change impact beyond screenshots.
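
Two of the lint rules mentioned above, rejecting implicit units and warning about selectors tied to volatile topology, can be sketched as follows. The operation record layout, the rule names, and the heuristic that an integer or an `Edge[3]`-style string signals an index-based selector are all assumptions for this example.

```python
import re

# Parameter types that must always carry a unit (assumed set).
DIMENSIONAL_TYPES = {"length", "angle"}
# Matches references ending in a bracketed index, e.g. "Edge[3]".
_INDEX_SUFFIX = re.compile(r"\[\d+\]$")


def lint(ops):
    """Return (op_index, rule, name) findings for a list of operations."""
    findings = []
    for i, op in enumerate(ops):
        for name, param in sorted(op.get("params", {}).items()):
            if param.get("type") in DIMENSIONAL_TYPES and not param.get("unit"):
                findings.append((i, "implicit-unit", name))
        for role, ref in sorted(op.get("refs", {}).items()):
            if isinstance(ref, int) or _INDEX_SUFFIX.search(str(ref)):
                findings.append((i, "volatile-selector", role))
    return findings
```

Unitless parameters such as a pattern count pass cleanly because only dimensional types require a unit. Iterating in sorted order keeps the findings stable between runs, which makes the output suitable for CI baselines.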

Migration patterns and workflows unlocked

Most teams cannot stop the world to rewrite everything. Migration should be incremental and value-driven. Start by transpiling high-value macros into the IR using heuristics for naming, intent extraction, and constraint inference. Where full translation is risky, employ a wrap-and-extend approach: encapsulate native scripts behind adapters, then gradually replace segments with portable operations validated by diff and fuzzer pipelines. Along the way, you unlock workflows that were previously out of reach or too expensive to maintain across tools. Cross-CAD batch property extraction and BOM generation become a single pipeline with semantic enrichment and consistent formats. Parameter studies and MDO loops run identically across different CAD backends, enabling apples-to-apples comparisons and elastic scaling. Design linting and standards enforcement happen once and are applied everywhere. MBD/GD&T propagation and verification can traverse multiple hosts without losing PMI fidelity. Variant generation for sales configurators ceases to be vendor-locked, improving resilience and negotiating power. In each case, portability lowers friction and increases confidence, turning automation into a cross-organization asset rather than a stack of siloed, brittle scripts.

- Transpile, then wrap-and-extend to derisk adoption while capturing quick wins.

- Unify BOM and metadata extraction with one IR-driven pipeline.

- Scale optimization studies and configurators without vendor constraints.

Governance, versioning, and extensibility

For a standard to endure, governance must be neutral and transparent. A consortium comprising vendors, ISVs, integrators, and open-source stakeholders should steward the spec, the golden test corpus, and the conformance program. Versioning with feature gates allows hosts to roll out support incrementally while users target stable LTS branches for enterprise deployments. Extensions provide a path for innovation: vendors can propose new verbs or selectors as experimental features, backed by tests and documentation, without forking the core. Successful extensions graduate into the standard; others remain vendor-specific but declared, so scripts can detect and adapt. Clear deprecation policies protect users from surprises, and compatibility matrices document which host versions meet which conformance levels. With this approach, the ecosystem evolves without fragmenting. Enterprises gain predictability and leverage; vendors gain a larger automation market and less friction integrating with partners; the community gains a shared vocabulary and toolchain that rewards quality and openness. In short, governance transforms technical alignment into durable collaboration and compounding value.

- Neutral stewardship with transparent conformance and tests.

- Feature gates and LTS branches for predictable enterprise adoption.

- Documented extension paths that encourage upstreaming.

Conclusion

From possibility to practice: a pragmatic path to portable CAD automation

Achieving portability in CAD automation is a matter of precision and discipline. It hinges on a sharp feature ontology, a **deterministic operation IR**, stable intent-aware selectors, and sandboxed runtimes with permissioned packaging. Start where the value is immediate: reading models, querying metadata, and creating or modifying common features. As coverage deepens and sketch, constraint, and assembly semantics converge under the standard, the payoff increases—CI pipelines become cross-CAD, optimization loops scale elastically, and governance gains real teeth. Vendors benefit by tapping a larger ecosystem with lower integration friction and fewer bespoke requests; users gain resilience, compliance, and dramatically reduced maintenance. The practical playbook is clear. Adopt adapters to bridge current tools. Record and normalize actions to IR instead of hand-porting everything. Enforce portability with linters, fuzzers, and golden-model tests wired into CI. Grow coverage under a vendor-neutral governance model that rewards conformance and makes innovation safe to adopt. Do this, and automation stops being a fragile per-tool convenience. It becomes a robust, auditable, and secure platform capability that outlives any single vendor, accelerates engineering cycles, and turns design intent into a first-class, portable asset for your organization.

- Precise ontology + deterministic IR + stable selectors + secure runtimes.

- Early wins in read/query and feature-level automation; deeper gains as semantics converge.

- Vendors gain ecosystem leverage; users gain resilience and compliance.

- Adapters, record/normalize, lint/test, and vendor-neutral governance form the pragmatic path.
