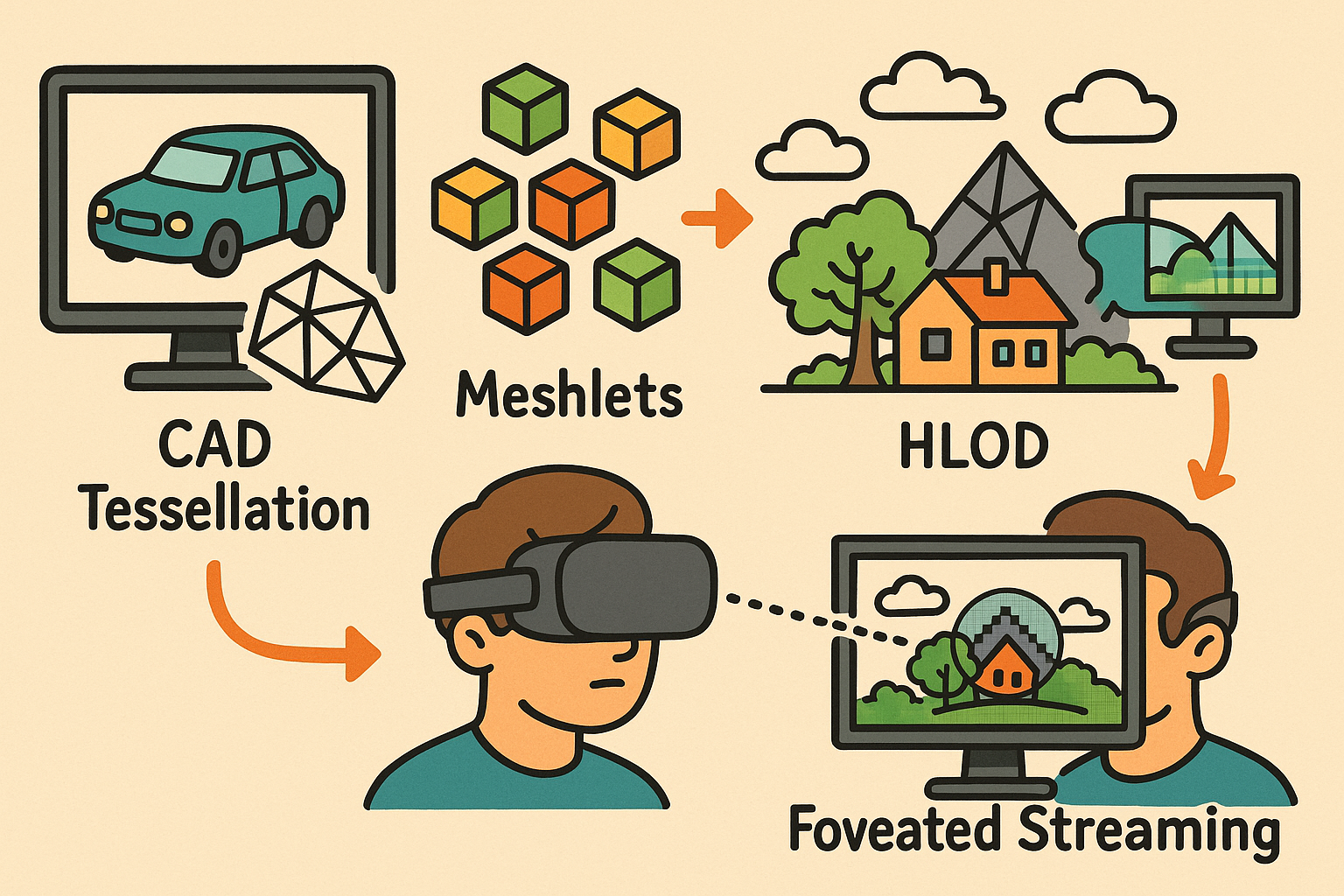

Perceptual LOD Pipeline for Real-Time and VR: CAD Tessellation, Meshlets, HLOD, and Foveated Streaming

November 21, 2025 13 min read

Context and Goals

The most ambitious real-time and VR design experiences now hinge on a careful, end-to-end system for Level of Detail (LOD). The goal is deceptively simple: render exactly what matters to human perception within tight frame-time and streaming budgets, and nothing more. The means are multidisciplinary—spanning CAD-aware tessellation, perceptual metrics, material baking, virtualization of geometry, compression, automated pipelines, and runtime policies that adaptively trade fidelity for responsiveness. In practice, the challenge is rarely one killer bottleneck but the compounding of many small inefficiencies across geometry, shading, scene management, and I/O. This article organizes the problem using concrete budgets and perceptual constraints; surveys geometric and material simplification with safeguards; lays out a scalable pipeline built on open standards; and closes with a pragmatic playbook you can apply to large assemblies, highly reflective PBR assets, and collaborative VR reviews. Expect specific heuristics, measurable targets, and implementation notes intended for teams who already instrument their engines and are comfortable with meshlets, bindless, and virtual texturing. The emphasis is on **perceptually grounded, semantic-aware** decisions: reserve the budget for silhouettes, specular coherence, and interaction affordances; aggressively simplify outside the fovea and beyond task-relevant distances; and validate the outcome with automated visual metrics tied to frame-time telemetry.

Performance and Perception Constraints in Real-Time and VR

Frame-time budgets and VR latency

Real-time budgets are unforgiving. On desktop displays, 60 Hz allows roughly 16.7 ms per frame; high-end visualization at 120 Hz halves that to 8.3 ms. In VR, the constraint tightens further: 90 Hz leaves ~11.1 ms per frame, and 120/144 Hz leaves ~8.3/6.9 ms. That single budget must cover both eyes, under strict **motion-to-photon latency** requirements. Stereo rendering can double several costs: draw submission, culling passes, and portions of shading if single-pass stereo isn’t in play. What remains must also leave headroom for asynchronous timewarp/spacewarp (ATW/ASW), which demand predictable GPU availability to reproject the latest head pose. Failure to reserve this headroom leads to late or stale reprojected frames, perceived as smear or judder. Practical budgets allocate slices such as 1–2 ms CPU submission, 1–2 ms culling/compute (meshlet/cluster), 3–5 ms base shading, 1–2 ms shadows, <1 ms transparencies, and ~0.5 ms post/FX, then retain 1–2 ms of slack for ATW. Be wary of transparency sorting spikes and streaming stalls that occasionally exceed budget; rare spikes still break comfort. ASW can mask frame shortfalls but introduces warping artifacts on fast motion or parallax-rich scenes; treat it as a fallback, not a target. Prioritize determinism: schedule heavier passes (shadow/resolves) earlier to protect ATW, and use GPU timing markers to verify that the tail of the frame stays predictable.

- Prefer single-pass stereo techniques where possible; audit view-dependent branches for divergence.

- Use GPU timing queries per pass and per eye; visualize instantaneous and rolling averages.

- Plan a soft ceiling at ~85–90% of the frame budget to maintain ATW slack.
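The budget arithmetic above can be sketched in a few lines. This is a minimal illustration, not an engine API: the function names, the 87.5% default ceiling (the midpoint of the ~85–90% range), and the choice to treat pass timings as strictly serial are all assumptions made for the example.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per displayed frame, in milliseconds."""
    return 1000.0 / refresh_hz


def soft_ceiling_ms(refresh_hz: float, utilization: float = 0.875) -> float:
    """Planned ceiling that leaves slack for reprojection (ATW).

    The 0.875 default is an assumed midpoint of the ~85-90% guideline.
    """
    return frame_budget_ms(refresh_hz) * utilization


def check_pass_budget(pass_ms: dict, refresh_hz: float, utilization: float = 0.875):
    """Return (total_ms, ceiling_ms, fits) for per-pass timings in ms.

    Passes are summed as if they ran back to back, which is a
    simplification: real frames overlap CPU and GPU work.
    """
    total = sum(pass_ms.values())
    ceiling = soft_ceiling_ms(refresh_hz, utilization)
    return total, ceiling, total <= ceiling
```

Feeding measured per-pass timings into `check_pass_budget` for each target refresh rate shows quickly whether a slice allocation that fits at 90 Hz still fits at 120 Hz.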

Perceptual priorities for LOD

Human vision is foveated: acuity peaks where gaze lands and drops steeply in the periphery. That enables aggressive simplification outside the fovea—polycount decimation, MIP biasing, fewer BRDF lobes—provided that high-salience cues remain coherent. The cues that most betray simplification are silhouettes, specular highlights, and shading continuity across curved surfaces. Preserving **normals and curvature** on silhouettes keeps outlines stable; keeping specular flow continuous avoids “sparkle” and “swim” that are glaring in VR. Foveal detail should prioritize task-critical affordances: edges used for snapping, datums and measurement references, labels and AR overlays, fastener heads the user manipulates, and manipulators themselves. In contrast, high-frequency surface detail (knurls, micro-bevels, threads) rarely drives shape perception; bake it. The sensitivity also depends on interaction tempo: when the user is moving or rotating an assembly, temporal stability dominates raw spatial detail. That argues for reducing BRDF complexity during motion and restoring it when the view stabilizes. The bottom line is a **perceptual budget**: allocate triangles and shading instructions to what drives recognition and task performance; demote everything else gracefully, especially outside the fovea.

- Protect silhouettes with higher tessellation and tighter normal fidelity than interior faces.

- Maintain specular coherence: match tangent spaces and normal map MIP transitions.

- Elevate fidelity around grab/snapping edges, buttons, labels, and measurement datums.

Workload constituents and metrics that matter

Performance derives from four intertwined constituents. Geometry: triangle throughput, index/vertex fetch locality, cache-friendly **meshlet** partitioning, and early/cluster culling. Shading: BRDF complexity (clearcoat, subsurface, anisotropy), normal map density, overdraw, and transparency sorting. Scene management: draw-call count, state switches (materials/pipelines), skinning/animation, and LOD selection/culling cost. Memory and I/O: texture residency, streaming stalls, and compression/decompression overhead. The metrics that convert these into actionable LOD thresholds are primarily screen-space. Use **screen-space error (SSE)** for geometric deviation, normal and silhouette deviation for shading cues, texels-per-pixel for material density, and scene-level budgets like draw-call caps and shader instruction counts. Evaluate per-eye in stereo; the same object may meet SSE on one eye but not the other depending on parallax and gaze. Complement SSE with projective solid angle for tiny objects to avoid over-investing in negligible screen area. Keep a VRAM budget per scene and per LOD tier; enforce residency of foveal materials and colliders before decorative shells. Continuous telemetry in your editor/runtime should plot metric distributions against GPU time, making it obvious when your shader LODs or HLOD cutoffs are too conservative.

- Geometry: SSE thresholds per distance band; silhouette deviation for silhouette-facing clusters.

- Materials: target texel density; manage MIP bias and anisotropy needs by view angle.

- Scene-level: draw-call budget per pass; cap shader instruction count for far LODs.

- I/O: VRAM footprint per LOD; streaming queue depth; decompression time (KTX2/BasisU).
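As a rough sketch of the two geometric metrics, the functions below project a world-space deviation to pixels for a symmetric perspective camera and compute the solid angle of a bounding sphere. The function names are illustrative, and using straight-line eye-to-object distance instead of view-space depth is a simplifying assumption.

```python
import math


def screen_space_error_px(geometric_error, distance, viewport_height_px, fov_y_rad):
    """Project a world-space deviation to pixels (symmetric perspective camera)."""
    if distance <= 0.0:
        return math.inf
    pixels_per_unit_at_unit_distance = viewport_height_px / (2.0 * math.tan(fov_y_rad / 2.0))
    return geometric_error * pixels_per_unit_at_unit_distance / distance


def sphere_solid_angle_sr(radius, distance):
    """Solid angle (steradians) subtended by a bounding sphere."""
    if distance <= radius:
        # Viewer on or inside the sphere: treat it as filling the whole view.
        return 4.0 * math.pi
    return 2.0 * math.pi * (1.0 - math.sqrt(1.0 - (radius / distance) ** 2))


def per_eye_sse(geometric_error, object_pos, eye_positions, viewport_height_px, fov_y_rad):
    """Evaluate SSE for each eye and return the worst (largest) value."""
    return max(
        screen_space_error_px(
            geometric_error, math.dist(object_pos, eye), viewport_height_px, fov_y_rad
        )
        for eye in eye_positions
    )
```

Taking the worst case across eyes is one conservative policy; an engine could equally select LOD per eye if its renderer can draw different levels in each view.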

Techniques for Geometric and Material Simplification

CAD-to-mesh strategies

Effective LOD starts at tessellation. CAD imports benefit from feature-aware tessellation that tightens sampling near small radii, fillets, and sharp blends, while loosening on planar and near-planar regions. Segment NURBS/splines with heuristics that preserve G1/G2 continuity; this stabilizes shading and avoids faceting across smooth transitions. Preserve silhouette edges as constraints so decimation can operate safely elsewhere. After initial tessellation, retopology or remeshing with field-aligned quads/triangles improves UV packing, normal baking, and deformation if parts are animated. Curvature-driven sampling equalizes shading error: denser where principal curvature changes; sparser on flats. When preparing for mesh shaders, partition into **meshlet-friendly chunking** (e.g., 64–128 triangles per cluster) with spatial locality to maximize culling efficiency and cache reuse. Plan material boundaries early; discontinuities in UVs or tangents become creases that are expensive to repair later. For assemblies, normalize units and part orientations, deduplicate identical parts, and exploit instancing; this alone can cut draw calls dramatically before any decimation.

- Constrain tessellation along silhouette curves and tight fillets; relax on planar plates.

- Use curvature-aware remeshing to bound normal deviation for smoother shading.

- Partition into meshlets with compact bounding volumes to improve cluster culling.
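One common way to make tessellation curvature-aware is a chordal (sagitta) tolerance: for a circular arc of radius r and allowed deviation ε, the largest angular step is θ = 2·acos(1 − ε/r). The sketch below assumes locally circular curvature, and its function names are invented for illustration. It shows why a fixed tolerance yields short edges on tight fillets and long edges on near-planar regions.

```python
import math


def max_step_angle(radius, tolerance):
    """Largest angular step (radians) keeping chord deviation <= tolerance."""
    ratio = max(-1.0, 1.0 - tolerance / radius)  # clamp when tolerance >= 2 * radius
    return 2.0 * math.acos(ratio)


def max_edge_length(radius, tolerance):
    """Chord length corresponding to the largest allowed angular step."""
    return 2.0 * radius * math.sin(max_step_angle(radius, tolerance) / 2.0)


def segments_for_arc(radius, sweep_rad, tolerance):
    """Number of straight segments needed to cover an arc within tolerance."""
    return max(1, math.ceil(sweep_rad / max_step_angle(radius, tolerance)))
```

With a tolerance of 0.01 units, a fillet of radius 1 gets edges about 0.28 units long, while a radius of 100 allows edges roughly ten times longer, so the triangle budget concentrates where curvature is high.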

Decimation methods and safeguards

Quadric Error Metrics (QEM) is the proven baseline for decimation, but it needs constraints. Flag hard edges, creases, and boundaries to prevent collapses that destroy design intent. Introduce angle- and curvature-based vetoes so collapses that exceed normal or curvature deviation limits are rejected. Preserve UV, vertex color, skin weights, and other attributes; weight the error metric to penalize attribute drift in proportion to their visual impact. Maintain separate error budgets for interior faces versus silhouettes; the latter deserve tighter thresholds. For runtime smoothness, adopt **continuous LOD** or progressive meshes for near/far transitions, or implement discrete chains with hysteresis and temporal cross-fade to avoid popping. For massive assemblies, lean on **Hierarchical LOD (HLOD)**: cluster distant groups into proxies (merged meshes or simplified impostors) guided by spatial partitions (BVH, k-means in world space) plus semantic grouping (BOM, sub-assemblies). Generate physics-friendly proxies concurrently so colliders align with visual LOD. Track provenance so that high-fidelity parts can be swapped in on demand without losing metadata or anchors.

- QEM with hard-edge constraints and per-attribute fidelity weights (UV/normal preservation).

- Collapse vetoes based on angle/curvature; tighter thresholds at silhouettes.

- Discrete LOD chains with hysteresis and dithered/cross-fade transitions to mask pops.

- HLOD clustering for scalability; bake proxies and colliders at each cluster level.
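To make the QEM-plus-vetoes idea concrete, here is a small pure-Python sketch of the plane quadric, the vertex error, and a collapse cost that rejects hard edges and excessive normal rotation. It illustrates the general technique only; the 15-degree default limit, the parameter names, and the single before/after normal pair are assumptions, and a production decimator would check every affected face and weight attributes as well.

```python
import math


def plane_quadric(normal, point):
    """4x4 quadric K = p p^T for the plane through `point` with `normal`."""
    length = math.sqrt(sum(c * c for c in normal))
    a, b, c = (x / length for x in normal)
    d = -(a * point[0] + b * point[1] + c * point[2])
    p = (a, b, c, d)
    return [[p[i] * p[j] for j in range(4)] for i in range(4)]


def add_quadrics(q1, q2):
    """Element-wise sum; accumulated quadrics measure error to all planes."""
    return [[q1[i][j] + q2[i][j] for j in range(4)] for i in range(4)]


def quadric_error(q, position):
    """Sum of squared distances v^T Q v with v = (x, y, z, 1)."""
    v = (position[0], position[1], position[2], 1.0)
    return sum(v[i] * q[i][j] * v[j] for i in range(4) for j in range(4))


def collapse_cost(q_a, q_b, target, normal_before, normal_after,
                  max_normal_deg=15.0, is_hard_edge=False):
    """Return the collapse cost, or None when the collapse is vetoed."""
    if is_hard_edge:
        return None  # flagged creases and boundaries are never collapsed
    dot = sum(x * y for x, y in zip(normal_before, normal_after))
    norm = math.sqrt(sum(x * x for x in normal_before)) * math.sqrt(
        sum(y * y for y in normal_after))
    angle = math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))
    if angle > max_normal_deg:
        return None  # normal deviation veto
    return quadric_error(add_quadrics(q_a, q_b), target)
```

Silhouette-facing clusters could simply call `collapse_cost` with a smaller `max_normal_deg`, which is one way to realize the tighter silhouette budget described above.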

Representation alternatives

Beyond classic triangle decimation, alternative representations stretch performance. For far LODs, image-based impostors with depth/normal atlases preserve parallax and lighting cues at trivial draw cost. Voxel grids or signed distance fields (SDFs) are compact proxies for collision, visibility tests, and coarse shadows. Recent engines exploit virtualized geometry (Nanite-like) and mesh shaders to stream and cull micro-triangles or meshlets, pushing billions of apparent triangles by performing fine-grained, view-dependent visibility. Be careful: while this dramatically reduces the artist time spent on LOD creation, it doesn’t eliminate shading and material costs, nor I/O pressure; you still need good atlasing and compression. Emerging **neural assets** (billboards enhanced with learned features, 3D Gaussian splats, or coarse NeRFs) offer excellent far-field richness. In VR, however, they carry caveats: view-dependent radiance can conflict with stereo consistency, reprojection can shimmer, and motion parallax under head movement can misalign unless trained with multi-view constraints. Treat neural proxies as accelerators for backgrounds and instant previews, while reserving CAD-derived meshes for near-field, interactive geometry.

- Impostors with depth/normal atlases for distant clusters; update sparingly under camera motion.

- Voxel/SDF proxies for physics and occlusion; cheap to stream and query.

- Virtualized geometry or meshlet pipelines for fine-grained culling at scale.

- Neural far LODs with VR-aware training; avoid for interaction-critical objects.
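As a small illustration of why signed distance proxies are cheap to query, the sketch below evaluates the standard signed distance to an axis-aligned box centered at the origin and uses it for a sphere overlap test. The box-at-origin setup and the function names are assumptions for the example; a real proxy would sample a baked distance volume rather than an analytic shape.

```python
import math


def sdf_box(point, half_extents):
    """Signed distance from `point` to an origin-centered axis-aligned box.

    Negative inside, zero on the surface, positive outside.
    """
    q = [abs(p) - h for p, h in zip(point, half_extents)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
    inside = min(max(q), 0.0)
    return outside + inside


def sphere_hits_box(center, radius, half_extents):
    """A sphere overlaps the box when the distance at its center is <= radius."""
    return sdf_box(center, half_extents) <= radius
```

The same single-lookup pattern serves coarse occlusion and proximity queries, which is what makes SDF proxies attractive for far LOD tiers.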

Material and texture simplification

Shading often dominates time in modern PBR pipelines. Bake high-frequency geometric detail into compact textures: normal maps, AO, curvature, and cavity. Consolidate textures: convert UDIMs to atlases where feasible, and use trim sheets to handle repetitive hardware and panels. Pair atlasing with **virtual texturing** (SVT/VT) to stream only visible tiles; budget for preload around the fovea to avoid MIP “popping.” For formats, KTX2 with BasisU supercompression yields GPU-friendly streaming and transcoding; for geometry, use Draco or meshopt to reduce network and disk sizes, with meshopt’s reordering passes also improving vertex cache locality. Adopt shader LOD: offer BRDF variants keyed by distance or foveation, simplify clearcoat and subsurface scattering, clamp normal map MIP to avoid sparkling, and reduce sample counts (e.g., anisotropy taps) in the periphery. Audit overdraw: alpha-tested foliage or perforated panels may deserve impostors or masked simplifications at distance. Crucially, align material LOD thresholds with geometry LOD to avoid perceptual mismatches where a coarse mesh still drives a heavy shader, or vice versa.

- Bake microsurface features; reserve high-frequency normals for near and foveal regions.

- Atlas and trim-sheet repeated elements; stream via VT to control residency.

- Use KTX2/BasisU for textures; Draco/meshopt for geometry and improved cache usage.

- Shader LODs: simplified clearcoat/subsurface; MIP-aware normal detail; fewer BRDF lobes at distance.
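The link between texel density and MIP selection can be sketched with a simple model: a surface facing the camera, isotropic sampling, and a MIP level equal to log2 of texels per pixel. Real samplers use screen-space derivatives and anisotropy, so treat the functions and parameter names below as illustrative assumptions.

```python
import math


def texels_per_pixel(texels_per_meter, distance_m, viewport_height_px, fov_y_rad):
    """Texels covered by one pixel for a camera-facing surface at `distance_m`."""
    meters_per_pixel = 2.0 * distance_m * math.tan(fov_y_rad / 2.0) / viewport_height_px
    return texels_per_meter * meters_per_pixel


def mip_level(texels_per_meter, distance_m, viewport_height_px, fov_y_rad,
              bias=0.0, max_mip=12):
    """Approximate MIP level: log2 of texels per pixel plus a bias, clamped."""
    ratio = texels_per_pixel(texels_per_meter, distance_m, viewport_height_px, fov_y_rad)
    if ratio <= 0.0:
        return 0.0
    return min(float(max_mip), max(0.0, math.log2(ratio) + bias))
```

Under this model every quadrupling of distance drops two MIP levels, which gives a quick way to estimate which levels need to be resident for each distance band, and where a positive bias in the periphery would save bandwidth.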

Semantic rules for design data

Not all parts deserve equal treatment. Define semantic rules that reflect how components are perceived and used. Fasteners, small fittings, and distant scaffolding typically move to impostors quickly; the perceptual risk is low, and the savings are large. Cables, hoses, and harnesses can be simplified to swept ribbons or low-spline rigging; their cross-section detail is rarely visible. Threads, knurls, and micro-chamfers should be baked into normals and AO, but preserve thread lead and pitch in near-field if the task involves alignment or inspection. Suppress hidden or irrelevant features using feature recognition—tiny holes and fillets below a screen-space threshold, internal ribs and bosses that never appear externally, or overlaps that create redundant faces. Conversely, preserve critical tolerances and datums crucial for snapping, measurement, and assembly verification; tag them so decimators know to hold edges and maintain consistent surface normals. By encoding these policies in machine-readable form, you scale simplification without losing the **design intent** that underpins interaction quality.

- Part-class policies: fasteners → impostors; cables → ribbons; knurls/threads → baked detail.

- Feature recognition to suppress tiny or internal geometry while keeping load-bearing cues.

- Mark and protect datums, tolerances, and snapping edges from decimation.
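One way to encode such policies in machine-readable form is a lookup table of per-class recipes plus a set of tags that force edge protection. The class names, recipe fields, and tag strings below are hypothetical placeholders chosen to mirror the rules above, not an established schema.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LodRecipe:
    far_representation: str  # what the part becomes at distance
    bake_detail: bool        # bake fine geometry into normal/AO maps
    protect_edges: bool      # forbid decimation of tagged edges


# Hypothetical part-class policies mirroring the rules in the text.
RECIPES = {
    "fastener": LodRecipe("impostor", bake_detail=True, protect_edges=False),
    "cable": LodRecipe("ribbon", bake_detail=False, protect_edges=False),
    "threaded": LodRecipe("decimated_mesh", bake_detail=True, protect_edges=False),
}
DEFAULT_RECIPE = LodRecipe("decimated_mesh", bake_detail=False, protect_edges=False)

# Tags that mark interaction-critical features (assumed names).
PROTECTED_TAGS = frozenset({"datum", "tolerance", "snapping_edge"})


def recipe_for(part_class, tags=()):
    """Pick the class recipe, then force edge protection for critical tags."""
    base = RECIPES.get(part_class, DEFAULT_RECIPE)
    if PROTECTED_TAGS & set(tags):
        return replace(base, protect_edges=True)
    return base
```

Keeping the table as plain data means it can live in version control next to the assets and be reviewed like any other configuration change.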

Designing a Scalable LOD Pipeline—Standards, Automation, and Runtime Policies

Asset ingestion and standardization

Ingestion is where chaos becomes data. Normalize content through **USD or glTF**, mapping CAD assemblies and PMI/MBD annotations into a scene-graph with clear semantics. Preserve instancing and hierarchy so identical parts deduplicate across assemblies; pack transforms and material bindings into clean layers. Encode units explicitly and record provenance (source file, revision, tolerance settings). As assets arrive, apply validation gates: watertightness checks for printable parts, manifoldness where required, detection of self-intersections and inverted normals, and UV sanity (islands, overlaps, density). Where PMI defines datums or tolerances, materialize them as anchors and metadata so downstream decimators and colliders respect them. Replace duplicate meshes with references; collapse trivial hierarchy where it bloats draw calls. For glTF, align with extensions like MSFT_lod for LOD chains and KHR_materials_variants where variants map to PLM options; for USD, use variants and payloads for scalable composition. The output of ingestion should be a clean, instanced, unit-annotated scene where every node can be subjected to rule-driven LOD recipes.

- Use USD layers or glTF scenes to encode assemblies with instancing preserved.

- Run manifold and normal-orientation tests; fix or quarantine failures.

- Record PMI/MBD and units; treat datums as first-class runtime anchors.
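A validation gate for watertightness and winding consistency can be approximated by counting edges in an indexed triangle list. The sketch below assumes triangles are given as vertex-index triples with duplicates already welded, and the report keys are invented for illustration. It does not detect self-intersections, which need a spatial test.

```python
from collections import Counter


def edge_report(triangles):
    """Classify edges of an indexed triangle mesh.

    In a closed, consistently oriented manifold every undirected edge is
    shared by exactly two triangles and every directed edge appears once.
    """
    undirected = Counter()
    directed = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            directed[(u, v)] += 1
            undirected[(min(u, v), max(u, v))] += 1
    boundary = sum(1 for n in undirected.values() if n == 1)
    non_manifold = sum(1 for n in undirected.values() if n > 2)
    inconsistent = sum(1 for n in directed.values() if n > 1)
    return {
        "boundary_edges": boundary,
        "non_manifold_edges": non_manifold,
        "inconsistent_winding_edges": inconsistent,
        "watertight": boundary == 0 and non_manifold == 0,
    }
```

A pipeline might quarantine any part whose report is not watertight when the part class requires it, and route parts with winding inconsistencies to an automatic normal-repair step.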

Automated processing at scale

At production scale, LOD must be automated and reproducible. Batch decimation should run on GPU or across a compute cluster, with out-of-core strategies to process massive assemblies that exceed local memory. Build HLOD hierarchies by combining spatial partitioning (BVH, voxel grids, or k-means in world coordinates) with semantic grouping (BOM sub-assemblies) to retain meaningful structure. Implement rule-driven pipelines: per-category LOD recipes, attribute-preservation policies, and exception lists that encode the semantic rules you authored earlier. Compression and atlasing steps should be deterministic so identical inputs yield byte-identical outputs; this makes delta updates trivial. Integrate CI/CD for assets: lint builds to catch violations (too many draw calls, missing MIPs), and run visual regression tests using SSIM/LPIPS and edge-map comparisons between the reference and the simplified version. Store per-pass timing and memory metrics alongside artifacts. By treating assets like code with tests and budgets, you scale confidently while avoiding drift. If something regresses—silhouette deviation spikes, shader instruction counts creep—you catch it in CI rather than on a device at 90 Hz.

- Distribute decimation and baking jobs; use out-of-core processing for huge assemblies.

- Combine spatial and semantic clustering for robust HLOD trees.

- CI for assets: linting, SSIM/LPIPS baselines, edge maps, per-pass timing and memory budgets.
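A lint step of this kind might look like the sketch below. The asset and budget dictionaries use made-up field names, and the SSIM score is assumed to have been computed by a separate comparison stage; only the hashing call (`hashlib.sha256`) is a real library API.

```python
import hashlib


def content_hash(payload: bytes) -> str:
    """Stable fingerprint; byte-identical outputs hash identically."""
    return hashlib.sha256(payload).hexdigest()


def expected_mip_count(width: int, height: int) -> int:
    """Full MIP chain length: floor(log2(max dimension)) + 1."""
    return max(width, height).bit_length()


def lint_asset(asset: dict, budgets: dict) -> list:
    """Return a list of violation labels; an empty list means the asset passes."""
    violations = []
    if asset["draw_calls"] > budgets["max_draw_calls"]:
        violations.append("draw_calls")
    if asset["shader_instructions"] > budgets["max_shader_instructions"]:
        violations.append("shader_instructions")
    for tex in asset["textures"]:
        if tex["mip_count"] < expected_mip_count(tex["width"], tex["height"]):
            violations.append("missing_mips:" + tex["name"])
    if asset["ssim_vs_reference"] < budgets["min_ssim"]:
        violations.append("ssim")
    return violations
```

Comparing `content_hash` values between two builds of the same input is a cheap determinism check, and a non-empty result from `lint_asset` can fail the CI job before the asset ever reaches a headset.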

Runtime selection and streaming

At runtime, LOD decisions must be cheap, stable, and stereo-aware. Select geometry LOD via **SSE and projective solid angle**, evaluated per eye. With eye tracking, integrate foveated LOD: raise thresholds in the periphery for both geometry and materials while maintaining conservative bands near the fovea. Synchronize with foveated shading so material and geometry fidelity co-vary. Your culling stack should combine frustum and occlusion tests with meshlet/cluster culling for fine-grained savings; adopt **bindless resources** to minimize state changes when LODs swap. Stream progressively: prioritize colliders and interaction targets, then visible shells, and finally fine detail. Virtual texturing should prefetch foveal tiles and the near future of head motion to suppress “late” MIP resolves. Maintain hysteresis in LOD thresholds to avoid oscillation as objects hover around cutoffs. For transparent objects, weigh the sorting cost; coarse LODs might switch to dithered or hashed alpha to minimize overdraw and sorting passes at range. Continuously log per-object and per-pass GPU time; let the runtime gently adjust thresholds to hold a target frame-time under varying scene complexity.

- Per-eye SSE and solid-angle tests; foveated LOD with gaze-driven rings.

- Frustum + occlusion + meshlet culling; bindless to cut state churn when swapping LODs.

- Progressive streaming: colliders → visible shell → fine detail; VT prefetch for fovea.

- Hysteresis and dithered transitions to tame popping and specular swim.
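The selection logic can be sketched as two small functions: a gaze-dependent multiplier on the SSE threshold, and a hysteresis-stabilized choice among precomputed per-LOD errors. The linear falloff, the 5-degree foveal radius, the 4x cap, and the 15% hysteresis band are placeholder assumptions, not values from a perceptual model.

```python
def foveated_scale(eccentricity_deg, fovea_deg=5.0, slope_per_deg=0.1, max_scale=4.0):
    """Threshold multiplier: 1 inside the fovea, growing linearly outside it."""
    excess = max(0.0, eccentricity_deg - fovea_deg)
    return min(max_scale, 1.0 + slope_per_deg * excess)


def select_lod(sse_per_lod, threshold_px, current=None, hysteresis=0.15):
    """Choose an LOD index (0 = finest) from projected errors in pixels.

    `sse_per_lod` must be non-decreasing. With a `current` level, the
    choice only changes once the error leaves a band around the threshold.
    """
    def coarsest_within(limit):
        best = 0
        for index, sse in enumerate(sse_per_lod):
            if sse <= limit:
                best = index
        return best

    if current is None:
        return coarsest_within(threshold_px)
    if sse_per_lod[current] > threshold_px * (1.0 + hysteresis):
        return coarsest_within(threshold_px)  # clearly too coarse: refine
    # Coarsen only when a coarser level fits with margin; otherwise stay.
    return max(current, coarsest_within(threshold_px * (1.0 - hysteresis)))
```

In use, the per-object threshold would be the base SSE threshold multiplied by `foveated_scale` for the object's angular distance from the gaze point, evaluated per eye, and the returned index would feed a cross-fade or dither rather than an instant swap.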

Interactivity, physics, and collaboration

High-fidelity interaction requires colliders that track visual LODs without blowing budgets. Generate simplified colliders (convex hulls, VHACD decompositions, or SDF volumes) aligned to each LOD tier. For snapping and measurement, maintain **design-intent anchors**: datums, feature IDs, and PMI that tools can reference in AR/VR markup. Where PLM or variant management is in play, represent options via USD variants or glTF KHR_materials_variants, with MSFT_lod carrying the LOD chain; this keeps interactive switching cheap and traceable. Track provenance and traceability from CAD import to final runtime assets for auditability. Be mindful of pitfalls. Texture pop occurs when material VT residency lags geometry swaps; prefetch near anticipated gaze or interaction. Specular swim often follows mismatched normal MIPs or tangent spaces across LODs; ensure consistent bake settings and normal map MIP bias. Transparency sort cost can explode with complex assemblies; mitigate via per-LOD shader variants (hashed alpha at distance), reduced overdraw, and limited depth layering. Use temporal cross-fades or dithering in transition bands to smooth silhouette shifts. Collaboration scenarios benefit from a shared metric vocabulary so remote reviewers see comparable fidelity given similar budgets.

- Colliders per LOD: convex hulls, VHACD, or SDFs; align to visual proxies.

- Keep datums/PMI live for snapping and measurement; attach to scene nodes.

- Variants via USD/glTF constructs; preserve provenance and asset lineage.

- Mitigations: prefetch VT tiles, align normal MIP chains, hashed alpha for far transparencies.

Conclusion

The core trade-off

Real-time and VR push a relentless trade-off: preserve perceptual fidelity while respecting strict frame-time budgets, with VR tightening the vise through **motion-to-photon** constraints and stereo duplication of work. Success depends on allocating resources to what perception values—silhouettes, specular coherence, and task affordances—while downgrading or baking everything else, especially outside the fovea. The fulcrum is a robust LOD system that is semantic-aware (recognizes part classes and features), standards-centric (USD/glTF for portability), and automated (rule-driven decimation, HLOD, compression, CI validation). Instrumentation turns opinions into evidence; SSE distributions, texture residency, shader instruction counts, and per-pass GPU times provide the levers to tune thresholds without guesswork. Comfortable VR emerges when the entire chain cooperates: CAD tessellation that respects curvature, material baking aligned with atlases and VT, streaming that prioritizes colliders and interactables, and runtime LOD policies that are per-eye, foveated, and hysteresis-stabilized. Treat anything not measured as a risk to comfort; treat anything not automated as a scale limiter.

- Perception-first budgets; silhouette/specular continuity above raw triangle count.

- Semantic simplification rules; bake fine detail aggressively.

- Automation and telemetry; CI ensures regressions are caught early.

A practical playbook

Begin by setting numeric budgets: target frame-time per eye, draw-call limits per pass, and VRAM ceilings for each LOD tier. Choose SSE and normal deviation thresholds for near/mid/far ranges, with tighter bands for silhouettes. Establish per-part-class LOD recipes: fasteners to impostors early; cables to ribbons; knurls/threads baked; critical datums protected. Build material policy: target texel densities, MIP bias by distance and angle, simplified BRDF variants at range. Adopt meshlet-friendly partitioning and cluster culling. Construct HLOD trees using both spatial and semantic clustering. Standardize on USD or glTF; preserve instancing; compress via KTX2/BasisU for textures and Draco/meshopt for geometry. Integrate CI/CD: lint assets for missing MIPs or excessive permutations; run SSIM/LPIPS and edge maps against references; track shader instruction counts. At runtime, evaluate LOD per eye via SSE/solid angle; couple with foveated shading; stream progressively with VT prefetch around gaze. Instrument telemetry and refine thresholds continuously.

- Define budgets and perceptual priorities; codify SSE/normal deviation bands.

- Per-part-class recipes with aggressive baking; protect interaction-critical features.

- HLOD + meshlets + compression in USD/glTF for portability and scale.

- CI with visual/metric regression; runtime telemetry closes the loop.

Emerging directions

Eye-tracked **foveated LOD** will become the default loop: geometry, materials, and sampling adapt continuously to gaze with perceptual models informed by contrast sensitivity and temporal masking. Expect neural scene proxies to handle far-field clutter and instant previews—3D Gaussian splats and coarse NeRFs hybridized with CAD meshes for near-field accuracy—with stereo-consistency and reprojection-aware training to reduce VR artifacts. Standardized LOD metadata is likely to land in USD and glTF so tools can exchange not only meshes but policy: SSE thresholds, protected features, normal/silhouette budgets, and streaming priority classes. Hardware is also pushing toward mesh/task shaders and triangle culling in the fixed function path, making fine-grained visibility a given and artist-authored LODs less burdensome. Finally, VT and sampler innovations will address specular stability by co-managing normal MIP chains and anisotropy with view-dependent policies. The theme across these trends is convergence: perceptual science, CAD semantics, and GPU architecture co-designing a pipeline where fidelity is allocated precisely where the user benefits.

- Perceptual foveated loops integrating SSE, BRDF LOD, and VT prefetch.

- Neural far LODs blended with CAD near meshes; stereo-consistent training.

- USD/glTF LOD metadata for cross-tool continuity and digital thread integrity.

Bottom line

Treat LOD as an end-to-end system rather than an afterthought. The system spans CAD tessellation choices, semantic rules that encode design intent, decimation and baking with explicit metrics, compression and atlasing for efficient I/O, HLOD and meshlets for scalability, and runtime policies that make **stereo-aware, foveated** decisions with hysteresis and cross-fades. Anchor every decision to perceptual priority and measured budgets, and let automation and CI keep thousands of assets aligned with the targets. When this discipline is in place, you can deliver high-fidelity, comfortable VR and real-time experiences at scale—where silhouettes hold, speculars behave, interactions feel crisp, and the frame-time graph stays flat. The reward is not merely hitting 90/120/144 Hz; it’s the creative agility to load bigger assemblies, iterate materials faster, and collaborate across locations without the usual performance anxiety. In other words, fidelity where it matters, efficiency where it doesn’t, and a pipeline that knows the difference.

- Perception-led, metric-driven, automated LOD yields comfort and scale.

- Invest in standards (USD/glTF), compression, and meshlet/HLOD architectures.

- Close the loop with telemetry; keep headroom for ATW; ship with confidence.

Also in Design News

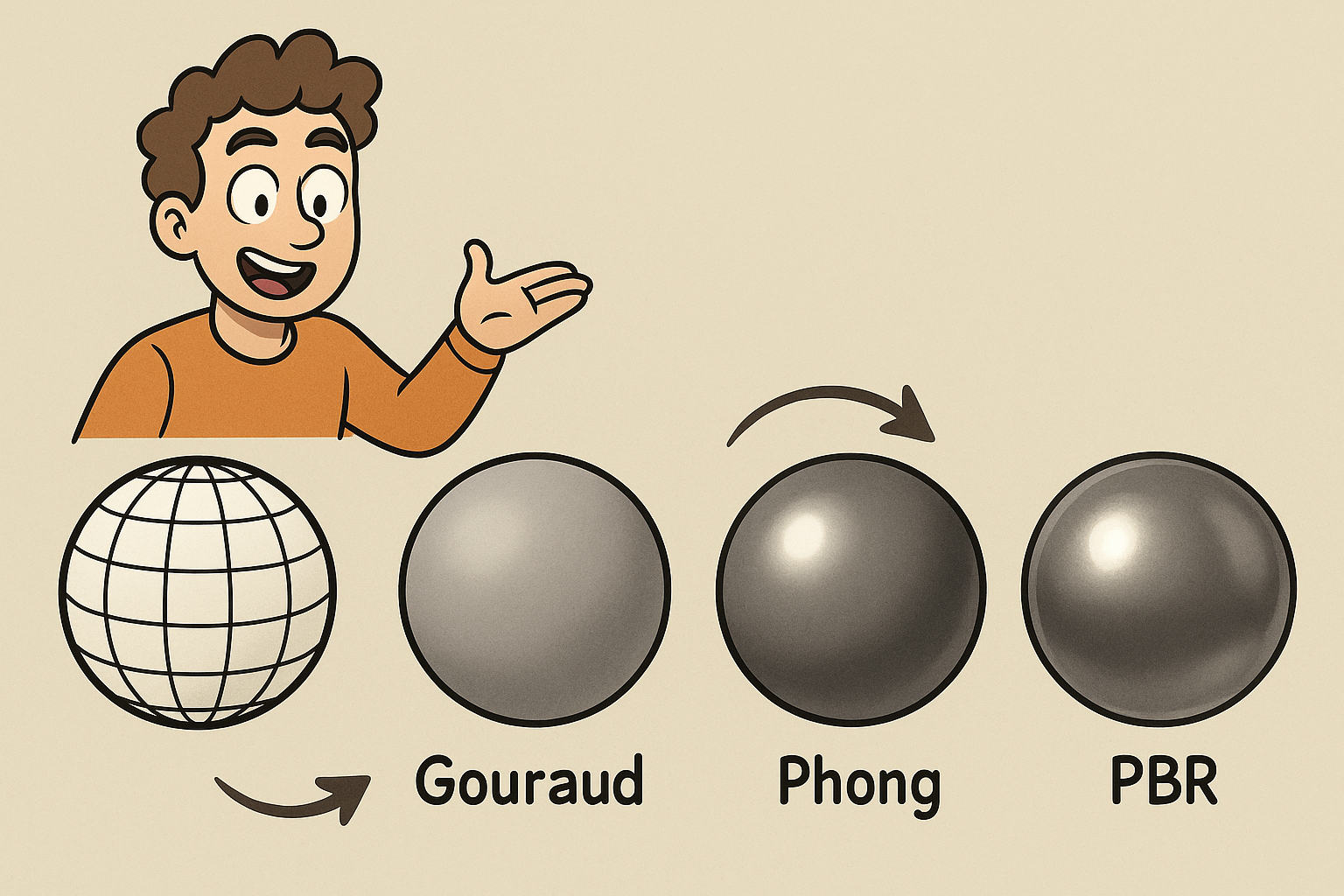

Design Software History: From Wireframe to PBR: How Gouraud, Phong, and the Microfacet Turn Changed CAD Shading

November 21, 2025 15 min read

Read More

Cinema 4D Tip: Disk-Cache Particle Simulations for Consistent, Faster Renders

November 21, 2025 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …