
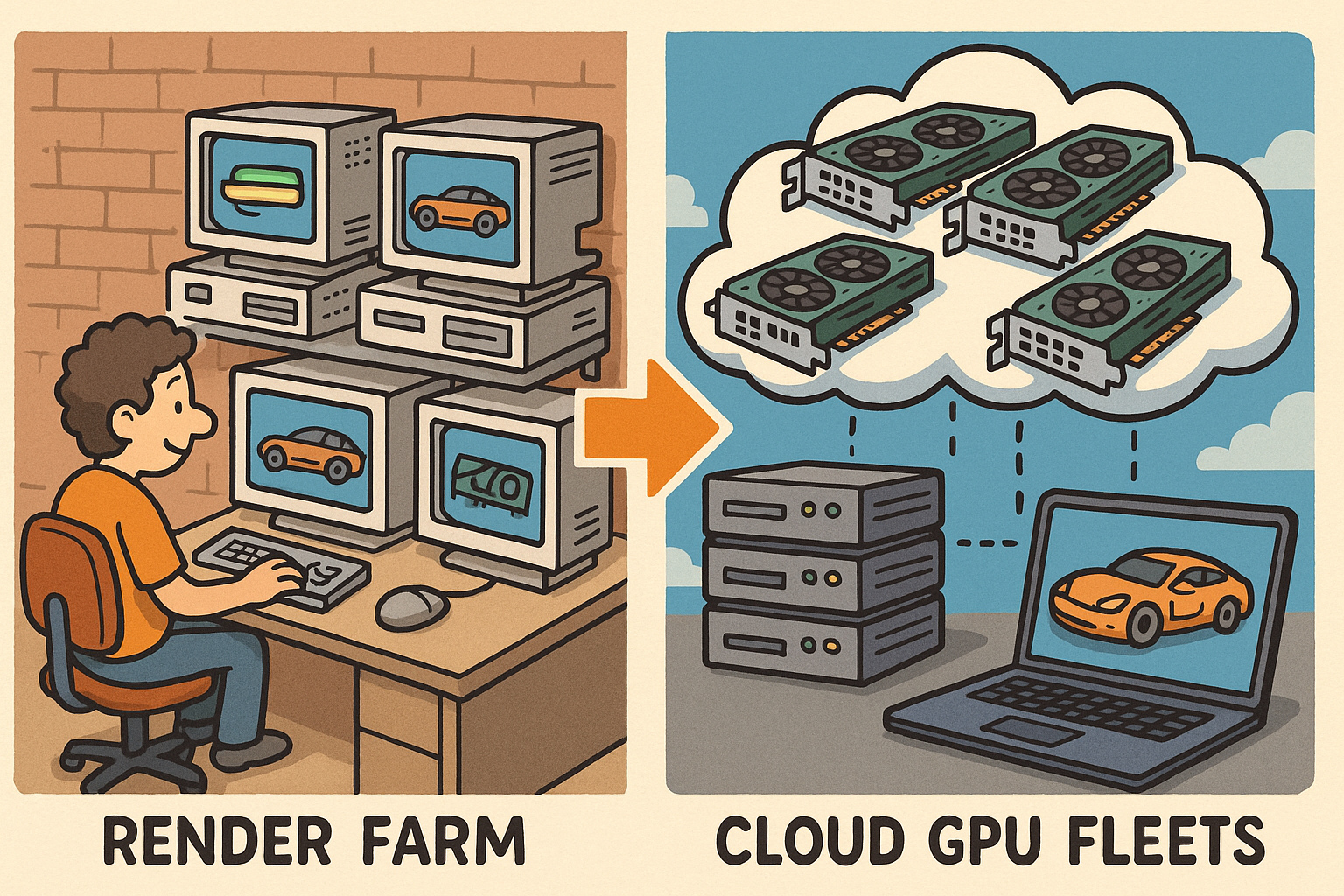

Design Software History: Render Farms to Cloud GPU Fleets: History and Architecture of Scaled Product Visualization

October 30, 2025 12 min read

From the first racks of UNIX workstations to today’s containerized GPU fleets, render farms have quietly shaped how products are imagined, reviewed, and sold. What began as a pragmatic workaround for slow desktop CPUs grew into a strategic enterprise capability that merges design, engineering, and marketing workflows. Across three decades, advances in schedulers, licensing, materials standards, and compute architectures redefined what the industry expects from “photoreal,” as automotive and consumer-product organizations aligned digital assets with manufacturing truth and brand storytelling. The arc is unmistakable: distributed computing turned the art of image-making into an industrial process, and the pressure to deliver consistent, color-accurate visuals at scale pushed standards forward. If you’ve ever configured a car online, approved packaging colors from a tablet, or watched a product launch reel, you’ve benefited from this evolution. The following narrative traces the inflection points—from early hardware queues to today’s hybrid CPU/GPU pipelines—through the tools, teams, and decisions that made scaled product visualization both routine and remarkable.

Early render farms and the birth of product visualization (1990s–mid-2000s)

Hardware and queues

In the 1990s, render farms were less data center and more back room: rows of SGI IRIX, Sun Solaris, and HP-UX workstations wired together to survive overnight render pushes. The operational glue was early scheduling middleware like Portable Batch System (PBS), Sun Grid Engine, and Platform LSF, which exposed job queues, resource slots, and primitive priorities. Digital content creation back-ends hooked into these schedulers: Alias|Wavefront's renderers, and later Maya, dispatched frames through built-in batch modes, while Discreet's Backburner gave 3ds Max users a Windows-friendly manager/server topology. Memory was scarce, so admins bin-packed jobs by RAM and I/O, carefully staging textures on NFS mounts to avoid saturating 100 Mbps Ethernet. What this era lacked in automation it made up for in ingenuity: shell scripts to throttle threads, cranky license daemons to nurse, and per-host “do not use” hours to accommodate daytime CAD workloads. Even then, teams learned the enduring lesson that would define the next three decades: separating interactive work from batch rendering increases throughput and lowers risk.

- UNIX-first culture: IRIX and Solaris standardized OpenGL pipelines and predictable memory models for batch rendering.

- Early schedulers: PBS/SGE/LSF provided queueing, but little in the way of dependency graphs or license-aware dispatch.

- DCC back-ends: Alias|Wavefront/Maya command-line renderers and 3ds Max Backburner bridged artists’ scenes to cluster jobs.

- Storage constraints: Texture replication and NFS contention were constant operational bottlenecks.
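The RAM bin-packing that admins once did by hand can be sketched as first-fit decreasing placement: sort jobs by memory, largest first, and drop each onto the first host with enough free RAM. This is a minimal illustration, assuming each job's peak memory is known up front and ignoring I/O and CPU slots; the host and shot names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    ram_gb: float
    jobs: list[tuple[str, float]] = field(default_factory=list)

    def free_gb(self) -> float:
        # RAM not yet claimed by jobs already placed on this host
        return self.ram_gb - sum(mem for _, mem in self.jobs)


def pack_by_ram(jobs: dict[str, float], hosts: list[Host]) -> list[str]:
    """First-fit decreasing: place the largest jobs first, each on the first host with room.

    `jobs` maps job name -> estimated peak RAM in GB.
    Returns the names of jobs that fit nowhere and must wait for the next pass.
    """
    unplaced: list[str] = []
    for name, mem in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        for host in hosts:
            if host.free_gb() >= mem:
                host.jobs.append((name, mem))
                break
        else:  # no host had enough free RAM
            unplaced.append(name)
    return unplaced


if __name__ == "__main__":
    farm = [Host("node-a", 4.0), Host("node-b", 2.0)]  # hypothetical hosts
    waiting = pack_by_ram(
        {"car_front": 3.0, "car_side": 1.5, "wheel_detail": 1.0, "interior": 2.5}, farm
    )
    for host in farm:
        print(host.name, [name for name, _ in host.jobs])
    print("queued:", waiting)
```

Placing big jobs first leaves small ones to fill the gaps, which is why the 1.0 GB wheel detail still lands on the first host while the 2.5 GB interior waits for a later pass.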

Foundational renderers and people

Rendering culture crystallized around a few landmark engines. Pixar RenderMan set expectations for distributed rendering quality and stability, driven by the work of Rob Cook, Loren Carpenter, and Pat Hanrahan. Its REYES-era micropolygon pipeline prioritized smooth displacement and motion blur, and studios learned to decouple scene translation from renderer execution to scale across hosts. In parallel, mental images under Rolf Herken delivered mental ray, which became the de facto CPU photoreal renderer embedded in many CAD/DCC pipelines. The engine’s programmable shaders and photon mapping produced glossy product shots at a time when ray tracing was still exotic on commodity hardware. By the early 2000s, Chaos Group (now Chaos), led by Peter Mitev and Vlado Koylazov, had introduced V-Ray, which quickly earned a reputation for a pragmatic balance of speed, flicker control, and GI quality that fit commercial timetables. Each renderer implicitly taught teams how to scale: RenderMan through shading networks and RIB archives, mental ray via distributed bucket rendering and shader libraries, V-Ray through adjustable irradiance cache strategies. The people behind these engines set the idioms: shaders as modular programs, geometry as streams, and the farm as a production instrument rather than an afterthought.

- RenderMan: distributed RIB workflows normalized scene pre-processing and instancing at scale.

- mental ray: photon mapping and programmable shaders brought CAD-integrated realism to CPUs.

- V-Ray: practical GI caching and flexible sampling catered to deadline-driven product imagery.

Product viz pioneers

Automotive and industrial design were among the first to treat rendering as an enterprise function. Automakers like BMW, Audi, and Ford adopted large offline pipelines for both marketing and design reviews, pairing Class-A surfacing tools such as ICEM Surf with high-fidelity visualization ecosystems like RTT DeltaGen (later Dassault Systèmes 3DEXCITE). These organizations needed images that matched manufacturing reality, from paint flake orientation, anisotropic brushed metals, and accurate glass refraction to precise coverage of trim, wheel, and regional variants. Meanwhile, industrial design powerhouses including IDEO and frog design delivered massive shot lists for catalogs and bids, using mental ray or V-Ray to translate CAD intent into crisp marketing visuals. Constraints drove decisions: compute-bound overnight shots, curated camera packs, and light rigs designed once and then reused across campaigns to ensure consistency. As teams grew, render coordinators emerged as a distinct role to track asset versions, render passes, and delivery windows. In practice, the combination of enterprise PLM discipline and DCC artistry forged the product visualization playbook: clean CAD, robust material definitions, predictable render layers, and a farm that could run for days without supervision.

- Automotive fidelity: paint BRDFs, HDR-based studio lighting, and strict color targets for marketing.

- Design firms: large shot grids standardized on studio rigs to control mood and comparability.

- Workflow glue: surface data from ICEM Surf flowed into DeltaGen for consistent “digital twin” visuals.

Pain points that shaped farms

The constraints of the era left fingerprints on farm design that persist today. First, long CPU-bound renders encouraged aggressive per-shot manual tuning: per-angle irradiance caches, shadow map sizes tailored per asset, and memory trims to avoid swap storms. Second, small memory footprints on many hosts meant geometry had to be instanced, tiled, and streamed—driving a culture of scene modularity. Third, materials were brittle across tools: translating CAD appearance properties into DCC shaders often broke energy conservation or texture coordinate assumptions, forcing hand-tuned conversions with unpredictable results. Finally, the lack of robust dependency graphs in early schedulers made multi-pass pipelines (beauty, shadow, reflection, matte) risky; a failed pre-pass could waste overnight cycles. These friction points motivated investments that would define the next decade.

- Long CPU renders led to GI caching techniques and night-only farm policies.

- Limited memory enforced instancing discipline, geometry partitioning, and texture atlases.

- Brittle material conversion between CAD and DCC demanded custom translators and shader templates.

- Primitive orchestration increased failure blast radius and complicated re-queues.

Orchestration, cloud, and the GPU turn (mid-2000s–2018)

Render management matures

As farms grew beyond a few dozen nodes, the need for vendor-neutral control layers became clear. Deadline, from Chris Bond’s Thinkbox Software (later acquired by AWS), delivered a cross-renderer job engine with dependency graphs, pools, and plugin extensibility. PipelineFX Qube! under Richard Lewis emphasized studio-grade support and analytics, while Pixar Tractor aligned naturally with RenderMan-centric shops and brought predictable, robust dispatching to mixed environments. Crucially, these managers embraced license-aware scheduling, querying FlexLM or RLM license servers to avoid oversubscription. Per-core tokens and usage metering emerged as first-class operations concerns, informing queue priorities and budget forecasts. Dashboards made farm behavior legible to producers, who started to treat compute as a line item with ROI targets. Plugin ecosystems matured, allowing custom submitters for Maya, 3ds Max, and CAD tools, as well as auto-retries, post-render hooks, and archive policies. The result was cultural as much as technical: render wranglers, pipeline TDs, and IT worked from a shared operations language that enabled scale without chaos.

- Dependency graphs: guaranteed pre-pass completion before heavy frames.

- License-aware dispatch: synchronized tokens with farm capacity to curb idle stalls.

- Visibility: dashboards for throughput, failure rates, and per-asset costs.

- Extensibility: per-renderer submitters and post-tasks standardized farm behavior.
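To make dependency-aware, license-capped dispatch concrete, here is a minimal sketch built on Python's standard `graphlib` module. It is not the Deadline, Qube!, or Tractor API; the task names are hypothetical, and it assumes one license token per running task and that every task in a wave finishes before the next wave starts.

```python
from __future__ import annotations

from graphlib import TopologicalSorter


def dispatch_waves(deps: dict[str, set[str]], license_tokens: int) -> list[list[str]]:
    """Group tasks into waves that respect dependencies and a renderer-license cap.

    `deps` maps each task to the tasks that must finish before it can start.
    Each wave runs at most `license_tokens` tasks (one license per task), and
    every task in a wave is assumed to finish before the next wave is dispatched.
    """
    if license_tokens < 1:
        raise ValueError("need at least one license token")
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    pending: list[str] = []  # ready tasks still waiting for a free license
    waves: list[list[str]] = []
    while sorter.is_active():
        pending.extend(sorted(sorter.get_ready()))  # sorted only for a stable order
        wave, pending = pending[:license_tokens], pending[license_tokens:]
        waves.append(wave)
        sorter.done(*wave)  # finishing these may unlock downstream tasks
    return waves


if __name__ == "__main__":
    deps = {
        "gi_prepass": set(),
        "beauty_f1": {"gi_prepass"},
        "beauty_f2": {"gi_prepass"},
        "beauty_f3": {"gi_prepass"},
        "comp": {"beauty_f1", "beauty_f2", "beauty_f3"},
    }
    for i, wave in enumerate(dispatch_waves(deps, license_tokens=2)):
        print(i, wave)
```

With two tokens, the GI pre-pass runs alone, the beauty frames run two at a time, and the composite waits until all three frames are done, so a failed pre-pass never burns licenses on frames that would have to be thrown away.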

Renderer shifts

On the renderer front, a quiet revolution replaced tuning-heavy engines with predictable path tracers. Arnold, created by Marcos Fajardo at Solid Angle and later acquired by Autodesk, displaced mental ray in many pipelines by emphasizing simple, consistent sampling over precomputed caches. Fewer dials meant fewer surprises at scale. In parallel, the GPU acceleration wave gathered momentum. After acquiring mental images in 2007, NVIDIA advanced Iray as a physically based engine that mapped naturally onto CUDA, appealing to CAD users with interactive photoreal previews. OctaneRender, originally developed by Refractive Software and later acquired by OTOY (led by Jules Urbach), pioneered pure-GPU path tracing for independent artists and visualization teams. Redshift, developed by Rob Slater, Panagiotis Zompolas, and Nicolas Burtnyk and later acquired by Maxon, delivered a biased but production-proven GPU renderer with out-of-core memory, unlocking dense scenes on modest GPUs. The CPU/GPU split diversified strategies: CPU for massive memory footprints and determinism; GPU for speed, interactivity, and energy efficiency. Pipelines learned to mix engines based on output targets, with farm schedulers increasingly aware of device types and VRAM.

- Arnold: path tracing with unified sampling reduced artist guesswork.

- Iray: CUDA-forward CAD photoreal preview and offline consistency.

- Octane and Redshift: GPU-first speed with maturing production features.
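As a rough illustration of VRAM-aware routing, the sketch below sends a job to the smallest GPU tier whose usable memory fits the scene and falls back to CPU nodes otherwise. The tier sizes, the 85% headroom factor, and the footprint estimate are assumptions for illustration; renderers with out-of-core support, such as Redshift, can relax the fit test, which this sketch ignores.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RenderJob:
    name: str
    est_scene_gb: float  # estimated in-core footprint: geometry + textures + buffers
    gpu_capable: bool    # True if the renderer and scene features have a GPU path


def choose_pool(job: RenderJob, vram_tiers_gb: list[int], headroom: float = 0.85) -> str:
    """Route to the smallest GPU tier whose usable VRAM fits the scene, else to CPU.

    `headroom` reserves part of each card for the driver, framebuffers, and
    denoiser; 0.85 is an illustrative guess, not a vendor recommendation.
    """
    if job.gpu_capable:
        for tier in sorted(vram_tiers_gb):
            if job.est_scene_gb <= tier * headroom:
                return f"gpu-{tier}gb"
    return "cpu"  # large-memory or CPU-only scenes go to CPU nodes


if __name__ == "__main__":
    tiers = [12, 24, 48]  # hypothetical VRAM tiers in the farm
    for job in [RenderJob("bottle", 8.0, True), RenderJob("sedan", 18.0, True),
                RenderJob("factory", 60.0, True), RenderJob("legacy_shader", 2.0, False)]:
        print(job.name, "->", choose_pool(job, tiers))
```

Choosing the smallest tier that fits keeps the large-VRAM cards free for the dense scenes that actually need them.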

Cloud elasticity

The second major shift was economic: elastic compute. AWS EC2 (notably Spot instances), Microsoft Azure, and Google Cloud let studios burst during crunch windows without capex. Managed services like Autodesk 360 Rendering and Chaos Cloud simplified access further, abstracting queue setup and license wrangling. Studio-grade SaaS emerged with Conductor (spun out of Atomic Fiction and later acquired by CoreWeave), GridMarkets, and Fox Renderfarm, offering turnkey pipelines with predictable cost controls, data isolation, and plug-ins for major DCCs. These platforms operationalized security (VPCs, KMS encryption), cache warmers for textures, and autoscaling against job graphs. For producers, cost shifted from fixed to usage-based, which improved forecasting but required new habits: tagging jobs per client, setting budget caps, and choosing instance families per renderer. Elasticity also exposed architectural gaps—inefficient scene I/O or chatty license servers turned into billable drag—nudging teams toward better packaging, delta uploads, and license proxies. By 2018, “on-prem plus burst” became a default assumption across visualization-heavy businesses.

- Burst capacity: meeting deadlines without permanent hardware.

- Managed queues: offloading scheduler and license-server maintenance.

- Security and cost controls: VPC isolation, budget caps, and per-tenant metering.
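The "on-prem plus burst" decision reduces to simple arithmetic: how many node-hours the job needs, how many the local farm can supply before the deadline, and how much of the shortfall the budget covers. The sketch below is a simplified model under stated assumptions (uniform node speed, nodes rented for the whole window, no spin-up or transfer time); the rates and frame counts are hypothetical.

```python
import math


def burst_nodes(frames: int, minutes_per_frame: float, onprem_nodes: int,
                hours_left: float, cloud_rate_per_node_hour: float, budget: float) -> int:
    """Cloud nodes to rent so `frames` finish within `hours_left`, capped by `budget`.

    Simplifying assumptions: every node renders one frame at a time at the same
    speed on-prem and in the cloud, rented nodes run for the whole window, and
    spin-up, data transfer, and license costs are ignored.
    """
    if hours_left <= 0:
        raise ValueError("deadline already passed")
    node_hours_needed = frames * minutes_per_frame / 60
    shortfall = max(0.0, node_hours_needed - onprem_nodes * hours_left)
    wanted = math.ceil(shortfall / hours_left)  # nodes needed to close the gap
    affordable = math.floor(budget / (cloud_rate_per_node_hour * hours_left))
    return min(wanted, affordable)


if __name__ == "__main__":
    # 2,000 frames at 30 min each = 1,000 node-hours; 20 local nodes x 10 h = 200.
    print(burst_nodes(2000, 30, 20, 10, cloud_rate_per_node_hour=2.0, budget=1200))
    print(burst_nodes(2000, 30, 20, 10, cloud_rate_per_node_hour=2.0, budget=5000))
```

When the budget cap binds (60 affordable nodes against 80 wanted in the first call), the sketch silently under-provisions; a real producer-facing tool would surface that the deadline will slip rather than hide it.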

CAD/product viz angle

The mid-2010s cemented turnkey network rendering inside design-centric tools. KeyShot from Luxion (founded by Henrik and Claus Wann Jensen) made push-button network and queue rendering accessible to industrial designers, while V-Ray and Arnold added simplified distributed rendering modes that hid network complexity. Automotive visualization pipelines adopted Autodesk VRED clusters for design reviews and digital showrooms, leveraging VRED’s interactive ray tracing, and many toolchains embraced Iray-based workflows for consistent offline/interactive parity. The gap between CAD and pixels narrowed: direct CAD importers stabilized tessellation, render passes mapped to DCC compositing conventions, and neutral color pipelines reduced surprises when moving between design review and marketing imagery. In this period, the cultural distance between CAD, marketing, and IT shrank, as teams learned that job graphs, material libraries, and asset versioning were as important to product truth as tolerance stacks and BOMs.

- KeyShot Network Rendering: minimal setup for quick coverage of angles and variants.

- VRED clusters: standardized automotive review and VR sessions at studio fidelity.

- Iray-driven parity: interactive and offline renders matched closely for approvals.

Scaled product visualization pipelines: materials, assets, and configurators (2018–present)

Physically based assets at scale

Once orchestration matured, the bottleneck shifted to asset fidelity and consistency. The industry converged on PBR conventions influenced by Disney’s “principled” BRDF, codifying parameters like base color, metallic, roughness, and clearcoat in ways that survive renderer translation. Material standards such as X-Rite AxF, NVIDIA MDL, and MaterialX emerged to define appearance in a renderer-agnostic fashion, enabling shared libraries across product lines. High-quality capture (multi-angle scans, spectral measurements, and normal/height microstructure maps) entered production pipelines so that leather grain, paint flake, and fabric weave reproduce consistently across engines. For scene exchange, USD from Pixar became the backbone for complex assemblies and variant sets, while glTF handled lightweight, web-centric visualizations. The payoff is reuse at scale: a single canonical asset can drive design reviews, configurators, AR product pages, and hero marketing frames, with each platform reading the same appearance definition and geometry LODs.

- Disney-influenced BRDFs standardized artist controls with physically plausible defaults.

- AxF/MDL/MaterialX enabled consistent material behavior across renderers.

- USD structured assemblies, variants, and references; glTF delivered compact runtime assets.
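To show what "physically plausible defaults" means in practice, the sketch below derives normal-incidence reflectance (F0) and diffuse albedo from base color and metallic the way the glTF 2.0 metallic-roughness model does: F0 blends from a dielectric 0.04 toward the base color as metallic rises, and the diffuse lobe fades to black. It then applies Schlick's Fresnel approximation. The Disney BRDF, MDL, and MaterialX expose more parameters than this; treat it as a simplified illustration, not any standard's reference implementation.

```python
from __future__ import annotations

RGB = tuple[float, float, float]

DIELECTRIC_F0 = 0.04  # ~4% reflectance at normal incidence, the glTF 2.0 value for non-metals


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def specular_f0(base_color: RGB, metallic: float) -> RGB:
    """Normal-incidence reflectance: 0.04 gray when metallic=0, the base color when metallic=1."""
    return tuple(lerp(DIELECTRIC_F0, c, metallic) for c in base_color)


def diffuse_albedo(base_color: RGB, metallic: float) -> RGB:
    """Metals have no diffuse lobe, so diffuse fades to black as metallic approaches 1."""
    return tuple(c * (1.0 - metallic) for c in base_color)


def schlick_fresnel(f0: RGB, cos_theta: float) -> RGB:
    """Schlick's approximation F = F0 + (1 - F0) * (1 - cos_theta)^5."""
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


if __name__ == "__main__":
    red = (0.8, 0.1, 0.1)  # hypothetical paint base color
    print("plastic F0:", specular_f0(red, 0.0), "diffuse:", diffuse_albedo(red, 0.0))
    print("metal F0:  ", specular_f0(red, 1.0), "diffuse:", diffuse_albedo(red, 1.0))
    print("grazing:   ", schlick_fresnel(specular_f0(red, 0.0), cos_theta=0.05))
```

Because both renderers in a pipeline derive F0 and diffuse from the same two artist-facing numbers, a red plastic and a red anodized metal stay distinguishable, and consistent, whichever engine reads the asset.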

CAD-to-viz bridges

Bridging native CAD to scalable visualization became an engineering discipline. Dassault Systèmes 3DEXCITE (formerly RTT DeltaGen), Autodesk VRED/Arnold, Siemens Teamcenter with visualization modules, and PTC Windchill integrated pipelines where BOM/PLM changes propagate to render queues. Automated tessellation routines preserve curvature with feature-aware decimation and attribute transfer (e.g., paint codes, material slots), while variant tagging encodes market/trim logic directly in scene graphs. Mesh LODs and proxy hierarchies are baked to align with farm memory constraints and delivery targets, from 16K hero frames to web-delivered 3D. These bridges allow a part revision—say, a grille texture change—to ripple through hero shots, configurator thumbnails, and AR assets without manual rework. By treating visualization as an extension of PLM, enterprises achieve tighter governance: consistent naming, audit trails, and versioned materials, all of which scale farm throughput and reduce re-render waste.

- Automated tessellation: curvature-aware decimation and UV preservation for DCC readiness.

- Variant tagging: trim/region logic embedded in scene hierarchy for batch expansion.

- LOD baking: predictable memory/performance across hero, interactive, and mobile outputs.
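Variant tagging pays off when the farm expands the matrix programmatically. The sketch below takes the Cartesian product of option axes and drops combinations that a market rule forbids. The axis names, values, and the "blue paint only on the sport trim" rule are hypothetical, and a real pipeline would read them from USD variant sets or PLM data rather than a Python dict; this is plain Python, not the USD API.

```python
from __future__ import annotations

from itertools import product
from typing import Callable

Variant = dict[str, str]


def expand_variants(axes: dict[str, list[str]],
                    excluded: Callable[[Variant], bool]) -> list[Variant]:
    """Cartesian product of option axes, minus combinations a market rule forbids."""
    names = list(axes)
    result: list[Variant] = []
    for values in product(*(axes[n] for n in names)):
        variant = dict(zip(names, values))
        if not excluded(variant):
            result.append(variant)
    return result


def job_name(variant: Variant) -> str:
    # Deterministic naming (sorted keys) so a re-render overwrites the same output
    return "_".join(variant[k] for k in sorted(variant))


if __name__ == "__main__":
    axes = {"trim": ["base", "sport"], "paint": ["white", "red", "blue"], "region": ["EU", "US"]}
    jobs = expand_variants(axes, lambda v: v["trim"] == "base" and v["paint"] == "blue")
    print(len(jobs), "jobs")  # 2 x 3 x 2 = 12 combinations, minus 2 forbidden ones
    print([job_name(v) for v in jobs[:3]])
```

Keeping the rules next to the data means a new trim or paint code grows the job list automatically, which is how matrices reach tens of thousands of frames without hand-built shot lists.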

Real-time meets farms

Real-time engines crossed into product pipelines not as replacements for farms, but as amplifiers. Unreal Engine and Unity now power interactive configurators and immersive reviews, while farms handle the heavy lifting behind the scenes. For large catalogs, farms pre-bake lightmaps, generate tens of thousands of variant images, and compile shader libraries at scale for deployment. NVIDIA’s RTX/OptiX stack accelerated interactive ray tracing, and Omniverse introduced a USD-native hub where look-dev can happen interactively, with deferred farm-quality frames dispatched for campaigns. The pattern is hybrid: interactive sessions for decision speed, farm renders for guaranteed noise-free photorealism, especially at high resolutions and under strict color standards. Crucially, material standards like MaterialX and MDL reduce the translation penalty between real-time and offline engines, aligning gloss, Fresnel, and normal responses. The result is a continuum where stakeholders can move from a WebGL preview to a press-ready still without re-authoring assets.

- Interactive look-dev: real-time engines shorten review cycles for design and marketing.

- Deferred farm frames: high-res, denoiser-free or carefully denoised outputs for final media.

- Hybrid material graphs: MaterialX/MDL unify shading across real-time and offline paths.

Operations and delivery

At scale, the differentiators are operational. Farms run as containerized workers on Docker/Kubernetes, with node pools split by CPU/GPU and VRAM tiers. Asset distribution relies on CDNs and cache layers co-located with compute, minimizing cold starts for textures and HDRIs. Policy-based schedulers arbitrate between on-prem queues and cloud burst, respecting IP boundaries and cost ceilings. Quality gates move upstream: automated visual diffs compare new frames to baselines, flagging lighting or material regressions; parametric tolerance checks assert geometry and assembly integrity; and color-managed pipelines enforce device-independent fidelity with OCIO or equivalent. Observability spans logs, metrics, and traces, mapping render times to materials, cameras, and LODs for pinpoint tuning. By treating rendering like any other production microservice, teams achieve predictable SLAs—critical when marketing windows are immovable and variant matrices stretch into the tens of thousands.

- Kubernetes pools: per-queue GPU/CPU segregation aligns workloads with hardware.

- CDN-backed assets: global caches reduce misses and egress in hybrid clouds.

- Automated QA: visual diffs and color management protect brand-critical fidelity.
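A basic automated visual diff can be sketched as a per-pixel comparison against an approved baseline, flagging a regression when too many pixels shift by more than a tolerance in any channel. This minimal version assumes both frames are already decoded to the same size and color space (for example, after the same OCIO transform); the thresholds are illustrative guesses, and production systems often use perceptual metrics rather than raw channel differences.

```python
from __future__ import annotations

Pixel = tuple[float, float, float]
Image = list[list[Pixel]]  # rows of RGB values in [0, 1]


def visual_diff(baseline: Image, candidate: Image,
                pixel_tol: float = 0.02, max_changed_fraction: float = 0.001) -> dict:
    """Flag a regression when too many pixels moved by more than `pixel_tol` in any channel.

    Both images must already be decoded into the same color space at the same size.
    """
    if len(baseline) != len(candidate) or any(
            len(a) != len(b) for a, b in zip(baseline, candidate)):
        raise ValueError("image dimensions differ")
    total = changed = 0
    worst = 0.0
    for row_a, row_b in zip(baseline, candidate):
        for px_a, px_b in zip(row_a, row_b):
            delta = max(abs(a - b) for a, b in zip(px_a, px_b))  # largest channel shift
            worst = max(worst, delta)
            if delta > pixel_tol:
                changed += 1
            total += 1
    fraction = changed / total if total else 0.0
    return {
        "changed_fraction": fraction,
        "max_delta": worst,
        "regression": fraction > max_changed_fraction,
    }


if __name__ == "__main__":
    base = [[(0.5, 0.5, 0.5)] * 4 for _ in range(4)]
    shifted = [row[:] for row in base]
    shifted[2][1] = (0.6, 0.5, 0.5)  # one pixel drifts red, e.g. a material change
    print(visual_diff(base, shifted))
```

Reporting both the changed fraction and the worst delta helps separate a localized material swap from a global lighting or color-management shift.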

Conclusion: Why render farms still matter—and what’s next

Enduring value

Despite the march of real-time rendering, large farms remain the bedrock of product visualization for three reasons. First, guaranteed quality: consistent convergence, clean caustics, and strict color compliance at high resolutions are still easier to assure in controlled batch environments than on interactive rigs subject to frame time budgets. Second, massive variant coverage: marketing and e-commerce require exhaustive matrices of trims, colors, and regions, which farms expand reliably from a single USD source of truth. Third, deadline discipline: amplified by orchestration and cloud burst, farms deliver predictable throughput that aligns with launch calendars and print deadlines. Real-time tools are indispensable for decisions and engagement, but when the deliverable must be noise-free, color-accurate, and uniformly lit across thousands of SKUs, the farm’s deterministic pipeline wins. Moreover, the operational investments—materials standards, USD hierarchies, license-aware schedulers—compound over time, making each subsequent campaign faster, cheaper, and more consistent.

- Quality at scale: deterministic convergence and color management for critical assets.

- Variant expansion: programmatic coverage from canonical USD/MaterialX assets.

- Schedule reliability: orchestrators and cloud burst turn crunch into a controllable cost.

Trajectories to watch

The next wave will intertwine sustainability, interoperability, and AI-driven acceleration. Farms will adopt carbon-aware scheduling, steering workloads to renewables-backed regions and time-of-day windows, while per-watt optimization guides CPU/GPU selection and bin packing. Standards convergence will continue: MaterialX + USD + MDL will form a durable appearance graph that maps across raster, ray, and neural engines with minimal drift. Neural and differentiable rendering will shorten convergence, enable inverse material/lighting estimation, and synthesize product imagery from sparse inputs, with carefully bounded quality gates. On the delivery front, edge-streamed, photoreal configurators will hide heavy assets behind cloud GPUs, offering zero-download reviews that retain accurate gloss and parallax while guarding IP. Across all of this, the operational fabric—observability, reproducibility, and license governance—will decide who can adopt innovation without breaking SLAs. The farms that measure everything will learn fastest, spending fewer cycles to produce more convincing pixels.

- Carbon-aware orchestration: match queues to clean-energy windows and regions.

- Appearance graph standards: consistent shading across tools and engines.

- Neural acceleration: denoisers, inverse rendering, and learned importance sampling.

- Edge streaming: secure, zero-download experiences with cloud GPUs.
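Carbon-aware scheduling can be sketched as a search over regions and start times for the contiguous window with the lowest average grid carbon intensity that still meets the deadline. The region names and hourly forecasts below are hypothetical, and the sketch ignores price, capacity, data egress, and differences in hardware efficiency, all of which a real scheduler would weigh.

```python
from __future__ import annotations


def greenest_window(forecasts: dict[str, list[float]], duration_h: int,
                    deadline_h: int) -> tuple[str, int, float] | None:
    """Pick the region and start hour with the lowest average grid carbon intensity.

    `forecasts` maps region -> hourly carbon intensity in gCO2/kWh, index 0 = now.
    The job needs `duration_h` consecutive hours and must finish by `deadline_h`.
    Returns (region, start_hour, average_intensity), or None if nothing fits.
    """
    best: tuple[str, int, float] | None = None
    for region, hourly in forecasts.items():
        last_start = min(deadline_h, len(hourly)) - duration_h
        for start in range(last_start + 1):  # empty range if the job cannot fit
            avg = sum(hourly[start:start + duration_h]) / duration_h
            if best is None or avg < best[2]:
                best = (region, start, avg)
    return best


if __name__ == "__main__":
    forecasts = {
        "region-a": [400, 380, 200, 150, 300],  # e.g. solar ramps up mid-window
        "region-b": [250, 250, 250, 250, 250],  # flat, moderately clean grid
    }
    print(greenest_window(forecasts, duration_h=2, deadline_h=5))
    print(greenest_window(forecasts, duration_h=2, deadline_h=3))
```

A tighter deadline can flip the answer: with five hours of slack the job waits for region-a's clean window, while with three hours it runs immediately in region-b.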

Strategic takeaway

The winners will be those who blend orchestration, interoperability, and hybrid compute into a single operating model. That means robust schedulers—Deadline, Tractor, or Kubernetes-native controllers—that are license-aware, cloud-savvy, and observable. It means interoperable assets—USD for structure, PBR/MaterialX/MDL for appearance—so a change to a paint flake or stitching pattern propagates from design to web to print with zero manual retouch. And it means embracing hybrid CPU+GPU rendering: CPU nodes for memory-heavy hero shots and deterministic final frames; GPU nodes for iterative look-dev, denoised campaigns, and elastic bursts. When this tripod is in place, organizations deliver interactive decisions and campaign-grade images reliably, repeatably, and at scale. The farm no longer looks like a room full of machines—it looks like a disciplined, data-driven production system that turns product truth into compelling pixels on demand.

- Orchestrate: queue graphs, license awareness, and cloud burst as table stakes.

- Interoperate: USD + MaterialX/MDL libraries keep visuals consistent across platforms.

- Hybridize: allocate CPU/GPU intelligently by scene profile and deadline.

Also in Design News

Rhino 3D Tip: Create a Reusable Rhino Template for Units, Layers, and Documentation

October 30, 2025 2 min read

Read More

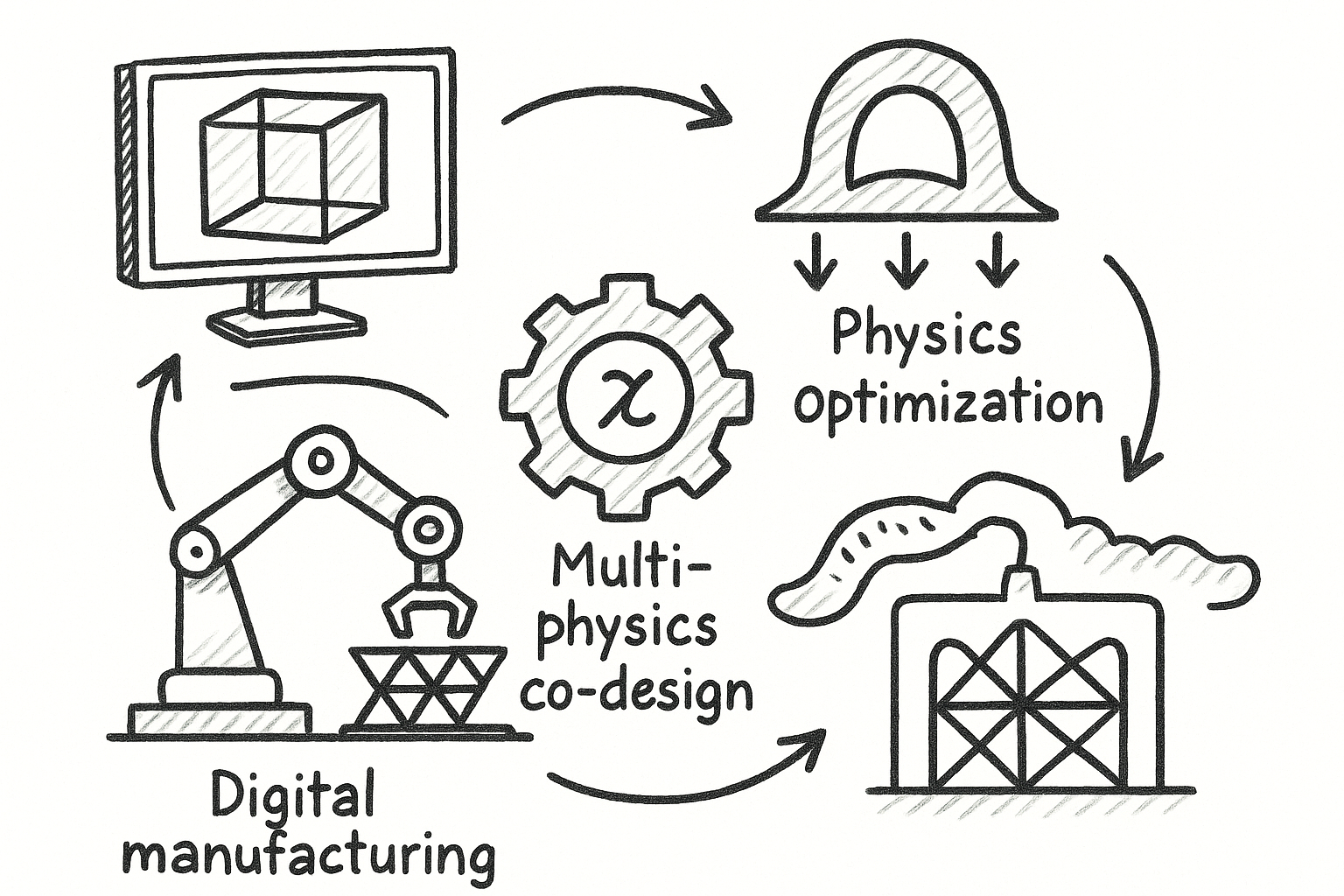

Differentiable Design Fabric: Rebuilding Geometry Kernels for Optimization, Multi-Physics Co-Design, and a Manufacturing-Aware Digital Thread

October 30, 2025 8 min read

Read More

5 Targeted Civil 3D Plug-Ins to Eliminate Annotation Churn, Enforce Standards, and Speed Sheet Production

October 30, 2025 7 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …