
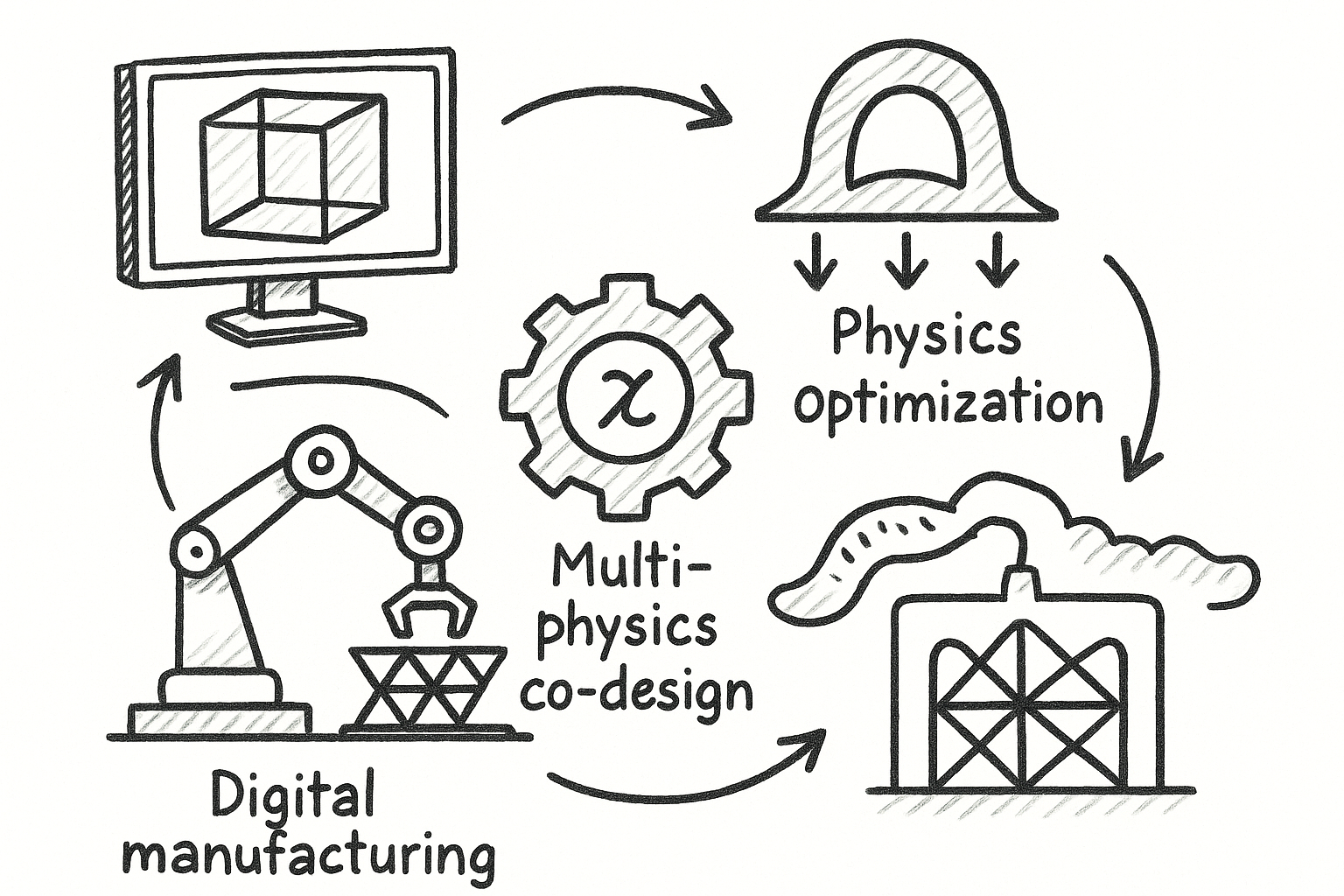

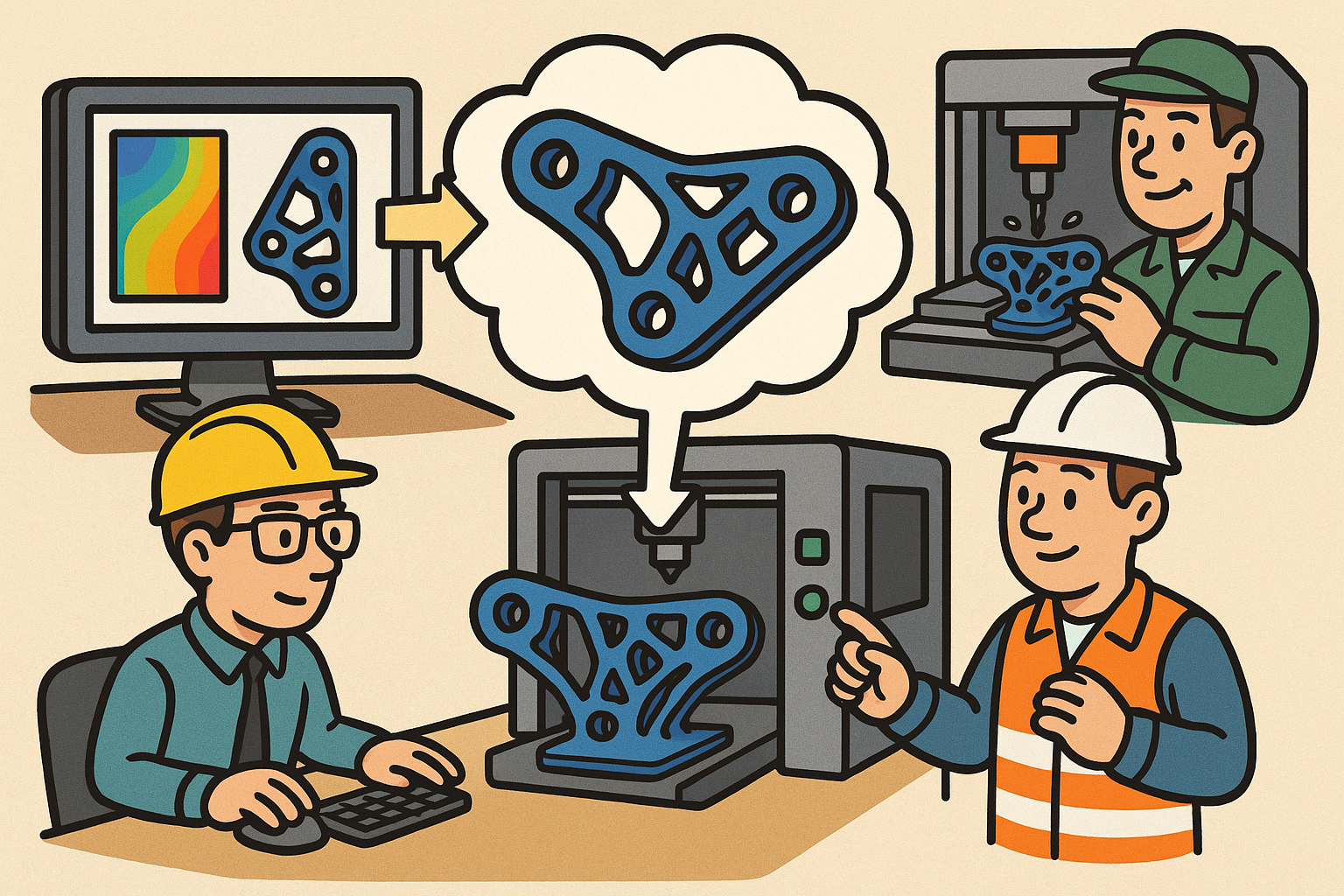

The next generation of design software will not be a stitched-together stack of separate tools. It will be a single, programmable fabric that connects generative intent, robust geometry, multi-physics optimization, and manufacturing constraints through an auditable, real-time **digital thread**. By making every step differentiable and verifiable, we collapse handoffs and turn manufacturability into a continuous design variable rather than a late-stage filter.

Rebuilding the geometry kernel for optimization and robustness

Traditional parametric CAD resists gradient-based design for reasons that are as cultural as they are mathematical. Feature trees and sketch constraints were built for editability by humans, not for smoothness under automated search. When a design variable moves, the model often jumps across discontinuities, stalls in ambiguous constraints, or crashes as regeneration fails. Optimization amplifies these issues because it demands coherent local derivatives and predictable topology transitions.

Why does legacy CAD fight gradients? Consider the fault lines:

- Discontinuous topology changes from feature suppression, reordering, and boundary events; underconstrained sketches that flip or fold across small perturbations.

- Non-differentiable operations such as hard Booleans, sharp fillets, and offsets built from tolerance-driven heuristics; tiny gaps or overlaps that create predicate ambiguity.

A geometry core that embraces optimization must represent shape in ways that admit calculus, proofs, and exact predicates. Implicit fields, such as signed distance functions and algebraic surfaces, naturally carry smoothness and support analytic or automatic differentiation. Volumetric splines and NURBS with C2 continuity can bridge to fabrication-grade surfaces while maintaining derivative quality. For correctness under floating-point noise, exact arithmetic for robust predicates and interval methods for guarantees provide the guardrails.
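As a small illustration of what an exact predicate looks like, the sketch below evaluates the classic 2D orientation test in rational arithmetic using Python's standard `fractions` module. The function name `orient2d_exact` is chosen here for illustration; a production kernel would more likely use adaptive-precision or filtered predicates for speed, so treat this as a minimal sketch of the idea rather than a recommended implementation.

```python
from fractions import Fraction

def orient2d_exact(a, b, c):
    """Sign of the signed area of triangle (a, b, c).

    Returns +1 for counter-clockwise, -1 for clockwise, 0 for collinear.
    Inputs may be ints, floats, or Fractions; floats are converted exactly,
    so the sign is never affected by rounding inside the determinant.
    """
    ax, ay = (Fraction(v) for v in a)
    bx, by = (Fraction(v) for v in b)
    cx, cy = (Fraction(v) for v in c)
    det = (bx - ax) * (cy - ay) - (by - ay) * (cx - ax)
    return (det > 0) - (det < 0)

# A point displaced from the line y = x by far less than double precision
# can resolve near 0.5 is still classified without ambiguity.
tiny = Fraction(1, 10**30)
side = orient2d_exact((0, 0), (1, 1), (Fraction(1, 2), Fraction(1, 2) + tiny))
```

The cost is speed: rational arithmetic is much slower than floating point, which is why exact evaluation is usually reserved for the cases where a cheap floating-point filter cannot certify the sign.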

Differentiable feature modeling arises when classic operations are relaxed into smooth counterparts. Smooth Booleans via R-functions and convolution replace crisp min/max with continuously differentiable blends. Offsets can be reframed as PDE-based dilations, turning offset distance into a time-like parameter in a Hamilton–Jacobi formulation. Constraints become either projection operators or penalties: a hard constraint projects design variables back onto the feasible set after an optimization step, while a soft constraint contributes a penalty to the objective, letting the solver negotiate trade-offs rather than fail.
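To make the smooth-Boolean idea concrete, here is a minimal sketch of R-function union and intersection on implicit fields. It assumes the convention that a field is positive inside the shape and zero on the boundary; the helper names (`circle`, `r_union`, `r_intersection`) are illustrative and not taken from any particular kernel.

```python
import math

def circle(cx, cy, r):
    """Implicit field: positive inside the circle, zero on its boundary."""
    return lambda x, y: r * r - ((x - cx) ** 2 + (y - cy) ** 2)

def r_union(f, g):
    """R-disjunction: positive wherever f or g is positive."""
    return lambda x, y: f(x, y) + g(x, y) + math.hypot(f(x, y), g(x, y))

def r_intersection(f, g):
    """R-conjunction: positive only where both f and g are positive."""
    return lambda x, y: f(x, y) + g(x, y) - math.hypot(f(x, y), g(x, y))

a = circle(0.0, 0.0, 1.0)
b = circle(1.5, 0.0, 1.0)
union = r_union(a, b)
overlap = r_intersection(a, b)
```

Unlike `max(f, g)` and `min(f, g)`, these combinations are differentiable everywhere except at points where both fields vanish at once, which is what lets a gradient-based optimizer see through the Boolean.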

The pragmatic path is a hybrid kernel architecture. Maintain a dual representation—precise B-reps for downstream manufacturability and analysis, and implicit fields for differentiability—with a common intermediate representation that tracks both. Attach an autodiff tape to the regeneration graph so each feature knows its local mapping and derivatives with respect to upstream parameters. Add failure-aware backtracking and repair: when a regeneration step detects a non-manifold, a degeneracy, or a constraint conflict, the kernel perturbs along a homotopy schedule to recover a nearby feasible configuration while preserving gradient information.
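The idea of each feature carrying derivatives with respect to upstream parameters can be sketched with dual numbers. Note the substitution: this is forward-mode differentiation, which is simpler to show than the reverse-mode tape described above but propagates the same local derivatives. The three-feature model in `regenerate` (a plate, a hole whose radius is tied to the plate width, and the net area) is hypothetical.

```python
import math

class Dual:
    """A value together with its derivative w.r.t. one chosen parameter."""
    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.dot + o.dot)
    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.dot - o.dot)

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.dot * o.val + self.val * o.dot)
    __rmul__ = __mul__

def regenerate(width, height):
    """Hypothetical feature chain: plate -> derived hole radius -> net area."""
    plate_area = width * height
    hole_radius = 0.2 * width            # downstream feature tied to width
    hole_area = math.pi * hole_radius * hole_radius
    return plate_area - hole_area

# Seed d(width)/d(width) = 1 to get d(area)/d(width) through every feature.
area = regenerate(Dual(10.0, 1.0), Dual(5.0))
```

Here `area.val` is the regenerated net area and `area.dot` is its sensitivity to the plate width, including the indirect effect through the hole radius.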

Several pitfalls recur in practice and deserve systematic mitigation. Topology changes—such as the birth or death of holes—can be handled by embedding a continuation that gradually morphs the feature rather than flipping it. Gradient masking near kinks avoids polluting global search with misleading sensitivities; surrogate losses that approximate sharp features with smooth envelopes enable meaningful updates while preserving the intent of edges and corners. Non-manifolds should be treated as first-class citizens with explicit codimension tracking, enabling the kernel to disambiguate between transient modeling artifacts and true feature intent.

To know whether a differentiable kernel is fit for design, measure what matters:

- Gradient correctness through finite-difference checks across representative models and parameters.

- Regeneration robustness under randomized perturbations and sketch edits; report failure recovery rate and time.

- Feature editability under optimization: how often does a step trigger a catastrophic rebuild versus a recoverable projection?

- Boolean stability envelopes that quantify sensitivity to tolerances and geometric noise.
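The first metric in the list above, gradient correctness, is straightforward to automate. The sketch below compares an analytic gradient against central finite differences and reports the worst relative error; the objective `volume` (a plate with a cylindrical hole) and its parameter ordering are assumptions made for the example.

```python
import math

def central_difference(f, x, i, h=1e-6):
    """Central finite-difference estimate of df/dx_i."""
    xp, xm = list(x), list(x)
    xp[i] += h
    xm[i] -= h
    return (f(xp) - f(xm)) / (2.0 * h)

def max_relative_error(f, grad, x, h=1e-6):
    """Worst-case relative mismatch between grad(x) and finite differences."""
    g = grad(x)
    worst = 0.0
    for i in range(len(x)):
        fd = central_difference(f, x, i, h)
        scale = max(abs(fd), abs(g[i]), 1e-12)
        worst = max(worst, abs(fd - g[i]) / scale)
    return worst

def volume(p):
    """Hypothetical objective: plate (w x d x t) minus a through-hole of radius r."""
    w, d, t, r = p
    return w * d * t - math.pi * r * r * t

def volume_grad(p):
    w, d, t, r = p
    return [d * t, w * t, w * d - math.pi * r * r, -2.0 * math.pi * r * t]

error = max_relative_error(volume, volume_grad, [40.0, 30.0, 5.0, 4.0])
```

In a test suite, a check like this would run across many models and parameter values, with a tolerance chosen to sit above finite-difference round-off but well below the size of a real derivative bug.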

The outcome is a geometry substrate where **differentiable modeling** is the default, not the exception, and where proof-carrying predicates anchor numerical performance to geometric truth.

Differentiable multi-physics at design time

A differentiable kernel unlocks little without physics that can speak the same language. Design-time simulation must provide accurate state and reliable derivatives with respect to geometry, material, process, and control parameters. The core techniques are established, yet underexploited in product design because they rarely appear in the same tool that houses geometry.

- Adjoint and autodiff-enabled FEM/MPM for structural, thermal, and fluid problems; differentiable contact and friction models that remain stable across intermittent contact.

- Spectral and reduced-order surrogates trained online to accelerate repeated queries; mixed-precision GPU solvers with automatic sparsity exploitation and graph coloring for Jacobians and Hessians.
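The adjoint technique named in the first bullet can be shown on the smallest possible structural model: two springs in series, fixed at one end and loaded at the tip. For a state equation K(p) u = f and objective J = cᵀu, the sensitivity is dJ/dp = −λᵀ (∂K/∂p) u, where Kᵀλ = c. The model and function names below are illustrative assumptions, not part of any specific solver.

```python
def solve2(K, b):
    """Solve a 2x2 linear system by Cramer's rule."""
    (a, c), (d, e) = K
    det = a * e - c * d
    return [(b[0] * e - c * b[1]) / det, (a * b[1] - d * b[0]) / det]

def stiffness(k1, k2):
    """Spring k1 joins ground to node 1; spring k2 joins node 1 to node 2."""
    return [[k1 + k2, -k2], [-k2, k2]]

def tip_displacement_and_gradient(k1, k2, force):
    K = stiffness(k1, k2)
    u = solve2(K, [0.0, force])      # state solve: K u = f
    lam = solve2(K, [0.0, 1.0])      # adjoint solve: K^T lam = c (K is symmetric)
    dK_dk1 = [[1.0, 0.0], [0.0, 0.0]]
    dK_dk2 = [[1.0, -1.0], [-1.0, 1.0]]

    def sensitivity(dK):
        # dJ/dp = -lam^T (dK/dp) u
        return -sum(lam[i] * dK[i][j] * u[j] for i in range(2) for j in range(2))

    return u[1], [sensitivity(dK_dk1), sensitivity(dK_dk2)]

tip, grad = tip_displacement_and_gradient(100.0, 50.0, 10.0)
```

The result can be checked by hand: the tip displacement is F/k1 + F/k2, so the derivatives are −F/k1² and −F/k2². The point of the adjoint form is that one extra linear solve yields the gradient with respect to every parameter, however many there are.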

Real products are multi-objective and multi-physics. The same geometry drives stiffness, heat flow, acoustic response, aero/fluids, and electromagnetics. A shared set of design variables should traverse these analyses so the optimizer can trade between, say, structural safety factors and thermal gradients. Reliability demands reasoning under uncertainty: stochastic formulations capture uncertainty distributions in load and material, while robust optimization enforces safety in the worst case over bounded uncertainty sets. The solver must provide gradients of risk measures, not just mean performance.
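One common risk measure is conditional value-at-risk (CVaR), the mean of the worst outcomes beyond a chosen quantile. The sketch below estimates it from load samples for a bar whose tip displacement is u = F·L/(E·A), and differentiates it with respect to the cross-sectional area A. The bar model, the default parameter values, and the choice of CVaR are assumptions for illustration; the sample gradient is valid as long as a small change in A does not alter which samples fall in the tail.

```python
import math

def cvar_and_gradient(area, loads, alpha=0.75, length=1.0, modulus=1.0):
    """Sample CVaR of u = F*L/(E*A) and its derivative with respect to A."""
    disp = sorted(f * length / (modulus * area) for f in loads)
    tail_count = max(1, math.ceil((1.0 - alpha) * len(disp)))
    tail = disp[-tail_count:]                 # the worst (largest) displacements
    cvar = sum(tail) / tail_count
    # For each sample du/dA = -u/A, so the tail average differentiates termwise.
    grad = sum(-u / area for u in tail) / tail_count
    return cvar, grad

risk, d_risk_d_area = cvar_and_gradient(2.0, [1, 2, 3, 4, 5, 6, 7, 8])
```

An optimizer driven by `d_risk_d_area` sizes the bar against its worst quarter of load cases, which is a different and usually more conservative target than the mean response.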

Additive manufacturing injects process-specific physics and constraints. Anisotropic material behavior arises from layerwise deposition. Overhang angle costs, support risk, and porosity must shape the objective. Melt pool dynamics, energy density, and scan strategy influence microstructure and, in turn, macroscopic properties. Residual stress and distortion prediction are not postmortems—they belong inside the design loop so inverse warping can compensate geometry preemptively, with thermal history constraints ensuring a print path respects energy budgets.

Scaling these computations is as much about algorithms as it is about hardware. Domain decomposition and multigrid reduce global solves to local work with fast convergence. Matrix-free operators minimize memory and maximize throughput on GPUs. Differentiable timestepping with checkpointing balances memory with gradient fidelity in transient simulations. The result is wall-clock efficiency that makes design-time iteration feasible for industrial-scale models.
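Checkpointed differentiation trades recomputation for memory: the forward pass stores only every few states, and the reverse pass rebuilds each segment on demand. The sketch below applies this to explicit Euler integration of dx/dt = −k·x and returns the derivative of the final state with respect to k. The toy ODE and function names are assumptions, and the code expects at least one time step.

```python
def step(x, k, dt):
    """One explicit Euler step of dx/dt = -k*x."""
    return x * (1.0 - k * dt)

def forward_with_checkpoints(x0, k, dt, n_steps, every):
    """Integrate forward, storing the state only every `every` steps."""
    checkpoints = {0: x0}
    x = x0
    for n in range(n_steps):
        x = step(x, k, dt)
        if (n + 1) % every == 0 and (n + 1) < n_steps:
            checkpoints[n + 1] = x
    return x, checkpoints

def gradient_wrt_k(x0, k, dt, n_steps, every):
    """d x_final / d k via a reverse sweep that recomputes one segment at a time."""
    x_final, checkpoints = forward_with_checkpoints(x0, k, dt, n_steps, every)
    lam, grad = 1.0, 0.0          # lam holds d x_final / d x_n
    end = n_steps
    for start in sorted(checkpoints, reverse=True):
        states = [checkpoints[start]]             # rebuild x_start .. x_{end-1}
        for _ in range(start, end - 1):
            states.append(step(states[-1], k, dt))
        for x_n in reversed(states):
            grad += lam * (-dt * x_n)             # partial of step w.r.t. k
            lam *= (1.0 - k * dt)                 # partial of step w.r.t. x
        end = start
    return x_final, grad

x_end, dx_dk = gradient_wrt_k(2.0, 0.5, 0.1, 10, every=3)
```

For this linear problem the answer is known in closed form, −N·dt·x₀·(1 − k·dt)^(N−1), which makes it a convenient regression test before moving to a real transient solver.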

Validation must be rigorous and continuous. Canonical tests—cantilevers for structures, heat sinks for conduction-convection, mufflers for acoustics—anchor expectations. Process-aware specimens and lattice coupons calibrate AM surrogates against in-situ sensing and ex-situ metrology. Metrics should focus on decision quality:

- Constraint violation rates across optimization runs and seeds.

- Convergence speed measured in function/gradient calls and wall-clock.

- Sensitivity repeatability across perturbations and remeshing.

- Simulation feasibility rate when coupled to complex geometry edits.

When physics is both fast and differentiable, **co-design** ceases to be a buzzword and becomes an operational paradigm.

From prompts to parametrics—aligning generative AI with engineering intent

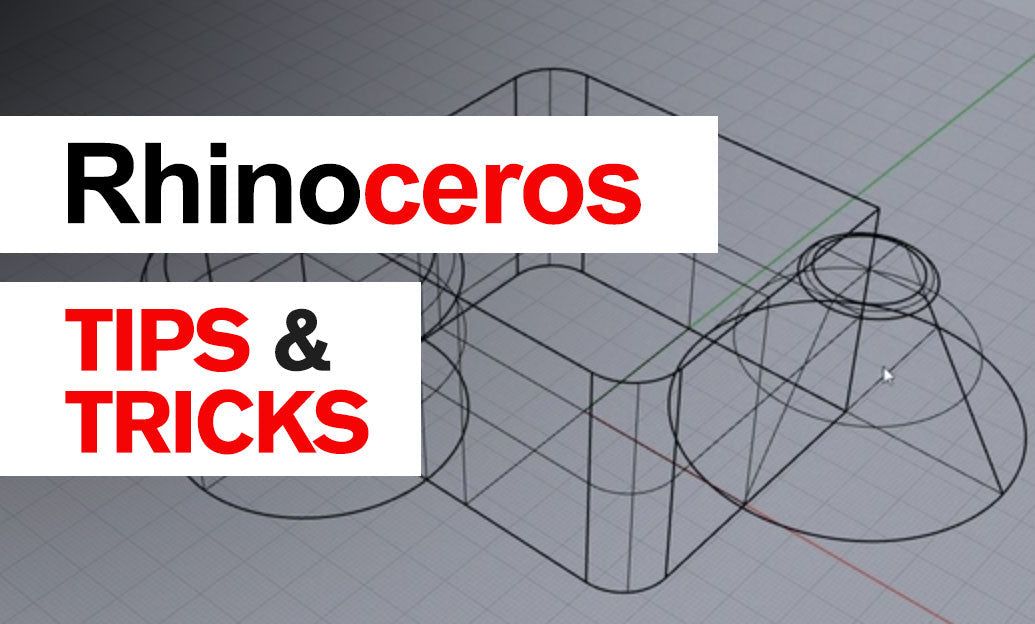

Generative models can sketch possibilities at astonishing speed, but manufacturing and engineering require editability, constraint satisfaction, and traceability. The bridge from “looks right” to “builds right” is representation. A generator that emits meshes or voxels without structure leaves the engineer rewriting the design in CAD. Instead, the model should produce feature programs—sketches, constraints, and dimensions—or a graph-structured CAD that maps to a production kernel.

Several representations support editable generation. Shape programs and domain-specific languages compile into feature graphs with explicit dependencies. Neural implicit priors can be mapped to parametric handles through inverse mapping networks, grounding high-dimensional latent spaces in interpretable dimensions like wall thickness, fillet radius, or lattice porosity. The result is a dialog where the human can edit, not just accept or reject.
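A feature program with explicit dependencies can be very small. The sketch below represents features as records and derives a valid regeneration order from their dependency graph using Python's standard `graphlib` (Python 3.9 or later). The `Feature` fields and the example feature names are hypothetical and do not correspond to any particular CAD system's schema.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter

@dataclass
class Feature:
    name: str
    kind: str                                  # e.g. "sketch", "extrude", "fillet"
    params: dict = field(default_factory=dict)
    depends_on: tuple = ()

def regeneration_order(features):
    """Order features so each one is rebuilt after everything it depends on."""
    graph = {f.name: set(f.depends_on) for f in features}
    return list(TopologicalSorter(graph).static_order())

# A generator may emit features in any order; the graph fixes the rebuild order.
program = [
    Feature("fillet_1", "fillet", {"radius": 2.0}, ("extrude_1",)),
    Feature("sketch_1", "sketch", {"width": 40.0, "height": 20.0}),
    Feature("extrude_1", "extrude", {"depth": 5.0}, ("sketch_1",)),
]
order = regeneration_order(program)
```

Because the parameters stay named and typed, a designer can change `radius` or `depth` after generation and rebuild, which is exactly what a raw mesh or voxel output does not allow.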

Data curation determines model behavior. Mining STEP and Parasolid repositories into program traces allows the model to learn the semantics of sketches, constraints, and features. Deduplication suppresses superficial variants; anonymization and license-aware curation protect IP and enable ethical scale. Automatic constraint extraction (recognizing symmetry, parallelism, or collinearity) and semantic labeling of features form the supervision signals that push generation toward editability and verifiability.
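Automatic constraint extraction can start from simple geometric tests with a tolerance. The sketch below labels pairs of 2D line segments as parallel or collinear; the function names and the single shared tolerance are simplifying assumptions, and a real pipeline would scale tolerances to the model size and cover many more constraint types.

```python
import math

def direction(seg):
    """Unit direction vector of a segment ((x0, y0), (x1, y1))."""
    (x0, y0), (x1, y1) = seg
    length = math.hypot(x1 - x0, y1 - y0)
    return ((x1 - x0) / length, (y1 - y0) / length)

def are_parallel(s, t, tol=1e-6):
    (ux, uy), (vx, vy) = direction(s), direction(t)
    return abs(ux * vy - uy * vx) <= tol       # cross product of unit vectors

def are_collinear(s, t, tol=1e-6):
    if not are_parallel(s, t, tol):
        return False
    (x0, y0), _ = s
    (px, py), _ = t
    ux, uy = direction(s)
    # Perpendicular distance from t's start point to the line through s.
    return abs((px - x0) * uy - (py - y0) * ux) <= tol

def extract_constraints(segments, tol=1e-6):
    """Return (kind, i, j) labels for every constrained pair of segments."""
    found = []
    for i in range(len(segments)):
        for j in range(i + 1, len(segments)):
            if are_collinear(segments[i], segments[j], tol):
                found.append(("collinear", i, j))
            elif are_parallel(segments[i], segments[j], tol):
                found.append(("parallel", i, j))
    return found

sketch = [((0, 0), (1, 0)), ((2, 0), (3, 0)), ((0, 1), (1, 1)), ((0, 0), (0, 1))]
labels = extract_constraints(sketch)
```

Labels like these become supervision targets: the model is trained to emit the constraint, not just coordinates that happen to satisfy it.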

The human remains central. The workflow is **AI proposes, designer constrains**: the model offers candidates with uncertainty annotations; the human adds constraints or adjusts handles; the system refines using differentiable solvers that treat constraint satisfaction as a first-class objective. Interactive critique loops keep design rationale attached to geometry so decisions survive handoff to simulation and manufacturing.

Guardrails ensure safety and brand integrity. IP leakage prevention mechanisms filter training data and outputs; policy checks catch unsafe geometries; style and brand constraints bias the generator to corporate signatures. Evaluation must move beyond aesthetics. A generator earns trust when its outputs rate highly on four measures: editability, constraint satisfaction rate, manufacturability, and simulation feasibility rate. Time-to-spec convergence and number of human interventions quantify how quickly a design becomes production-ready.

This alignment—generative priors snapped onto parametric programs—lets **generative engineering** accelerate ideation without sacrificing rigor.

Manufacturing-aware co-optimization for additive processes

Designing for additive manufacturing requires embedding process realities into the objective, not checking them at the end. Overhang integrals penalize geometry that demands supports beyond strategic anchors. Orientation becomes a design variable: the optimizer may rotate the part to minimize support volume while maintaining functional surfaces. Toolpath-aware losses enforce bead width limits, contour-infill coupling, and thermal accumulation constraints so the proposed geometry is coherent with feasible scan strategies.
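An overhang penalty and an orientation search can be sketched over a list of facets. The code below assumes each facet is given as a unit normal and an area, measures the overhang angle from the vertical build direction, and uses a softplus to keep the penalty smooth. It ignores facets resting on the build plate and searches only rotations about the x-axis, both of which are simplifications; all names are illustrative.

```python
import math

def softplus(x, beta=20.0):
    """Smooth approximation of max(0, x); larger beta is sharper."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(beta * x))) / beta

def overhang_penalty(facets, build_dir, max_angle_deg=45.0):
    """Area-weighted smooth penalty for down-facing facets beyond the limit.

    facets: iterable of (unit_normal, area); build_dir: unit vector pointing up.
    max_angle_deg is the allowed tilt from vertical (0 = wall, 90 = flat ceiling).
    """
    limit = math.sin(math.radians(max_angle_deg))
    total = 0.0
    for normal, area in facets:
        downward = -sum(n * b for n, b in zip(normal, build_dir))
        total += area * softplus(downward - limit)
    return total

def rotate_about_x(v, angle):
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (x, c * y - s * z, s * y + c * z)

def best_orientation(facets, candidates_deg):
    """Pick the candidate rotation (degrees about x) with the lowest penalty."""
    up = (0.0, 0.0, 1.0)
    def cost(deg):
        a = math.radians(deg)
        return overhang_penalty([(rotate_about_x(n, a), area) for n, area in facets], up)
    return min(candidates_deg, key=cost)

part = [((0.0, 0.0, -1.0), 2.0), ((0.0, 0.0, 1.0), 0.5)]
choice = best_orientation(part, [0, 90, 180])
```

With a smooth penalty, the orientation angle can also be handed to a gradient-based optimizer instead of being picked from a discrete list.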

Microstructures and lattices turn material into a field of tunable properties. Property-targeted metamaterials can achieve stiffness-to-weight, damping, or thermal conductivity profiles unattainable with bulk solids. Continuum-to-lattice mapping, grounded in homogenization, ties macroscopic targets to printable unit cells, provided the targets are constrained to the property range those cells can actually reach. Connectivity and drainage constraints avoid trapped powder and dead-end struts, aligning geometric elegance with process practicality.
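As a simple stand-in for a full homogenization model, the Gibson-Ashby scaling law E = C·E_s·ρⁿ relates a lattice's effective modulus to its relative density ρ, with n = 2 a common value for bending-dominated cells. The sketch below inverts that law for a target modulus and clamps the result to a printable density range. The default bounds and the coefficients are placeholders that would need calibration for a specific unit cell and process.

```python
def relative_density_for_stiffness(target_modulus, solid_modulus,
                                   c=1.0, n=2.0,
                                   min_density=0.1, max_density=0.6):
    """Invert E_target = c * E_solid * rho**n and clamp to printable bounds.

    Returns (rho, reachable), where `reachable` is False if the target had to
    be clamped, meaning the requested stiffness is outside the printable range.
    """
    rho = (target_modulus / (c * solid_modulus)) ** (1.0 / n)
    clamped = min(max(rho, min_density), max_density)
    return clamped, clamped == rho

# Target 4 GPa from a 100 GPa base material (units only need to be consistent).
rho, reachable = relative_density_for_stiffness(4.0, 100.0)
```

Returning the `reachable` flag, rather than clamping silently, lets the outer optimizer know when a macroscopic target has no printable realization.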

Process simulation belongs inside the loop. Thermo-mechanical fields from scan histories predict residual stress; inverse warping compensates expected distortion at the geometry level. Porosity and microstructure surrogates, calibrated with in-situ sensing and ex-situ micrographs, flag regions at risk and propose local parameter tweaks. These models must be differentiable to inform upstream geometry and process variables during optimization.
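Inverse warping can be framed as a fixed-point iteration: adjust the as-designed geometry by the simulated error until the simulated as-built geometry matches the target. In the sketch below, `toy_shrinkage` is a hypothetical stand-in for a thermo-mechanical process simulation, and geometry is reduced to a list of scalar coordinates to keep the example short.

```python
def compensate(target, simulate, iterations=20, relax=1.0):
    """Iteratively pre-distort a design so that simulate(design) ~= target."""
    design = list(target)
    for _ in range(iterations):
        built = simulate(design)
        # Move each coordinate against its predicted error.
        design = [d - relax * (b - t) for d, b, t in zip(design, built, target)]
    return design

def toy_shrinkage(points, factor=0.98, sag=0.05):
    """Hypothetical process model: uniform shrinkage plus a constant sag."""
    return [factor * p - sag for p in points]

target = [10.0, 20.0, 30.0]
compensated = compensate(target, toy_shrinkage)
as_built = toy_shrinkage(compensated)
```

The iteration converges quickly here because the distortion is small relative to the part; for stronger or nonlinear distortion, a relaxation factor below 1 or a gradient-based solve using the differentiable simulation would be the safer choice.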

Qualification and traceability turn a design into a certifiable product. Digital certificates tie geometry, parameters, machine, and material batch into a single record. Statistical process control monitors layerwise signatures; Bayesian updating from sensor feedback improves parameter selection across builds and sites. The result is a feedback loop that raises yield and tightens uncertainty bounds as the fleet prints.
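Bayesian updating from build outcomes can be as simple as a Beta-Binomial model per parameter set: each passed or failed build updates the posterior on that set's success rate. The sketch below assumes pass/fail outcomes and a uniform Beta(1, 1) prior; the function names and the example history are illustrative, and real layerwise sensor data would call for a richer likelihood.

```python
def update_beta(alpha, beta, passed, failed):
    """Conjugate update of a Beta(alpha, beta) prior with pass/fail counts."""
    return alpha + passed, beta + failed

def beta_mean(alpha, beta):
    """Posterior mean success rate."""
    return alpha / (alpha + beta)

def pick_parameter_set(history, prior=(1.0, 1.0)):
    """history maps a parameter-set name to (passed, failed) build counts."""
    posteriors = {name: update_beta(*prior, p, f) for name, (p, f) in history.items()}
    best = max(posteriors, key=lambda name: beta_mean(*posteriors[name]))
    return best, posteriors

best, posteriors = pick_parameter_set({"A": (18, 2), "B": (9, 0)})
```

Choosing by posterior mean is the simplest policy; sampling from the posteriors instead would keep exploring parameter sets that have few builds behind them.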

Planning and economics close the loop with reality. Build packing, orientation, supports, and scheduling should be co-optimized alongside cost, energy, and carbon. A design that saves 5% mass but adds 40% support volume may be dominated by an alternative that balances both. With the right objective, the optimizer navigates these trade spaces automatically.
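The notion of one design being dominated by another has a precise definition in multi-objective optimization: a design is dominated if some alternative is no worse on every objective and strictly better on at least one. The sketch below filters a set of candidates down to the non-dominated front; the candidate names and the (mass, support volume) values, expressed relative to a baseline, are made up for the example.

```python
def dominates(a, b):
    """True if a is no worse than b everywhere and strictly better somewhere.

    All objectives are minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(designs):
    """Keep only designs that no other design dominates."""
    return {
        name: obj
        for name, obj in designs.items()
        if not any(dominates(other, obj)
                   for other_name, other in designs.items() if other_name != name)
    }

# Objectives: (relative mass, relative support volume).
candidates = {
    "baseline": (1.00, 1.00),
    "light": (0.95, 1.40),      # saves 5% mass, adds 40% support
    "balanced": (0.94, 1.05),   # slightly lighter still, far less support
}
front = pareto_front(candidates)
```

In this example the "light" design drops out, while the baseline and the balanced design both remain because each is better than the other on one objective.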

To ground the approach, consider a few pattern-based exemplars that recur across industries:

- A lightweight bracket with distortion compensation, where residual-stress-informed inverse warping is co-optimized with topology and toolpath to hit tolerances off the printer.

- A patient-specific lattice implant with anisotropy bounds, balancing regional stiffness targets with unit-cell printability and pore interconnectivity for osseointegration.

- A conformal-cooled mold insert with support-minimal channels, where overhang costs and recoater clearance shape the channel network alongside thermal performance.

Embedding these DfAM logics into the solver ensures that manufacturability is not an afterthought but a governing force within the **manufacturing-aware optimization** loop.

Real-time visualization, verification, and the design-to-factory digital thread

A programmable, auditable pipeline needs a scene graph and interchange model that preserves intent and links analyses. OpenUSD offers assembly context, variants, and layering to orchestrate complex products. Model-based definition (MBD) with PMI keeps tolerances and annotations attached to geometry, not lost in PDFs. Bridges to STEP AP242, STEP-NC, and QIF connect design to metrology and machine instruction without breaking lineage.

Visual truth matters. Real-time path tracing communicates materials, finishes, and appearance with physical fidelity; neural rendering scales this fidelity to massive assemblies without crippling frame rates. Field visualization—stress, temperature, uncertainty, and gradients—belongs in the same viewport, including XR reviews where stakeholders inspect hotspots and sensitivities at 1:1 scale.

Verification must be woven into the same thread. Geometric differencing detects unintended changes; spec regression tests confirm that edits preserve performance envelopes; simulation invariants (like energy conservation) act as tripwires. Golden-master baselines ensure that a design remains within certified bounds as it evolves. Pipelines should be reproducible end-to-end through containers and environment capture, with cryptographic signatures and audit trails that prove who changed what, when, and under which inputs.
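A tamper-evident audit trail can be sketched as a hash chain, where each entry's digest covers its content and the digest of the previous entry. The code below uses SHA-256 from Python's standard library. It provides tamper evidence only: a real deployment would additionally sign entries with private keys and record timestamps, both omitted here to keep the example deterministic. The entry fields are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64

def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(log, author, action, inputs):
    """Append an entry whose hash covers its content and the previous hash."""
    prev = log[-1]["hash"] if log else GENESIS
    body = {"author": author, "action": action, "inputs": inputs, "prev": prev}
    log.append({**body, "hash": _digest(body)})
    return log

def verify(log):
    """Recompute every digest and check that the chain links are intact."""
    prev = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("author", "action", "inputs", "prev")}
        if entry["prev"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True

log = []
append_entry(log, "designer_a", "edit_fillet", {"radius": 2.0})
append_entry(log, "analyst_b", "rerun_simulation", {"mesh_size": 0.5})
intact = verify(log)
```

Changing any recorded input after the fact, or reordering entries, makes `verify` fail from that point in the chain onward.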

Decision support turns data into action. Uncertainty overlays and sensitivity maps reveal which parameters control outcomes; explainable recommendations rank feasible edits with quantified impact on objectives and constraints. What-if sandboxes tie geometry changes to BOM, supply chain, and sustainability dashboards so a designer sees cost, lead time, and carbon shifts before committing to an edit.

Performance and scale are non-negotiable. Out-of-core streaming handles assemblies that dwarf GPU memory. GPU baking precomputes shaders, distance fields, and field interpolants for instant feedback. Scalable compute orchestrates across desktop workstations, cloud clusters, and edge devices on the factory floor, keeping the same model running from ideation to inspection.

When visualization, verification, and interchange share one substrate, the **auditable digital thread** becomes tangible, connecting prompt to parametric program to print file to inspection report without translation loss.

Conclusion

By unifying **differentiable geometry**, **multi-physics co-design**, **generative programming**, **AM-aware optimization**, and **verifiable visualization** in a single, programmable pipeline, we expose intent to computation and make manufacturability a first-class variable. The immediate next step is a reference stack (open benchmarks, interoperable intermediate representations, and pilot deployments) that proves these ideas in production while setting new standards for auditability, safety, and speed. The reward is a design practice where optimization is native, handoffs are minimal, and the path from prompt to qualified part is both fast and verifiable at every step.