Your Cart is Empty

Customer Testimonials

-

"Great customer service. The folks at Novedge were super helpful in navigating a somewhat complicated order including software upgrades and serial numbers in various stages of inactivity. They were friendly and helpful throughout the process.."

Ruben Ruckmark

"Quick & very helpful. We have been using Novedge for years and are very happy with their quick service when we need to make a purchase and excellent support resolving any issues."

Will Woodson

"Scott is the best. He reminds me about subscriptions dates, guides me in the correct direction for updates. He always responds promptly to me. He is literally the reason I continue to work with Novedge and will do so in the future."

Edward Mchugh

"Calvin Lok is “the man”. After my purchase of Sketchup 2021, he called me and provided step-by-step instructions to ease me through difficulties I was having with the setup of my new software."

Mike Borzage

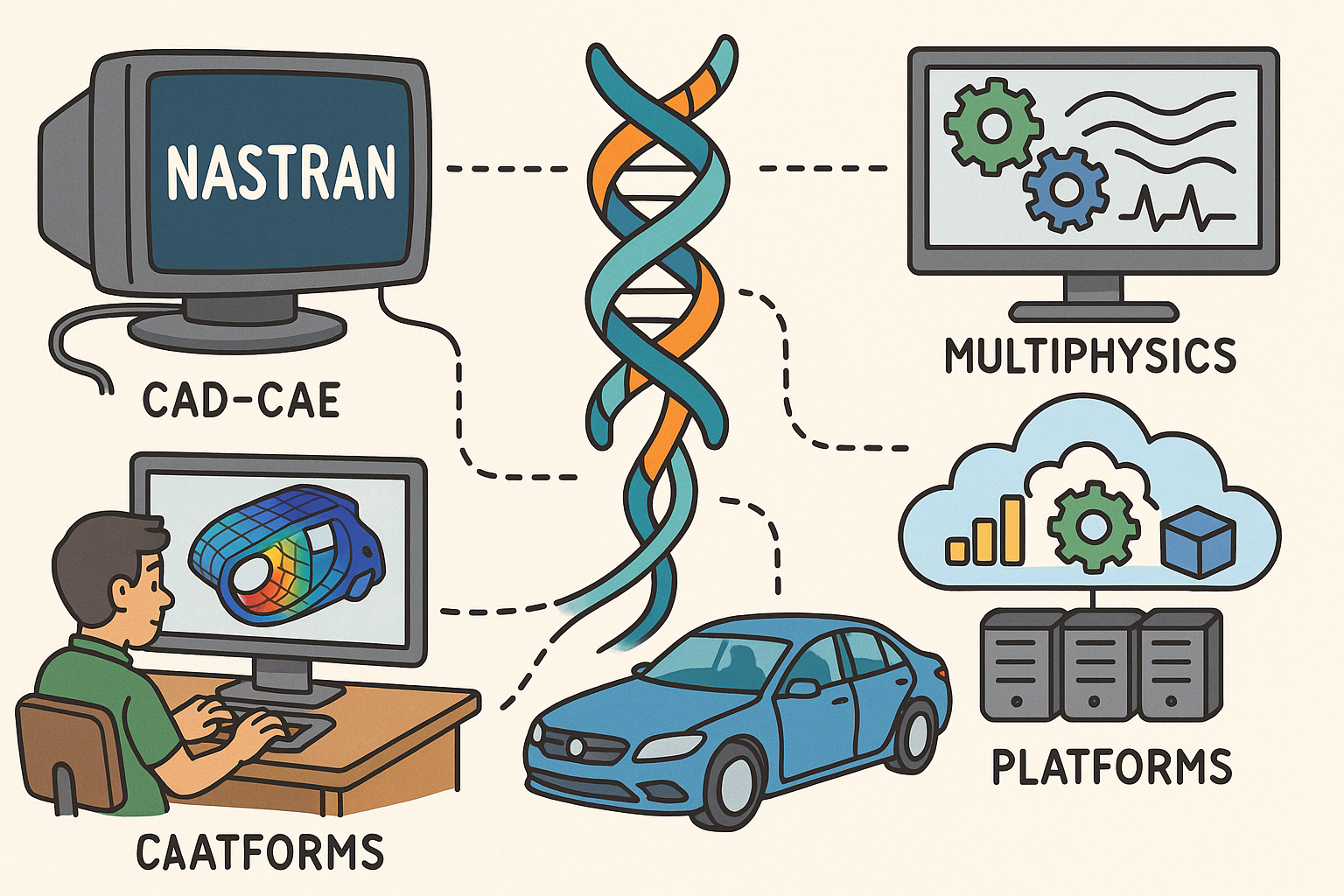

Design Software History: From NASTRAN to Multiphysics: CAD–CAE Coupling, Platforms, and the Digital Thread

November 06, 2025 11 min read

Origins and the Road to Multi‑Physics

1960s–1980s: Single‑physics maturity

The modern multi-physics landscape is rooted in a period of relentless single-physics refinement, when structural analysis and fluid dynamics each advanced on their own tracks. NASTRAN grew out of a NASA-sponsored development effort in the 1960s in which the MacNeal-Schwendler Corporation (MSC), founded by Richard MacNeal and Robert Schwendler, was a principal contributor. It became a definitive platform for structural finite element analysis (FEA), encoding the stiffness method and sparse direct solvers into a systemized workflow that ran on mainframes with batch queues and punched-card discipline. MSC's commercial Nastran distributions then created an ecosystem of DMAP customization, superelements, and modal reduction that became indispensable across aerospace and heavy industries. In parallel, John Swanson left Westinghouse to create ANSYS, engineering a general-purpose solver with a robust element library and thermal-structural coupling that would eventually become a cornerstone of enterprise simulation. HKS (David Hibbitt, Bengt Karlsson, and Paul Sorensen) launched Abaqus to tackle nonlinearities that were under-served by linearized workflows: large deformation, plasticity, and contact. Abaqus introduced dependable Newton-Raphson strategies, automatic stabilization, and a powerful input syntax that engineers could extend through user subroutines.

On the fluids side, the finite volume method moved from academic maturity into production software. Brian Spalding at Imperial College London created PHOENICS, the first general-purpose CFD code available commercially, carrying SIMPLE-family pressure-velocity coupling algorithms into industry. David Gosman's trajectory, from the same Imperial lineage, fed into STAR-CD at CD-adapco, which would later pioneer polyhedral meshing and fully integrated pre-solve-post loops. AEA Technology's CFX advanced pressure-based solvers and high-quality hexahedral boundary layers for turbomachinery, while FLUENT (which grew out of work at the University of Sheffield and was first commercialized by Creare in the early 1980s) expanded turbulence modeling and industrial usability. The collective impact was a hardening of single-physics capabilities: robust element and turbulence libraries, better contact and combustion models, and nascent parallelism via vector machines and then early message-passing systems. By the late 1980s, the field had established dependable single-domain tools and a shared expectation that computational simulation could steer design decisions, a conviction that set the stage for coupling.

1990s: Coupling begins

The 1990s introduced the idea that one solver’s outputs could be another’s inputs in an automated loop. Engineers began to orchestrate partitioned co‑simulation for fluid‑structure interaction (FSI): the CFD code sent pressure loads to the FEA code, which returned displacements, iterating per time step with relaxation to maintain stability. These were typically “loose” couplings with staggered marching and field‑mapping strategies that relied on surface interpolation and conservative flux transfer. In parallel, 1D system simulation matured as Hilding Elmqvist shepherded the Modelica language (1996) and the Dymola tool, bringing acausal, equation‑based modeling to multi‑domain physical systems—electro‑mechanical, thermo‑fluid, and controls. That 1D plant modeling bridged to 3D CAE through connectors that let a drivetrain’s lumped inertia interact with a 3D gearbox mesh or a battery’s equivalent circuit inform a thermal CFD model.

A pivotal signal within product development arrived when PTC acquired Rasna in 1995, folding Pro/MECHANICA into the Pro/ENGINEER (later Creo) environment. Rasna's p-method FEA and sensitivity-based design studies cemented the notion that CAD-embedded analysis wasn't a luxury but a strategic imperative. Similarly, early versions of CATIA V5 shipped with linked analysis modules, and simulation add-ins for SolidWorks matured. Across industries, the pattern repeated: data exchange through neutral formats (IGES, STEP) gave way to persistent feature and parameter connectivity, and simulation post-processing began to feed upstream into design constraints and parametric updates. The decade ended with a clear thesis: multi-physics would be achieved not merely by bigger solvers, but via reliable coupling, language standards, and integrated environments that aligned geometry, numerics, and controls within the same engineering loop.

2000s: Suites coalesce

In the 2000s, the market consolidated around integrated suites that promised one data model, one licensing pool, and one user experience spanning pre‑ to post‑processing. ANSYS launched Workbench to unify geometry, meshing, and physics under an associative project schematic. The strategy accelerated through acquisitions: CFX (2003) brought turbomachinery‑grade CFD; Fluent (2006) added a massive user base and broad turbulence/combustion coverage; Ansoft (2008) contributed HFSS and Maxwell to establish electromagnetics as a first‑class citizen. Workbench’s project page and parameter manager made multi‑physics orchestrations repeatable, while custom journals and later ACT extensions reduced friction in template‑based engineering.

COMSOL took a different tack, popularizing a “multiphysics‑first” approach at the PDE level. Instead of wiring solvers together, it invited users to declare coupled PDEs in one environment, with weak‑form assembly and automatic Jacobian generation. Simultaneously, Siemens assembled a portfolio: UGS/NX for CAD/CAE, LMS Amesim (2012) to bolster 1D system simulation, and ultimately STAR‑CCM+ through the CD‑adapco acquisition (2016), giving the company a unified CFD environment with integrated meshing and design exploration. Dassault Systèmes formed SIMULIA after acquiring Abaqus (2005), wiring in process automation (Isight from Engineous, 2008), fatigue (fe‑safe from Safe Technology, 2013), and later electromagnetic simulation via CST (2016), all increasingly managed through the 3DEXPERIENCE platform. The result was a shift from solver‑centric toolboxes to end‑to‑end platforms where CAD associativity, meshing, solving, optimization, and data management lived behind a single login and data backbone.

2010s–2020s: Industrialized multi‑physics

During the 2010s, multiphysics moved from frontier to factory. Hexagon acquired MSC (2017), consolidating MSC Nastran, Marc (nonlinear), Adams (multibody dynamics), and manufacturing simulation under a metrology-infused digital thread. Optimization strategies matured: Altair's OptiStruct and HyperStudy elevated topology and DoE-driven exploration, while Dassault Systèmes acquired FE-DESIGN (Tosca, 2013) to bring structural topology and CFD shape optimization into SIMULIA. Morphing tools, CAD-linked parameterization, and variability management became table stakes. Electromagnetics, once peripheral, shifted center stage as ANSYS (Ansoft) and Dassault (CST) expanded EM–thermal–structural couplings for power electronics, antennas, and battery systems.

Infrastructure changed just as radically. Cloud/HPC democratization expanded through AWS, Azure, and platforms like Rescale and UberCloud; containers improved portability; schedulers such as SLURM and PBS integrated with vendor license managers; and usage shifted toward elastic consumption and tokens. At the hardware level, GPU acceleration spread across structural, CFD, and EM solvers: Abaqus, LS-DYNA (part of Ansys since its 2019 acquisition of LSTC), Ansys Fluent's GPU solver, and CST/HFSS GPU back-ends all pushed scalable throughput. Meanwhile, live parameter studies and adjoint methods shortened the loop between hypothesis and geometry change. By the early 2020s, the state of practice was clear: multi-physics at scale, not as a special project, but as an everyday engine of design iteration, managed under PLM and validated against requirements in a closed loop.

How Tight CAD–CAE Coupling Works

Geometry associativity and model preparation

At the heart of tight CAD–CAE coupling is geometry associativity: the persistent linkage between a CAD model's feature tree and the meshing, boundary conditions, and result objects downstream. Associativity preserves parameters, dimensions, and named selections so that a fillet radius change in NX, CATIA, Creo, or SolidWorks propagates through meshing and re-solving without manual rework. Toolchains such as NX/Simcenter, CATIA+SIMULIA, Creo+Creo Simulate, and SolidWorks+Simulation expose this continuity through selection rules (by feature, by name, by topology) that survive regeneration and local remeshing. Since analysis geometry often differs from manufacturing geometry, direct modeling becomes essential: SpaceClaim (embedded in ANSYS), synchronous technology in NX, flexible modeling in Creo, and SIMULIA's tools enable defeaturing (removing slivers, small holes), midsurfacing for thin-walled parts, and "virtual topology" that suppresses tiny edges without altering the native CAD definition.

To bridge heterogeneous kernels—Parasolid (Siemens), ACIS (Spatial), and CGM (Dassault) among others—platforms deploy tolerant modeling and geometry healing. They stitch faces, close gaps, and adjust tolerances so watertight meshes can be generated even when imported STEP data carries small inconsistencies. Effective workflows combine:

- Parametric drivers (expressions, design tables) that feed analysis variables directly.

- Persisted “named selections” for loads/BCs that remain valid after topology edits.

- Mid‑surface extraction and imprint operations for shell/solid transitions.

- Virtual topology layers that protect meshability while respecting the master CAD.

These mechanisms ensure that CAE edits are reversible, auditable, and aligned to the authoritative CAD model, preserving a clean path back to manufacturing data and PMI.
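The idea of a selection rule that survives regeneration can be sketched in a few lines. The example below is a toy illustration, not any vendor's API: `Face`, `SelectionRule`, and the feature names are hypothetical, and the "regeneration" is simulated by renumbering face IDs. It shows why a boundary condition bound to a rule (by feature and surface type) stays attached, while one bound to a raw face ID would not.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Face:
    """A toy B-rep face. IDs are assumed to change whenever the CAD model regenerates."""
    face_id: int
    feature: str   # name of the CAD feature that created the face
    kind: str      # e.g. "planar" or "cylindrical"


@dataclass(frozen=True)
class SelectionRule:
    """A hypothetical named selection stored as a rule instead of a list of face IDs."""
    name: str
    feature: str
    kind: str

    def resolve(self, faces: List[Face]) -> List[int]:
        # Re-evaluate the rule against the current geometry every time it is needed.
        return sorted(
            f.face_id for f in faces
            if f.feature == self.feature and f.kind == self.kind
        )


# Geometry before a parameter change.
before = [
    Face(1, "base_plate", "planar"),
    Face(2, "bolt_hole", "cylindrical"),
    Face(3, "fillet_1", "cylindrical"),
]

# Geometry after regeneration: same features, but face IDs were renumbered
# and an extra face appeared because a new feature was added.
after = [
    Face(10, "base_plate", "planar"),
    Face(11, "stiffening_rib", "planar"),
    Face(12, "bolt_hole", "cylindrical"),
    Face(13, "fillet_1", "cylindrical"),
]

bolt_load = SelectionRule(name="bolt_preload_faces", feature="bolt_hole", kind="cylindrical")

ids_before = bolt_load.resolve(before)
ids_after = bolt_load.resolve(after)
print(ids_before, ids_after)
```

Real CAD kernels track entity identity with far richer data (feature history, geometric signatures, persistent tags), so this should be read only as a picture of the principle.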

Meshing and data fidelity

Meshing translates geometric intent into discrete control volumes or elements, and fidelity hinges on how well the mesh retains association back to the CAD features. Automated hex-dominant, tetrahedral, and poly-prism schemes now react to CAD updates by locally remeshing only what changed, maintaining boundary layer inflation, edge sizing, and growth rules. STAR-CCM+ embeds meshing deeply within the CFD environment, reducing context switches and letting users combine polyhedral cores with prism layers in one pipeline. In structural domains, hierarchical tet refinements and swept hex blocks work alongside midsurfaces to achieve accuracy without exploding element counts. COMSOL's LiveLink connectors (to SolidWorks, Inventor, and others) maintain geometry fidelity across iterations, enabling re-solve without re-import.

Adaptive loops close the gap between initial guesses and converged accuracy. Curvature‑based refinement adapts near fillets and contact zones; error estimators drive h‑refinement, while p‑refinement (higher‑order elements) and r‑refinement (node relocation) tune accuracy against cost. Key practices include:

- Protecting CAD‑referenced regions with “frozen” mesh controls so adaptive steps don’t lose BC context.

- Using feature‑based sizing tied to named edges/faces to survive topology edits.

- Employing conservative field mapping when remeshing transient runs, preserving integral quantities.

By treating the mesh as an associative derivative of geometry rather than a detached artifact, teams preserve data fidelity across hundreds of iterations, a prerequisite for keeping accumulated error under control in multi-physics studies.
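Conservative field mapping is easiest to see in one dimension. The sketch below assumes piecewise-constant cell averages on two 1D meshes that cover the same interval; the function names `conservative_remap` and `integral` are illustrative, not taken from any solver. Each target cell receives the overlap-weighted average of the source cells it intersects, so the integral of the field is the same before and after remeshing.

```python
from typing import List


def integral(edges: List[float], values: List[float]) -> float:
    """Integral of a piecewise-constant field: sum of cell value times cell width."""
    return sum(v * (edges[i + 1] - edges[i]) for i, v in enumerate(values))


def conservative_remap(
    src_edges: List[float], src_values: List[float], dst_edges: List[float]
) -> List[float]:
    """Map cell averages from a source mesh to a target mesh using overlap lengths.

    Assumes both meshes are sorted and span the same interval.
    """
    dst_values = []
    for j in range(len(dst_edges) - 1):
        lo, hi = dst_edges[j], dst_edges[j + 1]
        accumulated = 0.0
        for i in range(len(src_edges) - 1):
            # Length of the intersection between target cell j and source cell i.
            overlap = min(hi, src_edges[i + 1]) - max(lo, src_edges[i])
            if overlap > 0.0:
                accumulated += src_values[i] * overlap
        dst_values.append(accumulated / (hi - lo))
    return dst_values


# Old mesh with three cells, new mesh with four cells over the same interval [0, 4].
old_edges = [0.0, 1.0, 2.0, 4.0]
old_field = [2.0, 4.0, 1.0]
new_edges = [0.0, 0.5, 1.5, 3.0, 4.0]

new_field = conservative_remap(old_edges, old_field, new_edges)
print(new_field)
print(integral(old_edges, old_field), integral(new_edges, new_field))
```

A plain point interpolation of the same data would generally not preserve the integral, which is why the distinction matters for quantities such as mass, energy, or total force. Production mappers do the same bookkeeping with 2D or 3D cell intersections.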

Orchestration, co‑simulation, and standards

Robust multi‑physics requires orchestration that safely exchanges fields, aligns time steps, and manages convergence across domains. In‑solver couplers like ANSYS System Coupling and SIMULIA’s Co‑Simulation Engine synchronize solvers for FSI, conjugate heat transfer, and EM–thermal–structural problems. They handle interpolation between non‑conformal meshes, stabilize staggered marching with Aitken relaxation, and, where needed, support quasi‑monolithic schemes via tight iteration per physical time step. Outside the solver, system‑level tools—Simcenter Amesim, Dymola, and Simulink—connect 1D plant models to 3D CAE, embedding control loops and hardware behavior into the physics exchange.
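As an illustration of staggered coupling stabilized by Aitken relaxation, the sketch below couples two toy solvers through an interface displacement vector. The `fluid_solver` and `structure_solver` functions are made-up linear stand-ins (not real CFD or FEA), chosen so that an unrelaxed fixed-point iteration would diverge. The relaxation factor is updated with the standard Aitken formula from successive interface residuals.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fluid_solver(d: Vector) -> Vector:
    """Toy 'CFD' step (assumption): interface pressure drops as the wall displaces."""
    p0 = [10.0, 4.0]
    a, b = 3.0, 1.0
    return [p0[0] - a * d[0] - b * d[1], p0[1] - b * d[0] - a * d[1]]


def structure_solver(p: Vector) -> Vector:
    """Toy 'FEA' step (assumption): displacement is pressure divided by a stiffness."""
    k = 2.0
    return [pi / k for pi in p]


def couple_with_aitken(
    fluid: Callable[[Vector], Vector],
    structure: Callable[[Vector], Vector],
    d0: Vector,
    omega0: float = 0.1,
    tol: float = 1e-10,
    max_iter: int = 100,
) -> Tuple[Vector, int]:
    """Partitioned fixed-point iteration on the interface displacement."""
    d = list(d0)
    omega = omega0
    r_prev = None
    for iteration in range(1, max_iter + 1):
        d_tilde = structure(fluid(d))                  # one pass through both solvers
        r = [dt - di for dt, di in zip(d_tilde, d)]    # interface residual
        if dot(r, r) ** 0.5 < tol:
            return d, iteration
        if r_prev is not None:
            dr = [ri - rpi for ri, rpi in zip(r, r_prev)]
            denom = dot(dr, dr)
            if denom > 0.0:
                # Aitken update: omega_new = -omega_old * (r_prev . dr) / |dr|^2
                omega = -omega * dot(r_prev, dr) / denom
        d = [di + omega * ri for di, ri in zip(d, r)]  # relaxed update
        r_prev = r
    raise RuntimeError("coupling iteration did not converge")


d_converged, n_iter = couple_with_aitken(fluid_solver, structure_solver, [0.0, 0.0])
print(d_converged, n_iter)
```

For this linear toy problem the fixed point can be checked by hand: solving the 2x2 system gives displacements of 11.5/6 and 2.5/6. In a real FSI run the same loop sits inside every physical time step, with mesh-to-mesh interpolation on either side of the solver calls.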

Standards anchor repeatability. FMI/FMU, stewarded by the Modelica Association, packages models for cross‑tool exchange with versioned metadata and defined causality, allowing a controller from one vendor to drive a thermal model from another under a common API. Vendor scripting (Python, TCL) and REST/desktop APIs expose model setup and post‑processing for automation on CI/CD‑like runners. Orchestration best practices include:

- Declaring coupling contracts (what fields, what units, what interpolation) explicitly in templates.

- Versioning FMUs and geometry datasets in the same repository for reproducibility.

- Recording solver options and random seeds so that re-runs are reproducible.

With these pieces, co‑simulation becomes an engineered product, not an ad‑hoc script—reducing risk and elevating consistency across programs and suppliers.
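A coupling contract of the kind listed above can be as simple as a declarative record that is checked before any solver starts. The following sketch is one possible shape, with hypothetical names throughout (`FieldSpec`, `validate_contract`, the solver labels, and the port dictionaries are assumptions for illustration, not part of FMI or any vendor tool).

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

ALLOWED_MAPPINGS = ("conservative", "consistent")


@dataclass(frozen=True)
class FieldSpec:
    """One exchanged field: what it is, its unit, how it is mapped, and its direction."""
    name: str
    unit: str
    mapping: str
    source: str
    target: str


def validate_contract(
    fields: Tuple[FieldSpec, ...], ports: Dict[str, Dict[str, str]]
) -> List[str]:
    """Compare the contract with the units each solver declares; return any problems."""
    problems = []
    for spec in fields:
        if spec.mapping not in ALLOWED_MAPPINGS:
            problems.append(f"'{spec.name}' has unknown mapping '{spec.mapping}'")
        for solver in (spec.source, spec.target):
            unit = ports.get(solver, {}).get(spec.name)
            if unit is None:
                problems.append(f"{solver} does not expose '{spec.name}'")
            elif unit != spec.unit:
                problems.append(
                    f"{solver} uses {unit} for '{spec.name}', contract says {spec.unit}"
                )
    return problems


# Contract for a two-way FSI exchange (illustrative values).
contract = (
    FieldSpec("wall_force", "N", "conservative", source="cfd", target="fea"),
    FieldSpec("displacement", "m", "consistent", source="fea", target="cfd"),
)

# Units each solver template claims to use; the FEA side is deliberately wrong.
declared_ports = {
    "cfd": {"wall_force": "N", "displacement": "m"},
    "fea": {"wall_force": "N", "displacement": "mm"},
}

issues = validate_contract(contract, declared_ports)
for issue in issues:
    print(issue)
```

Keeping such a record in version control next to the FMUs and geometry makes a unit or mapping mismatch a failed check rather than a silent error in the results.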

Optimization and the digital thread

Optimization closes the loop from simulation to design change. Adjoint CFD in Fluent and STAR‑CCM+ provides gradients of objectives (drag, pressure loss, mixing) with respect to geometry, enabling high‑dimensional shape optimization that would be impractical with finite differences. On the structural side, topology optimization in OptiStruct and Tosca removes unnecessary material subject to stiffness, dynamics, or thermal constraints, while morphing tools propagate shapes back to CAD. Gradient‑based optimizers leverage consistent sensitivities; surrogate and ML‑assisted methods accelerate exploration when individual runs are expensive. Crucially, modern suites can push these updates automatically to parametric CAD, preserving design intent and PMI.
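Why adjoint gradients scale better than finite differences can be shown on a small structural analogue. The sketch below is not Fluent's or STAR-CCM+'s adjoint; it assumes a chain of linear springs fixed at one end and loaded at the tip, with the tip displacement as the objective and the spring stiffnesses as design parameters. One forward solve and one adjoint solve yield the whole gradient, whereas central finite differences need two solves per parameter.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def solve(A: Matrix, b: Vector) -> Vector:
    """Dense Gaussian elimination with partial pivoting (adequate for a small demo)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def assemble_stiffness(k: Vector) -> Matrix:
    """Spring i joins node i to node i+1; node 0 is fixed, so DOF i is node i+1."""
    n = len(k)
    K = [[0.0] * n for _ in range(n)]
    for i, ki in enumerate(k):
        K[i][i] += ki
        if i > 0:
            K[i - 1][i - 1] += ki
            K[i - 1][i] -= ki
            K[i][i - 1] -= ki
    return K


def objective(k: Vector, f: Vector, c: Vector) -> float:
    u = solve(assemble_stiffness(k), f)
    return sum(ci * ui for ci, ui in zip(c, u))


def adjoint_gradient(k: Vector, f: Vector, c: Vector) -> Vector:
    """dJ/dk_i = -lambda^T (dK/dk_i) u, using one forward and one adjoint solve."""
    K = assemble_stiffness(k)
    u = solve(K, f)
    K_T = [list(col) for col in zip(*K)]
    lam = solve(K_T, c)
    grad = []
    for i in range(len(k)):
        du = u[i] - (u[i - 1] if i > 0 else 0.0)        # spring elongation
        dlam = lam[i] - (lam[i - 1] if i > 0 else 0.0)  # adjoint "elongation"
        grad.append(-dlam * du)
    return grad


def finite_difference_gradient(k: Vector, f: Vector, c: Vector, h: float = 1e-6) -> Vector:
    grad = []
    for i in range(len(k)):
        k_plus = k[:]
        k_minus = k[:]
        k_plus[i] += h
        k_minus[i] -= h
        grad.append((objective(k_plus, f, c) - objective(k_minus, f, c)) / (2.0 * h))
    return grad


stiffness = [2.0, 4.0, 5.0]
load = [0.0, 0.0, 10.0]       # force applied at the tip
selector = [0.0, 0.0, 1.0]    # objective picks out the tip displacement

g_adjoint = adjoint_gradient(stiffness, load, selector)
g_fd = finite_difference_gradient(stiffness, load, selector)
print(g_adjoint)
print(g_fd)
```

For this chain the exact sensitivities are -F/k_i^2, which both methods reproduce. With three parameters the cost difference is trivial; with the thousands of surface parameters typical of shape optimization, the two-solve cost of the adjoint route is what makes the problem tractable.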

PLM binds this loop into a governable process. Teamcenter/Simcenter, 3DEXPERIENCE/SIMULIA, and Windchill with Creo (and connectors to ANSYS) manage configurations, parameters, and requirements so that simulation results trace to named loads, solver versions, and tolerances. Integrations with MBSE/SysML tie system requirements to physical models, ensuring that a requirement change ripples into a new design baseline and regenerated analyses. A reliable digital thread typically includes:

- Parametric CAD as the master, with CAE associativity and variant management.

- Orchestrated solve pipelines with results promotion criteria and sign‑off roles.

- Requirements traceability from SysML to CAD/CAE artifacts, closing the loop from need to evidence.

The outcome is a repeatable, auditable path from concept through verification, where optimization and traceability are first‑class citizens rather than afterthoughts.
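One small building block of such a thread is a way to tell whether a stored result still corresponds to the current design. The sketch below assumes a simple scheme in which every analysis input (CAD parameters, loads, solver version) is hashed into a fingerprint that is stored with the result. All names and values here are hypothetical, and real PLM systems track this through managed revisions rather than ad hoc hashes.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Dict


def fingerprint(inputs: Dict[str, Any]) -> str:
    """Stable short hash of the analysis inputs (keys sorted so ordering is irrelevant)."""
    canonical = json.dumps(inputs, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class Evidence:
    """A simulation result stored as evidence for one requirement."""
    requirement_id: str
    inputs_fingerprint: str
    result: float
    limit: float

    def passes(self) -> bool:
        return self.result <= self.limit


def is_stale(evidence: Evidence, current_inputs: Dict[str, Any]) -> bool:
    """Evidence is stale when the inputs it was computed from no longer match."""
    return evidence.inputs_fingerprint != fingerprint(current_inputs)


# Inputs of the baseline analysis (illustrative values only).
baseline = {
    "cad_parameters": {"fillet_radius_mm": 3.0, "wall_thickness_mm": 2.5},
    "loads": {"bolt_preload_N": 12000.0},
    "solver": {"name": "example_fea", "version": "1.0"},
}

evidence = Evidence(
    requirement_id="REQ-STRESS-001",
    inputs_fingerprint=fingerprint(baseline),
    result=182.0,   # peak stress in MPa from the stored run
    limit=200.0,    # allowable stress in MPa
)

# A designer later changes the fillet radius; the old result must not be reused.
revised = json.loads(json.dumps(baseline))  # deep copy via JSON round trip
revised["cad_parameters"]["fillet_radius_mm"] = 2.0

print(evidence.passes(), is_stale(evidence, baseline), is_stale(evidence, revised))
```

A pipeline can use the stale flag to trigger regenerated analyses automatically when a parameter or requirement changes, which is the behavior the traceability bullets above describe.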

Pivotal Platforms, People, and Turning Points

Structural + CFD + EM consolidation

ANSYS's Workbench exemplified an integrating vision: a project schematic where Fluent and CFX CFD, Mechanical structural, and Ansoft EM solvers share parameters, with SpaceClaim delivering analysis-oriented direct modeling. Siemens orchestrated a tight CAD/CAE/PLM loop under Teamcenter, combining NX CAD, Simcenter 3D for CAE, Amesim for 1D systems, and STAR-CCM+ for integrated CFD/meshing/optimization, with Design Manager enabling massively parallel design exploration. Dassault Systèmes fused SIMULIA (Abaqus, Isight, fe-safe, Tosca, and CST) under 3DEXPERIENCE, knitting CATIA geometry, process templates, and analytics into model-based enterprise workflows. Hexagon's acquisition of MSC packaged MSC Nastran, Marc, Adams, and manufacturing simulation with metrology, closing the loop between as-designed, as-simulated, and as-measured. COMSOL remained an essential counterpoint, advancing equation-level coupling with broad LiveLink connectors so that engineers could perform multiphysics analyses without leaving a single environment.

These consolidations mattered technologically. Cross‑solver parameter control, common meshing frameworks, shared materials databases, and process automation replaced brittle file‑based handoffs. Polyhedral meshing in STAR‑CCM+ improved convergence characteristics; HFSS and CST normalized co‑simulation between EM and thermal/structural; and Abaqus/Explicit and LS‑DYNA brought reliability to crash, impact, and metal forming. By stitching physics domains into coherent platforms, vendors reduced latency between ideas and evidence, and made it realistic to run coupled studies at the pace of design sprints rather than quarterly milestones.

Influential individuals and labs

Personal leadership has repeatedly realigned the field. John Swanson's insistence on general-purpose FEA laid a foundation for broad applicability that ANSYS continued to build upon for decades. David Hibbitt's vision for dependable nonlinear analysis, alongside Bengt Karlsson and Paul Sorensen, made Abaqus the go-to solver when contact and large deformation mattered. Brian Spalding and David Gosman seeded modern CFD through PHOENICS and the lineage that led to STAR-CD and ultimately STAR-CCM+, diffusing finite volume methods and turbulence modeling practice into industry. Hilding Elmqvist's work on Dymola and Modelica established the notion that acausal system modeling could coexist with 3D CAE, a conceptual shift now embedded across Amesim, Simulink, and the FMI standard.

Another inflection came from CAD preparation. Mike Payne, a co‑founder of SpaceClaim and previously a key figure in PTC and SolidWorks history, championed direct modeling that freed analysts from fragile feature trees, accelerating defeaturing and midsurfacing steps. NASA centers, national labs, and university groups—from Langley and Glenn to Sandia and Imperial College—provided the crucible where algorithms were tested under demanding regimes and then transferred to industry. Across these contributions, a common theme recurs: ideas traveled with people across institutions and companies, carrying techniques such as polyhedral meshing, sparse direct solvers, adaptive refinement, and contact stabilization into mainstream products.

Methodological breakthroughs

Several breakthroughs changed what engineers could trust and automate. Robust co‑simulation matured along two fronts: partitioned approaches with consistent field mapping and under‑relaxation for stability, and monolithic strategies that assemble coupled Jacobians to solve tightly linked physics. Contact modeling progressed from penalty‑only formulations to augmented Lagrangian and mortar methods, improving convergence and accuracy for frictional interfaces. Material modeling expanded with user subroutines enabling custom plasticity, creep, and viscoelasticity. On the CFD side, polyhedral meshes and improved near‑wall modeling reduced grid counts while preserving accuracy; combustion and multiphase models broadened industrial reach; and adjoint solvers provided sensitivities at scale.

Scaling was equally transformative. MPI‑based parallelism became standard; domain decomposition improved load balancing; and GPU acceleration delivered step‑change throughput for explicit dynamics, lattice‑Boltzmann and pressure‑based CFD, and high‑frequency EM. Design exploration tools integrated DoE, robust optimization, and surrogates: Altair HyperStudy, SIMULIA Isight, and ANSYS optiSLang orchestrated campaigns that connected solvers, parameter spaces, and constraints with convergence monitors and automated reporting. The cumulative effect is a toolchain where design exploration at scale is not a bolt‑on but a supported mode of working, enabling teams to move from single‑point validation to probabilistic, multi‑objective decision‑making.

Conclusion

From specialist post‑process to design driver

Multi‑physics suites have transformed simulation from a specialist’s post‑process into a front‑seat driver of design intent. Associative geometry and persistent metadata let engineers iterate by changing a parameter, not rebuilding a model. Integrated meshing and solver orchestration compress setup time and reduce errors that once hid in file conversions. Optimization—whether adjoint, gradient‑based shape, or topology—turns results into actionable geometry updates in hours rather than weeks. The most significant cultural shift is that teams now expect simulation‑informed decisions at every sketch and feature edit, with fidelity scaled to the question at hand rather than constrained by tool friction. This has raised the baseline for quality and reduced late‑stage surprises, not by adding heroics, but by making robust workflows available to every project, every day.

Seamless loops and traceable decisions

The winners are building seamless loops: parametric CAD feeding meshing and solving; sensitivity and optimization updating CAD; and PLM managing versions, PMI/MBD, and release. Open standards such as FMI enable black‑box models to plug into broader systems; vendor APIs support automation and reproducibility; and MBSE/SysML ensures that requirements, test plans, and analysis results are linked through a verifiable chain. This digital thread is as much about governance as it is about speed—capturing who changed what, when, and why, and demonstrating regulatory compliance with traceable evidence. By aligning data models across CAD, CAE, and PLM, organizations reduce handoff loss, minimize rework, and increase confidence that as‑designed, as‑simulated, and as‑built artifacts tell the same story.

The next horizon

Looking forward, three vectors stand out. First, higher‑fidelity real‑time twins will blend reduced‑order surrogates with periodic high‑resolution refreshes, keeping digital counterparts synchronized with sensor data. Second, pervasive GPU and cloud scaling will normalize petascale‑class capacity for everyday teams, while energy‑aware scheduling becomes a practical concern. Third, differentiable solvers and AI‑assisted meshing/BC setup will shorten preparation time and open new routes to gradient‑informed design spaces. These advances will not diminish the need for verification and validation; rather, they will make V&V more systematic, with uncertainty quantification embedded in standard runs. As multi‑physics continues its march from integrated to invisible—present everywhere, obtrusive nowhere—the field’s trajectory remains clear: tighter CAD–CAE coupling, stronger standards, and smarter automation driving better decisions, earlier in the design process.

Also in Design News

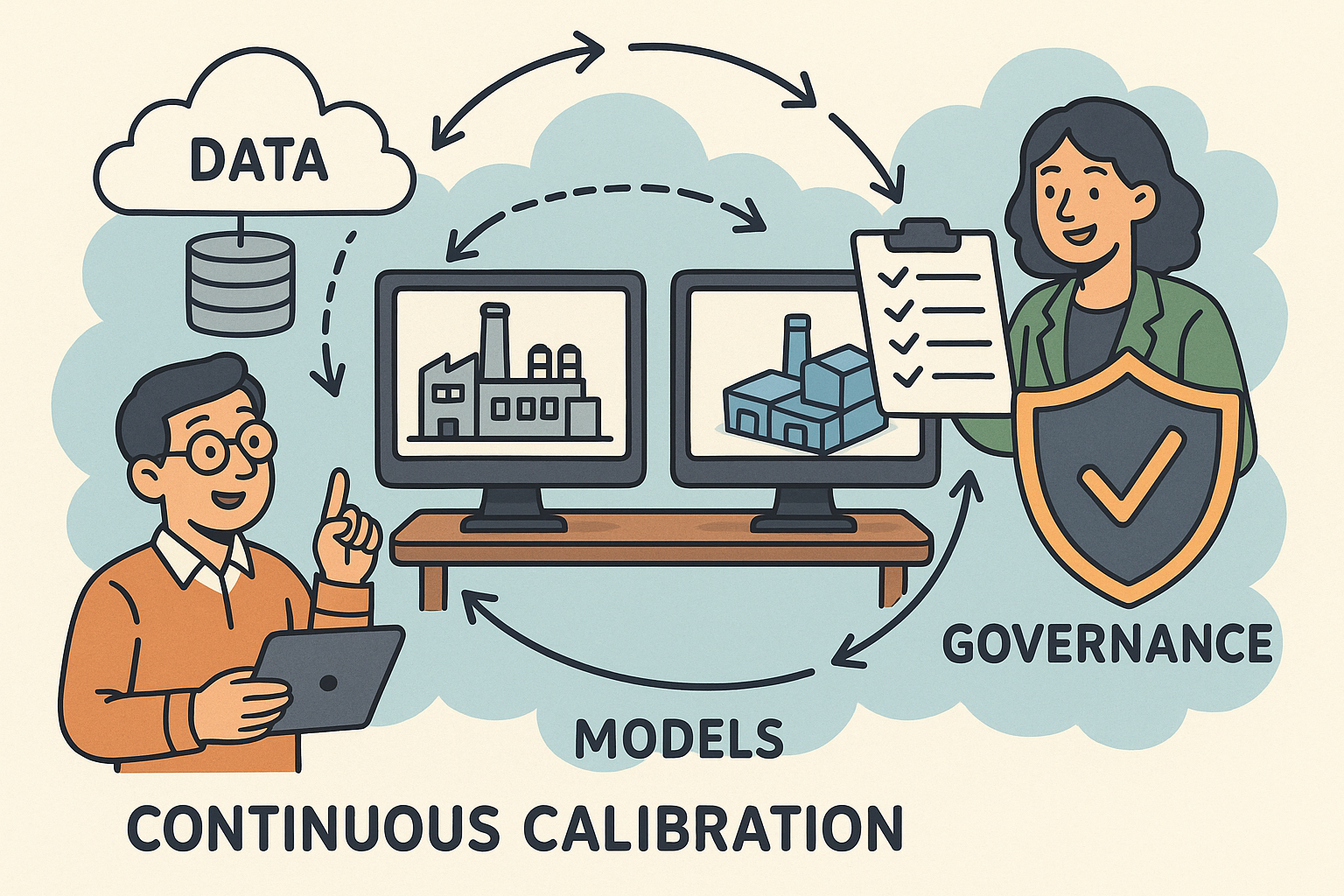

Continuous Calibration of Digital Twins: Data, Models, and Governance

November 06, 2025 11 min read

Read More

ZBrush Tip: Local Transform Pivot Workflows for Precise Gizmo Editing

November 06, 2025 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …