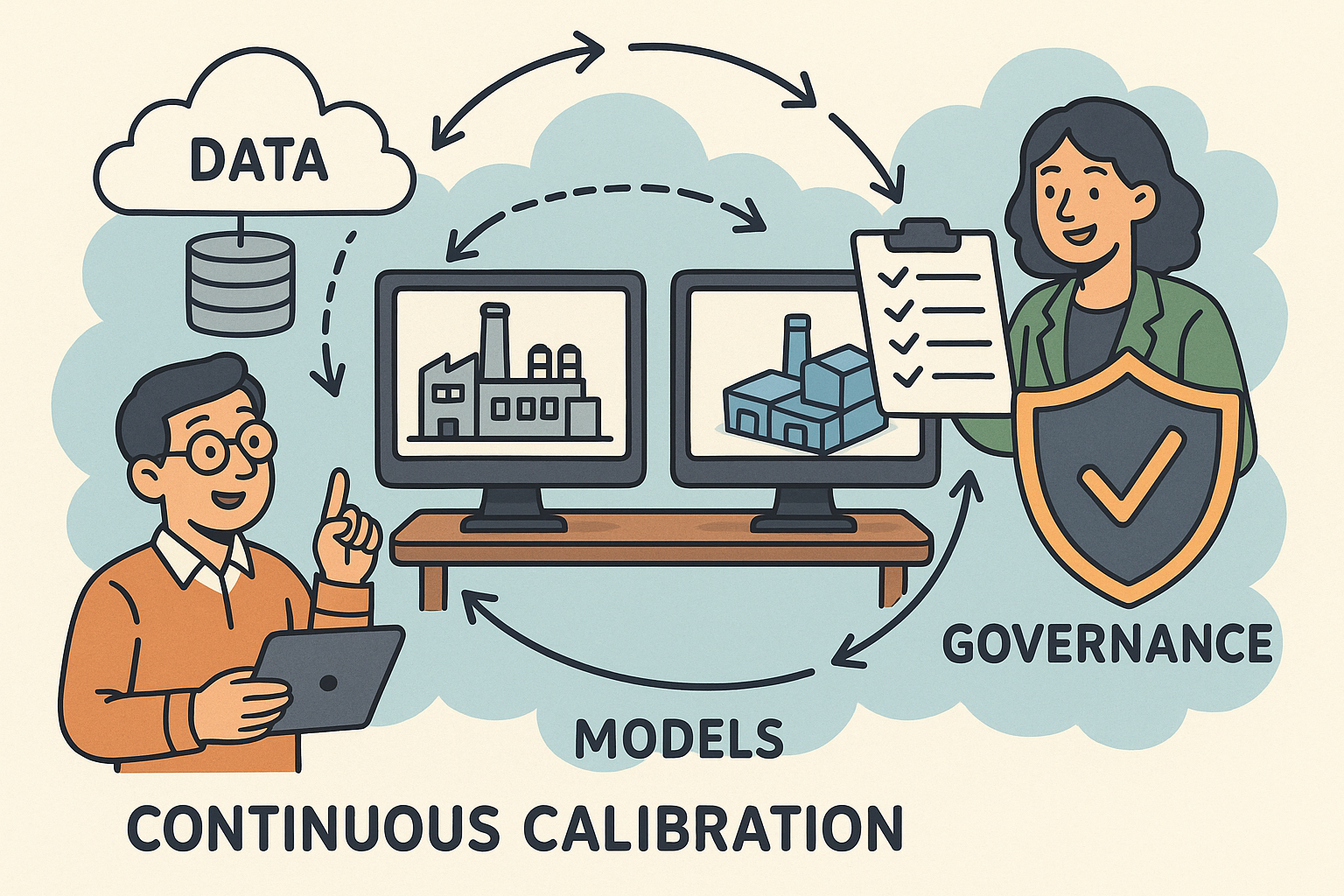

Continuous Calibration of Digital Twins: Data, Models, and Governance

November 06, 2025 11 min read

Definition and scope

What “digital twin” and “continuous calibration” really mean

A modern digital twin is more than a CAD model with a dashboard. It is a live, data-connected representation that spans geometry, physics, controls, and operational state, bound to one or many physical assets through time. The twin carries parametric descriptions of materials, joints, thermal paths, electrochemistry, and control laws; it consumes telemetry and events; and it produces forecasts, sensitivities, and decisions. Continuous calibration is the automated, ongoing alignment of this model’s parameters and uncertainty structure to field data, closing an end-to-end loop from design intent to operational reality. Calibrations may update geometry-related surrogates (e.g., seal leakage factors), physics-specific parameters (e.g., diffusion coefficients), or discrepancy terms that account for structured model bias. It is useful to distinguish related practices to avoid conflation and brittle processes:

- Verification: solving the equations right (numerical correctness, discretization error control).

- Validation: solving the right equations (fitness for purpose against reality at build-time).

- Calibration: inferring unknown parameters and error terms given data and priors.

- Online state estimation: inferring hidden states in real time given a fixed or slowly varying model.

What drives drift between model and reality

Sources of deviation that cumulative updates must tame

Even a well-validated model drifts once it meets the messiness of production. Manufacturing tolerances and assembly variability subtly reshape clearances, stiffness paths, and thermal contact; the “as-built” differs from “as-designed.” Environmental changes—temperature, humidity, dust, corrosion, fouling—shift heat transfer, friction, and sensor response. Load spectra evolve with business cycles and operator behavior, pushing systems into regimes under-sampled in pre-production tests. Wear and degradation alter parameters continuously: seal roughness, electrolyte impedance, bearing preload, and surface finish wander from nominal, while maintenance injects discontinuities (component replacements, firmware flashes). Control logic itself is not static: software updates, retuned gains, or altered interlocks can create dynamics the twin never saw. Calibration must therefore model not only parameter drift but also structured mismatch, sensor biases, and mode switches.

- Manufacturing variability: tolerance stacks, out-of-plane bow, adhesive fillets.

- Environment: ambient profiles, fouling layers, supply pressure fluctuations.

- Degradation: creep, corrosion, SEI growth, lubrication breakdown.

- Operational changes: firmware updates, operator habits, maintenance actions.

High-value use cases

Where continuous calibration pays for itself

Calibration at scale pays off most in systems whose performance degrades, whose control needs precision, and whose operating envelopes vary widely. In EV energy storage, thermal-electrochemical twins calibrated to impedance growth and thermal response unlock safe fast charging while protecting lifetime: parameters like reaction rate constants, thermal contact resistances, and diffusion coefficients are continuously updated, and their uncertainty feeds into the charger's current limits. In rotating machinery (compressors, turbines, pumps), vibration signatures and efficiency curves are tracked as blades foul or bearings wear; calibrated dynamics twins keep false alarms low while detecting precursors to instabilities. In commercial buildings, HVAC twins adjust to occupancy, fouling, and weather shifts, refining zone coupling, coil UA values, and valve characteristics to optimize energy against comfort constraints. In additive manufacturing, melt pool and distortion twins adaptively correct toolpaths by calibrating absorptivity, conductivity, and boundary layer models layer by layer. Across these domains, success comes from:

- Targeting observables with strong sensitivity: impedance spectra, shaft orbits, zone temperatures, melt pool width.

- Exposing parameters that matter for decisions: thermal resistances, damping coefficients, valve Cv curves, absorptivity.

- Using reduced-order models where latency matters and heavy models for batch refinement.

- Feeding calibrated uncertainty into controllers to trade performance and risk in real time.
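As a minimal sketch of how calibrated uncertainty can feed a charger's current limit, the snippet below assumes a deliberately simplified, hypothetical steady-state model, T = T_amb + I² · R_int · R_th, and that posterior samples of the thermal resistance R_th are available from calibration. The names `current_limit`, `r_int`, and `t_max` are illustrative, not part of any charger API. The design choice is that the limit keeps an upper quantile of the predicted temperature, not the posterior mean, below T_max, so a wider posterior automatically yields a more conservative current.

```python
import math
import random

def empirical_quantile(samples, q):
    """Nearest-rank empirical quantile for q in (0, 1]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]

def current_limit(r_th_samples, r_int, t_amb, t_max, q=0.95):
    """Largest current (A) whose q-quantile steady-state temperature stays <= t_max.

    Hypothetical model: T = t_amb + I**2 * r_int * r_th, with r_int in ohm and
    r_th in K/W. T increases with r_th, so the q-quantile of r_th gives the
    q-quantile of T at any fixed current.
    """
    headroom = t_max - t_amb
    if headroom <= 0:
        return 0.0
    r_th_q = empirical_quantile(r_th_samples, q)
    return math.sqrt(headroom / (r_int * r_th_q))

# Two hypothetical posteriors with the same mean but different spread.
random.seed(0)
tight = [random.gauss(2.0, 0.05) for _ in range(5000)]
wide = [random.gauss(2.0, 0.40) for _ in range(5000)]
print(current_limit(tight, r_int=0.002, t_amb=25.0, t_max=45.0))
print(current_limit(wide, r_int=0.002, t_amb=25.0, t_max=45.0))
```

The wider posterior produces the lower limit, which is the intended behavior: less certainty about heat rejection buys less aggressive charging.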

Success metrics and guardrails

Measuring predictive value and enforcing safe updates

Without explicit metrics and guardrails, calibration can devolve into overfitting and brittle decisions. Predictive accuracy is table stakes: monitor MAE/RMSE on key observables and the lead-time over which forecasts remain reliable (e.g., 15-minute thermal headroom, 24-hour energy use). Uncertainty calibration matters equally: assess reliability vs sharpness via Expected Calibration Error (ECE), Probability Integral Transform (PIT) histograms, and coverage of prediction intervals. Operational KPIs connect to business value—energy per unit, scrap rate, unplanned downtime—and alerts measured by precision/recall and time-to-detection. Safety guardrails protect equipment and people: bounded parameter update magnitudes, physics-informed invariants (e.g., positive heat capacity, monotonic impedance growth), and fail-safe reversion to prior parameter sets when anomalies arise. Cost and latency budgets keep the process sustainable: compute per asset, network bandwidth, and update cadence must align with fleet size and SLAs.

- Accuracy: RMSE/MAE on observables; forecast horizon stability.

- Uncertainty: ECE, PIT, interval coverage, CRPS (continuous ranked probability score).

- Operations: energy use, throughput, downtime; alert precision/recall.

- Safety: bounded updates, formal invariants, rollbacks.

- Resources: per-asset compute, storage churn, bandwidth, latency.
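The accuracy and uncertainty metrics above can be computed together when each forecast is summarized as a Gaussian with a mean and standard deviation, which is an assumption of this sketch. It uses the closed-form CRPS of a Gaussian and returns PIT values for a histogram; the function name `gaussian_scores` is hypothetical.

```python
import math
import random

def gaussian_scores(y_true, mu, sigma, z=1.96):
    """Score Gaussian forecasts N(mu_i, sigma_i^2) against observations y_i.

    Returns RMSE, MAE, empirical coverage of the central mu +/- z*sigma interval
    (z=1.96 is about 95%), mean CRPS, and the PIT values F_i(y_i).
    """
    n = len(y_true)
    sq = ab = crps = 0.0
    covered = 0
    pit = []
    for y, m, s in zip(y_true, mu, sigma):
        err = y - m
        sq += err * err
        ab += abs(err)
        covered += int(abs(err) <= z * s)
        u = err / s
        cdf = 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))
        pdf = math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)
        # Closed-form CRPS of a Gaussian: s * [u(2*Phi(u) - 1) + 2*phi(u) - 1/sqrt(pi)]
        crps += s * (u * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))
        pit.append(cdf)
    return {"rmse": math.sqrt(sq / n), "mae": ab / n, "coverage": covered / n,
            "crps": crps / n, "pit": pit}

# Synthetic check: observations drawn from the forecast distribution itself.
random.seed(42)
obs = [random.gauss(0.0, 1.0) for _ in range(2000)]
scores = gaussian_scores(obs, [0.0] * 2000, [1.0] * 2000)
print(round(scores["coverage"], 3), round(scores["crps"], 3))
```

For a well-calibrated forecaster, coverage should sit near the nominal 95% and the PIT histogram should be roughly flat; a U-shaped PIT indicates overconfident (too narrow) intervals, a central hump indicates underconfident ones.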

Data plane

Ingest, structure, and curate data that calibration can trust

Calibration quality is bounded by data quality. Ingestion must bridge harsh edges and scalable cores: use MQTT or OPC UA at the edge for low-latency telemetry, buffer with store-and-forward, and fan-in to Kafka in the cloud for durable, ordered streams. Time alignment is non-negotiable; disciplined clock synchronization (PTP/NTP) and explicit clock-quality flags prevent phantom lags and false dynamics. Semantics give numbers meaning: enumerate units, sensor metadata, coordinate frames, and reference transforms; map to NGSI-LD, DTDL, or AAS to encode relationships across assets. Quality controls filter spikes, de-noise with filters that preserve the signal features the physics depends on (e.g., Savitzky–Golay smoothing, which also yields usable derivatives), and gap-fill cautiously with uncertainty markers. Provenance and confidence scores travel with each feature, preserving auditability and enabling robust estimators to downweight dubious data. An event-sourced backbone supports reproducible calibration windows: materialize windows into a feature store keyed by asset/time/version so you can recompute results deterministically.

- Edge ingest: MQTT/OPC UA, compression, buffering, local time sync.

- Core streaming: Kafka topics per asset class with schema registries.

- Schemas: units, frames, sensor health, calibration constants.

- Data QA: filters, de-noising, gap-filling with uncertainty metadata.

- Reproducibility: event sourcing and versioned feature stores.
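A minimal sketch of the QA step, assuming a single uniformly sampled channel where `None` marks a dropout. A Hampel filter (a median/MAD spike detector, swapped in here as one common choice) flags outliers; flagged or missing samples are then linearly interpolated and tagged with an inflated standard deviation and an `imputed` flag so downstream estimators can downweight them. Function names, window size, and the inflation factor are assumptions.

```python
import statistics

def hampel_flags(values, half_window=3, n_sigmas=3.0, min_scale=1e-9):
    """Flag isolated spikes: |x - local median| > n_sigmas * 1.4826 * local MAD.

    None marks a missing sample; it is never flagged and is ignored in windows.
    """
    flags = []
    for i, v in enumerate(values):
        if v is None:
            flags.append(False)
            continue
        lo, hi = max(0, i - half_window), min(len(values), i + half_window + 1)
        window = [w for w in values[lo:hi] if w is not None]
        med = statistics.median(window)
        # 1.4826 * MAD estimates the standard deviation for Gaussian noise.
        scale = max(1.4826 * statistics.median([abs(w - med) for w in window]), min_scale)
        flags.append(abs(v - med) > n_sigmas * scale)
    return flags

def fill_gaps(values, flags, base_sigma, inflate=3.0):
    """Return (value, sigma, imputed) per sample; bad samples are linearly interpolated.

    Neighbor search is O(n^2), which is fine for short calibration windows.
    """
    valid = [i for i, v in enumerate(values) if v is not None and not flags[i]]
    if not valid:
        raise ValueError("no valid samples to interpolate from")
    out = []
    for i, v in enumerate(values):
        if v is not None and not flags[i]:
            out.append((v, base_sigma, False))
            continue
        left = max((j for j in valid if j < i), default=None)
        right = min((j for j in valid if j > i), default=None)
        if left is None:
            est = values[right]            # leading gap: hold first valid value
        elif right is None:
            est = values[left]             # trailing gap: hold last valid value
        else:
            frac = (i - left) / (right - left)
            est = values[left] + frac * (values[right] - values[left])
        out.append((est, base_sigma * inflate, True))
    return out

raw = [20.0, 20.1, 20.2, 95.0, 20.4, None, 20.6, 20.7]   # one spike, one dropout
cleaned = fill_gaps(raw, hampel_flags(raw), base_sigma=0.05)
print(cleaned)
```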

Model and twin plane

Package physics, expose parameters, and embrace surrogates

Operational twins need a runtime that respects physics and scale. Package models as FMUs (FMI 2.0/3.0) for co-simulation, Modelica components for interpretability, or wrap legacy FE/CFD solvers behind stable APIs. Where latency is tight, deploy GPU-accelerated reduced-order models—POD/DPOD, autoencoders, or PINNs—to keep inner loops fast, reserving high-fidelity runs for batch sensitivity sweeps. Parameter exposure is a product decision: declare typed parameters with physical units, bounds consistent with manufacturing tolerances, and priors seeded from design specs and as-built metrology. Constrain coupled parameters to preserve invariants (e.g., conductance symmetry, passivity). Uncertainty must be first-class: represent parameter posteriors, sensor noise, and model discrepancy; propagate stochastic I/O through the twin to produce distributions, not just point estimates.

- Model packaging: FMU, Modelica, co-sim bridges for FE/CFD.

- Parameter API: typed, ranged, priors from design/manufacturing.

- Surrogates: POD/DPOD, autoencoders, PINNs on GPUs.

- Uncertainty: parameter posteriors, discrepancy terms, stochastic outputs.
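One way to express the parameter API is a typed declaration carrying units, bounds, and a prior. The `Parameter` class below is a hypothetical sketch, assuming Gaussian priors truncated to hard bounds; a real twin would also encode coupled constraints such as conductance symmetry or passivity.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Parameter:
    """Hypothetical typed declaration of a calibratable twin parameter."""
    name: str
    unit: str
    lower: float        # hard bounds, e.g. from manufacturing tolerances
    upper: float
    prior_mean: float   # e.g. from design spec or as-built metrology
    prior_std: float

    def __post_init__(self):
        if not self.lower < self.upper:
            raise ValueError(f"{self.name}: lower bound must be below upper bound")
        if not self.lower <= self.prior_mean <= self.upper:
            raise ValueError(f"{self.name}: prior mean outside bounds")
        if self.prior_std <= 0:
            raise ValueError(f"{self.name}: prior std must be positive")

    def check(self, value: float) -> float:
        """Return value unchanged if finite and within bounds, otherwise raise."""
        if not (math.isfinite(value) and self.lower <= value <= self.upper):
            raise ValueError(f"{self.name}={value} {self.unit} outside [{self.lower}, {self.upper}]")
        return value

    def log_prior(self, value: float) -> float:
        """Unnormalized log-density of a Gaussian prior truncated to the bounds."""
        if not self.lower <= value <= self.upper:
            return -math.inf
        z = (value - self.prior_mean) / self.prior_std
        return -0.5 * z * z

contact_r = Parameter("contact_resistance", "K/W", lower=0.1, upper=2.0, prior_mean=0.5, prior_std=0.2)
print(contact_r.check(0.7), contact_r.log_prior(0.7))
```

Returning `-inf` outside the bounds lets any sampler or optimizer that consumes `log_prior` reject physically inadmissible values without special-casing them.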

Orchestration and governance

From twin registry to rollouts: make calibration a managed service

Scale demands orchestration. A twin registry tracks lineage from asset IDs to model versions and geometry (e.g., STEP AP242), tying semantic BOM items to parameter groups; it anchors who runs what, where, and why. A scheduler coordinates per-asset cadences and event-triggered updates: drift alarms, firmware changes, or maintenance work orders kick off recalibration jobs. Fleet strategies matter: cohort assets by environment or usage to share statistical strength; use canary and shadow-twin rollouts to de-risk updates; compare A/B twin variants to quantify uplift in accuracy or KPI impact. Version everything—models (semantic versions), parameter sets, datasets—and keep immutable audit trails to reproduce any decision. Provide stable interfaces: REST/gRPC for a calibration service that accepts datasets, returns posteriors, and publishes lineage to the digital thread back to PLM/CM systems.

- Registry: asset-to-model lineage, STEP links, semantic BOM.

- Scheduler: cadence plus event-driven triggers.

- Fleet strategies: cohorts, canaries, shadow twins, A/B twins.

- Versioning: models, parameters, datasets; immutable audits.

- Interfaces: REST/gRPC and digital thread integration.
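To make the versioning and audit requirements concrete, here is a sketch of a hash-chained, append-only lineage log, assuming one log per asset and JSON-serializable parameter sets. `CalibrationRecord` and `AuditLog` are hypothetical names; a production registry would add signatures and anchor the latest digest externally, since tampering with the newest record is only detectable against a digest stored elsewhere.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class CalibrationRecord:
    """Hypothetical lineage entry: which model, data window, and parent produced a parameter set."""
    asset_id: str
    model_version: str       # semantic version of the twin model
    dataset_id: str          # ID of the materialized calibration window
    parameters: dict         # calibrated values or posterior summaries
    parent_digest: str = ""  # digest of the previous record ("" for the first)

    def digest(self) -> str:
        # Canonical JSON (sorted keys, no whitespace) so equal content hashes equally.
        payload = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained log; editing a stored record breaks verification."""

    def __init__(self):
        self._records = []

    def head(self) -> str:
        return self._records[-1].digest() if self._records else ""

    def append(self, record: CalibrationRecord) -> str:
        if record.parent_digest != self.head():
            raise ValueError("record does not extend the current head of the log")
        self._records.append(record)
        return record.digest()

    def verify(self) -> bool:
        parent = ""
        for record in self._records:
            if record.parent_digest != parent:
                return False
            parent = record.digest()
        return True

log = AuditLog()
first = CalibrationRecord("pump-017", "2.3.1", "win-0001", {"damping": 0.031})
h1 = log.append(first)
second = CalibrationRecord("pump-017", "2.3.1", "win-0002", {"damping": 0.034}, parent_digest=h1)
log.append(second)
print(log.verify())
```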

Deployment topologies

Edge for reflexes, cloud/HPC for depth, cost-aware everywhere

The calibration workload bifurcates cleanly. At the edge, run low-latency state estimation and guardrails: lightweight filters (EKF/UKF), anomaly screens, and ROMs that shape immediate control decisions and protect equipment. In the cloud or HPC, run heavy lifts: batch Bayesian calibration across calibration windows, global fleet models, and FE/CFD sensitivity analyses that refresh priors or update surrogates. Cost-aware placement keeps budgets sane: exploit spot/HPC queues for batch jobs; apply mixed precision and adaptive discretization to squeeze performance; prune features and sensors that add cost without predictive value. Network limits also shape topology: pre-aggregate at the edge, compress efficiently, and stream posterior deltas rather than raw traces when possible.

- Edge: low-latency filters and ROMs; safety interlocks; local failover.

- Cloud/HPC: Bayesian calibration, global modeling, sensitivity sweeps.

- Cost/latency: spot queues, mixed precision, adaptive meshes, bandwidth-aware data movement.
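Streaming posterior deltas instead of raw traces can be as simple as a deadband on posterior summaries. This sketch assumes each parameter is summarized by a (mean, std) pair and that the edge remembers the last values it uploaded; `posterior_deltas` and its tolerances are illustrative.

```python
def posterior_deltas(last_sent, current, mean_tol=0.1, std_rel_tol=0.05):
    """Return only the (mean, std) entries that changed materially since the last upload.

    A parameter is included when it is new, when its mean moved by more than
    mean_tol previously sent standard deviations, or when its std changed by
    more than std_rel_tol relative to the previously sent std.
    """
    delta = {}
    for name, (mean, std) in current.items():
        if name not in last_sent:
            delta[name] = (mean, std)
            continue
        old_mean, old_std = last_sent[name]
        moved = abs(mean - old_mean) > mean_tol * old_std
        reshaped = abs(std - old_std) > std_rel_tol * old_std
        if moved or reshaped:
            delta[name] = (mean, std)
    return delta

sent = {"ua_coil": (850.0, 20.0), "valve_cv": (4.2, 0.1)}
now = {"ua_coil": (851.0, 20.1), "valve_cv": (4.5, 0.1), "fouling": (0.02, 0.005)}
print(posterior_deltas(sent, now))
```

Scaling the mean threshold by the posterior standard deviation means a parameter that is already uncertain must move further before it costs bandwidth.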

State and parameter estimation

From deterministic gradients to full Bayesian posteriors

Calibration methods should match physics, data, and latency. For smooth problems with good observability, gradient/adjoint-based Gauss-Newton or L-BFGS solve deterministic least-squares problems efficiently; adjoints keep gradients cheap even in large PDE models because their cost barely grows with the number of parameters. Nonlinear dynamics and online operation favor the Kalman family: EKF/UKF capture moderate nonlinearity for low-dimensional states; EnKF scales to high-dimensional fields, updating ensembles instead of full covariances. When uncertainty matters for downstream control, move to Bayesian inference: Variational Inference (VI) for speed and streaming updates; Sequential Monte Carlo (SMC) for multi-modal posteriors; HMC/NUTS for accuracy on smaller, stiff problems. Across fleets, hierarchical Bayes shares strength by placing hyperpriors over asset-level parameters, yielding calibrated asset-specific posteriors and principled default priors for new assets. Constraints are not optional: encode physics-consistent bounds, manufacturing tolerances, and regularization (e.g., Tikhonov, sparsity) to prevent overfitting.

- Gradients: Gauss-Newton, L-BFGS with adjoints for PDEs.

- Filters: EKF/UKF for nonlinear, EnKF for high-dimensional states.

- Bayes: VI (fast), SMC (multi-modal), HMC/NUTS (accurate).

- Hierarchical Bayes: global hyperpriors with asset posteriors.

- Constraints: physics bounds, tolerances, structured regularization.
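As a small instance of deterministic least-squares calibration, the sketch below fits a hypothetical first-order thermal step response, y(t) = T_inf + (T0 - T_inf) * exp(-t / tau), for the unknowns T_inf and tau with Gauss-Newton. Assumptions: T0 is known, the Jacobian is analytic (in a large PDE model it would come from adjoint or tangent sensitivities), and bounds are enforced by clipping plus step halving, a pragmatic heuristic rather than a rigorous constrained solver. It requires NumPy.

```python
import numpy as np

def model(theta, t, t0):
    """First-order step response: y = t_inf + (t0 - t_inf) * exp(-t / tau)."""
    t_inf, tau = theta
    return t_inf + (t0 - t_inf) * np.exp(-t / tau)

def jacobian(theta, t, t0):
    """Analytic sensitivities dy/dt_inf and dy/dtau, one column per parameter."""
    t_inf, tau = theta
    e = np.exp(-t / tau)
    return np.column_stack([1.0 - e, (t0 - t_inf) * e * t / tau**2])

def gauss_newton(y, t, t0, theta0, lower, upper, iters=100, tol=1e-10):
    """Bounded Gauss-Newton with step halving; bounds are enforced by clipping."""
    theta = np.clip(np.asarray(theta0, dtype=float), lower, upper)
    for _ in range(iters):
        r = y - model(theta, t, t0)                    # residuals at current guess
        cost = r @ r
        J = jacobian(theta, t, t0)
        step = np.linalg.lstsq(J, r, rcond=None)[0]    # solves min ||J s - r||
        alpha = 1.0
        while alpha > 1e-8:                            # backtrack until cost drops
            cand = np.clip(theta + alpha * step, lower, upper)
            r_c = y - model(cand, t, t0)
            if r_c @ r_c < cost:
                break
            alpha *= 0.5
        else:
            break                                      # no descent possible: stop
        converged = np.max(np.abs(cand - theta)) < tol
        theta = cand
        if converged:
            break
    return theta

rng = np.random.default_rng(1)
t = np.linspace(0.0, 30.0, 61)                         # hypothetical time grid in s
y = model((60.0, 5.0), t, 20.0) + rng.normal(0.0, 0.05, t.size)
theta = gauss_newton(y, t, 20.0, theta0=(55.0, 6.0), lower=[0.0, 0.1], upper=[200.0, 100.0])
print(theta)
```

The starting guess plays the role of the design-spec prior; clipping tau away from zero keeps every evaluated model physically meaningful.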

Discrepancy modeling and hybridization

Admitting model bias and learning it safely

Even with perfect parameters, models carry discrepancy—missing physics, unmodeled couplings, or scale gaps. Treating discrepancy explicitly unlocks robust forecasts. Gaussian process (GP) discrepancy terms, with input-aware kernels over operating point, environment, and time, capture smooth biases and deliver uncertainty with principled calibration tools (e.g., PIT, coverage). Gray-box hybrids augment governing equations with residual neural networks or PINNs that respect conservation laws while learning closure terms. Multi-fidelity fusion ties it together: trust-region blending lets a high-fidelity CAE model anchor behavior near design envelopes, while surrogates fill the broad operational space; discrepancies weight the blend. Safety requires guardrails: constrain learned residuals to preserve stability, monotonicity, or passivity; regularize toward zero to prevent over-attribution; and quarantine discrepancy to submodels where physics is known to be weak.

- GP discrepancy: input-aware kernels with calibrated uncertainty.

- Gray-box: residual nets/PINNs honoring physics invariants.

- Multi-fidelity: trust-region blending of CAE and surrogates.

- Safety: stability and passivity constraints, conservative priors.
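A GP discrepancy term can be sketched in a few lines of NumPy. This assumes a single scalar input (for example, a normalized operating point), an RBF kernel with fixed rather than learned hyperparameters, and residuals defined as observation minus model output; `gp_discrepancy` is a hypothetical helper. The zero-mean prior pulls the learned discrepancy back toward zero away from data, one simple way to regularize toward zero, and the posterior variance reverts to the prior variance there.

```python
import numpy as np

def rbf(a, b, length_scale, variance):
    """Squared-exponential kernel between 1-D input arrays a and b."""
    d2 = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * d2 / length_scale**2)

def gp_discrepancy(x_train, resid, x_test, length_scale=1.0, variance=1.0, noise=1e-2):
    """Posterior mean and variance of a zero-mean GP discrepancy delta(x).

    resid = observation - model prediction at x_train; noise is the
    observation noise variance.
    """
    K = rbf(x_train, x_train, length_scale, variance) + noise * np.eye(len(x_train))
    L = np.linalg.cholesky(K)                                # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, resid))  # K^{-1} resid
    K_s = rbf(x_test, x_train, length_scale, variance)
    mean = K_s @ alpha
    v = np.linalg.solve(L, K_s.T)
    var = variance - np.sum(v**2, axis=0)                    # prior var minus explained part
    return mean, np.maximum(var, 0.0)

# Hypothetical residuals of a physics model versus a normalized operating point.
x_obs = np.linspace(0.0, 5.0, 11)
resid = 0.3 * np.sin(x_obs)
mean, var = gp_discrepancy(x_obs, resid, np.array([2.5, 50.0]), noise=1e-4)
print(mean, var)
```

A corrected forecast is then the physics prediction plus the discrepancy mean, with the discrepancy variance added to the model's own predictive variance if the two are assumed independent.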

Drift detection and triggering

When to recalibrate, and when to refuse

Calibrating at every tick wastes compute and invites overfitting; waiting too long erodes value. Change-point tests such as CUSUM and Page-Hinkley flag distribution shifts in residuals; population drift metrics compare current feature distributions to baselines; posterior predictive checks monitor consistency between forecasts and observations. Sensor health models are equally important: robust statistics, redundancy voting, and unsupervised detectors (e.g., isolation forests) distinguish asset drift from sensor faults, preventing “calibrating to a broken sensor.” Trigger policies mix thresholds and Bayesian decision rules: elevate recalibration when the expected utility exceeds compute and risk costs, or when policy-critical uncertainty widens beyond limits. Introduce hysteresis and cooldown timers to avoid oscillation. Where updates are risky, use shadow twins to confirm benefit before promotion, and enforce hard safety gates that revert to known-good parameter sets if anomalies spike after an update.

- Statistical triggers: CUSUM, Page-Hinkley, KL or Wasserstein drift.

- Predictive checks: posterior predictive p-values, residual runs.

- Sensor health: robust stats, redundancy, isolation forests.

- Promotion controls: shadow twins, canaries, rollbacks with hysteresis.
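A two-sided CUSUM with a cooldown illustrates a statistical trigger combined with hysteresis. The sketch assumes residuals are already standardized (zero mean, unit variance when there is no drift); the allowance `k`, threshold `h`, and `cooldown` values are illustrative defaults, not recommendations, and `cusum_triggers` is a hypothetical name.

```python
def cusum_triggers(residuals, target=0.0, k=0.5, h=5.0, cooldown=20):
    """Two-sided CUSUM on standardized residuals with a cooldown after each alarm.

    k: allowance (about half the shift to detect, in sigma units);
    h: decision threshold. Returns the indices where recalibration would trigger.
    """
    s_hi = s_lo = 0.0
    quiet_until = -1
    alarms = []
    for i, r in enumerate(residuals):
        x = r - target
        s_hi = max(0.0, s_hi + x - k)      # accumulates evidence of an upward shift
        s_lo = max(0.0, s_lo - x - k)      # accumulates evidence of a downward shift
        if i <= quiet_until:
            continue                       # cooldown: keep accumulating, do not fire
        if s_hi > h or s_lo > h:
            alarms.append(i)
            s_hi = s_lo = 0.0              # restart statistics after an alarm
            quiet_until = i + cooldown
    return alarms

residuals = [0.0] * 50 + [2.0] * 30        # hypothetical 2-sigma shift at sample 50
print(cusum_triggers(residuals))
```

A persistent shift fires again once the cooldown expires, which is the desired signal that a recalibration did not absorb the drift.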

Identifiability and experiment design

Only calibrate what data can see—and excite it if needed

Not all parameters are inferable from ambient operation. Sensitivity and adjoint analyses rank parameters by influence on observables; Fisher Information reveals identifiability and guides where adding sensors or excitations would pay off. Before turning dials, test for collinearity and sloppy directions to avoid ill-posed updates. When possible, design active experiments under safety constraints: inject optimal transients, step tests, or PRBS signals that maximize information without violating thermal or mechanical limits; coordinate with production so planned perturbations align with operations. Sensor placement matters: modest additions (e.g., a differential temperature across a critical interface) can break degeneracies cheaply. For fleets, hierarchical modeling plus active design allows a few well-instrumented assets to lift priors that generalize to lightly instrumented ones. Capture all these decisions in the registry with rationale, so future engineers can trace why a parameter became identifiable and under which conditions.

- Diagnose identifiability: sensitivities, adjoints, Fisher Information.

- Design excitations: step/PRBS under guardrails, scheduled tests.

- Sensor strategy: place to break degeneracies at low cost.

- Leverage fleets: learn on a few, generalize with hierarchical priors.
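For a model with Gaussian measurement noise of standard deviation sigma, the Fisher Information is F = Jᵀ J / sigma², where J holds the sensitivities of the observables to the parameters. Near-zero eigenvalues of F mark sloppy or unidentifiable directions, and when F is invertible, F⁻¹ is the Cramér-Rao lower bound on the parameter covariance. The sketch requires NumPy; the eigenvalue-ratio threshold and both toy models are illustrative assumptions.

```python
import numpy as np

def identifiability_report(jac, sigma, rel_tol=1e-8):
    """Eigen-analysis of the Fisher Information F = J^T J / sigma^2.

    jac: (n_observations, n_parameters) sensitivities; sigma: noise std.
    A tiny smallest/largest eigenvalue ratio marks an unidentifiable direction.
    """
    F = jac.T @ jac / sigma**2
    eigvals, eigvecs = np.linalg.eigh(F)            # eigenvalues in ascending order
    return {
        "eigvals": eigvals,
        "sloppiest_direction": eigvecs[:, 0],
        "identifiable": eigvals[0] / eigvals[-1] > rel_tol,
    }

x = np.linspace(0.0, 1.0, 20)
# Hypothetical model y = a * b * x at a=2, b=3: dy/da = b*x, dy/db = a*x (collinear).
product_model = identifiability_report(np.column_stack([3.0 * x, 2.0 * x]), sigma=0.1)
# Hypothetical model y = a * x + b * x**2: sensitivities x and x**2 are independent.
poly_model = identifiability_report(np.column_stack([x, x**2]), sigma=0.1)
print(product_model["identifiable"], product_model["sloppiest_direction"])
print(poly_model["identifiable"])
```

In the product model the sloppy direction is proportional to (a, -b) = (2, -3): moving along it leaves a·b, and therefore the data, unchanged. An independent measurement of either a or b adds a row to J that breaks the degeneracy, which is the sensor-placement argument in miniature.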

Robust operations

Handle messy data, validate relentlessly, and connect to decisions

Production data is imperfect. Build pipelines that detect and handle missing values, unit or coordinate mismatches, and clock skews; enforce schemas at ingestion; apply dimensionally aware checks so a unit slip cannot pass silently. Prevent leakage: keep calibration windows strictly separated from evaluation windows; use rolling-origin evaluation to mimic real deployment; run backtests against withheld episodes that include rare events. Validate uncertainty with coverage tests and PIT histograms, and track calibration drift over time, not just point accuracy. Most critically, feed calibrated distributions into decision layers: controllers that trade performance for risk by tightening limits when uncertainty widens; maintenance policies that combine posterior hazard rates with cost models; alerting systems whose thresholds adapt to confidence to keep alert precision/recall high. Document all assumptions, priors, and constraints in lineage; surface interpretable summaries so operations and safety teams can trust and challenge the twin.

- Data hygiene: unit/coordinate checks, time sync, schema enforcement.

- Evaluation: rolling-origin, backtests, uncertainty coverage.

- Decision integration: uncertainty-aware control, maintenance, alerts.

- Lineage: assumptions, priors, constraints, audit trails.
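Rolling-origin evaluation can be sketched as an index generator that keeps each evaluation window strictly after its calibration window. The optional `gap` leaves a buffer between the last calibration sample and the first evaluation sample so that autocorrelated data near the boundary cannot leak; the function name and parameters are hypothetical.

```python
def rolling_origin_splits(n, initial, horizon, step, gap=0):
    """Yield (calibration_indices, evaluation_indices) for expanding-window backtests.

    Calibration uses samples [0, origin); evaluation uses
    [origin + gap, origin + gap + horizon). The origin then advances by `step`.
    """
    if initial < 1 or horizon < 1 or step < 1 or gap < 0:
        raise ValueError("initial, horizon, step must be >= 1 and gap >= 0")
    origin = initial
    while origin + gap + horizon <= n:
        yield range(0, origin), range(origin + gap, origin + gap + horizon)
        origin += step

for calib, evaluate in rolling_origin_splits(12, initial=6, horizon=2, step=2, gap=1):
    print(f"calibrate on {calib.start}..{calib.stop - 1}, evaluate on {evaluate.start}..{evaluate.stop - 1}")
```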

Conclusion

Closing the loop from design intent to operational impact

Continuous calibration closes the loop between the models we design and the realities we operate, elevating predictions, trust, and outcomes across industries. The pattern is repeatable: instrument data carefully, structure models for exposure and uncertainty, orchestrate updates with governance, and select algorithms that match physics and constraints. Then let feedback compound value—each update informs control, which shapes operation, which generates better data, which sharpens the twin. Pragmatically, start narrow and safe, expand with evidence, and keep humans-in-the-loop where consequences are high. The following concrete steps and guardrails help teams move from concept to durable operations:


A pragmatic rollout

- Begin with a single KPI and a ROM-backed twin; instrument data quality from day one.

- Establish a twin registry with versioned models, parameters, and datasets.

- Introduce drift detection first, then add periodic Bayesian updates; scale to fleets with hierarchical modeling.

- Operationalize guardrails: constrained updates, canaries, and automatic rollback to known-good states.
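The guardrails in the last rollout step (constrained updates and rollback to known-good states) can be sketched as a small parameter holder. This assumes scalar parameters, a per-parameter limit on relative change per update, and invariants expressed as predicates; `GuardedParameters` and its methods are hypothetical, and canary evaluation is left to the caller, which decides when to `confirm` or `rollback`.

```python
class GuardedParameters:
    """Hypothetical holder of active vs. known-good parameters with bounded updates."""

    def __init__(self, initial, max_rel_step, invariants):
        self.known_good = dict(initial)
        self.active = dict(initial)
        self.max_rel_step = max_rel_step    # {name: max fractional change per update}
        self.invariants = invariants        # callables: params dict -> bool

    def propose(self, proposed):
        """Clamp each step, check invariants, and activate the candidate if admissible."""
        candidate = {}
        for name, old in self.active.items():
            limit = self.max_rel_step.get(name, 0.0) * abs(old)  # unlisted params stay frozen
            step = proposed.get(name, old) - old
            candidate[name] = old + max(-limit, min(limit, step))
        if not all(check(candidate) for check in self.invariants):
            return False                    # reject: active set is left untouched
        self.active = candidate
        return True

    def confirm(self):
        """Promote the active set after it has proven itself (e.g. a clean canary period)."""
        self.known_good = dict(self.active)

    def rollback(self):
        """Revert to the last known-good set, e.g. when anomalies spike after an update."""
        self.active = dict(self.known_good)

guard = GuardedParameters(
    {"heat_capacity": 900.0, "r_th": 1.5},
    max_rel_step={"heat_capacity": 0.05, "r_th": 0.10},
    invariants=[lambda p: p["heat_capacity"] > 0 and p["r_th"] > 0],
)
guard.propose({"heat_capacity": 1200.0, "r_th": 1.6})
print(guard.active)
```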


Common pitfalls

- Unidentifiable parameters and overfitting to noise when data is uninformative.

- Lack of unit and semantic discipline that corrupts features and residuals.

- Ignoring uncertainty, leading to overconfident control and brittle alerts.

- Pushing updates without A/B validation or shadow twins, causing regressions.


Action checklist

- Define observables, priors, and constraints aligned to decisions.

- Implement a calibration service with lineage and auditability.

- Deploy GPU-backed ROMs to meet latency while preserving physics.

- Monitor accuracy and uncertainty calibration continuously.

- Couple updates to controllers and maintenance policies with safety gates.

Also in Design News

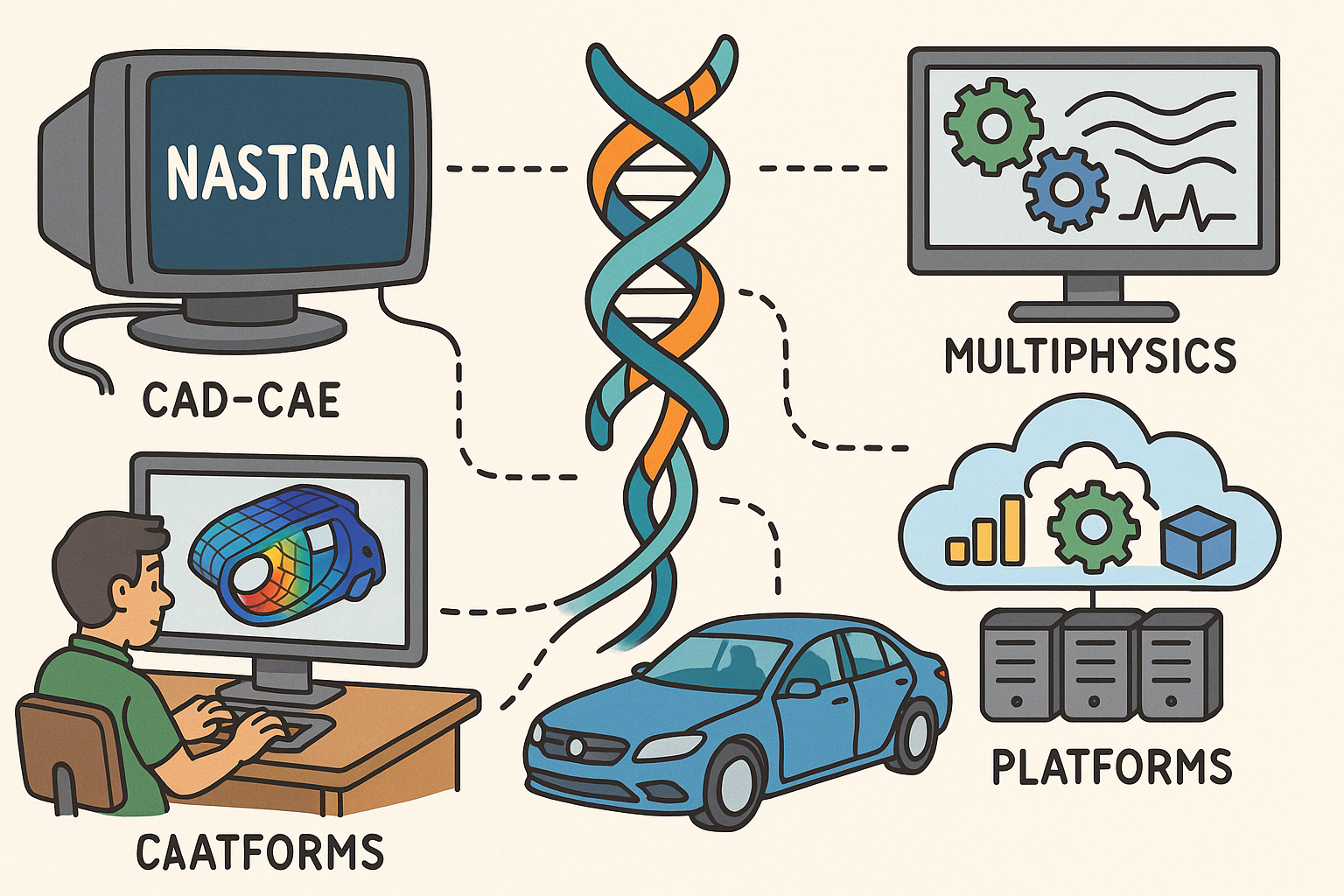

Design Software History: From NASTRAN to Multiphysics: CAD–CAE Coupling, Platforms, and the Digital Thread

November 06, 2025 11 min read

Read More

ZBrush Tip: Local Transform Pivot Workflows for Precise Gizmo Editing

November 06, 2025 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …