Design Software History: From DMU to Closed-Loop Digital Twins: History, Architectures, and Standards

November 08, 2025 11 min read

Introduction

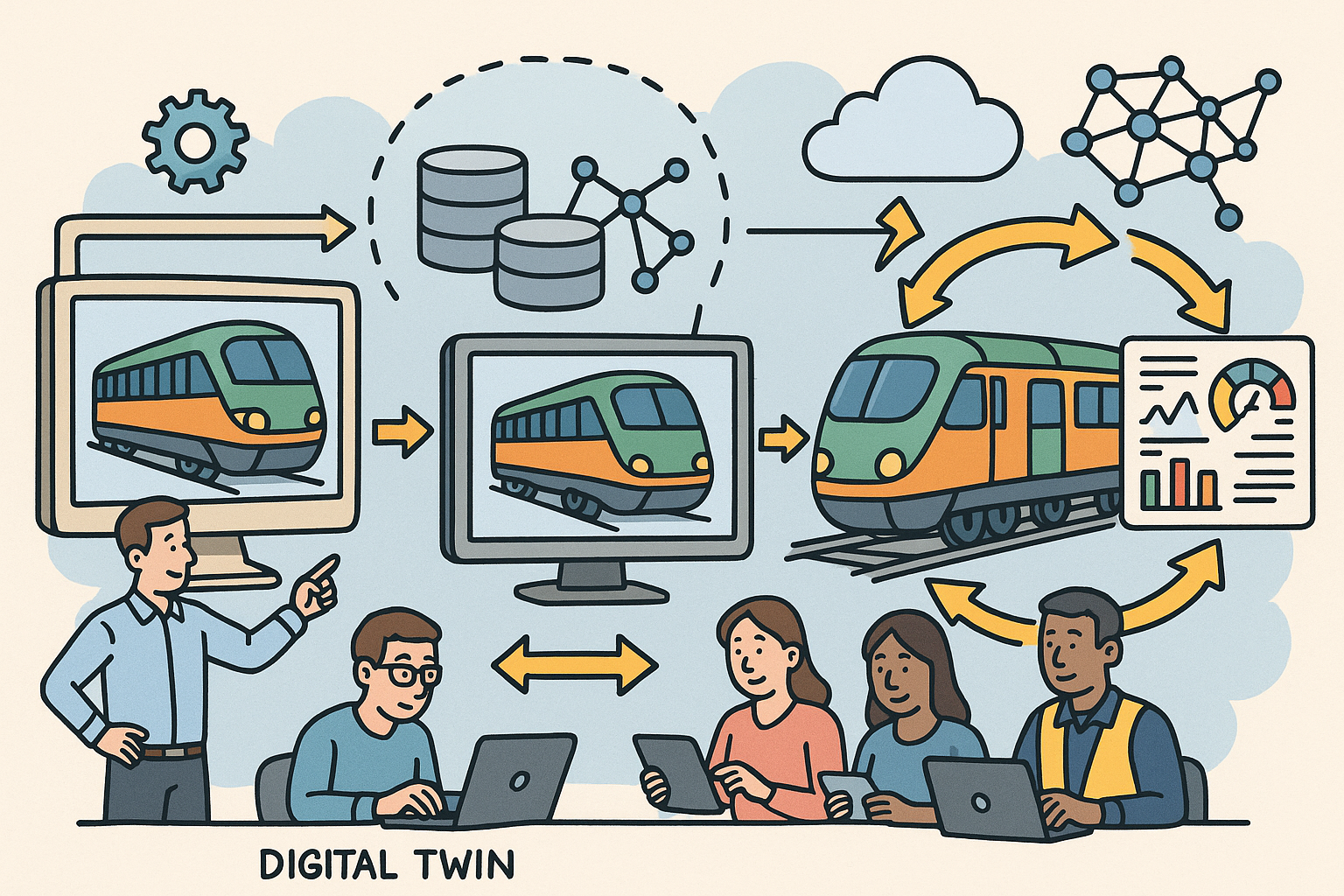

Why digital twins matter now

The term digital twin sits at the crossroads of engineering rigor and software agility, transforming how industries design, certify, operate, and continuously improve products and infrastructure. What once lived as disconnected files across CAD, simulation, and enterprise systems is being converged into synchronized, trustworthy virtual counterparts that reflect and anticipate the state of their physical peers. This convergence is not hype; it is the compounded result of decades of progress across CAD/PLM/MBSE/CAE, now supercharged by IoT telemetry, cloud-scale data platforms, and immersive visualization. The goal is deceptively simple: synchronize authoritative geometry and semantics with behaviorally faithful models, connect them to live data, and close the loop back to decisions in design, manufacturing, and operations. Achieving this, however, requires disciplined data lineage, robust interoperability, and a culture that treats models as operational assets. In the following sections, we trace the roots from the 1990s digital mock-up (DMU) wave to today’s closed-loop twins, unpack the enabling technologies and standards, survey the vendor landscape across product and AEC domains, and distill implementation patterns that separate promising prototypes from durable programs. The throughline is clear: twins are not a new category so much as a unification of methods that engineers have long trusted—made continuous, observable, and operationally decisive.

Historical roots and definitions: from DMU to closed-loop twins

Conceptual precursors

The intellectual scaffolding for digital twins was erected well before the phrase entered boardrooms. In the 1990s, aerospace, automotive, and shipbuilding leaders built digital mock-ups (DMU) as geometrically complete, clash-checked product representations. Boeing’s 777 program institutionalized CATIA-based DMU from Dassault Systèmes, bypassing a full physical mock-up and demonstrating that virtual integration could de-risk assembly and service. European automakers, including Renault and PSA, followed suit with CATIA-driven DMU, while large yards in shipbuilding turned to parametric hull modeling and specialized piping tools integrated with product structure. DMU made geometry authoritative but remained largely “not live”: it did not breathe with telemetry. In the 2000s, PLM and the digital thread matured under platforms such as Dassault Systèmes’ ENOVIA (later folded into the 3DEXPERIENCE platform), Teamcenter (UGS, later Siemens), and PTC Windchill, bringing configuration-managed lineage across CAD/CAE/CAM and aligning engineering and manufacturing bills of materials (BOM/MBOM). In parallel, model-based systems engineering (MBSE) with SysML, and multiphysics CAE (NASTRAN, ANSYS, ABAQUS) plus equation-based modeling (Modelica/Dymola), made behavior predictive and testable. Conceptually, David Gelernter’s “Mirror Worlds” (1991) imagined dynamic digital proxies of real systems, while cyber-physical systems research anchored feedback, sensing, and control. By the late 2000s, the pieces existed: authoritative geometry, governed lineage, and validated physics. What remained was wiring these together with real-time data and analytics to build a continuously synchronized, decision-ready twin.

- DMU: geometrically complete, clash-aware, primarily offline.

- PLM/digital thread: configuration management and traceability across lifecycle artifacts.

- MBSE/multiphysics: executable behavior models with V&V discipline.

- Conceptual inspirations: Mirror Worlds, cyber-physical systems, and control theory.

Coining and popularization

The phrase digital twin is most often attributed to Michael Grieves, who articulated the underlying idea in a PLM context in the early 2000s to denote a virtual representation linked to a physical product throughout its life. Grieves himself has credited NASA’s John Vickers with the name. The concept gained engineering gravitas when NASA’s 2012 technology roadmap formalized digital twins for aerospace missions as high-fidelity, continuously updated models used to predict behavior under uncertainty. Mid-2010s industrial momentum followed as GE Digital, with Bill Ruh’s evangelism, promoted asset-performance twins in the Predix era for turbines and jet engines, blending physics-based analytics with fleet telemetry. These popularizations refined the idea from a static model to a living system with feedback. The vocabulary matured as vendors positioned twins along the lifecycle, from design and manufacturing to operations and service. Importantly, the conversation shifted from standalone analytics to closed-loop twins that can trigger prescriptive actions (maintenance work orders, control setpoint tweaks, and, critically, design updates) by maintaining chain-of-custody back to validated models and requirements.

- Grieves: twin as PLM-linked virtual-physical pair.

- NASA: twin as uncertainty-aware, mission-critical predictor.

- GE Digital: twin as asset performance driver in operations.

- Shift: from visualization to continuous, prescriptive feedback loops.

Working taxonomy

Clarity begins with a taxonomy. A digital model is an offline artifact with no automatic synchronization to reality. A digital shadow ingests one-way telemetry and reflects state, but does not influence the physical system. A true digital twin is bi-directional: it closes the loop with interventions (automated or human-mediated) that affect the physical asset, and its models are updated as new evidence arrives. Siemens usefully frames twins across the lifecycle with a product–production–performance triad: the product twin embodies design intent and physics; the production twin captures manufacturing assets, process plans, and factory flows; the performance twin watches in-field behavior. Maturity spans four levels: descriptive (what is happening), diagnostic (why it happened), predictive (what will happen), and prescriptive (what we should do). The prescriptive stage depends on traceable fidelity, meaning that real-time decisions can be linked to verified models, and on governance that prevents divergence. In practice, organizations often progress from a shadow to a closed-loop twin as they harden data pipelines, shrink model runtimes via reduced-order models (ROM), and institutionalize verification and validation. The result is not a monolith, but a federation of synchronized models, semantics, and services anchored to unique asset identities and backed by lifecycle provenance.

- Model vs. shadow vs. twin: synchronization and control authority define the boundary.

- Siemens triad: product–production–performance twins interlock.

- Maturity: descriptive → diagnostic → predictive → prescriptive.

- Prerequisites: ROMs, robust telemetry, and end-to-end configuration governance.
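The model/shadow/twin boundary above can be expressed as a small decision rule. The sketch below is illustrative only: the `Link` fields and the `classify` function are hypothetical names introduced here, and real programs would assess synchronization and control authority with far more nuance.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    MODEL = "digital model"
    SHADOW = "digital shadow"
    TWIN = "digital twin"


@dataclass(frozen=True)
class Link:
    """How a virtual representation is connected to its physical asset."""
    ingests_telemetry: bool     # automated physical -> virtual data flow
    drives_interventions: bool  # virtual -> physical actions, automated or human-mediated


def classify(link: Link) -> Kind:
    # A twin needs both directions of flow.
    if link.ingests_telemetry and link.drives_interventions:
        return Kind.TWIN
    # One-way telemetry reflects state without influencing the asset.
    if link.ingests_telemetry:
        return Kind.SHADOW
    # Without automatic synchronization it is an offline model, even if
    # someone acts on its results (an assumption of this sketch).
    return Kind.MODEL


print(classify(Link(ingests_telemetry=True, drives_interventions=False)).value)
```

Treating "acts on the asset but receives no telemetry" as a plain model is a simplifying choice made for this sketch, not a rule taken from any standard.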

Core technologies and architectures that make twins possible

Geometry and semantics

Twins inherit their backbone from geometry kernels and semantic schemata. CAD kernels like Parasolid (Siemens) and ACIS (Spatial, a Dassault Systèmes company) produce the boundary-representation (B-rep) truths used in NX, SolidWorks, and many other tools, while CATIA relies on Dassault Systèmes’ own CGM kernel. Exchange and long-term archiving ride on STEP AP242, while lightweight visualization often uses JT. In AEC, IFC (ISO 16739) from buildingSMART, coupled with ISO 19650 processes, anchors shared information models and common data environments. A twin must align geometry with structure: part/assembly trees, coordinate systems, and tolerances, but also the metadata that gives these shapes meaning. PLM attributes, change states, requirements links, and variants define which configurations are valid. Equally vital is BOM/MBOM alignment, the mapping between engineering parts and manufacturing routings, which allows a production twin to simulate process plans and trace nonconformances. As twins cross domains, semantic bridges emerge: ontologies for asset types, property sets, and telemetry channels. Brick Schema and Project Haystack annotate building systems; in manufacturing, asset hierarchies and ISA-95-inspired models tag cells, lines, and stations. The north star is a single source of truth for shape and semantics that version-controls not just geometry but context (who changed what, when, and why), so that analytics and prescriptive actions rest on authoritative, auditable foundations.

- Authoritative shape: Parasolid, ACIS, CGM; STEP AP242 and JT for exchange/visualization.

- Shared semantics: IFC and ISO 19650 for AEC; PLM metadata and change states for products.

- Operational alignment: BOM/MBOM synthesis; asset and telemetry ontologies (Brick, Haystack).

- Goal: governed, versioned models that bind geometry to meaning.
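As a rough illustration of the BOM/MBOM alignment described above, the following sketch flags engineering parts that lack a manufacturing routing and routings that point at parts missing from the engineering BOM. The part numbers, operation names, and the `check_bom_alignment` helper are all hypothetical; a real PLM system would resolve this through its own configured structures and effectivity rules.

```python
def check_bom_alignment(ebom, mbom_routing):
    """Compare an engineering BOM with manufacturing routings.

    ebom: set of engineering part numbers (hypothetical identifiers).
    mbom_routing: dict mapping part number -> ordered list of operations.
    Returns (unrouted, orphaned) as sorted lists of part numbers.
    """
    # Engineering parts with no routing, or an empty one, cannot be
    # simulated by a production twin.
    unrouted = sorted(p for p in ebom if not mbom_routing.get(p))
    # Routings that reference parts absent from the EBOM suggest a stale MBOM.
    orphaned = sorted(p for p in mbom_routing if p not in ebom)
    return unrouted, orphaned


ebom = {"BRKT-100", "SHAFT-200", "SEAL-300"}
mbom_routing = {
    "BRKT-100": ["laser-cut", "bend", "paint"],
    "SHAFT-200": [],            # routing exists but has no operations yet
    "GASKET-900": ["die-cut"],  # not present in the engineering BOM
}

unrouted, orphaned = check_bom_alignment(ebom, mbom_routing)
print("unrouted:", unrouted)
print("orphaned:", orphaned)
```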

Simulation and analytics

The behavioral credibility of a twin is earned by physics and refined by data. High-fidelity solvers (FEA for structures, CFD for fluids, MBD for dynamics) anchor first-principles predictions via Simcenter Nastran, Ansys Mechanical/Fluent, Abaqus, and domain-specific tools. Co-simulation frameworks using the Modelica Association’s FMI/FMU standard orchestrate multi-physics and controls interaction. To achieve real-time or near-real-time responses, engineers derive reduced-order models (ROM) using techniques such as Proper Orthogonal Decomposition (POD) and Dynamic Mode Decomposition (DMD), or train surrogate models and hybrid physics-informed ML. Data assimilation closes the gap between model and reality: Kalman and particle filters fuse sensor streams with model predictions to estimate state, while uncertainty quantification and V&V attach measures of credibility to those predictions. Critically, models must be traceable from their CAD/CAE progenitors to deployed ROM/ML artifacts, with lineage captured in PLM or model registries. Ansys Twin Builder, Simcenter Amesim, and Dymola exemplify pipelines for packaging physics into embeddable, executable twins. The architectural theme is tiered fidelity: heavy solvers for offline calibration, ROM/ML for operational decisioning, and continuous recalibration as telemetry shifts operating envelopes, ensuring the twin stays predictive rather than decorative.

- High fidelity: FEA, CFD, MBD; FMI/FMU for co-simulation orchestration.

- Operational speed: ROMs (POD/DMD) and ML surrogates for fast inference.

- Trust: data assimilation, state estimation, UQ, and rigorous verification and validation.

- Lineage: trace models from CAD/CAE to deployed artifacts with governance.
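To make the data-assimilation idea concrete, here is a minimal sketch of a scalar Kalman filter that blends a model's state estimate with noisy sensor readings. It assumes a one-dimensional random-walk state (for example, a slowly varying temperature); the function names and noise values are illustrative assumptions, and production twins typically use multivariate filters tied to a physics or reduced-order model.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle for a scalar random-walk state.

    x, p: prior state estimate and its variance
    z:    new sensor measurement
    q, r: process and measurement noise variances (assumed known)
    """
    # Predict: the random-walk model keeps the state, uncertainty grows by q.
    p_pred = p + q
    # Update: the gain weighs the measurement against the prediction.
    k = p_pred / (p_pred + r)
    x_new = x + k * (z - x)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


def assimilate(measurements, x0, p0, q, r):
    """Run the filter over a sequence of measurements."""
    x, p = x0, p0
    for z in measurements:
        x, p = kalman_step(x, p, z, q, r)
    return x, p


# A poor initial guess (0.0) is pulled toward repeated readings near 20.0.
x, p = assimilate([20.0] * 50, x0=0.0, p0=1.0, q=0.0, r=1.0)
print(round(x, 3), round(p, 4))
```

The shrinking variance `p` is the kind of credibility measure a twin can surface alongside each estimate, which is where the filter meets the uncertainty quantification mentioned above.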

Connectivity and runtime

A twin’s lifeblood is the data pipe. Industrial connectivity relies on OPC UA for rich, semantically tagged telemetry; MQTT for lightweight publish/subscribe; and DDS for time-critical, peer-to-peer messaging. Time-series backbones combine event streams (Apache Kafka, Azure Event Hubs) with stores like InfluxDB, TimescaleDB, and OSIsoft PI (AVEVA). Latency-sensitive loops push computation to the edge via containerized microservices (Docker/Kubernetes), while orchestration spans edge-to-cloud to balance determinism and scalability. Observability is essential: metrics, logs, traces, and lineage must make twin behavior debuggable and auditable. For human-in-the-loop analysis, visualization layers combine real-time 3D via OpenGL/Vulkan, photorealistic ray tracing on RTX hardware, and, increasingly, OpenUSD scene graphs for collaborative, tool-agnostic world building. NVIDIA Omniverse leverages USD to synchronize DCC tools and physics engines, while domain platforms translate authoritative CAD/IFC into runtime-friendly representations without losing semantic anchors. Access control and IP protection wrap the runtime, ensuring that only the right contexts see sensitive geometry or models. The result is a distributed fabric where data, models, and users meet with the right latency, fidelity, and trust for each decision moment.

- Protocols: OPC UA, MQTT, DDS for industrial-grade messaging.

- Pipelines and storage: Kafka, Event Hubs, PI, InfluxDB, TimescaleDB.

- Runtime: edge-to-cloud microservices; Kubernetes for portability and scaling.

- Visualization: OpenGL/Vulkan/RTX and OpenUSD for shared, high-fidelity scenes.

Reference frameworks and standards

Standards translate ambition into operational consistency. ISO 23247 articulates a reference framework for manufacturing digital twins, delineating roles, interfaces, and data governance. The Digital Twin Consortium, hosted by OMG, curates reference architectures, vocabularies, and testbeds that accelerate cross-industry alignment. In Europe’s Industrie 4.0 context, the Asset Administration Shell (AAS, IEC 63278) expresses machine capabilities and submodels in a harmonized envelope, promoting supplier interoperability and lifecycle traceability. For the built environment, OGC’s CityGML and SensorThings API extend GIS-anchored semantics into urban-scale twins, bridging geospatial and IoT. Together with buildingSMART IFC and ISO 19650 processes, these frameworks elevate twins from bespoke integrations to durable ecosystems. Complementary efforts—from OPC Foundation’s companion specifications to OpenUSD’s schema conventions—are converging on shared graphs where geometry, behavior, and telemetry cohabit. Adopting these references is not a box-ticking exercise; it is a foundation for portability, vendor neutrality, and the long-term survivability of models that must outlive today’s software fashions.

- Manufacturing: ISO 23247, AAS for structured, lifecycle-spanning models.

- Cross-industry: Digital Twin Consortium vocabularies and blueprints.

- Built environment: CityGML and SensorThings for geospatial-IoT fusion.

- Interoperability: companion specs and open scene/semantic graphs.

Modern implementations, vendors, and applications

Industrial/product twins

Industrial vendors now assemble end-to-end stacks that weave design, simulation, manufacturing, and operations. Siemens’ Xcelerator portfolio integrates Teamcenter for digital thread governance, NX for design, Simcenter for multiphysics, and MindSphere (since rebranded as Insights Hub) for IoT, enabling product, production, and performance twins with shared lineage. PTC combines Windchill’s configuration backbone with ThingWorx for industrial IoT, Kepware for connectivity, and Vuforia for AR, an approach advanced under Jim Heppelmann that emphasizes service-centric twins and augmented reality work instructions. Dassault Systèmes’ 3DEXPERIENCE platform spans CATIA/DELMIA/Simulia with Dymola for Modelica-based systems, packaged as a “virtual twin experience” under Bernard Charlès, aiming to synchronize engineering authority with manufacturing execution and operations. Ansys Twin Builder focuses on reduced-order, embeddable physics twins that run on edge and control systems. Platform providers add runtime gravity: Microsoft Azure, AWS, and Google offer digital twin services that interleave IoT ingestion, analytics, and knowledge graphs. In visualization and collaboration, NVIDIA’s Omniverse, championed by Jensen Huang, synchronizes domain tools via USD and GPU-accelerated simulation for factories and robotics. Across these offerings, the differentiator is how cleanly vendors tie prescriptive decisions back to verified models and how defensibly they preserve fidelity as they compress physics into ROM/ML surrogates for real-time use.

- Siemens: Teamcenter/Xcelerator, NX, Simcenter, MindSphere across lifecycle twins.

- PTC: Windchill + ThingWorx + Kepware + Vuforia for service and AR-driven workflows.

- Dassault Systèmes: 3DEXPERIENCE with CATIA/DELMIA/Simulia + Dymola for Modelica-based systems modeling.

- Ansys: Twin Builder to package reduced-order physics for deployment at the edge.

- NVIDIA: Omniverse + USD for high-fidelity, collaborative industrial scenes.

AEC/infrastructure twins

In the built environment, twin platforms link BIM authority to operational telemetry and geospatial context. Bentley’s iTwin platform and iTwin.js streamline ingestion of IFC, DGN, and Revit models into iModels with change tracking, a concept long advocated by Greg and Keith Bentley to ensure design and construction models remain trusted in operations. Autodesk’s Tandem and Autodesk Platform Services aim to hand off a consolidated operational model at building commissioning, keeping it synchronized with building management systems (BMS) and IoT. At city and national scales, programs like Virtual Singapore and the UK’s National Digital Twin—guided by the Gemini Principles—advocate for federated, standards-based twins that can be governed across agencies and systems. Standards remain the backbone: buildingSMART’s IFC (ISO 16739), ISO 19650 for information management, and ontologies such as Brick Schema and Project Haystack to label equipment, points, and relationships. Visualization spans GIS and real-time 3D, with OpenUSD and glTF increasingly used to harmonize scenes. Successful AEC twins blend construction as-built truth with live telemetry—space utilization, energy, comfort—while respecting change control and data sovereignty across owners, operators, and service providers.

- BIM to operations: Bentley iTwin/iModels and Autodesk Tandem pipelines.

- Standards: IFC, ISO 19650, Brick Schema, Project Haystack.

- Scale: city/nation programs promoting federated governance and shared semantics.

- Visualization: GIS integration and USD/glTF for collaborative scenes.

Key implementation patterns

Execution patterns separate pilots from platforms. Start with a validated engineering model: the authoritative CAD, CAE, and MBSE artifacts that define design intent and physics. From there, derive reduced-order models or surrogates, and benchmark them against test data. Wire the twin to live telemetry via OPC UA/MQTT and edge gateways, implement data assimilation to keep state estimates credible, and monitor drift. Close the loop not only to maintenance (condition-based interventions, spare parts optimization) but back to requirements and design via the digital thread. Governance is non-negotiable: configuration management must bind in-field instances to the exact model versions and manufacturing pedigrees that produced them. Security and IP protection require least-privilege access, encryption end to end, and supplier-aware partitioning of models. Finally, invest in observability: lineage for analytics, model registries, and dashboards that expose health, confidence, and provenance. Organizations that succeed adopt a product mindset for their twins, with versioned releases, test suites, and continuous integration of models. They measure success by prescriptive impact—reduced downtime, efficiency gains, design improvements—anchored to auditable, bi-directional synchronization rather than one-off visualizations.

- Model-first: calibrate ROM/ML to validated physics before wiring telemetry.

- Closed loop: maintenance and design updates informed by live evidence.

- Governance: configuration, security, IP partitioning, and end-to-end lineage.

- Operations: observability, drift detection, and continuous recalibration.
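The drift monitoring mentioned above can be sketched as a rolling check on the residual between what the twin predicts and what sensors report. This is a minimal illustration under stated assumptions: the `DriftMonitor` class, the window size, and the threshold are hypothetical choices, and a deployed twin would likely use statistically grounded tests and per-channel tuning.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the mean absolute residual over a window exceeds a threshold."""

    def __init__(self, window: int, threshold: float):
        self.residuals = deque(maxlen=window)  # keeps only the latest `window` values
        self.threshold = threshold

    def observe(self, predicted: float, measured: float) -> bool:
        """Record one prediction/measurement pair; return True if drift is flagged."""
        self.residuals.append(abs(measured - predicted))
        # Wait for a full window so a single early outlier does not trigger recalibration.
        window_full = len(self.residuals) == self.residuals.maxlen
        mean_residual = sum(self.residuals) / len(self.residuals)
        return window_full and mean_residual > self.threshold


monitor = DriftMonitor(window=3, threshold=1.0)
pairs = [(10.0, 10.2), (10.0, 10.1), (10.0, 10.3), (10.0, 13.0)]
flags = [monitor.observe(pred, meas) for pred, meas in pairs]
print(flags)
```

A flagged window is a natural trigger for the recalibration step in the pattern above, for instance refreshing a reduced-order model against recent telemetry.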

Conclusion

Convergence realized

Digital twins represent the practical convergence of decades of engineering software progress with modern computing fabric. The journey from DMU to closed-loop twins fuses authoritative geometry and semantics (CAD kernels, STEP, IFC) with predictive behavior (MBSE, multiphysics solvers), all instrumented by IoT pipelines and rendered through real-time visualization and shared scene graphs like OpenUSD. The cultural shift is as significant as the technical one: models are no longer artifacts to be archived post-release, but operational actors that ingest evidence, evolve, and steer decisions. In this framing, PLM becomes the governance backbone of a living system, not a document vault. The organizations that thrive will be those that treat fidelity, interoperability, and lifecycle governance as first-class engineering requirements, not afterthoughts. When twins are credible and governed, the loop tightens: service informs design, production realities sharpen models, and in-field behavior continuously reshapes intent.

- From DMU to twin: geometry plus behavior, synchronized by data.

- Runtime-first: edge-to-cloud fabrics make models operational.

- Shared scenes: OpenUSD and domain semantics unify collaboration.

The three pillars of success

Three pillars determine whether a digital twin program compounds value or stalls. First, fidelity with verification and validation—traceability from CAD/CAE to ROM/ML—builds the trust to act on predictions. This includes uncertainty quantification, controlled updates, and auditable calibrations. Second, robust interoperability—STEP/IFC for geometry and semantics, FMI for co-simulation, OPC UA for telemetry, ISO 23247 and AAS for manufacturing schemas, and shared scene/semantic graphs like OpenUSD plus domain ontologies—keeps twins portable across tools and time. Third, lifecycle governance—configuration control, security, data provenance, and the digital thread—ensures decisions remain defensible across supply chains and regulatory contexts. These pillars are inseparable: fidelity without interoperability isolates insight; interoperability without governance invites drift; governance without fidelity yields bureaucracy. Codifying them in architecture, contracts, and team rituals turns digital twins from heroic projects into reliable infrastructure that upgrades how an organization thinks and acts.

- Fidelity: V&V, UQ, and traceable ROM/ML derivation.

- Interoperability: STEP/IFC, FMI, OPC UA, ISO 23247, AAS, and OpenUSD-centered graphs.

- Governance: configuration, security, and end-to-end data lineage.

Trajectory and impact

Near term, the center of gravity shifts toward physics-informed ML that preserves conservation laws while accelerating inference, scalable edge twins that act where latency matters, and collaboration patterns anchored in OpenUSD that keep design, simulation, and operations in a shared, high-fidelity scene. Expect tighter PLM–operations feedback loops: field data triggering auto-generated what-if studies, ROM refreshes, and requirement updates without manual handoffs. Over the longer arc, credible, auditable twins will transform releases into living systems. Products and infrastructure will evolve continuously under governance, compressing iteration cycles and rebalancing work across OEMs, suppliers, and operators. Regulatory regimes will adapt to certify not only artifacts but the processes—models, data, and controls—that keep twins trustworthy over time. For practitioners, the mandate is pragmatic: start from validated models, design for interoperability, and operationalize governance. For leaders, the opportunity is strategic: use twins to institutionalize learning, turning every deployed asset into a sensor for future design. When done well, digital twins become the connective tissue that lets organizations engineer, operate, and evolve with a clarity and speed that were previously out of reach.

- Near term: physics-informed ML, edge twins, and USD-centered collaboration.

- Mid term: automated calibration and closed-loop PLM–operations cycles.

- Long term: from releases to living systems, with auditable, continuous evolution.
