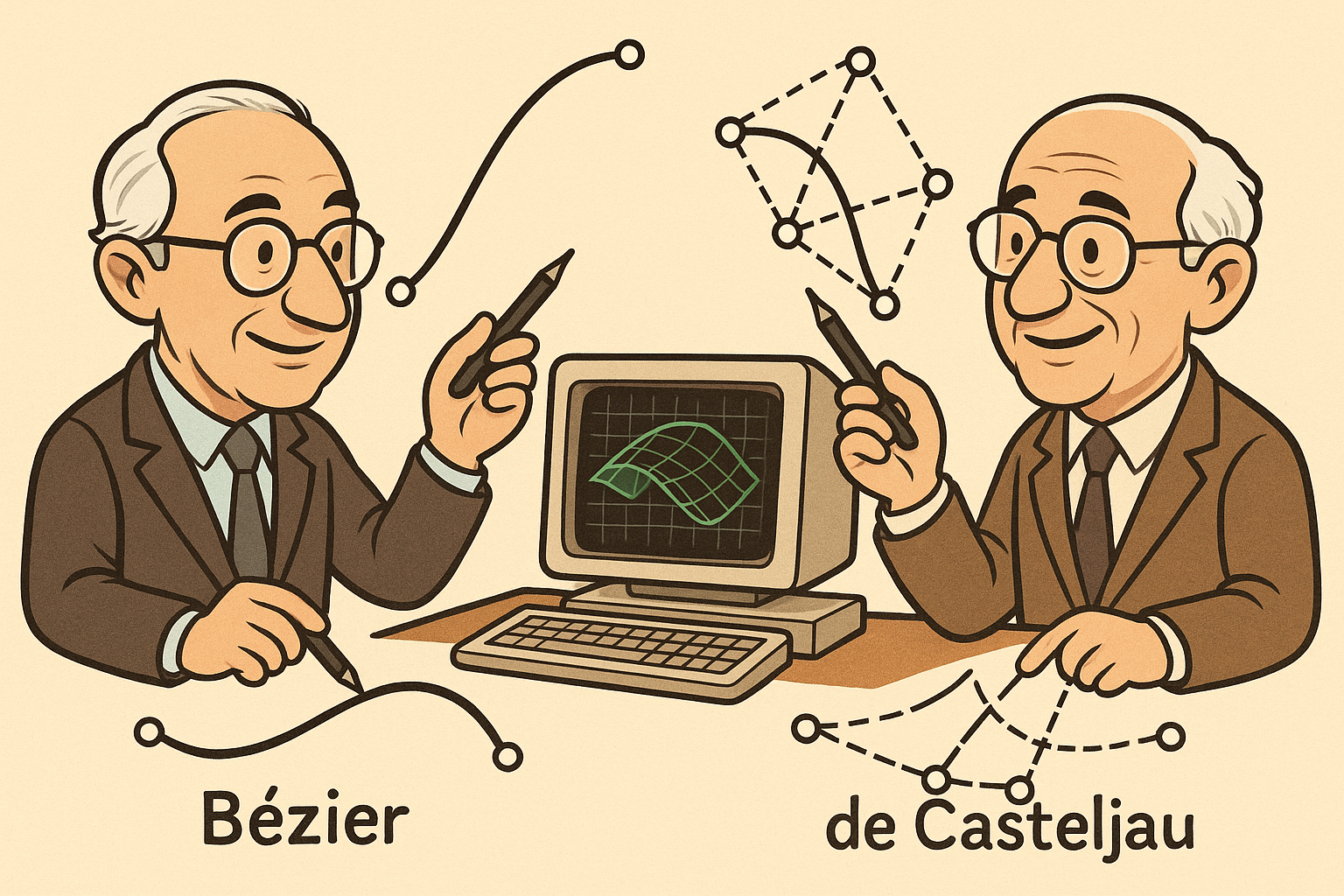

Design Software History: Bézier and de Casteljau: Dual Origins of Bernstein‑Based Curve Modeling and CAD Adoption

October 26, 2025 13 min read

Historical context and the two protagonists

Brief biographies and institutional contexts

Pierre Bézier and Paul de Casteljau emerged from the pressure cooker of mid‑20th‑century European automotive engineering, where styling aspirations collided with manufacturing constraints. Bézier trained at the École des Arts et Métiers and later became a professor at the Conservatoire National des Arts et Métiers. He joined Renault in the 1930s and steadily rose through roles connecting tooling, machining, and body engineering. Within Renault’s industrial modernization after World War II, he became the champion of replacing manual lofting with computational descriptions of shapes. His work culminated in UNISURF, an internal system first deployed around 1968, which standardized how Renault generated and exchanged surface data for dies, inspection, and NC tooling. Bézier’s dual fluency in production realities and mathematical representation made him a uniquely effective advocate. His choice to publish extensively under Renault’s banner gave his approach prominent visibility in the engineering community.

Paul de Casteljau, by contrast, entered the narrative from Citroën, where he worked from the late 1950s on mathematical methods for industrial design. A gifted mathematician and engineer, de Casteljau explored how to compute and manipulate parametric curves using iterative geometric constructions. He devised the now‑canonical de Casteljau algorithm: a recursive scheme based on linear interpolation that evaluates and subdivides curves defined by control points and the Bernstein polynomials. Yet Citroën’s internal secrecy policies severely limited his ability to publish, so his memos circulated largely in‑house for years. Only later did the broader community begin to recognize the method’s elegance and numerical advantages. Where Bézier occupied the limelight as an industrial spokesperson, de Casteljau shaped the core geometric algorithm in relative obscurity. Together, though rarely in direct dialogue, they defined two faces of the same class of curves—one by explicit polynomial form, the other by a robust computational procedure.

Corporate and cultural backdrop

The 1950s and 1960s were a crucible for industrial design’s transition from artisanal craft to digital abstraction. Automotive bodywork had long relied on wood bucks, spline battens, and draftsman’s lofts to define freeform contours. Postwar consumers and aerodynamics pushed stylists toward bolder shapes, but production reality demanded repeatability and tight tolerances. Toolmaking and die‑sinking for stamped panels were costly endeavors where small shape mismatches multiplied into major expenses. Within this environment, French automakers Renault and Citroën sought to compress styling, engineering, and manufacturing into a coherent, computable pipeline. Mainframe computing arrived in engineering offices, numerical control (NC) machines promised automation, and computational geometry had to bridge sketches and steel.

Corporate culture set the terms of credit as much as mathematics. Renault encouraged Bézier to publish in journals and present at conferences, aligning with a broader European shift toward university–industry exchange. Citroën, in contrast, was famously secretive, treating its research as proprietary artillery in a competitive market. Both firms explored essentially the same family of curves, defined by control points and Bernstein basis functions. Consequently, only one of the two narratives traveled quickly through the literature. The label “Bézier curves” propagated via conference proceedings, textbooks, and early CAD vendor documentation. De Casteljau wrote the algorithmic heart of the method and documented it in internal reports from the late 1950s and early 1960s, but his authorship remained little known outside Citroën. The divergence illustrates how publication choices, company policy, and messaging shape the historical record even when the underlying ideas coincide.

Early mathematical antecedents and contributors

Underpinning both trajectories are early 20th‑century results in approximation theory, particularly the Bernstein polynomials introduced by Sergei Bernstein in 1912. Bernstein's result applies to any continuous function f on [0,1]. The polynomials that weight the samples f(k/n) by the binomial terms C(n,k)·x^k·(1−x)^(n−k) converge uniformly to f, which gives a constructive proof of the Weierstrass approximation theorem. The basis functions were later appreciated for their geometric niceties: nonnegativity, partition of unity, and shape‑preserving tendencies. Those properties would become foundational for curve design because they allowed engineers to reason about parametric curves through the lens of control points and convex combinations rather than only power‑basis coefficients. Mid‑century advances further primed the field. Isaac Jacob Schoenberg’s B‑splines (1946) introduced basis functions with local support and excellent numerical properties. Carl de Boor and M. J. D. Powell expanded practical spline algorithms. In the 1960s, Steven Anson Coons proposed compositional surface patches for industrial design, hinting at a synthesis between mathematical splines and designer intent.
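Bernstein's convergence result can be made concrete in a few lines. The sketch below builds the Bernstein polynomial of a continuous function and measures the worst‑case error on a sample grid. The test function sin(πx), the grid size, and the helper names are arbitrary choices for illustration. For smooth functions the error shrinks only roughly like 1/n (Voronovskaya's theorem). That slow rate is one reason the basis came to be valued more for its geometric properties than for approximation speed.

```python
from math import comb, pi, sin
from typing import Callable

def bernstein_approx(f: Callable[[float], float], n: int, x: float) -> float:
    """Degree-n Bernstein polynomial of f evaluated at x in [0, 1].

    B_n(f)(x) = sum_k f(k/n) * C(n, k) * x**k * (1 - x)**(n - k)
    """
    return sum(f(k / n) * comb(n, k) * x**k * (1 - x) ** (n - k)
               for k in range(n + 1))

def max_error(f: Callable[[float], float], n: int, samples: int = 201) -> float:
    """Largest |f(x) - B_n(f)(x)| over an evenly spaced grid on [0, 1]."""
    grid = [i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - bernstein_approx(f, n, x)) for x in grid)

if __name__ == "__main__":
    f = lambda x: sin(pi * x)  # arbitrary smooth test function
    for n in (4, 16, 64):
        print(f"n={n:3d}  max error={max_error(f, n):.4f}")
```

The same function also shows partition of unity: with f(x) = 1, every degree returns exactly 1 up to rounding.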

As computing entered drafting rooms, the nascent discipline of computer‑aided geometric design (CAGD) coalesced around rigorous representation and construction rules. Researchers such as Herbert B. Voelcker, Robert Riesenfeld, Gerald Farin, and David F. Rogers made influential contributions through algorithms, textbooks, and curricula that translated mathematical abstraction into engineering practice. Within this milieu, Bézier’s polynomial form and de Casteljau’s algorithm offered precisely what the automotive industry needed: a mathematically sound, visually intuitive, and computationally tractable way to describe freeform shapes. The stage was set for a synthesis of approximation theory, computational geometry, and industrial workflow, converging on the family of curves that would dominate surfacing and, eventually, many domains far beyond car bodies.

The mathematics and algorithms: two faces of the same curve

Bézier polynomial form

In the Bézier formulation, a degree‑n curve is defined by n+1 control points $P_0,\dots,P_n$. It is expressed as a parametric polynomial in $t\in[0,1]$ using the Bernstein basis: $B(t)=\sum_{i=0}^{n} B_{i,n}(t)\,P_i$, where $B_{i,n}(t)=\binom{n}{i}(1-t)^{n-i}t^{i}$. This form foregrounds the role of control points as geometric handles while wrapping the combination in familiar polynomial structure. The Bernstein basis functions are nonnegative on [0,1] and sum to one, so every point of the curve is a convex combination of the control points. The curve therefore stays within their convex hull, an intuitive safety net for both design and manufacturing. Endpoint interpolation falls out naturally: $B(0)=P_0$ and $B(1)=P_n$. The end derivatives are $B'(0)=n(P_1-P_0)$ and $B'(1)=n(P_n-P_{n-1})$. The end tangents therefore align with the first and last legs of the control polygon, giving designers precise control over boundary behavior.
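The Bernstein form can be transcribed directly, which lets one check the endpoint and tangent identities numerically. The sketch below makes a few assumptions: 2D points are tuples, and the helper names and sample control polygon are illustrative. The derivative relies on the standard identity that the derivative (hodograph) of a degree‑n Bézier curve is a degree‑(n−1) Bézier curve with control points n·(P[i+1] − P[i]).

```python
from math import comb
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def bernstein(i: int, n: int, t: float) -> float:
    """Bernstein basis polynomial B_{i,n}(t) = C(n, i) (1 - t)^(n - i) t^i."""
    return comb(n, i) * (1 - t) ** (n - i) * t ** i

def bezier_point(ctrl: Sequence[Point], t: float) -> Point:
    """Evaluate B(t) = sum_i B_{i,n}(t) P_i directly in the Bernstein basis."""
    n = len(ctrl) - 1
    weights = [bernstein(i, n, t) for i in range(n + 1)]
    x = sum(w * p[0] for w, p in zip(weights, ctrl))
    y = sum(w * p[1] for w, p in zip(weights, ctrl))
    return (x, y)

def bezier_derivative(ctrl: Sequence[Point], t: float) -> Point:
    """B'(t): a degree n-1 Bezier curve with control points n * (P[i+1] - P[i])."""
    n = len(ctrl) - 1
    hodograph: List[Point] = [(n * (b[0] - a[0]), n * (b[1] - a[1]))
                              for a, b in zip(ctrl, ctrl[1:])]
    return bezier_point(hodograph, t)

if __name__ == "__main__":
    ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 3.0), (4.0, 0.0)]  # sample cubic
    print(bezier_point(ctrl, 0.5))       # → (2.0, 1.875)
    print(bezier_derivative(ctrl, 0.0))  # → (3.0, 6.0), i.e. 3 * (P1 - P0)
```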

Several consequences make the polynomial form attractive for analysis and integration into toolchains:

- The binomial structure of the basis allows closed‑form manipulations, including degree elevation, derivative and integral computation, and basis conversion.

- The convex hull property and variation‑diminishing behavior enable guaranteed bounds on shape, critical for collision checks, tolerance analysis, and machining offsets.

- Each basis function is a smooth polynomial that behaves predictably on [0,1], so individual segments are easy to analyze and join into composite curves along a network.

For engineering teams in the 1960s–70s, expressing curves in this explicit polynomial form meshed neatly with NC programming and with the mathematical analysis needed to certify manufacturability, while remaining accessible to designers as a set of clearly meaningful control points.

Geometric intuition: control polygons and endpoint behavior

What made Bézier’s representation particularly compelling to stylists and engineers was its geometric transparency. The control polygon (the polyline connecting P0,…,Pn) acts as a scaffold that reveals how the curve bends and where it leans. Because the basis functions create convex combinations, the curve cannot “escape” the polygon’s convex hull, yielding a visually faithful and numerically safe envelope. Endpoint interpolation ensures the curve begins at P0 and ends at Pn. The first and last legs of the polygon determine the departure and approach tangents, which streamlines continuity management across adjacent curve segments. This is essential in automotive surfacing, where panel boundaries must meet with prescribed smoothness.

Intuition becomes workflow through operations designers use repeatedly:

- Adjusting intermediate control points modulates bulge and inflection without disturbing endpoints.

- Degree elevation inserts extra control points while retaining the exact shape, adding freedom for subsequent edits.

- Subdivision (discussed below) creates curve segments with their own control polygons, enabling hierarchical refinement at multiple scales.

- Affine invariance means that transforming the control points transforms the curve identically. The variation‑diminishing property means that a planar curve crosses any straight line no more often than its control polygon does. Together they ensure that geometric transformations and filtering behave predictably, a boon for toolpath planning and inspection algorithms.
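Two of these guarantees are easy to spot‑check numerically. The sketch below uses hypothetical helper names and an arbitrary sample polygon and shear. It first confirms that "transform the control points, then evaluate" matches "evaluate, then transform". It then confirms that sampled curve points stay inside the control points' bounding box, a coarse but cheap consequence of the convex hull property. Sampling illustrates the properties; it does not prove them.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = Tuple[Tuple[float, float], Tuple[float, float]]

def evaluate(ctrl: Sequence[Point], t: float) -> Point:
    """Bezier point at t via repeated linear interpolation (de Casteljau)."""
    pts: List[Point] = list(ctrl)
    while len(pts) > 1:
        pts = [((1 - t) * a[0] + t * b[0], (1 - t) * a[1] + t * b[1])
               for a, b in zip(pts, pts[1:])]
    return pts[0]

def apply_affine(p: Point, m: Matrix, offset: Point) -> Point:
    """Affine map p -> M p + offset."""
    return (m[0][0] * p[0] + m[0][1] * p[1] + offset[0],
            m[1][0] * p[0] + m[1][1] * p[1] + offset[1])

def affine_invariance_error(ctrl: Sequence[Point], m: Matrix, offset: Point,
                            samples: int = 64) -> float:
    """Max gap between 'transform then evaluate' and 'evaluate then transform'."""
    moved = [apply_affine(p, m, offset) for p in ctrl]
    worst = 0.0
    for k in range(samples + 1):
        t = k / samples
        a = apply_affine(evaluate(ctrl, t), m, offset)
        b = evaluate(moved, t)
        worst = max(worst, abs(a[0] - b[0]), abs(a[1] - b[1]))
    return worst

def within_control_box(ctrl: Sequence[Point], samples: int = 64, tol: float = 1e-12) -> bool:
    """Convex-hull consequence: the curve never leaves the control points' bounding box."""
    lo_x, hi_x = min(p[0] for p in ctrl), max(p[0] for p in ctrl)
    lo_y, hi_y = min(p[1] for p in ctrl), max(p[1] for p in ctrl)
    for k in range(samples + 1):
        x, y = evaluate(ctrl, k / samples)
        if not (lo_x - tol <= x <= hi_x + tol and lo_y - tol <= y <= hi_y + tol):
            return False
    return True

if __name__ == "__main__":
    ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 3.0), (4.0, 0.0)]
    shear = ((1.0, 0.5), (0.0, 1.0))  # arbitrary shear, not a rigid motion
    print(affine_invariance_error(ctrl, shear, (2.0, -1.0)))  # rounding-level, well below 1e-12
    print(within_control_box(ctrl))
```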

These properties brought a shared language to styling reviews and manufacturing sign‑offs. Stylists could “see” how changes to the polygon would tune highlights and curvature. Engineers could guarantee bounds and manage continuity at joins, from positional (C0) through tangent (G1, or the stricter parametric C1) to curvature (G2, or the stricter C2) matching.
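For two cubic segments that are each parameterized on [0,1], the join conditions reduce to simple control‑point tests:

- C0 shares the endpoint.

- C1 requires Q1 − Q0 = P3 − P2.

- G1 only requires those two vectors to point the same way.

- C2 additionally matches the second differences.

The classifier below is a minimal sketch under those assumptions (equal degree, unit parameter intervals). Its function names are hypothetical, and it does not test G2 curvature continuity.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1])

def _close(a: Point, b: Point, tol: float) -> bool:
    return abs(a[0] - b[0]) <= tol and abs(a[1] - b[1]) <= tol

def classify_cubic_join(p: Sequence[Point], q: Sequence[Point], tol: float = 1e-9) -> str:
    """Classify the join where cubic p (on [0,1]) ends and cubic q (on [0,1]) begins.

    Returns "none", "C0", "G1", "C1", or "C2". G2 (curvature only) is not tested.
    """
    if not _close(p[3], q[0], tol):
        return "none"
    d_p = _sub(p[3], p[2])  # p'(1) = 3 * d_p
    d_q = _sub(q[1], q[0])  # q'(0) = 3 * d_q
    if _close(d_p, d_q, tol):
        # p''(1) = 6 (P3 - 2 P2 + P1) and q''(0) = 6 (Q2 - 2 Q1 + Q0); the 6 cancels.
        a_p = (p[3][0] - 2 * p[2][0] + p[1][0], p[3][1] - 2 * p[2][1] + p[1][1])
        a_q = (q[2][0] - 2 * q[1][0] + q[0][0], q[2][1] - 2 * q[1][1] + q[0][1])
        return "C2" if _close(a_p, a_q, tol) else "C1"
    cross = d_p[0] * d_q[1] - d_p[1] * d_q[0]
    dot = d_p[0] * d_q[0] + d_p[1] * d_q[1]
    if abs(cross) <= tol and dot > 0:
        return "G1"  # same tangent direction, different parametric speed
    return "C0"

def c1_continuation(p: Sequence[Point]) -> Point:
    """Second control point Q1 = 2 P3 - P2 that makes the next cubic C1."""
    return (2 * p[3][0] - p[2][0], 2 * p[3][1] - p[2][1])
```

A modeler that enforces C1 would typically set Q0 = P3 and Q1 = c1_continuation(P), leaving Q2 and Q3 free for the designer.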

De Casteljau algorithm

Paul de Casteljau’s algorithm evaluates and subdivides the same curves through a purely geometric, recursive construction. Given control points P0,…,Pn and a parameter t, the algorithm linearly interpolates between consecutive points to form a new polygon with one fewer point. It repeats the process on successive layers until a single point remains, which lies on the curve at parameter t. This elegant cascade is numerically robust because it replaces potentially ill‑conditioned polynomial evaluation with sequences of convex combinations. Each interpolation is stable on [0,1], and the computation mirrors the curve’s geometric definition, making it resistant to rounding errors and parameter extremes. These are core reasons why many modern systems favor the de Casteljau algorithm for evaluation.
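The cascade of interpolations takes only a few lines of code. The sketch below assumes 2D tuples and uses hypothetical helper names. It evaluates a cubic with de Casteljau's scheme and, as a sanity check, compares the result with the explicit Bernstein sum. Both describe the same curve, so they agree up to rounding.

```python
from math import comb
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def de_casteljau(ctrl: Sequence[Point], t: float) -> Point:
    """Evaluate a Bezier curve at t by repeated linear interpolation.

    Each pass replaces k points with k - 1 convex combinations
    (1 - t) * a + t * b; after n passes a single point, B(t), remains.
    """
    pts: List[Point] = list(ctrl)
    while len(pts) > 1:
        pts = [((1 - t) * a[0] + t * b[0], (1 - t) * a[1] + t * b[1])
               for a, b in zip(pts, pts[1:])]
    return pts[0]

def bernstein_eval(ctrl: Sequence[Point], t: float) -> Point:
    """Reference evaluation through the explicit Bernstein sum."""
    n = len(ctrl) - 1
    w = [comb(n, i) * (1 - t) ** (n - i) * t ** i for i in range(n + 1)]
    return (sum(wi * p[0] for wi, p in zip(w, ctrl)),
            sum(wi * p[1] for wi, p in zip(w, ctrl)))

if __name__ == "__main__":
    ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 3.0), (4.0, 0.0)]
    print(de_casteljau(ctrl, 0.5))  # → (2.0, 1.875)
```

The cascade costs O(n²) interpolations per point, more than a Horner evaluation of the power form. In exchange, it works entirely with convex combinations and yields subdivision as a by‑product.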

Equally valuable is the algorithm’s built‑in subdivision: the intermediate points created during evaluation at t naturally split the original control polygon into two sets that define the left and right curve segments. The first point produced at each level forms the left polygon, and the last point at each level forms the right one. No refitting or approximation is needed; subdivision is exact. This supports:

- Adaptive tessellation for rendering and simulation, refining where curvature or error warrants.

- Local editing workflows, where designers isolate a segment and adjust its dedicated control polygon.

- Multiresolution strategies that enable coarse‑to‑fine interaction without changing the underlying mathematical object.
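Subdivision and adaptive tessellation combine naturally. The sketch below uses hypothetical names and a simple flatness test chosen for illustration; production tessellators use a variety of criteria. It splits a curve with the de Casteljau triangle and keeps subdividing until every interior control point of a piece lies within a tolerance of that piece's chord.

```python
from math import hypot
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]

def lerp(a: Point, b: Point, t: float) -> Point:
    return ((1 - t) * a[0] + t * b[0], (1 - t) * a[1] + t * b[1])

def evaluate(ctrl: Sequence[Point], t: float) -> Point:
    pts = list(ctrl)
    while len(pts) > 1:
        pts = [lerp(a, b, t) for a, b in zip(pts, pts[1:])]
    return pts[0]

def subdivide(ctrl: Sequence[Point], t: float = 0.5) -> Tuple[List[Point], List[Point]]:
    """Split at t: the first point of each de Casteljau level goes to the left
    polygon, the last point of each level (reversed) to the right polygon."""
    pts = list(ctrl)
    left, right = [pts[0]], [pts[-1]]
    while len(pts) > 1:
        pts = [lerp(a, b, t) for a, b in zip(pts, pts[1:])]
        left.append(pts[0])
        right.append(pts[-1])
    right.reverse()
    return left, right

def _dist_to_segment(p: Point, a: Point, b: Point) -> float:
    dx, dy = b[0] - a[0], b[1] - a[1]
    denom = dx * dx + dy * dy
    if denom == 0.0:
        return hypot(p[0] - a[0], p[1] - a[1])
    u = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / denom))
    return hypot(p[0] - (a[0] + u * dx), p[1] - (a[1] + u * dy))

def flatten(ctrl: Sequence[Point], tol: float = 1e-3, max_depth: int = 20,
            _depth: int = 0, _out: Optional[List[Point]] = None) -> List[Point]:
    """Adaptive polyline: stop once all control points are within tol of the chord."""
    out = [ctrl[0]] if _out is None else _out
    flatness = max((_dist_to_segment(p, ctrl[0], ctrl[-1]) for p in ctrl[1:-1]),
                   default=0.0)
    if flatness <= tol or _depth >= max_depth:
        out.append(ctrl[-1])
    else:
        left, right = subdivide(ctrl)
        flatten(left, tol, max_depth, _depth + 1, out)
        flatten(right, tol, max_depth, _depth + 1, out)
    return out
```

The flatness test is conservative. Each piece of the curve lies in the convex hull of its control points, and the tol‑neighborhood of a segment is convex. So once every control point is within tol of the chord, every curve point is within tol of the emitted polyline. The max_depth cap is an arbitrary safeguard.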

The algorithm’s geometric clarity made it easy to implement on early hardware and straightforward to explain to users, cementing its role as the de facto evaluation and subdivision tool for Bézier curves and, later, surfaces.

Practical algorithmic properties and operations

Real design systems rely on a suite of operations, and the Bézier/de Casteljau toolkit delivers a coherent package. Degree elevation increases the polynomial degree while preserving the curve exactly, adding control points for finer shape control without reparameterization. Subdivision provides exact segmentation, underpinning trimming, Boolean operations, and hierarchical editing. Continuity management distinguishes parametric continuity (Ck) from geometric continuity (Gk). Ck requires derivatives up to order k to agree across joins. Gk only requires the shapes to agree after reparameterization: matching unit tangents for G1 and matching curvature for G2. This weaker condition often better matches perceptual smoothness in styling.
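Degree elevation has a closed form. For a degree‑n polygon P0,…,Pn, the elevated polygon is Q0 = P0, Q(n+1) = Pn, and Qi = (i/(n+1))·P(i−1) + (1 − i/(n+1))·Pi for 1 ≤ i ≤ n. The sketch below applies this formula and checks that the curve is unchanged. It assumes 2D tuples, and the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def evaluate(ctrl: Sequence[Point], t: float) -> Point:
    """de Casteljau evaluation, used here only to compare curves."""
    pts = list(ctrl)
    while len(pts) > 1:
        pts = [((1 - t) * a[0] + t * b[0], (1 - t) * a[1] + t * b[1])
               for a, b in zip(pts, pts[1:])]
    return pts[0]

def elevate(ctrl: Sequence[Point]) -> List[Point]:
    """Return the n+2 control points of the same curve written at degree n+1."""
    n = len(ctrl) - 1
    out: List[Point] = [ctrl[0]]
    for i in range(1, n + 1):
        a = i / (n + 1)
        out.append((a * ctrl[i - 1][0] + (1 - a) * ctrl[i][0],
                    a * ctrl[i - 1][1] + (1 - a) * ctrl[i][1]))
    out.append(ctrl[-1])
    return out

if __name__ == "__main__":
    quad = [(0.0, 0.0), (1.0, 2.0), (2.0, 0.0)]
    print(elevate(quad))
```

For the quadratic above, the elevated cubic has control points (0, 0), (2/3, 4/3), (4/3, 4/3), (2, 0). The new interior points are new handles, but the traced curve is identical.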

Numerical behavior dictates algorithm selection. Bernstein basis evaluation is convenient for symbolic manipulation and error analysis. For floating‑point computation, however, the de Casteljau scheme offers superior numerical stability. It uses only additions and scalar multiplications by t and (1−t), both in [0,1], avoiding the cancellation and overflow patterns common with high‑degree power bases. Blossoming theory (due to Lyle Ramshaw) unifies these operations. It associates each degree‑n curve with a unique symmetric, multi‑affine function of n parameters, its blossom or polar form, whose diagonal is the curve itself. Degree elevation, subdivision, and continuity relations follow naturally from the blossom. Conversion to and from other bases (power basis for analysis, B‑spline basis for locality) is routine, enabling interoperability among modeling kernels and analysis packages. Collectively, these properties explain why this family of curves became a workhorse across CAD and graphics.
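Blossoms are easy to experiment with: run de Casteljau's cascade but feed a different parameter to each level. The sketch below assumes 2D tuples, and its function names are hypothetical. It exhibits three standard consequences of the theory:

- The control points are the blossom's values at arguments made of 0s and 1s.

- The curve is the blossom's diagonal.

- The control polygon of the sub‑segment over [0, t] is b(0,…,0, t,…,t).

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def blossom(ctrl: Sequence[Point], args: Sequence[float]) -> Point:
    """Evaluate the blossom (polar form) of a degree-n Bezier curve.

    This is de Casteljau's algorithm with a possibly different parameter at
    each level; the result does not depend on the order of args (symmetry).
    """
    pts: List[Point] = list(ctrl)
    if len(args) != len(pts) - 1:
        raise ValueError("a degree-n blossom takes exactly n arguments")
    for u in args:
        pts = [((1 - u) * a[0] + u * b[0], (1 - u) * a[1] + u * b[1])
               for a, b in zip(pts, pts[1:])]
    return pts[0]

def curve_point(ctrl: Sequence[Point], t: float) -> Point:
    """The curve is the blossom's diagonal: B(t) = b(t, t, ..., t)."""
    return blossom(ctrl, [t] * (len(ctrl) - 1))

def left_piece(ctrl: Sequence[Point], t: float) -> List[Point]:
    """Control points of the sub-curve over [0, t]: b(0,...,0, t,...,t)."""
    n = len(ctrl) - 1
    return [blossom(ctrl, [0.0] * (n - i) + [t] * i) for i in range(n + 1)]

if __name__ == "__main__":
    ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 3.0), (4.0, 0.0)]
    print(blossom(ctrl, [0.0, 0.0, 1.0]))  # → (1.0, 2.0), i.e. P1
    print(left_piece(ctrl, 0.5))
```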

Relationship to later generalizations

The ideas generalize seamlessly. Taking tensor products of univariate Bézier bases yields Bézier surfaces with rectangular control nets, a direct extension of the curve’s control polygon. These surfaces inherit convex hull containment and interpolate the four corner control points. Their boundary curves are the Bézier curves of the net’s edge rows and columns, and they subdivide via de Casteljau in either parameter. For complex shapes, however, designers needed locality and modularity beyond a single patch. Enter B‑splines: Schoenberg’s basis with local support enables editing a portion of a curve or surface without global side effects. The Cox–de Boor recursion defines and evaluates the basis functions, knot insertion plays the role of subdivision, and degree elevation remains available.
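Both generalizations can be sketched compactly. The code below is illustrative, and its function names are hypothetical. A tensor‑product patch is evaluated by collapsing each row of the net with de Casteljau in v, then collapsing the resulting points in u. B‑spline basis functions come from the Cox–de Boor recursion, with the usual convention that 0/0 terms are dropped. On the clamped knot vector [0,0,0,0,1,1,1,1], the cubic B‑spline basis coincides with the cubic Bernstein basis.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, ...]

def de_casteljau(ctrl: Sequence[Vec], t: float) -> Vec:
    """Curve evaluation in any dimension by repeated linear interpolation."""
    pts = [tuple(p) for p in ctrl]
    while len(pts) > 1:
        pts = [tuple((1 - t) * x + t * y for x, y in zip(a, b))
               for a, b in zip(pts, pts[1:])]
    return pts[0]

def bezier_surface(net: Sequence[Sequence[Vec]], u: float, v: float) -> Vec:
    """Tensor-product patch S(u, v) = sum_i sum_j B_i(u) B_j(v) P[i][j].

    Each row P[i][*] is collapsed in v first, then the results in u.
    """
    return de_casteljau([de_casteljau(row, v) for row in net], u)

def cox_de_boor(i: int, p: int, u: float, knots: Sequence[float]) -> float:
    """B-spline basis N_{i,p}(u) by the Cox-de Boor recursion (0/0 taken as 0).

    Uses half-open spans [t_i, t_{i+1}), so u equal to the last knot gives 0.
    """
    if p == 0:
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0
    left = right = 0.0
    if knots[i + p] > knots[i]:
        left = ((u - knots[i]) / (knots[i + p] - knots[i])
                * cox_de_boor(i, p - 1, u, knots))
    if knots[i + p + 1] > knots[i + 1]:
        right = ((knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1])
                 * cox_de_boor(i + 1, p - 1, u, knots))
    return left + right

if __name__ == "__main__":
    knots = [0, 0, 0, 1, 2, 3, 3, 3]  # quadratic, 5 basis functions
    print([round(cox_de_boor(i, 2, 1.5, knots), 4) for i in range(5)])
```

The local support is visible in the output: at u = 1.5 only the basis functions whose support spans overlap [1, 2) are nonzero.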

In the 1970s, the introduction of rational forms yielded NURBS (Non‑Uniform Rational B‑Splines). The development is usually traced to work including Ken Versprille’s 1975 Ph.D. thesis at Syracuse University, followed by industrial adoption by aerospace and automotive firms. By attaching weights to control points, NURBS represent conic sections, including exact circular arcs, marrying exact analytic shapes with freeform flexibility. Crucially, the conceptual DNA remains the same: control points, convex combinations (in homogeneous coordinates), and recursive evaluation. Publications by Carl de Boor, Eugene Lee, Gerald Farin, and David F. Rogers, along with vendor implementations, diffused these generalizations. Standards such as IGES and later STEP enshrined NURBS as the neutral exchange representation. This kept the lineage from Bernstein to Bézier to B‑splines to NURBS intact and dominant in CAD kernels.
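The weights are what let NURBS capture conics exactly. The minimal sketch below uses a single rational quadratic Bézier segment rather than a full NURBS with knots, and its names are hypothetical. It lifts each control point to homogeneous coordinates (w·x, w·y, w), runs de Casteljau, and divides by the weight. With a corner weight of √2/2, the result traces an exact quarter of the unit circle.

```python
from math import hypot, sqrt
from typing import Sequence, Tuple

Point = Tuple[float, float]

def rational_bezier(ctrl: Sequence[Point], weights: Sequence[float], t: float) -> Point:
    """Evaluate a rational Bezier curve by de Casteljau in homogeneous coordinates.

    Each control point (x, y) with weight w is lifted to (w*x, w*y, w); the
    polynomial curve in 3D is evaluated, then projected back by dividing by w.
    """
    pts = [(w * p[0], w * p[1], w) for p, w in zip(ctrl, weights)]
    while len(pts) > 1:
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
               for p, q in zip(pts, pts[1:])]
    hx, hy, hw = pts[0]
    return (hx / hw, hy / hw)

# Quarter of the unit circle: the corner control point is weighted by cos(45°) = sqrt(2)/2.
QUARTER_CTRL = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
QUARTER_WEIGHTS = [1.0, sqrt(2.0) / 2.0, 1.0]

if __name__ == "__main__":
    worst = max(abs(hypot(*rational_bezier(QUARTER_CTRL, QUARTER_WEIGHTS, k / 100)) - 1.0)
                for k in range(101))
    print(worst)  # rounding-level: every sample lies on the unit circle
    # With all weights equal to 1 the same polygon gives an ordinary parabola instead:
    print(hypot(*rational_bezier(QUARTER_CTRL, [1.0, 1.0, 1.0], 0.5)))  # about 1.0607
```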

Adoption, tooling and the long shadow of naming

Industrial uptake and software implications

Automotive and aerospace manufacturing adopted these curve representations because they bridged the gulf between clay and cutter. At Renault, Bézier’s UNISURF connected studio surfaces to die‑making and inspection workflows, reducing translation losses between departments. In practice, the convex hull property and predictable tangency control aided tolerance studies and highlight analysis for body panels. NC programming depended on continuous, well‑behaved paths. It benefited from parametric curves that could be subdivided adaptively for feed rate control and gouge avoidance. Across the industry, similar thinking infused early surfacing modules in systems like CADAM, CATIA (developed at Avions Marcel Dassault and later marketed by Dassault Systèmes), SDRC I‑DEAS, and Unigraphics (later Siemens NX).

Integration into CAM pipelines hinged on robust evaluation and offsetting. The de Casteljau algorithm enabled stable sampling for toolpath generation. Bézier and B‑spline forms allowed curvature‑based refinement and consistent surface normals for finishing cuts. Standards and shared kernels accelerated uptake:

- IGES provided entities for rational and non‑rational splines, allowing supplier networks to exchange geometry without re‑fitting.

- STEP extended and modernized the exchange stack, codifying NURBS surfaces and trims that define solid boundaries in boundary‑representation (B‑rep) kernels.

- Kernel vendors, notably Spatial (ACIS) and Siemens (Parasolid), embedded spline evaluation, intersection, and trimming tools, so application developers could trust consistent behavior across modelers.

The result was a virtuous loop: predictable geometry led to reliable tooling, which encouraged more ambitious surfacing, which in turn justified further investment in spline‑centric CAD/CAM infrastructure.

Cross‑domain influence

What began in car bodies quickly permeated digital media. Adobe’s PostScript, created by John Warnock and Chuck Geschke in the 1980s, used cubic Bézier curves as the backbone of page description, enabling scalable outlines for typography and vector art. Type 1 fonts and later OpenType CFF used cubic Béziers; Apple’s TrueType favored quadratic Béziers for efficient rasterization. The World Wide Web Consortium (W3C) standardized Bézier path commands in SVG, making the same control‑point editing metaphors ubiquitous in browsers and design tools. Applications from Adobe Illustrator and Inkscape to Figma and Sketch expose path handles that are, in essence, control points with tangent constraints.

In computer graphics and animation, spline curves and surfaces provided smooth camera paths, character rigs, and deformation lattices. Pixar’s RenderMan, Maya, and Houdini leaned on B‑spline and NURBS toolsets before subdivision surfaces became popular; even then, the conceptual lineage—control meshes, limit surfaces, and subdivision rules—echoes the same principles. Game engines rely on Bézier splines for UI curves, motion paths, and particle trajectories. The cross‑pollination continued in scientific visualization and medical imaging, where spline models fit anatomical contours and drive registration. In each domain, the payoff is identical: intuitive shape control, stable evaluation, and a well‑understood set of operations for refinement and continuity.

Why “Bézier” stuck as the common name

Names crystallize around stories, and Pierre Bézier’s story had a public stage. He published, demonstrated, and linked his curves to a flagship industrial deployment at Renault, making “Bézier” a shorthand that authors, educators, and vendors could cite. Paul de Casteljau’s contributions remained enclosed within Citroën for years, delaying recognition of the algorithm that, in practice, many systems run under the hood for evaluation and subdivision. When early CAD documentation, standards drafts, and textbooks needed a label, they reached for the visible brand. Over time, “Bézier curve” became a metonym for the representation, while the computation itself colloquially carried de Casteljau’s name primarily among specialists.

Other forces reinforced the eponym:

- Developer ergonomics: APIs exposed “BezierCurve” classes while hiding evaluation mechanics.

- Educational momentum: textbooks by David F. Rogers, Gerald Farin, and Les Piegl & Wayne Tiller used the Bézier label broadly, even while crediting de Casteljau’s algorithm.

- Standards inertia: IGES and STEP entity names and vendor documentation normalized the terminology.

- Cross‑domain echo: Adobe, Apple, and W3C specifications repeated “Bézier,” cementing it in graphics and typography.

The outcome is a duality: the public name celebrates Bézier’s advocacy and polynomial framing, while practitioners acknowledge de Casteljau’s algorithm as the workhorse for numerical stability and subdivision. Both are indispensable to the method’s success.

Key people and organizations

Many contributors shaped the ecosystem that elevated these curves from equations to everyday infrastructure. A non‑exhaustive guide helps map the terrain and underscores how mathematical ideas gain traction through tooling, teaching, and standards:

- Pierre Bézier (Renault): Advocate of polynomial curve/surface representations and architect of UNISURF, linking studio shape to industrial tooling.

- Paul de Casteljau (Citroën): Originator of the recursive evaluation and subdivision algorithm that ensures robust computation.

- Sergei Bernstein: Father of the Bernstein polynomial basis, providing nonnegativity and partition‑of‑unity properties foundational to geometric intuition.

- Isaac Jacob Schoenberg: Introduced B‑splines with local support, which enabled modular editing and efficient algorithms.

- Carl de Boor and Michael J. D. Powell: Advanced spline algorithms and analysis; the Cox–de Boor algorithm remains central to B‑spline evaluation.

- Steven Anson Coons: Proposed Coons patches, inspiring surface composition techniques in industrial design.

- Ken Versprille: Pioneered NURBS in the 1970s, bridging exact conics with freeform design through weighted control points.

- Textbook authors and educators: David F. Rogers, Gerald Farin, and Les Piegl & Wayne Tiller codified practice and disseminated algorithms to engineers and developers.

- CAD vendors: Dassault Systèmes (CATIA), Siemens (NX/Parasolid), PTC (Pro/ENGINEER/Creo), Autodesk (Alias, AutoCAD), and Spatial (ACIS) operationalized spline modeling in kernels and applications.

- Graphics and typography: Adobe (PostScript, Type 1, PDF), Apple (TrueType), and the W3C (SVG) exported Bézier idioms to publishing and the web.

- Standards: IGES and ISO 10303 (STEP) stabilized exchange formats around B‑splines and NURBS, enabling global supply‑chain interoperability.

Together, these actors transformed a mathematically elegant representation into a universal language of shape, embedded in machines, documents, and pixels worldwide.

Conclusion

Dual‑origin story: algorithms and advocacy

The history of the curves we habitually call “Bézier” is a dual‑origin story that pairs mathematical equivalence with divergent visibility. Pierre Bézier supplied the explicit polynomial framing, anchored it in Renault’s end‑to‑end surfacing practice, and brought it to the public through publications and talks. Paul de Casteljau delivered the geometric engine—the recursive interpolation scheme that evaluates, subdivides, and stabilizes the very same curves. Both approaches describe the identical object: a control‑point‑driven parametric curve in the Bernstein basis, endowed with the convex hull property, straightforward boundary control, and intuitive geometric behavior. The difference lies not in the shape but in the path each idea took into the world. Bézier’s name traveled through corporate endorsement, standards, and vendor documentation; de Casteljau’s algorithm spread among practitioners who needed reliable computation, earning respect for its elegance and robustness.

Recognizing this duality enriches our understanding of how foundational techniques emerge. It reminds us that the eponym we recite encapsulates advocacy and timing as much as authorship. For the engineering and design communities, the practical upshot is unambiguous: the combination of polynomial analysis and geometric recursion gave industry a toolset that aligned intuitions, satisfied numerical constraints, and scaled across use cases. The two protagonists, operating in parallel across rival automakers, converged on a shared solution that would outlast their immediate corporate contexts and knit together disciplines from manufacturing to media.

Lasting legacy: from CAD kernels to digital culture

The legacy of this work pervades modern design and computation. In CAD, B‑spline and NURBS generalizations of Bézier ideas underpin boundary‑representation modeling, associativity in parametric workflows, and robust interoperability via IGES and STEP. CAM pipelines rely on the de Casteljau algorithm and its descendants for adaptive sampling, offsetting, and collision‑aware toolpath generation. In typography and vector graphics, cubic and quadratic Bézier curves make scalable outlines inexpensive to store and render, shaping everything from logos to user interfaces. In computer graphics, the same principles of control points, convex combinations, and stable evaluation underlie character rigs, deformations, and rendering pipelines. The concepts are now part of the cultural substrate of design: when a designer drags a handle in a path editor, they are engaging directly with century‑old approximation theory modernized by two mid‑century engineers.

There is also a broader lesson about innovation pathways. Corporate context can throttle or amplify visibility; publication choices steer pedagogy and tooling; standards codify names and cement memory. The triumph of this curve family illustrates that excellence in algorithms is necessary but not sufficient—adoption requires advocacy, integration into workflows, and alignment with the constraints of manufacturing and computation. As new frontiers—implicit modeling, isogeometric analysis, AI‑assisted design—unfold, the story of Bézier and de Casteljau suggests a pragmatic compass: pair mathematically robust cores with representations that designers can reason about, ensure numerical stability and locality, and cultivate an ecosystem of tools and standards. Do that, and ideas graduate from papers to practice, becoming the invisible infrastructure of how we shape the world.
