Q&A with Mihai Iliuta: Studio Lighting Techniques Using Maxwell Render

November 14, 2012 10 min read

Last week's webinar on Maxwell Render was a great success! Thank you to all who attended the session live. So many of you had questions that even running past our usual one-hour limit wasn't enough. Mihai Iliuta kindly agreed to answer all the remaining questions on our blog.

Q: If I stopped a rendering, how can I later continue the rendering?

A: This was answered in the webinar, at 1:13:20. For more info see also our docs at: http://support.nextlimit.com/display/maxwelldocs/How+to+resume+a+render

Q: Can Maxwell handle UV-islands in the materials?

A: Maxwell gets whatever UVs the main application gives it. It can also handle multiple UV sets and materials per object.

Q: Maxwell works based on cores, does it necessarily need any video card? Q: Can you mention the specifications of the PC you currently have? Q: What is your hardware configuration? Q: Can you talk about a good hardware setup for Maxwell (RAM/CPU/video card, etc.)?

A: Maxwell only uses the CPU(s) so the faster the CPU the faster the render. My config is an i7 2600K so really a middle range machine by today's standards. Please see our Benchwell site (http://www.maxwellrender.com/index.php/benchwell) where our users have benchmarked their computers to get an idea of what computer to buy. You can also run this benchmark test on your computer by opening Maxwell.exe (not Studio) and going to "Tools>Run Benchwell".

Q: Do you use any special material inside of the modeled reflectors? Q: Do you use an SSS material for a diffuser?

A: I just set the inside of it to pure black because if it was white you would get more light bouncing in there which would slow down the rendering a bit and the light probably wouldn't be as focused. But it's not super important. A diffuser with SSS is probably overkill although it would work (use thinSSS and a single sided plane for faster renders in this case), so an HDR emitter or simply reflecting light off of a white solid plane would be sufficient.

Q: Hi, many thanks for the tutorial. Can you roughly say how much increasing resolution affects render time, as the coffee machine was only 700 by 700 pix?

A: If you double the resolution you quadruple the number of pixels so it will take 4X longer to render. This is the same for all renderers though.
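As a rough illustration of that rule of thumb, here is a minimal sketch that estimates render time from pixel count. It assumes render time scales linearly with the number of pixels at a fixed noise level; the function name and the 10-minute baseline are made up for the example.

```python
def scaled_render_time(base_minutes, base_res, new_res):
    """Estimate render time, assuming it scales linearly with pixel count."""
    base_pixels = base_res[0] * base_res[1]
    new_pixels = new_res[0] * new_res[1]
    return base_minutes * new_pixels / base_pixels


# Hypothetical baseline: a 700 x 700 render that took 10 minutes.
# Doubling both dimensions quadruples the pixel count.
print(scaled_render_time(10.0, (700, 700), (1400, 1400)))  # 40.0
```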

Q: How can we achieve Chromatic Aberration?

A: There is a switch in the material editor to turn this on for transparent materials. It does add to the render time though so use it if you really want a material that shows this. If it's a high grade (pure) glass, they usually have almost unnoticeable aberration. More info: http://support.nextlimit.com/display/maxwelldocs/Transmissive+Properties

Q: Does the camera model choice affect the image besides proportion, is there any difference using a Canon or a Nikon?

A: Choosing a camera make changes the sensor width/height, which in turn affects what the "real" focal length of a lens is. We added this functionality to make it easier to match a render with a photo for compositing purposes: you simply enter the real sensor width/height of the camera that took the photo, then enter the focal length of the lens, and the render and photo will match up. Other than this, camera make won't have any influence on render quality or look.
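To see why sensor size changes the framing for the same lens, here is a small sketch using the standard pinhole field-of-view formula, FOV = 2 * atan(sensor_width / (2 * focal_length)). The function name and the sensor widths are illustrative assumptions, not values taken from Maxwell's camera presets.

```python
import math


def horizontal_fov_degrees(sensor_width_mm, focal_length_mm):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Same 50 mm lens on two sensor widths (illustrative values):
# a 36 mm wide full-frame sensor and a roughly 23.6 mm wide APS-C sensor.
full_frame = horizontal_fov_degrees(36.0, 50.0)
aps_c = horizontal_fov_degrees(23.6, 50.0)
print(f"full frame: {full_frame:.1f} deg, APS-C: {aps_c:.1f} deg")
```

The smaller sensor sees a narrower angle through the same lens, which is why the sensor size has to match the real camera before a render will line up with a photo.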

Q: Do you try to capture the gridded cells of a gridded soft box? Or do you always sink your large soft box into a single box cell as you did today?

A: It depends on how focused you want the light to be. You could of course model a grid and you'll get a bit more focused light. The nice thing in Maxwell is because it's so precise, you can take any real photography technique and use it in Maxwell.

Q: If I don't want monochromatic gray/white light, but I like HDR image emitters, how do I introduce color temperature to my HDR?

A: Just add some color to the gradient, it will work just as well. The important thing when working with 32bit high dynamic range is the 32bit RGB value of the color picker. Try to stay within 0 – 1.0 when picking a range of colors. If you go higher when drawing the bright gradient you will get a central light source that is too strong. Depends on the look you want of course.

Q: Can you use the null point trick in Maxwell studio?

A: No, there is no constraint functionality in Maxwell Studio. In most modeling applications though there is. For example even Solidworks has "Mates" with which you should be able to achieve the same thing.

Q: Any tips specific for jewelry?

A: Some tips are mentioned at the end, at 1:06:05

Q: When you do studio lighting do you turn the sky off?

A: Unless you want to mimic taking studio photos in bright daylight (which wouldn't make sense), the sky is off. The sky is a MUCH stronger light source than studio lights, but you could for example block the light from the physical sky a bit to better mix it with studio lighting.

Q: How do you prefer to show lighting from within building windows in shooting an exterior night scene for showcasing architectural work?

A: I found that it works really well to take photos of lit windows at night, then turn them into HDR emitters either by saving the cropped photo in PS as 32b HDR/EXR or turning it into an MXI using Maxwell (File>Open Image, then File>Save as MXI). Then load them into an emitter material. They will emit light based on the colors in the photo so it creates a very realistic effect.

Q: How do you get a soft reflex on the bottle's front? You had a hard reflex in the demo, but a soft one in your real rendering?

A: If by soft you mean it was semi transparent, I just lowered the intensity of the emitter. You could also get a soft edged effect by replacing the emitter with just a white reflector surface which will catch the light coming from the background but not be illuminated equally so it will create a faded reflection effect.

Q: Will the multi light feature work in Photoshop when you import Maxwell's native render into Photoshop?

A: Yes, when you open an MXI with our Photoshop plug-in, each light will be in its separate layer. The layers though are not ready to be used as Multilight, and we have a PS action which you apply to each layer to get the same functionality. More info here: http://support.nextlimit.com/display/maxwelldocs/PS+action

Q: What was the Maxwell light type you used when generating your custom lights?

A: I used either Lumens (instead of the default watts/efficacy), or "Image based" emitters which allow me to load a high dynamic range image format (HDR/EXR/MXI) which will emit light.

Q: I always have problems with adjusting DOF. Do you have any tips for this?

A: This is affected first of all by the aperture of the lens (fStop) and to a certain degree by the focal length of the lens. More info here: http://support.nextlimit.com/display/maxwelldocs/Camera. I also really suggest looking into some basic photography information that covers this. What you learn from that is directly applicable in Maxwell.

Q: Can you do a simple interior scene with lighting from "outside"… Q: What is the quick scene setup for interior scenes with "Meshlights" from "outside", so that I don't need thousands of watts to light the room…

A: Change the exposure of the camera until it looks good. fStop, ISO, Shutter speed all affect the exposure of the camera: http://support.nextlimit.com/display/maxwelldocs/Camera

Q: Is there a repository for hdri image light maps?

A: No, not at the moment. But it takes just a few minutes to create them in PS.

Q: How to create that flat color image again?

A: This starts at 0:19:35

Q: Terrific seminar! Any tips on "filmback" setting in Studio?

A: This is only really useful for easier matching of your render with a photo. Just enter the sensor width/height of your camera, or choose one of the camera maker presets.

Q: Can you clarify the relationship between surface subdivs and light intensity?

A: Subdivisions of the emitting surface don't affect light intensity. It's just that if you use a one million polygon emitter surface, it will render a bit slower than a 1 polygon plane emitter.

Q: Do you typically find it helpful to post process with multi light layers or more trouble than it's worth?

A: Yes it can be very useful, especially with clients that aren't sure what look they want. Being able to change each light in post is a great time saver.

Q: Object ID / Material ID masks are noisy at the edges if you choose the magic wand – how can I avoid this noise and get a clean mask?

A: You shouldn't get noise if the objID/MatID are sufficiently clean. Remember that these channels also take into account the DOF blurring of your main render, otherwise they would be a bit useless. So if you get noisy edges in these areas, you have to let the render run until they are cleaner.

Q: Which SL level should I have? I also read that the color for those masks should be full RGB (full red, full blue, ...) colors to get pixel-true masks. Is that right?

A: Most renders will look pretty much clean by SL 18, especially exterior renders. Interiors will take longer, and SL of 20 might be needed. I very rarely go over SL 20. Moreover, there's no point in getting too obsessed if there is a tiny bit of noise left. Even digital photos taken at ISO 100 will have a bit of noise. With a pure RGB color for the objID/matID you will get a better selection in PS and you can change these colors per object or per material from the material settings or the object properties. Where these are depends on your plugin. All the plugin docs can be found here: http://support.nextlimit.com/display/maxwelldocs/Plug-ins

Q: What is faster to render on Maxwell – emitters or image based lighting?

A: It's not a clear case and depends more on the type of scene you have, for example whether it has many highly reflective, slightly rough objects which cause a lot of light bounces. In general though, if the HDR has a lot of big even lighting, it will render faster. But the same is true if you use a large emitter that lights your object from many directions.

Q: I would like to ask about glass and liquid interaction. What is the correct setup for faces of meshes? I mean, liquid mesh should be scaled down, so there will be small air gap between glass and liquid? Q: Hi Mihai, would be nice to see a correct setup for rendering liquids in glass in Maxwell, in the forum are three different setups, but which one is the right one? Q: How did you simulate the liquid inside the bottle?

A: See image below. The important thing is that the normals of the liquid are pointing outwards. Leave also a small gap between the liquid and the glass at the top.

Q: HDRI Studio vs. physical studio light. Any rendertime differences? Q: Is there any difference in image quality using a Maxwell emitter vs. an HDR image (from HDR Light Studio)?

A: It's not a clear answer but in general, the lighting from an HDR is more even and so it lights the object from many directions, which can decrease render time. The main advantage of HDR Light Studio is in the scene setup time – you can very quickly set up the lighting very precisely, plus each time you move a light in the virtual HDR sphere, you don't have to wait for Maxwell to re-export and re-voxelize the scene, which happens when you move real emitter geometry in your scene. There is no image quality difference per se – but when using HDR lighting remember that all your light sources are really at the same distance from your subject, so you can't really use the light falloff law (as mentioned in the webinar) to your advantage.
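For reference, the falloff in question is the inverse-square law. The sketch below assumes an idealized point-like source (large area emitters deviate from this at close range), and the function name is made up for the example.

```python
def relative_illuminance(distance, reference_distance=1.0):
    """Illuminance relative to its value at reference_distance,
    assuming an idealized point source (inverse-square falloff)."""
    return (reference_distance / distance) ** 2


# Moving a light from 1 m to 2 m leaves a quarter of the light on the subject.
print(relative_illuminance(2.0))  # 0.25
```

With real emitter geometry you can move a light closer or farther to control how quickly it falls off across the subject. With an HDR environment every light sits at the same effective distance, so that control is lost.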

Q: How would you best render with the "camera" UNDER water looking out towards the surface of the water? Q: Would that be the normals facing the camera in the bubble when taking image from inside water?

A: The main thing is that you need to tell Maxwell it's already inside water. So you need to place the camera perhaps inside an "airbubble" and this way there will be some refraction difference when rays go from air>water and water>air. Try placing the camera inside a sphere to which you apply a transparent material with an ND lower than water (so below 1.33). I would think the best way is to model this bubble with a real small thickness, in which case the normals won't matter.
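The refraction difference at each air/water boundary follows Snell's law, n1 * sin(theta1) = n2 * sin(theta2). Here is a minimal sketch of that relationship, taking 1.33 for water as in the answer above and assuming 1.0 for air. The function name is hypothetical, and this is not how Maxwell computes refraction internally.

```python
import math


def refraction_angle_degrees(incidence_deg, n_from, n_to):
    """Refracted angle from Snell's law, or None on total internal reflection."""
    s = n_from / n_to * math.sin(math.radians(incidence_deg))
    if abs(s) > 1.0:
        return None  # no transmitted ray: total internal reflection
    return math.degrees(math.asin(s))


AIR, WATER = 1.0, 1.33  # AIR = 1.0 is an assumed value

# A ray entering water bends towards the surface normal.
print(refraction_angle_degrees(45.0, AIR, WATER))
# A steep ray trying to leave the water is reflected back instead.
print(refraction_angle_degrees(60.0, WATER, AIR))  # None
```

The second case is why an underwater camera looking up sees the sky only through a limited cone, with the rest of the surface acting like a mirror.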

Q: Can you use the object or material ID to influence the reflections of the material such as the red reflections? Or is this something that would have to be done in PS? Q: When you changed the hue saturation of the coffee machine, the reflection did not change. It seems there was no way to change the reflection.

A: You can't adjust reflections with those channels. You do have other channels such as Roughness and Fresnel which can be useful, but it's not possible to completely separate diffuse from reflections in one render. You would have to do two renders: one with the Type in the render options set to "Diffuse", the other with it set to "Reflections". But it's impossible to always get a complete separation because for semi rough surfaces, what is really diffuse and what is really "reflection"? For Maxwell, as in reality, all light is really "reflected" light. It's just a matter of how diffusely or specularly that light is reflected that gives…"reflections".

Q: Is it better to use a roughness of 1 or to use a bumpmap for the roughness?

A: "Better" or "worse" depends entirely on the look you want. In some materials you can actually see the individual bumps if you get in close, so in that case a bump map or normal map would work well. In others, they look a bit diffuse and the bumps are really tiny even when close up so roughness would just be more convenient in this case.

Q: Do you have any advice on working with IES files with Maxwell emitters.

A: Apply the IES emitters to a small sphere (around 5cm) – this is the best way to mimic how the IES profile was actually created from a real light source (they put light sensors in a spherical shape around the light source to measure its strength and shape). More info: http://support.nextlimit.com/display/maxwelldocs/IES+emitters

Q: What does lock exposure mean?

A: It means you lock the exposure to a certain value, even if you change for example the fStop, which affects BOTH the depth of field and the exposure. There are three camera parameters that affect the exposure: fStop, Shutter speed, ISO. So for example if you want to render really small objects in focus, you need to increase the fStop to get a wider depth of field but this will also change the exposure. Instead, lock the exposure, change the fStop and Maxwell will automatically change the Shutter speed to compensate for the loss of light reaching the "sensor", because of the increased fStop.
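The compensation can be sketched with the standard photographic relationship, in which exposure is proportional to shutter time * ISO / fStop². This only illustrates the arithmetic, on the assumption that ISO stays fixed. The function name is made up, and how Maxwell implements the lock internally is not covered here.

```python
def compensated_shutter_time(shutter_time_s, old_fstop, new_fstop):
    """Shutter time that keeps exposure constant after an fStop change,
    assuming ISO is unchanged (exposure ~ time * ISO / fStop**2)."""
    return shutter_time_s * (new_fstop / old_fstop) ** 2


# Going from f/4 to f/8 cuts the light to a quarter,
# so the shutter has to stay open four times as long.
new_time = compensated_shutter_time(1 / 100, 4.0, 8.0)
print(f"{new_time:.3f} s")  # 0.040 s
```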

Also in NOVEDGE Blog

Enhance Your Designs with VisualARQ 3: Effortless Geometry Extensions for Walls and Columns

April 30, 2025 8 min read

Read More

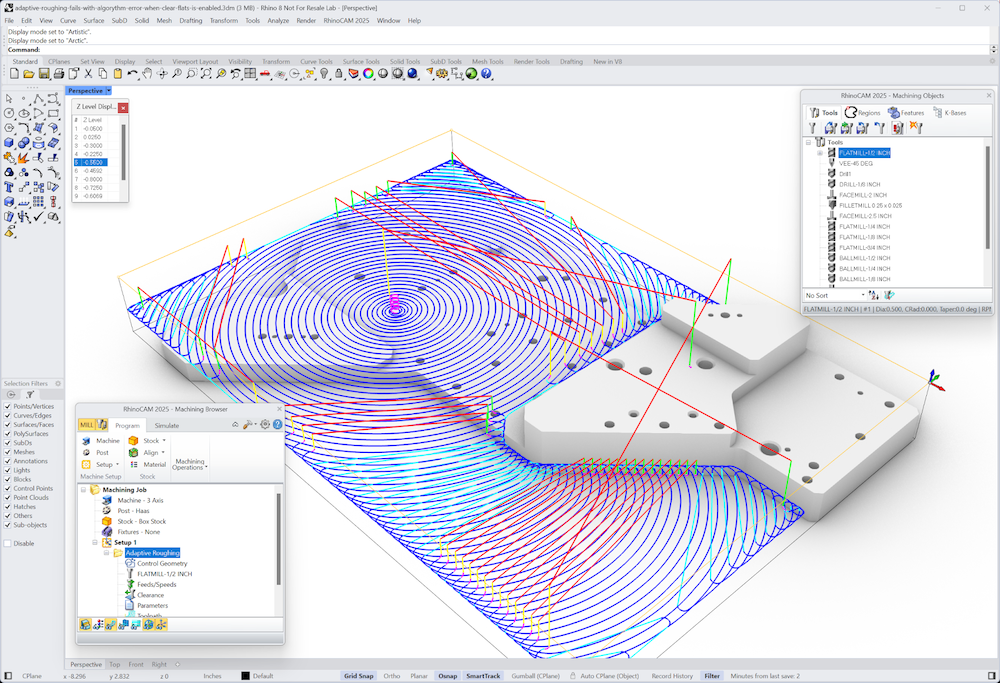

MecSoft Unveils RhinoCAM 2025 and VisualCAD/CAM 2025 with Enhanced Features

March 08, 2025 5 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …