Your Cart is Empty

Customer Testimonials

-

"Great customer service. The folks at Novedge were super helpful in navigating a somewhat complicated order including software upgrades and serial numbers in various stages of inactivity. They were friendly and helpful throughout the process.."

Ruben Ruckmark

"Quick & very helpful. We have been using Novedge for years and are very happy with their quick service when we need to make a purchase and excellent support resolving any issues."

Will Woodson

"Scott is the best. He reminds me about subscriptions dates, guides me in the correct direction for updates. He always responds promptly to me. He is literally the reason I continue to work with Novedge and will do so in the future."

Edward Mchugh

"Calvin Lok is “the man”. After my purchase of Sketchup 2021, he called me and provided step-by-step instructions to ease me through difficulties I was having with the setup of my new software."

Mike Borzage

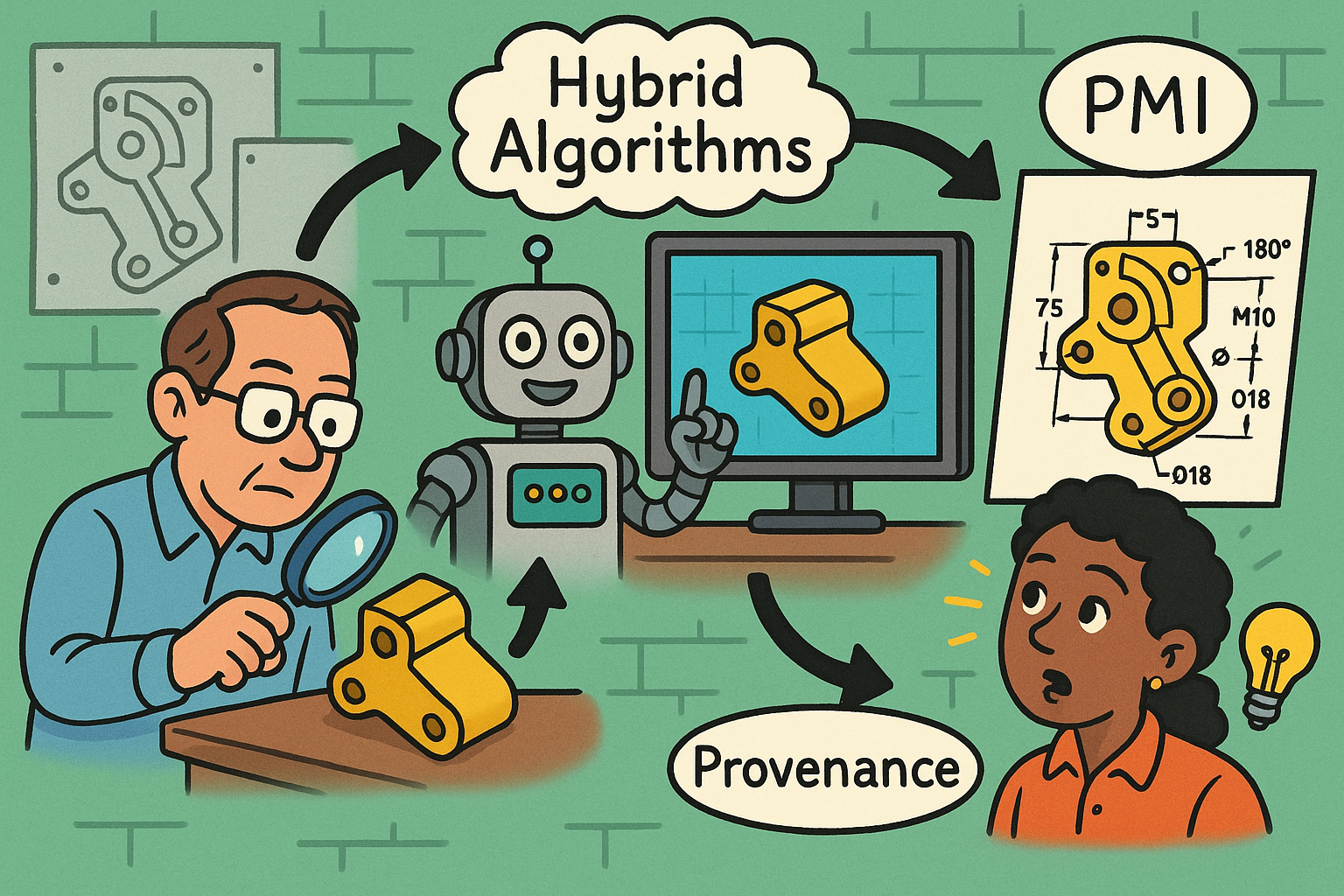

Recovering Design Intent in Legacy Parts: Hybrid Algorithms, PMI, and Provenance

October 29, 2025 13 min read

Why Intent Matters in Legacy Parts

The problem space

Teams inherit millions of legacy geometries—neutral CAD files, triangulated meshes, or scans—that lack the vital semantics that once governed how they were designed and manufactured. When a model arrives as STEP without PMI, an STL mesh, or a raw point cloud, the **design intent** that defined functional relationships evaporates. Edges and faces are preserved only as static shapes; there are no constraints to safeguard dimensions, no feature order to explain precedence, and no tolerance schemes to guide inspection. As a result, downstream processes become fragile. Engineers discover that geometry is effectively un-editable, that a small change to one area ripples unpredictably, and that **tolerance drift** creeps in when regenerations are improvised. Manufacturing planning is forced to infer tool accessibility and setup choices from pure shape, often leading to costly rework. Compliance teams must extrapolate GD&T or process notes, introducing risk into safety-critical records and audits. Over time, these gaps accumulate as technical debt: recurring engineering change orders, duplicated parts with trivial variations, and ad hoc vendor-specific tweaks that are impossible to generalize. The net effect is a slow erosion of confidence in the digital master, longer lead times, and a measurable increase in material scrap and validation overhead.

Practitioners can reduce this risk by treating legacy shapes not as inert surfaces but as incomplete narratives. That mindset shift—seeing geometry as evidence rather than ground truth—opens a systematic path to recover intent: heal and canonicalize the shape, reconstruct features and constraints, estimate parameters with uncertainty bounds, and reattach manufacturability semantics. Concretely, focus on:

- Mitigating lossy import effects with robust stitching and tolerance unification.

- Restoring editability through feature and sketch recovery backed by constraint solvers.

- Reducing manufacturing uncertainty by inferring process assumptions, tool reachability, and setup orientation.

- Re-establishing compliance by generating PMI, attaching traceable provenance, and aligning to inspection standards.

What “intent” means

“Intent” encodes how a part should behave under change, how it is fabricated, and how it is verified. Geometric intent specifies the shape-building elements and their parameters, while topological intent captures how those elements connect. Constraint intent describes the relationships that keep the design stable across edits. Process semantics capture the manufacturing and inspection rationale that gives context to the numbers. Consider four intertwined strata:

- Geometric intent: recognizable features (holes, pockets, slots, blends, drafts), parameterized by diameters, depths, angles, and radii; symmetry planes and circular patterns; layout references such as datum frames and coordinate systems.

- Topological intent: adjacencies and face loops that demarcate pockets and ribs; shelling operations that create interior cavities; sheet-metal developability and relief features that ensure flat pattern feasibility.

- Constraint/parametric intent: sketches with driving dimensions, equations, equalities, and design rules; GD&T relationships that bound allowable variation; relational groups that ensure **regeneration robustness** when edits cascade.

- Process semantics: cues indicating milled versus cast versus bent or additively built; inferred tool accessibility and cutter diameters from fillet spectra; setup assumptions like primary datum selection and fixturing faces.

Inputs and targets

Intent recovery begins with whatever a team actually has. Inputs come in three principal forms:

- B-Rep solids from neutral or proprietary kernels (Parasolid, ACIS, Open Cascade) that retain analytic faces but may lack features and PMI.

- Polygonal meshes (STL/OBJ) common from additive workflows or legacy archives, where curvature is approximated by triangles and analytic primitives are lost.

- Point clouds from scanning, which require surface reconstruction before any feature inference is plausible.

The recovery targets are:

- Editable parametric models with a feature tree that captures operations and their order.

- Annotated PMI aligned to standards like STEP AP242 and QIF for GD&T, with datums and tolerances attached to the right faces and edges.

- Uncertainty bounds on inferred dimensions and parameters, allowing engineers to understand confidence and trigger review where needed.

Success criteria

To judge whether intent has truly been recovered, define measurable standards. Geometry overlap is necessary but insufficient: a perfect surface replica without constraints is brittle. Instead, define a multi-axis rubric:

- Feature recall and precision: how many true features were found, and how many detections are correct? Evaluate on a curated set with feature labels.

- Parameter accuracy: mean absolute error (MAE) and confidence intervals for diameters, depths, and radii versus ground truth or authoritative drawings.

- Constraint solvability: rate at which reconstructed sketches solve without over/under-constrained warnings; minimal constraints that yield stable regeneration.

- Regeneration robustness: success over model edits, including feature suppression and reorder operations, across kernel tolerance windows.

- Manufacturability checks: toolpath feasibility for pockets and holes, bendability for sheet metal, and accessibility cones that match reasonable fixtures.

- Traceable provenance: every inference linked to evidence—faces, edges, rules, and learned model scores—to support auditability.
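The first two criteria reduce to a few lines of arithmetic. The sketch below is a minimal version that assumes each feature is identified by a hashable key (for example a hypothetical `(kind, face_id)` tuple) so predictions and labels can be matched by identity, and that parameters are keyed by hypothetical names such as `"h1.d"`. Real evaluations usually need a fuzzier matching step (face-set overlap) before counting true positives.

```python
def feature_prf(predicted, truth):
    """Precision, recall and F1 over features matched by identity (hashable keys)."""
    predicted, truth = set(predicted), set(truth)
    tp = len(predicted & truth)  # detections that correspond to a labeled feature
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(truth) if truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def parameter_mae(estimates, truth):
    """Mean absolute error over parameters present in both dicts."""
    keys = estimates.keys() & truth.keys()
    if not keys:
        raise ValueError("no shared parameters to compare")
    return sum(abs(estimates[k] - truth[k]) for k in keys) / len(keys)


pred = {("hole", 1), ("hole", 2), ("pocket", 3), ("slot", 4)}
gold = {("hole", 1), ("hole", 2), ("pocket", 3), ("fillet", 5)}
print(feature_prf(pred, gold))  # 3 of 4 correct, 3 of 4 found
print(parameter_mae({"h1.d": 6.02, "p3.depth": 10.1}, {"h1.d": 6.0, "p3.depth": 10.0}))
```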

Algorithms for Feature Recognition and Intent Inference

Geometry- and topology-driven baselines

Robust intent recovery starts with classical geometric reasoning. On B-Rep inputs, face classification via curvature and ruling identifies planes, cylinders, cones, tori, and freeform NURBS surfaces. Using G1/G2 continuity thresholds, you can separate analytic faces from freeform surfaces and cluster fillet bands. With this palette of primitives, graph algorithms over the B-Rep’s face-edge structure extract features. Subgraph isomorphism detects recurring patterns such as slots, pockets, and stepped faces; coaxial and coplanar tests uncover hole stacks and planar arrays. Loop classification distinguishes inner from outer loops to separate islands from voids, which is crucial for pocket recognition. Attribute grammars then encode the schemas of features (e.g., a counterbored hole as two coaxial cylindrical faces of different radii joined by a planar annular floor), and rule engines enforce recognition order and suppression.

- Face type detection: principal curvature analysis, best-fit primitives, and residual error checks.

- Adjacency graph mining: pattern templates for common features, topological signatures for ribs and webs.

- Rule scheduling: precedence constraints that peel fillets and chamfers first to expose parent features, then place them last in the recovered feature order.
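The coaxial test and a radius-relationship rule can be sketched in a few lines. This is a toy under stated assumptions: `CylFace` is a hypothetical, pre-extracted record (a point on the axis, a unit axis direction, a radius) rather than any kernel's API, the tolerances are arbitrary, and the rule checks only the two cylinders of a counterbore, not the annular floor or loop structure a production grammar would also require.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CylFace:
    """Hypothetical simplified cylindrical face extracted from a B-Rep."""
    face_id: int
    origin: tuple[float, float, float]  # any point on the axis
    axis: tuple[float, float, float]    # assumed unit length
    radius: float


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _norm(v):
    return math.sqrt(sum(c * c for c in v))


def coaxial(f, g, ang_tol=1e-6, dist_tol=1e-4):
    # Axes must be parallel or antiparallel (cross product close to zero)...
    if _norm(_cross(f.axis, g.axis)) > ang_tol:
        return False
    # ...and g's axis point must lie on f's axis line (distance = |d x axis|).
    d = tuple(go - fo for go, fo in zip(g.origin, f.origin))
    return _norm(_cross(d, f.axis)) <= dist_tol


def group_coaxial(faces):
    """Bucket faces into coaxial stacks by comparing against each group's first face."""
    groups = []
    for f in faces:
        for grp in groups:
            if coaxial(grp[0], f):
                grp.append(f)
                break
        else:
            groups.append([f])
    return groups


def find_counterbores(faces, min_ratio=1.2):
    """Toy rule: a coaxial stack whose largest radius exceeds the smallest by min_ratio."""
    hits = []
    for grp in group_coaxial(faces):
        by_radius = sorted(grp, key=lambda f: f.radius)
        if len(by_radius) >= 2 and by_radius[-1].radius >= min_ratio * by_radius[0].radius:
            hits.append((by_radius[0].face_id, by_radius[-1].face_id))
    return hits
```

A usage example: a radius-3 bore and a radius-5 counterbore on the same Z axis are reported as one hit, while an isolated hole elsewhere is not.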

Sketch and constraint recovery

Recovering sketches transforms static faces into editable intent. The first step is hypothesizing sketch planes: dominant planar faces, symmetry planes, and datum-like constructions built from orthogonal clusters serve as candidates. Edges are projected to these planes and simplified to lines, arcs, and conics with tolerance-controlled fitting. Dimensional clustering groups like distances and radii, “snapping” near-equals to canonical values that reflect drafting intent rather than measurement noise. With geometry in place, a variational constraint inference process proposes parallelism, perpendicularity, concentricity, equal radii, and symmetry constraints. Graph or matroid formulations help compute a minimal, independent set of constraints that yields solvability without over-specification.

- Plane hypothesis: RANSAC over normals, inertia tensors for symmetry, and feature-aligned datum estimation.

- Projection and simplification: tolerance-aware vectorization and canonical rounding for dimension families.

- Variational solving: nonlinear least-squares or SMT-backed solvers to handle exact and inequality constraints.
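Dimensional clustering and snapping can be illustrated with a one-dimensional sketch. It assumes measured values are already extracted, uses simple single-linkage grouping on sorted values (which can chain a slow drift into one cluster, so tolerances matter), and snaps each cluster mean to a hypothetical list of canonical values. Values with no canonical value within tolerance are kept as measured rather than forced.

```python
def cluster_dimensions(values, cluster_tol):
    """Single-linkage grouping: a sorted value joins the previous cluster if within cluster_tol."""
    clusters = []
    for v in sorted(values):
        if clusters and v - clusters[-1][-1] <= cluster_tol:
            clusters[-1].append(v)
        else:
            clusters.append([v])
    return clusters


def snap(value, canonical, snap_tol):
    """Return the nearest canonical value if it is within snap_tol, else the value itself."""
    best = min(canonical, key=lambda c: abs(c - value))
    return best if abs(best - value) <= snap_tol else value


def snap_families(values, canonical, cluster_tol=0.05, snap_tol=0.05):
    """Map each measurement to its cluster mean, snapped to a canonical value when close."""
    snapped = {}
    for cluster in cluster_dimensions(values, cluster_tol):
        mean = sum(cluster) / len(cluster)
        target = snap(mean, canonical, snap_tol)
        for v in cluster:
            snapped[v] = target
    return snapped


radii = [5.02, 4.98, 5.01, 7.9, 12.37]
print(snap_families(radii, canonical=[5.0, 8.0, 10.0, 12.0]))
```

Here the three near-5 radii collapse to one family at 5.0, while 7.9 and 12.37 stay as measured because no canonical value lies within the snap tolerance.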

Manufacturing-intent inference

Shape alone seldom states how a part was meant to be made. A classification layer infers process families (milled, cast, sheet-metal, or additive) by analyzing feature types, fillet radius spectra, typical thicknesses, and draft angles. Milled parts tend to show orthogonal pockets with planar floors, cylindrical holes in standard diameters, and fillets clustered around tool radii; castings exhibit generous blends, drafts relative to a parting direction, and cored cavities; sheet-metal parts show uniform thickness, consistent bend radii (with flat-pattern allowances governed by a K-factor), and bend reliefs; additive components often reveal lattice regions and organic freeform blends. From this context, downstream feasibility checks translate intent into actionable constraints:

- Toolpath-feasible pockets: check minimum cutter diameter, corner accessibility, and residual material with 3+2 or 5-axis reachability.

- Drillable hole stacks: verify concentric sequences, countersink/spotface dependencies, and stack order.

- Stamping/bending lines: detect bend axes, reliefs, and developability limits in sheet metal.
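The minimum-cutter check for a pocket follows from simple geometry: a flat end mill of diameter D leaves an internal corner radius of D/2, so it reaches every corner only if D is at most twice the smallest corner radius, and it must also fit the narrowest opening. The sketch below picks the largest qualifying tool; the cutter catalog, the `Pocket` record, and the 4:1 depth-to-diameter limit are all illustrative assumptions, and real checks would also consider holder clearance and 3+2 or 5-axis approach directions.

```python
from dataclasses import dataclass

# Hypothetical catalog of flat end-mill diameters (mm); substitute a real tool library.
STANDARD_CUTTERS_MM = [2.0, 3.0, 4.0, 6.0, 8.0, 10.0, 12.0, 16.0]


@dataclass
class Pocket:
    min_corner_radius: float  # smallest internal vertical corner radius (mm)
    min_width: float          # narrowest opening the tool must pass through (mm)
    depth: float              # floor depth below the top face (mm)


def pick_cutter(p, cutters=STANDARD_CUTTERS_MM, max_depth_ratio=4.0):
    """Largest cutter that reaches every corner, fits the width, and respects reach; else None."""
    limit = min(2.0 * p.min_corner_radius, p.min_width)
    for d in sorted(cutters, reverse=True):
        # Larger tools are tried first; a smaller tool only has a worse depth ratio.
        if d <= limit and p.depth / d <= max_depth_ratio:
            return d
    return None


print(pick_cutter(Pocket(min_corner_radius=3.0, min_width=20.0, depth=15.0)))
```

Returning `None` rather than raising keeps the check usable as a flag in a batch report: a pocket with 0.9 mm corner radii, or one too deep for any tool that fits its corners, is simply marked infeasible for review.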

Data-driven approaches

Learning-based models extend coverage to noisy and ambiguous cases where rules struggle. Graph neural networks over face-edge B-Rep graphs (e.g., BRepNet-style encoders) classify faces and propose feature groupings by aggregating local geometry and topology. Mesh and point-cloud inputs benefit from voxel or point convolution networks that capture curvature context and symmetries. Transformer models trained on operation sequences (from synthetic corpora or mined histories) learn priors over feature orders and parameter ranges. In practice, **hybrid symbolic-ML** shines: ML proposes ranked hypotheses, while rule engines and solvers deliver exact parametrization and validation.

- Representation learning: node and edge embeddings for faces and adjacencies; multi-resolution voxel fields for curvature cues.

- Hypothesis ranking: classifiers with calibrated probabilities to prioritize plausible features and constraints.

- Uncertainty quantification: Bayesian heads yield credible intervals, and conformal prediction yields parameter intervals with distribution-free coverage guarantees.
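Split conformal prediction, one standard way to obtain such coverage guarantees, wraps any point regressor. The sketch assumes a held-out calibration set of parts with known parameter values (for example hole diameters from authoritative drawings) whose residuals are exchangeable with the new part's; under that assumption the interval covers the true value with probability at least 1 - alpha, marginally over parts. The regressor itself is not shown; `calib_pred` stands in for its outputs.

```python
import math


def conformal_quantile(residuals, alpha):
    """The ceil((n+1)(1-alpha))-th smallest absolute calibration residual."""
    n = len(residuals)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points for the requested coverage
    return sorted(abs(r) for r in residuals)[k - 1]


def conformal_interval(prediction, calib_pred, calib_true, alpha=0.1):
    """Symmetric interval around a new point prediction using split-conformal residuals."""
    residuals = [t - p for p, t in zip(calib_pred, calib_true)]
    q = conformal_quantile(residuals, alpha)
    return prediction - q, prediction + q


calib_pred = [10.0] * 9
calib_true = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 9.7, 10.05, 10.15]
print(conformal_interval(12.0, calib_pred, calib_true, alpha=0.25))
```

An infinite half-width is a useful signal in its own right: it means the calibration set is too small for the requested coverage, which is a natural trigger for the review queues described later.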

Healing and canonicalization

Before recognition, geometry must be trustworthy. Healing routines stitch and sew faces, close small gaps, and reconcile tolerance mismatches across imported bodies. Canonical axis and frame detection aligns parts to stable references, making symmetry and pattern discovery straightforward. A critical step is fillet and chamfer peeling: blends often obscure parent features, so recognizing and temporarily suppressing them “deflates” the model back to simpler primitives. With parent geometry exposed, base features—extrusions, revolves, and cuts—become recoverable.

- Tolerance unification: normalize unit systems and kernel tolerances to stabilize topology.

- Symmetry discovery: detect mirror and rotational groups to compress hypothesis spaces.

- Order inference: topological sorting of features constrained by precedence rules and geometric dependencies.
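Order inference maps directly onto a topological sort. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+). The feature names, kinds, and geometric dependencies are hypothetical inputs, and the "every fillet or chamfer follows every non-blend feature" edge set is an illustrative precedence rule, not the only reasonable one. Contradictory precedences make `static_order()` raise `graphlib.CycleError`, which is itself a useful signal that a hypothesis set is inconsistent.

```python
from graphlib import TopologicalSorter

BLEND_KINDS = {"fillet", "chamfer"}


def infer_feature_order(kinds, depends_on):
    """Topologically sort features after adding a 'blends last' precedence rule.

    kinds: feature name -> feature kind.
    depends_on: feature name -> set of features it geometrically requires.
    """
    graph = {f: set(depends_on.get(f, ())) for f in kinds}
    base = [f for f, k in kinds.items() if k not in BLEND_KINDS]
    for f, k in kinds.items():
        if k in BLEND_KINDS:
            graph[f].update(base)  # assumed rule: blends come after all base features
    return list(TopologicalSorter(graph).static_order())


kinds = {"base": "extrude", "pocket_1": "pocket", "hole_1": "hole",
         "fillet_1": "fillet", "chamfer_1": "chamfer"}
deps = {"pocket_1": {"base"}, "hole_1": {"base"},
        "fillet_1": {"pocket_1"}, "chamfer_1": {"hole_1"}}
print(infer_feature_order(kinds, deps))
```

Several orders are usually valid; the sorter returns one of them, so any tie-breaking preference (for example, the order in which a legacy drawing lists operations) has to be encoded as additional edges.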

System Architecture and Workflow Design

Pipeline blueprint

An effective system is a disciplined pipeline orchestrating geometry processing, hypothesis generation, validation, and reconstruction. Start by importing and healing: normalize units, deduplicate topology, remove slivers, and reconcile tolerances. For meshes, convert to an implicit or signed distance field to denoise and detect primitives, then fit analytic and freeform surfaces to rebuild a B-Rep that supports analytic recognition. Segmentation groups faces into candidate features using curvature, adjacency, and symmetry signals, generating multiple hypotheses with confidence scores.

- Hypothesis scoring: ML rankers propose priorities; rule validators enforce geometric feasibility.

- Global selection: optimize for a consistent, non-overlapping set of features that maximizes total score while respecting precedence.

- Constraint fitting: iterative solve to estimate parameters under tolerance intervals, with relaxation suggestions when conflicts arise.
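Global selection can be framed as choosing the highest-scoring set of hypotheses whose face sets do not overlap (a weighted set-packing problem). The sketch below is an exact branch-and-bound search under simplifying assumptions: each hypothetical `Hypothesis` claims a fixed set of face ids, scores are additive, and precedence is ignored. Its cost is exponential in the worst case, so it only suits small candidate sets; an integer-programming formulation is a common way to scale the same objective.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    name: str
    faces: frozenset  # B-Rep face ids this feature would claim
    score: float      # e.g. a calibrated ranker probability


def select_features(hyps):
    """Exact maximum-total-score subset with pairwise disjoint face sets."""
    hyps = sorted(hyps, key=lambda h: h.score, reverse=True)
    best = (0.0, [])

    def search(i, used, total, chosen):
        nonlocal best
        if total > best[0]:
            best = (total, list(chosen))
        # Bound: stop if even every remaining positive score cannot beat the incumbent.
        if i == len(hyps) or total + sum(max(h.score, 0.0) for h in hyps[i:]) <= best[0]:
            return
        h = hyps[i]
        if h.score > 0 and not (h.faces & used):
            chosen.append(h)  # branch 1: accept hypothesis i
            search(i + 1, used | h.faces, total + h.score, chosen)
            chosen.pop()
        search(i + 1, used, total, chosen)  # branch 2: reject hypothesis i

    search(0, frozenset(), 0.0, [])
    return best


hyps = [Hypothesis("A", frozenset({1, 2}), 0.9),
        Hypothesis("B", frozenset({1}), 0.6),
        Hypothesis("C", frozenset({2}), 0.6)]
total, chosen = select_features(hyps)
print(round(total, 6), [h.name for h in chosen])
```

The example shows why a global step matters: greedily taking the top-ranked hypothesis A scores 0.9, while the exact search prefers the compatible pair B and C at 1.2.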

Human-in-the-loop tools

Even with strong automation, expert oversight is essential. The UI should surface uncertainty as actionable context, not noise. Uncertainty heatmaps color-code faces and edges by confidence; interactive panels explain “why this feature” with the evidence: primitive fits, adjacency patterns, and learned scores. Engineers can override feature types, edit parameter intervals, or add missing constraints with quick-fix affordances. Regeneration diffs show how a change propagates, highlighting any naming changes or PMI detachments.

- Review queues: rank parts and features where model confidence falls below thresholds.

- One-click corrections: change a constraint's type (e.g., perpendicular to parallel) and re-solve, with provenance captured.

- Explainability: link each inference to faces, rules, and model logits for auditability.
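A review queue and an auditable override can be modeled with immutable records. In this sketch the `Inference` fields, the 0.8 threshold, and the choice to set confidence to 1.0 after a human correction are all illustrative assumptions. The key design point is that an override returns a new record and appends to its provenance history instead of overwriting the original inference, so the machine hypothesis and the human decision both remain inspectable.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class Inference:
    feature_id: str
    kind: str
    confidence: float
    evidence: tuple = ()  # e.g. face ids, rule names, model scores
    history: tuple = ()   # provenance entries: (timestamp, actor, old_kind, new_kind)


def review_queue(inferences, threshold=0.8):
    """Inferences below the confidence threshold, least confident first."""
    return sorted((i for i in inferences if i.confidence < threshold),
                  key=lambda i: i.confidence)


def apply_override(inf, new_kind, actor):
    """Return a corrected copy with the change appended to its provenance history."""
    stamp = datetime.now(timezone.utc).isoformat()
    entry = (stamp, actor, inf.kind, new_kind)
    return replace(inf, kind=new_kind, confidence=1.0, history=inf.history + (entry,))


items = [Inference("f1", "hole", 0.95, evidence=("face:12", "rule:coaxial")),
         Inference("f2", "pocket", 0.40, evidence=("face:7",)),
         Inference("f3", "slot", 0.70)]
queue = review_queue(items)
fixed = apply_override(queue[0], "slot", actor="eng_a")
print([i.feature_id for i in queue], fixed.kind, fixed.history[-1][1:])
```

Logged overrides like these are also the natural source of the "overrides as learning signals" mentioned in the conclusion, since each entry pairs a model hypothesis with an expert correction.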

Interoperability and governance

Recovered intent must travel across tools and organizations without losing meaning. Standards-first design is key: STEP AP242 with PMI ensures geometry and tolerances move together; JT supports lightweight visualization; QIF structures GD&T for inspection planning and metrology. Audit trails link inferences to the precise faces, edges, and rules that justified them, preserving **traceable provenance** for compliance reviews. Kernel strategy deserves equal attention: cross-kernel validation, which regenerates and compares the model in Parasolid, ACIS, and Open CASCADE (OCC), reduces vendor lock-in and exposes tolerance sensitivities early.

- Standards alignment: AP242 PMI entities for datums and feature control frames (FCFs); QIF MBD for measurement plans.

- Cross-kernel tests: golden-model diffs under varying tolerances to detect brittle operations.

- Security/IP controls: on-prem deployment, confidential compute, and redaction pipelines for sharing anonymized geometry and features.
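A golden-model diff can be reduced to comparing regenerated properties against a reference. The sketch assumes each kernel run has already been performed elsewhere and reported a small property dict (volume, surface area, face count); the run keys, values, and the 1e-4 relative tolerance are hypothetical. Integer topology counts are compared exactly, because a changed face count under a looser tolerance is precisely the kind of brittleness these tests aim to catch.

```python
def diff_against_golden(golden, runs, rel_tol=1e-4):
    """Return {run_key: [deviating property names]} for runs that disagree with the golden model.

    golden: e.g. {"volume": 1000.0, "area": 600.0, "faces": 6}
    runs:   {(kernel, tolerance): property dict of the same shape}
    """
    failures = {}
    for key, props in runs.items():
        bad = []
        for name, ref in golden.items():
            val = props.get(name)
            if val is None:
                bad.append(name)  # property missing from this run
            elif isinstance(ref, int):
                if val != ref:    # topology counts must match exactly
                    bad.append(name)
            elif abs(val - ref) > rel_tol * max(abs(ref), 1e-12):
                bad.append(name)  # scalar drift beyond the relative tolerance
        if bad:
            failures[key] = bad
    return failures


golden = {"volume": 1000.0, "area": 600.0, "faces": 6}
runs = {("parasolid", 1e-6): {"volume": 1000.01, "area": 600.0, "faces": 6},
        ("occ", 1e-3): {"volume": 1000.0, "area": 600.0, "faces": 7},
        ("acis", 1e-6): {"volume": 1000.5, "area": 600.0, "faces": 6}}
print(diff_against_golden(golden, runs))
```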

Performance and quality metrics

Speed and quality are co-equal. Establish KPIs that reflect the realities of engineering change and manufacturing:

- Feature F1 and parameter MAE for recognition accuracy.

- Constraint solvability rate and average solve iterations for stability.

- Regen time and geometric deviation (Hausdorff/volume) for performance and fidelity.

- Manufacturability pass rate on standard checks (tool reach, draft, bendability).
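Geometric deviation is often reported as the symmetric Hausdorff distance between points sampled from the original and regenerated surfaces. The brute-force sketch below assumes both surfaces have already been sampled densely enough that the sample distance approximates the surface distance; it is O(|a|·|b|), so large scans call for a KD-tree or SciPy's `scipy.spatial.distance.directed_hausdorff`.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from a point in a to its nearest neighbor in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two sampled point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


original = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
regenerated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
print(hausdorff(original, regenerated))
```

The directed distances differ here (0 one way, 0.5 the other), which is why the symmetric maximum is the safer KPI: a regenerated model that adds stray material is caught even if it reproduces every original sample.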

Tooling landscape

A practical stack blends mature open-source libraries, research code, and enterprise integration. Open CASCADE (OCC) provides B-Rep operations, and its OCAF application framework offers a data model suitable for feature trees; CGAL offers robust geometry algorithms; Open3D handles point clouds; trimesh eases mesh processing. Research projects like BRepNet, DeepCAD, SolidGen, and SketchGraphs inform data-driven recognition and sketch recovery. On the enterprise side, PLM connectors integrate with PDM vaults, and MBD pipelines ensure PMI survives handoffs to CAM and CMM.

- Open source: OCC for topology, CGAL for computational geometry, Open3D for scanning workflows, trimesh for mesh utilities.

- Research: GNNs over B-Rep graphs and generative models for feature sequences and sketches.

- Integration: traceable export with uncertainty annotations; PLM hooks for change management and approvals.

Conclusion

Blending reasoning and learning under uncertainty

High-fidelity intent recovery is not a single technique but a coordinated ensemble. Geometric and topological reasoning provide exactness: face classification, adjacency graphs, and rule-driven schemas form a deterministic core that engineers can trust. Constraint solving lifts geometry into editable models where **design rules**—parallelism, equalities, datum references—regulate change. Learned ranking layers on top, navigating the ambiguity and noise endemic to legacy data; GNNs and transformers propose what to try first, where to focus human attention, and how to weight alternatives. Uncertainty is central: Bayesian heads and conformal prediction yield intervals that keep solvers honest and drive UI triage. The integration is the innovation: ML generates hypotheses; symbolic methods verify and parametrize; human experts arbitrate hard calls with a transparent record. This blend delivers models that regenerate reliably, carry PMI aligned to standards, and remain editable when requirements shift. In an era where legacy IP can unlock massive value, the ability to reconstruct **intent under uncertainty** becomes a strategic capability, converting brittle geometry into durable knowledge.

A pragmatic hybrid path to scale

Organizations succeed by being pragmatic rather than doctrinaire. Start with rules where the payoff is immediate—holes, pockets, fillets, patterns—and surround them with robust healing and canonicalization. Add constraint recovery to anchor sketches and expose the **parametric levers** engineers expect. Introduce ML where rules falter: ranking overlapping hypotheses, classifying process families, and prioritizing reviews. Guard everything with metrics that reflect manufacturability and regeneration, not merely geometric overlap. Bake explainability into the workflow so every inference is inspectable and reversible. Pair automation with human-in-the-loop controls that respect expertise and capture overrides as learning signals. Evolve datasets with deliberate versioning, curating difficult examples that represent your actual part mix and supplier variation. This hybrid approach compounds wins: every override improves future ranking; every metric-driven fix informs rule tuning; every standard-compliant export reduces friction downstream. Over time, reverse-engineered parts transition from risky assets to reliable components in your product platform, accelerating changes, reducing rework, and ensuring that intent is preserved across releases.

Operationalizing trust, provenance, and future-proofing

Trust is earned through transparency, standards, and repeatable results. Anchor exports in STEP AP242 with PMI and QIF, tying tolerances to faces with persistent names and recording uncertainty annotations. Perform cross-kernel validation to expose brittle features before they reach the shop floor, and maintain audit trails that connect each inference to its geometric evidence and algorithmic source. Invest in security: on-prem execution, confidential compute, and redaction pipelines make it safe to collaborate and to leverage external expertise. Measure performance rigorously—feature F1, parameter MAE, solvability, regen time, geometric deviation, manufacturability pass rate—and publish dashboards that keep teams aligned. By treating provenance as a first-class artifact, you make decisions defensible and models portable. This posture **future-proofs reverse-engineered models**, ensuring they survive tool migrations, supplier changes, and regulatory scrutiny. Ultimately, the payoff is scale: the ability to mine legacy archives, recover editable intent, and reuse design knowledge across families of products—turning yesterday’s geometry into today’s competitive advantage.
