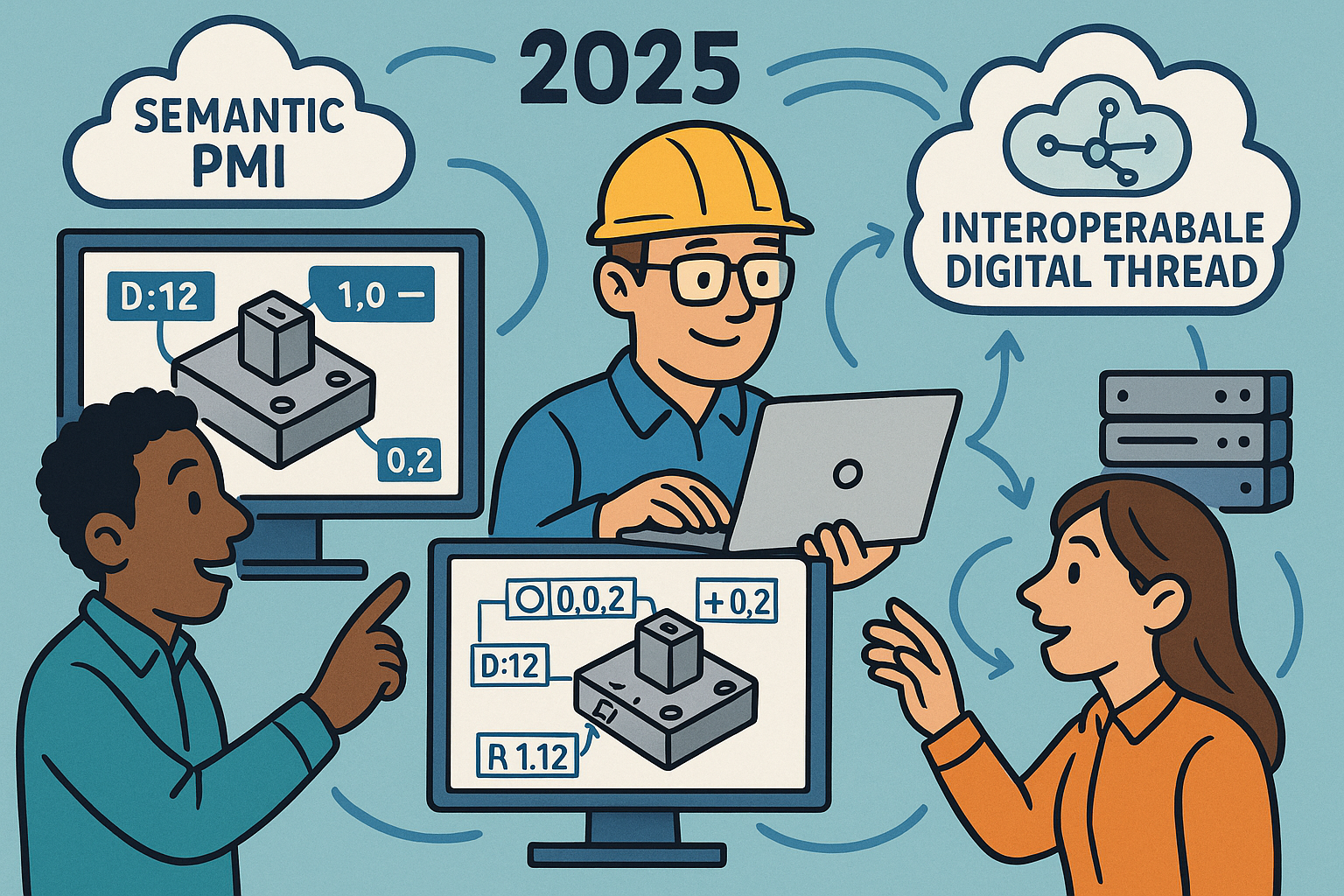

MBD 2025: Semantic PMI, Computable GD&T, and the Interoperable Digital Thread

November 20, 2025 10 min read

Introduction

Why Model-Based Definition Matters Now

By 2025, Model-Based Definition (MBD) is no longer a novel experiment or an aspirational roadmap item. For leading organizations it is the operational core of how design intent is expressed, validated, and executed. The shift isn’t merely about replacing drawings with 3D models. It is about making the model carry executable intent so that computer-aided manufacturing (CAM), coordinate measuring machine (CMM), product lifecycle management (PLM), and quality systems can trust and act on what engineers specify. That shift demands precision in semantics, discipline in governance, and orchestration across the toolchain. The payoff is significant: fewer late-stage ambiguities, higher first-pass yields, and a digital thread that stays intact under the pressure of change. This article explains what MBD means in 2025, how semantic product and manufacturing information (PMI) and computable geometric dimensioning and tolerancing (GD&T) can be generated with automation and guardrails, and what it takes to keep that intelligence interoperable through standards like STEP AP242 and the Quality Information Framework (QIF). The near-term goal is not full autonomy; it is trustworthy automation that elevates engineers from drawing mechanics to decision-making. If your models are still annotated as pictures, you are leaving value on the table. If they are annotated as data, you are ready to wire design intent straight into manufacturing and measurement with confidence.

What MBD Means in 2025: From Visual Notes to Machine-Executable Intent

From Presentation PMI to Semantic PMI

Most organizations have lived through the era of “pretty annotations”—dimension callouts and feature control frames that look correct but behave like pictures. In 2025, the decisive move is toward semantic PMI: annotations that tools can query, validate, and execute without reinterpretation. That means dimensions, tolerances, and notes are tightly associated to features, expressed with controlled vocabularies, and backed by machine-interpretable structures rather than free text. The result is far more than convenience. When PMI is semantic, CAM can align toolpaths to true datums, CMM programs can auto-generate inspection characteristics, and PLM can detect when a change to a feature invalidates downstream plans. Instead of engineers spending hours redrawing intent, downstream systems pull the intent directly. Crucially, semantic PMI reduces “translation drift,” where a drawing says one thing and a model implies another. In complex assemblies and regulated contexts, that drift is a quality and compliance risk. By committing to semantic annotations, you create a single source of truth that is both human-readable and machine-executable, enabling a resilient digital thread from requirement to inspection result.
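To make the contrast concrete, here is a minimal Python sketch of the difference between a presentation note and a semantic tolerance record. The class names, field names, and feature IDs are hypothetical, chosen only for illustration; they are not taken from any CAD system or exchange schema.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PresentationNote:
    """Presentation PMI: text placed in a view, with no link to geometry."""
    text: str


@dataclass(frozen=True)
class SemanticTolerance:
    """Semantic PMI: typed fields tied to a feature by a stable ID (hypothetical layout)."""
    feature_id: str
    characteristic: str              # e.g. "position", "flatness"
    value_mm: float
    datums: Tuple[str, ...] = ()
    modifier: Optional[str] = None   # e.g. "MMC", "LMC"


def tolerances_for(feature_id, pmi):
    """A query that is only possible because the annotation is data, not a picture."""
    return [p for p in pmi
            if isinstance(p, SemanticTolerance) and p.feature_id == feature_id]


pmi = [
    PresentationNote("POS 0.1 (M) | A | B | C"),
    SemanticTolerance("hole_12", "position", 0.1, ("A", "B", "C"), "MMC"),
    SemanticTolerance("face_3", "flatness", 0.05),
]
hits = tolerances_for("hole_12", pmi)
```

The presentation note may carry the same callout as text, but a downstream tool would have to parse and guess. The semantic record can be filtered, validated, and handed to CAM or CMM software directly.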

GD&T as a Computable Contract

In the new MBD paradigm, GD&T isn’t static documentation. It is a computable contract that formally defines how parts shall fit, function, and be verified. Datums, material condition modifiers, and tolerance zones move from being graphical conventions to being data objects that drive CAM fixturing, CMM path planning, and process capability checks. When a datum reference frame is encoded semantically, CAM can simulate the stability of fixturing; CMM software can optimize probing strategies against tolerance types; and statistical process control (SPC) can bind measured results to the exact features and modifiers that matter. Consider a mounting interface with a positional tolerance at maximum material condition (MMC). In a computable world, the inspection software automatically applies bonus tolerance rules, and the SPC dashboard trends the true position error under the correct material condition. On the manufacturing side, CAM verification can flag when predicted tool deflection risks violating a tight flatness zone. The point is not to replace judgment but to make the contract explicit so machines can comply and humans can focus on tradeoffs. The contract becomes the living heart of the model: precise, traceable, and enforceable across the lifecycle.
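The MMC example can be worked through in a few lines. This is a sketch of the standard bonus-tolerance arithmetic for a hole, with hypothetical dimensions and function names; it is not the logic of any particular inspection package.

```python
import math


def allowed_position_tolerance(stated_tol, mmc_dia, lmc_dia, actual_dia):
    """Diametral position tolerance available to a hole toleranced at MMC.

    For a hole, MMC is the smallest permitted diameter, so the bonus is the
    amount by which the produced hole exceeds that size.
    """
    if not mmc_dia <= actual_dia <= lmc_dia:
        raise ValueError("hole size is outside its limits; bonus does not apply")
    return stated_tol + (actual_dia - mmc_dia)


def position_deviation(dx, dy):
    """Diametral true-position deviation from the x/y offsets to true position."""
    return 2.0 * math.hypot(dx, dy)


# Hypothetical hole: diameter 10.0 to 10.2 mm, position diameter 0.1 mm at MMC.
allowed = allowed_position_tolerance(0.1, mmc_dia=10.0, lmc_dia=10.2, actual_dia=10.15)
measured = position_deviation(dx=0.06, dy=0.08)
accepted = measured <= allowed
```

Here the measured deviation is about 0.2 mm against an allowance of about 0.25 mm, so the hole is accepted. Against the stated 0.1 mm alone it would be rejected, which is why the modifier has to travel as data.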

Business Drivers You Can Quantify

The drivers behind semantic MBD are practical and measurable. Organizations adopt it to eliminate costly misinterpretations, compress time to first article inspection (FAI), and close the loop between design and quality. Three threads recur:

- Reduce mismatches and ambiguity: Eliminate drawing-to-model conflicts and late clarification cycles that consume engineering hours and cause rework.

- Automate inspection planning: Generate CMM and scanning programs directly from PMI; feed results into SPC to accelerate learning and capability improvement.

- Enable digital traceability: Connect requirements to features, features to tolerances, and tolerances to measurements; prove compliance at release gates.

A typical path to value starts with high-volume interfaces and features that cause the most nonconformance (N/C) activity, then expands to the rest of the product once wins are visible. A key tactic is to quantify the “manual touch” burden: minutes spent re-annotating, writing CMM programs from scratch, or realigning fixtures after late changes. When semantic PMI displaces these touches, you capture time and avoid errors simultaneously. The true ROI becomes clearest when inspection results feed back into tolerance optimization, reducing over-tolerancing and bringing processes inside a capability envelope grounded in process capability (Cp/Cpk) data.
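For readers who want the capability indices spelled out, the following sketch computes Cp and Cpk from measured samples using the usual definitions. The sample values and specification limits are hypothetical.

```python
import statistics


def process_capability(samples, lsl, usl):
    """Return (Cp, Cpk) for measurements against lower/upper specification limits."""
    mean = statistics.mean(samples)
    sigma = statistics.stdev(samples)          # sample standard deviation
    cp = (usl - lsl) / (6.0 * sigma)           # potential capability, ignores centering
    cpk = min(usl - mean, mean - lsl) / (3.0 * sigma)  # penalizes an off-center process
    return cp, cpk


# Hypothetical bore diameters in mm, specification 10.00 +/- 0.05.
bore_mm = [10.01, 10.03, 10.02, 10.04, 10.02, 10.03, 10.01, 10.04]
cp, cpk = process_capability(bore_mm, lsl=9.95, usl=10.05)
```

Cpk comes out lower than Cp for this data because the sample mean sits above the midpoint of the limits. That gap is the kind of signal a tolerance optimization loop would consume.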

Readiness Indicators in Real Organizations

Moving to executable models requires specific capabilities. First, models must be feature-based with stable IDs and robust associativity so annotations survive change. Second, organizations need a GD&T rules base—libraries of fit callouts, datum patterns, and modifiers—that encode corporate knowledge. Third, validation gates must verify PMI completeness, consistency, and standards conformance before release. You will know you are ready when major feature types are reliably recognized by the authoring system, and when PMI survives round-trips through exchange formats like STEP AP242. Another indicator is the existence of formal “definition quality” metrics: for example, a PMI coverage score and a report that flags broken associations, missing datums, or standard violations. Finally, the presence of a cross-functional governance loop—design, manufacturing, and quality reviewing the semantic payload together—signals maturity. The practical test is simple: change a key characteristic on a common interface and watch whether CAM, CMM, and SPC models update with minimal manual intervention. If they do, your MBD is more than annotated geometry—it is a system that carries and protects intent.
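A “definition quality” report of the kind described above might be sketched as follows. The record layout is hypothetical, and the set of characteristics that need datums is a deliberate simplification of the standards.

```python
# Simplified assumption: these characteristic types require a datum reference.
NEEDS_DATUMS = {"position", "perpendicularity", "parallelism",
                "angularity", "total_runout"}


def definition_quality(feature_ids, annotations):
    """Score PMI coverage and list broken associations and missing datums."""
    known = set(feature_ids)
    broken = [a["id"] for a in annotations if a["feature_id"] not in known]
    missing = [a["id"] for a in annotations
               if a["characteristic"] in NEEDS_DATUMS and not a.get("datums")]
    covered = {a["feature_id"] for a in annotations} & known
    coverage = len(covered) / len(known) if known else 0.0
    return {"coverage": coverage,
            "broken_associations": broken,
            "missing_datums": missing}


features = ["hole_1", "hole_2", "face_top", "slot_1"]
annotations = [
    {"id": "T1", "feature_id": "hole_1", "characteristic": "position", "datums": ["A", "B"]},
    {"id": "T2", "feature_id": "face_top", "characteristic": "perpendicularity", "datums": []},
    {"id": "T3", "feature_id": "boss_9", "characteristic": "flatness"},
]
report = definition_quality(features, annotations)
```

In this example half of the features carry at least one annotation, T3 points at a feature that no longer exists, and T2 lacks the datum reference its characteristic needs.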

Automation Deep Dive: How PMI and GD&T Generate Themselves

From Feature Recognition to Intent Capture and Datum Strategy

The engine of MBD automation is feature and intent recognition. Modern authoring tools detect holes, slots, bosses, counterbores, patterns, and interfaces, tagging them as manufacturing features rather than isolated faces. On top of that, they map functional surfaces to system requirements—assembly fit, sealing lines, load paths, or thermal interfaces—so tolerancing scope is grounded in purpose. With this context, the tool proposes a datum strategy: primary, secondary, and tertiary references aligned to how the part is assembled and measured. Stability of contact, accessibility for inspection, and fixturing feasibility drive suggestions. When the system detects over- or under-constrained schemas, it explains the tradeoffs: “Secondary datum is collinear with primary; consider orthogonal plane for robustness,” or “Pattern datum candidate reduces variability in assembly clamp sequence.” The goal is to automate not just placement of symbols, but the reasoning behind them. In practice, the assistant may offer multiple viable datum schemas ranked by stability and measurability, inviting the engineer to choose. Explainability is vital here: automated proposals are accompanied by natural-language rationales, links to standards clauses, and simulation cues that de-risk acceptance.
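One of the simplest checks behind such a proposal is geometric: planar datum features that are nearly parallel constrain the same degrees of freedom. The sketch below flags that case. It assumes planar datums described by normal vectors, and the 5 degree threshold and message wording are illustrative choices.

```python
import math
from itertools import combinations


def angle_between_planes_deg(n1, n2):
    """Acute angle between two planes, computed from their normal vectors."""
    dot = sum(a * b for a, b in zip(n1, n2))
    norm = math.hypot(*n1) * math.hypot(*n2)
    return math.degrees(math.acos(min(1.0, abs(dot) / norm)))


def datum_frame_warnings(datums, min_angle_deg=5.0):
    """datums: dict of label -> plane normal, listed in precedence order."""
    warnings = []
    for (a, na), (b, nb) in combinations(datums.items(), 2):
        if angle_between_planes_deg(na, nb) < min_angle_deg:
            warnings.append(
                f"Datum {b} is nearly parallel to datum {a}; "
                "consider an orthogonal plane."
            )
    return warnings


frame = {"A": (0.0, 0.0, 1.0), "B": (0.0, 0.01, 1.0), "C": (1.0, 0.0, 0.0)}
warnings = datum_frame_warnings(frame)
```

A production assistant would also weigh contact stability and inspection access, as the text notes; this covers only the orientation test.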

Tolerance Synthesis and Budgeting

Once datums are set, the assistant derives candidate tolerances from performance requirements, historical Cp/Cpk, and cost models. It proposes limits that hit functional targets with an acceptable risk profile. Deterministic stack-ups compute worst-case accumulation across key characteristics; Monte Carlo runs explore distributional behavior under realistic process variability. The assistant then offers allocations: tighten the flatness here, relax perpendicularity there, shift a fraction of the radial clearance to a mating component. Manufacturing capability libraries inform tradeoffs: if a bore is routinely held to 0.02 mm position in a given cell, the system does not default to an ultra-conservative 0.01 mm without justification. This budgeting loop includes cost models that associate tighter tolerances with cycle time, tool wear, and scrap risk. The user experience emphasizes levers: “Tighten the surface profile tolerance by 20% to increase assembly yield by 3%, expected cost +0.6%.” Engineers can accept, modify, or override with notes. Over time, feedback from SPC refines these models so recommendations get sharper and less biased toward unnecessary tightness.
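The two stack-up styles mentioned here can be compared on a one-dimensional gap. The sketch below is illustrative only: the dimensions are hypothetical, and each contributor is assumed to be normally distributed with its tolerance equal to three standard deviations.

```python
import math
import random

# Each contributor: (nominal_mm, symmetric_tolerance_mm, direction).
# direction +1 opens the gap, -1 closes it. All values are hypothetical.
STACK = [(50.0, 0.10, +1), (20.0, 0.05, -1), (29.7, 0.05, -1)]


def nominal_gap(stack):
    return sum(d * nom for nom, _, d in stack)


def worst_case(stack):
    """Deterministic accumulation: every contributor at its limit."""
    return sum(tol for _, tol, _ in stack)


def rss(stack):
    """Root-sum-square accumulation for independent contributors."""
    return math.sqrt(sum(tol ** 2 for _, tol, _ in stack))


def monte_carlo_yield(stack, min_gap, trials=20000, seed=1):
    """Fraction of simulated assemblies whose gap is at least min_gap."""
    rng = random.Random(seed)
    good = 0
    for _ in range(trials):
        gap = sum(d * rng.gauss(nom, tol / 3.0) for nom, tol, d in stack)
        if gap >= min_gap:
            good += 1
    return good / trials


yield_at_015 = monte_carlo_yield(STACK, min_gap=0.15)
```

For this stack the nominal gap is 0.3 mm, the worst-case variation is 0.2 mm, and the RSS variation is about 0.12 mm. The difference between those two figures is the room a budgeting assistant has to relax individual tolerances at a stated yield.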

Knowledge, Rules, and AI With Guardrails

Automation scales only when it stands on a foundation of explicit rules and curated knowledge. Corporate standards—fastener interfaces, machined fits, datum patterns for castings—are encoded as templates with context checks. When the tool detects a countersunk fastener in a sealed interface, it applies the correct callouts and warns if the sealing land is undersized. Rule violations are flagged with fix suggestions and rationale tied to ASME/ISO clauses. AI augments this by mining legacy models to learn recurring GD&T schemes and variation patterns, but its outputs are framed as patterns, not absolutes. Every AI proposal is accompanied by a “Why” note and a confidence score; low-confidence cases explicitly request human input. A lightweight rationale capture flow preserves design intent in natural language: “Datum A chosen as machined plane contacting chassis rib; ensures load path and measurement accessibility.” This content becomes searchable audit evidence and improves future recommendations. The guardrails are twofold: standards-aligned templates that limit the solution space to valid options, and a human sign-off that anchors accountability where it belongs—on the engineering team.
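The confidence guardrail can be pictured as a simple triage step. This is a sketch under assumptions: the `Proposal` fields and the 0.8 threshold are hypothetical, and neither bucket is applied without human sign-off.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    feature_id: str
    suggestion: str
    rationale: str      # the "Why" note shown to the engineer
    confidence: float   # 0.0 to 1.0


def triage(proposals, threshold=0.8):
    """Split proposals into those offered for sign-off and those needing input first."""
    offer, ask = [], []
    for p in proposals:
        (offer if p.confidence >= threshold else ask).append(p)
    return offer, ask


proposals = [
    Proposal("face_1", "Datum A on machined plane",
             "Contacts chassis rib; accessible for measurement", 0.93),
    Proposal("hole_7", "Position 0.05 at MMC",
             "Few comparable legacy parts found", 0.41),
]
offer, ask = triage(proposals)
```

Keeping the rationale on the record, rather than in a log, is what makes it searchable as audit evidence later.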

Automated Inspection Planning and Hard Cases

When PMI is semantic, inspection planning can be synthesized directly. The system classifies characteristics and selects probing versus scanning, generates approach vectors, and sequences moves to minimize cycle time while protecting accuracy. For CMMs, it proposes styli sets and collision-safe paths; for scanners, it recommends coverage patterns and meshing densities tuned to tolerance types. Plans export as QIF with characteristics bound to feature IDs so results map back to the model for SPC. The loop closes when measured capability feeds tolerance optimization, informing future budgets. Hard boundaries remain: freeform surfaces, composites, flexible parts, and AM lattices require specialized strategies. Surface profile over a freeform turbine blade may need hybrid probing and scanning with thermal compensation; composite hole position may warrant on-part reference frames due to layup variability; thin-walled parts need fixtures modeled as part of the measurement system. Automation should never bluff confidence here. It must surface uncertainty, show what it cannot guarantee, and invite human decision-making—“Recommend human review: curvature sign changes exceed scanner accuracy envelope; propose alternative datum schema or local tolerance zone refinement.” This partnership between automation and expert oversight is where reliability lives.
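A toy version of the method selection and the “never bluff” rule is sketched below. The sensor uncertainties are hypothetical, the 4:1 ratio of tolerance to measurement uncertainty is a common rule of thumb used here as an assumption, and the output is a plain dictionary rather than actual QIF.

```python
# Hypothetical sensor uncertainties in mm; real values come from calibration data.
SENSOR_UNCERTAINTY_MM = {"touch_probe": 0.002, "laser_scan": 0.020}
SCAN_TYPES = {"surface_profile", "line_profile"}


def plan_characteristic(ch, min_ratio=4.0):
    """Pick a sensor and flag cases where it cannot resolve the tolerance."""
    method = "laser_scan" if ch["characteristic"] in SCAN_TYPES else "touch_probe"
    ratio = ch["tolerance_mm"] / SENSOR_UNCERTAINTY_MM[method]
    return {
        "feature_id": ch["feature_id"],        # keeps results traceable to the model
        "characteristic": ch["characteristic"],
        "method": method,
        "needs_human_review": ratio < min_ratio,
    }


characteristics = [
    {"feature_id": "hole_12", "characteristic": "position", "tolerance_mm": 0.10},
    {"feature_id": "blade_surf", "characteristic": "surface_profile", "tolerance_mm": 0.05},
]
plans = [plan_characteristic(c) for c in characteristics]
```

The second characteristic is routed to scanning but flagged for review, because the assumed scanner uncertainty is too large relative to the 0.05 mm zone.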

Digital Thread and Interoperability: Making MBD Travel Intact

Standards and Formats to Anchor Interoperability

Interoperability is won or lost on the discipline of standards. Authoring must conform to the applicable GD&T standard (ASME Y14.5 or ISO 1101) and to model organization rules that define feature naming, layer usage, and view structures. Exchange must use semantic-capable formats, most notably STEP AP242 (ISO 10303-242) with PMI or JT with PMI, plus QIF for metrology. Human-readable 3D PDFs remain useful, but as fallbacks, not system inputs. The point is to future-proof your definition and hedge against vendor lock-in. When PMI is encoded semantically in AP242, you can round-trip models across CAD and downstream tools while preserving datums, modifiers, and associations. QIF binds characteristics, equipment, and results, enabling traceable analytics. Two practices harden this chain: treat import/export as an engineered interface with regression tests, and gate releases with validators that score PMI completeness and conformance. Standards aren’t bureaucracy; they are the scaffolding that lets your MBD travel intact across organizations and years. Without them, the cost of translation and the risk of semantic loss climb sharply, eroding trust in the thread you worked to build.

A Toolchain Reference Pattern and Governance That Scales

A healthy MBD toolchain follows a recognizable pattern. Authoring systems create feature-based models with semantic PMI, robust associativity, and visible change tracking. Rules engines validate standards conformance and completeness, run clash detection, and maintain a PMI quality score visible at release gates. Publication generates audited, versioned MBD packages with effectivity, bundling validation reports so consumers know what they can trust. CAM uses datums and tolerances to drive fixturing, stock definitions, and toolpath strategies. CMM software auto-loads characteristics, builds paths, and exports results linked to feature IDs. PLM anchors requirements, risks, and compliance evidence to the model, providing traceability for engineering change orders (ECOs). Governance treats PMI as first-class data: versioned, reviewed, and diffed semantically. Semantic diffs matter: if a tolerance changed from position to profile, stakeholders must see more than a revised picture. Rationale capture ties each change to risk, requirement, or capability data. When ECOs propagate, plans and inspections update in lockstep, and the people who must sign off see precisely what changed and why. This is not theory; it is a pattern that keeps pace as products and organizations scale.
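A semantic diff is easy to picture once PMI is keyed by stable annotation IDs. The sketch below compares two revisions field by field. The dictionary layout and the IDs are hypothetical, not a PLM or CAD data model.

```python
def semantic_diff(old, new):
    """Compare two PMI revisions keyed by annotation ID.

    old, new: dict of annotation_id -> dict of fields.
    Returns a list of (annotation_id, field_or_event, before, after) tuples.
    """
    changes = []
    for aid in sorted(old.keys() | new.keys()):
        if aid not in new:
            changes.append((aid, "removed", None, None))
        elif aid not in old:
            changes.append((aid, "added", None, None))
        else:
            for field in sorted(old[aid].keys() | new[aid].keys()):
                before, after = old[aid].get(field), new[aid].get(field)
                if before != after:
                    changes.append((aid, field, before, after))
    return changes


rev_a = {
    "T1": {"feature_id": "hole_1", "characteristic": "position", "value_mm": 0.1},
    "T2": {"feature_id": "face_top", "characteristic": "flatness", "value_mm": 0.05},
}
rev_b = {
    "T1": {"feature_id": "hole_1", "characteristic": "surface_profile", "value_mm": 0.1},
    "T3": {"feature_id": "slot_1", "characteristic": "position", "value_mm": 0.2},
}
changes = semantic_diff(rev_a, rev_b)
```

The diff reports that T1 changed characteristic from position to surface profile even though its value stayed at 0.1 mm. That is the kind of change a revised picture can hide.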

Metrics, ROI, and Risk Management

Measurement drives adoption. Track the percentage of PMI that is truly semantic versus presentation-only; raise the bar each release. Monitor the share of inspection programs that generate automatically on the first pass and the number of manual edits per plan; a rising share and falling edit counts indicate improving annotation quality. Watch reduction in NC/CMM rework, time-to-FAI, and verification cycle time. Combine these with cost models to quantify ROI beyond anecdote. Risk must be managed, too. Three pitfalls recur and can be mitigated:

- Semantic loss in translation: Round-trip test across your toolchain; gate releases with validators and fail builds that regress semantic fidelity.

- Over-tolerancing: Conservative defaults creep in; couple recommendations to capability and cost models, and require approvals for tight stacks.

- Vendor lock-in and IP exposure: Prioritize standards-aligned pipelines; maintain an export-validation regimen; apply role-based redaction and watermarking for external shares.

Done well, metrics are not surveillance—they are feedback. The objective is to see whether your models are becoming more executable, your plans more automatic, and your measurements more traceable. Risks do not vanish; they are contained by engineering the interfaces and validating against drift. The reward is confidence that your MBD is not a brittle artifact but a resilient system component.
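The semantic-share metric and the round-trip gate from this section could be wired together roughly as follows. The sketch assumes each annotation already carries a `semantic` flag set by a validator; how that flag is determined is outside its scope, and the function names are hypothetical.

```python
def semantic_share(annotations):
    """Fraction of annotations flagged as semantic rather than presentation-only."""
    if not annotations:
        return 0.0
    return sum(1 for a in annotations if a["semantic"]) / len(annotations)


def release_gate(before_export, after_round_trip, max_drop=0.0):
    """Fail when a round trip lowers the semantic share by more than max_drop."""
    drop = semantic_share(before_export) - semantic_share(after_round_trip)
    return {"drop": drop, "passed": drop <= max_drop}


authored = [{"id": f"T{i}", "semantic": True} for i in range(1, 5)]
reimported = [
    {"id": "T1", "semantic": True},
    {"id": "T2", "semantic": True},
    {"id": "T3", "semantic": False},   # degraded to presentation-only in translation
    {"id": "T4", "semantic": True},
]
result = release_gate(authored, reimported)
```

In this example one of four annotations degrades during the round trip, the share falls from 1.0 to 0.75, and the gate fails the release.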

Conclusion: From Annotations to Autonomy—But Keep Humans in the Loop

From Executable Intent to Elevated Engineering

The defining value of MBD in 2025 is executable intent. When datums, tolerances, and associations are expressed semantically, downstream systems can trust and act on them without guesswork. Automation then does what it does best: it accelerates repeatable work, exposes tradeoffs, and keeps data in sync across authoring, manufacturing, and measurement. That elevation lifts engineers from drawing mechanics to decision-making—balancing capability, cost, and risk with a tighter feedback loop. None of this negates human agency. It requires it. The point of a computable contract is not to cede judgment to software but to provide a clear, precise foundation for judgment. Guardrails—the standards, validation gates, and rationale capture—ensure explainability and traceability. The outcome is a digital thread that carries design intent unbroken from concept to measurement, one where trustworthy autonomy can emerge in specific tasks while humans steer the system. If you invest in semantics, rules, and validation, your models stop being pictures of intent and become instruments that play it.

Pragmatic Next Steps and the North Star

Start where the payoff is highest. Target high-volume interfaces and key characteristics, build a template library, and codify your rules. Introduce semantic PMI validators and treat a PMI quality score as a release criterion. Wire QIF-based inspection loops so measured capability continuously informs tolerance optimization. Make the feedback visible with dashboards that bind requirements, features, tolerances, and results. Along the way, insist on standards-aligned exchange—AP242 and QIF—and maintain export-validation routines that protect against drift and lock-in. Embed rationale capture in the authoring flow to improve auditability and to train the assistant responsibly. The North Star is not maximal automation for its own sake; it is trustworthy autonomy in definition, where explainability, governance, and risk controls sustain confidence. In that operating model, models do more than show geometry; they prosecute intent. Every datum, modifier, and tolerance becomes a data-backed commitment that survives translation, withstands change, and drives manufacturing and measurement as a single, coherent system. That is how MBD stops being a promise and becomes infrastructure.
