Live Performance Budgets: Real-Time Cost, Carbon, Energy and Lead-Time in CAD/BIM

December 11, 2025 14 min read

From Static Reporting to Live Performance Budgets

Definitions and scope

Design organizations have long treated sustainability and cost reports as post-hoc artifacts, disconnected from the moment of decision. A **performance budget** changes that posture by defining continuously updated targets during modeling for total cost, **carbon (kgCO2e)**, **energy (kWh)**, **water (L)**, and **lead time**, and making those targets visible where choices are made: inside the parametric modeler, the BIM authoring tool, or the DfAM workflow. Instead of a spreadsheet that arrives weeks after a design freeze, the budget acts as a living constraint system that reacts to feature edits, material swaps, and routing changes in near real time, translating geometry into metrics without leaving the creative context. This mindset shifts budgets from static yardsticks to interactive guardrails and feedback loops. In practice, the performance budget is neither a single number nor a single discipline; it is a synchronized set of targets that express tradeoffs clearly—how a lower-cost supplier might increase transport carbon, how a thinner wall that saves mass could demand a tighter tolerance and longer cycle time, or how switching to recycled feedstock affects both price volatility and embodied emissions. Teams gain clarity because every incremental change reconciles against the current budget and the release target, making drift visible early rather than after commitments solidify, when course correction is expensive or impossible.

- Cost budget: should-cost forecast tied to materials, processes, yields, and logistics.

- Carbon budget: cradle-to-gate or cradle-to-grave emissions expressed in kgCO2e with regionalization.

- Energy budget: manufacturing and in-use energy consumption in kWh, tied to grid factors.

- Water budget: process and supply-chain water intensity in liters, including scarcity weighting if applicable.

- Lead time budget: combined production, transport, and approval slack in days, bounded by release cadence.
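
One way to picture the synchronized set of targets above is as a small data structure that is re-checked after every edit. The sketch below is illustrative only: the `Target` class, the `budget_status` function, the 90% warning ratio, and all numbers are assumptions, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    """One budget line: a metric, its unit, and the release limit."""
    metric: str
    unit: str
    limit: float


def budget_status(targets, current, warn_ratio=0.9):
    """Return {metric: 'ok' | 'warn' | 'over'} for the current estimates."""
    status = {}
    for t in targets:
        value = current[t.metric]
        if value > t.limit:
            status[t.metric] = "over"
        elif value >= warn_ratio * t.limit:
            status[t.metric] = "warn"  # drifting toward the limit
        else:
            status[t.metric] = "ok"
    return status


# Hypothetical targets for a single part; every number here is made up.
TARGETS = [
    Target("cost", "USD", 12.0),
    Target("carbon", "kgCO2e", 4.0),
    Target("energy", "kWh", 6.0),
    Target("water", "L", 30.0),
    Target("lead_time", "days", 21.0),
]

current = {"cost": 11.2, "carbon": 4.3, "energy": 3.1, "water": 18.0, "lead_time": 20.0}
status = budget_status(TARGETS, current)
```

Keeping all five metrics in one structure is what makes tradeoffs visible: a single edit can move cost into "warn" while pushing carbon "over", and both show up in the same check.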

Boundaries and allocation

Budgets are only actionable when their system boundaries are explicit, consistent, and traceable. Designers must choose whether the budget is **cradle-to-gate** (raw material through factory exit) for development milestones, or **cradle-to-grave** when product stewardship and end-of-life policies are integral to the release criteria. Carbon budgeting requires clarity on **Scope 1, 2, and 3** emissions per the GHG Protocol: in most design contexts, Scope 3 upstream (purchased goods and services, transport) dominates, while Scope 2 (electricity) and Scope 1 (on-site fuels) shape plant choices. Allocation rules also shape fairness: mass-based allocation is simple but can distort when co-products have very different economic values; economic allocation reflects market signals but can swing with price volatility; energy-based allocation fits thermally intensive co-production. The solution is to encode allocation policy as a first-class setting with provenance, so the same part measured under different boundary choices produces comparably interpretable metrics. This also prevents “boundary shopping,” where optimistic assumptions leak out of one step into another. Finally, material stacks and coatings should be represented explicitly to reflect layered impacts, and transport legs must be modular to capture changes in mode, route, and containerization. When boundaries and allocations are versioned and reported next to the numbers, the budget becomes auditable and the design team avoids misinterpretation during reviews.

- Boundary options: cradle-to-gate for phase gates; cradle-to-site to include inbound logistics; cradle-to-grave for stewardship.

- Allocation choices: mass, economic, energy; document rationale and applicable standards (e.g., ISO 14044).

- Scope mapping: highlight where Scope 3 dominates; show plant electricity (Scope 2) regionalization explicitly.

- Layered materials: adhesive, primer, coating each treated as separate impacts with thickness and coverage.

- Transport modularity: route nodes (plant, port, DC) and mode nodes (truck, rail, ocean, air) with editable factors.
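
To see why the allocation rule matters, consider a shared process whose impact must be split across two co-products. The following is a minimal sketch, assuming a hypothetical `allocate` helper and invented masses and values; it only demonstrates how the same total lands differently under mass-based and economic allocation.

```python
def allocate(total_impact, coproducts, basis):
    """Split a shared process impact across co-products.

    coproducts: {name: {"mass_kg": ..., "value_usd": ...}}
    basis: the key to allocate on, e.g. "mass_kg" or "value_usd".
    """
    denom = sum(p[basis] for p in coproducts.values())
    if denom <= 0:
        raise ValueError(f"allocation basis {basis!r} sums to zero")
    shares = {name: total_impact * p[basis] / denom for name, p in coproducts.items()}
    # Record the policy next to the numbers so the result stays auditable.
    return {"shares": shares, "policy": {"basis": basis}}


# Hypothetical co-products: the light main product carries most of the value.
coproducts = {
    "main_product": {"mass_kg": 20.0, "value_usd": 900.0},
    "by_product": {"mass_kg": 80.0, "value_usd": 100.0},
}

by_mass = allocate(100.0, coproducts, "mass_kg")
by_value = allocate(100.0, coproducts, "value_usd")
```

With these made-up inputs the main product carries 20 of 100 kgCO2e under mass allocation and 90 of 100 under economic allocation, which is why the chosen basis should travel with the reported number.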

Why now

The timing is not incidental. Pressure from **ESG disclosures** (e.g., CSRD, emerging SEC climate rules), customer mandates, and supply chain volatility is converging with **carbon pricing** and procurement’s **should-cost** expectations. At the same time, the widely cited rule of thumb that design decisions lock in roughly **70–80% of lifecycle cost and carbon** before release makes latency the enemy of leverage: waiting weeks for an external LCA or quoting cycle squanders the window in which geometry is still fluid. Meanwhile, energy grids are decarbonizing unevenly and supply routes are in flux, so static averages are less defensible. Teams need a live connection to data that understands time, geography, and uncertainty. Moreover, digital engineering stacks now provide the hooks (feature history, multi-body metadata, and material assignments) to infer reliable BOMs and process routes with little human friction. The convergence of modeling tool APIs, scalable estimation services, and versioned sustainability data means the barrier to live budgeting has fallen; inertia, not capability, is the primary hurdle. Organizations that codify budgets as they model will make better decisions faster, with traceable rationales that withstand external scrutiny, while laggards struggle to stitch together late spreadsheets and rework parts that never had a chance to meet their targets.

- External drivers: regulatory disclosures, customer supplier scorecards, insurer and lender risk models.

- Market factors: energy price swings, logistics disruptions, and regional content rules.

- Internal enablers: accessible CAD/BIM APIs, cloud microservices, ML surrogates, and curated data pipelines.

- Operational benefit: fewer late-stage surprises; faster, defensible sign-offs; reduced premium freight and expediting.

Where it fits and the target outcomes

The natural home for a live performance budget is a **heads-up display inside CAD/BIM/DfAM** where the designer’s attention already lives. The overlay connects to the **EBOM** as features evolve and to the **MBOM** as routing options crystallize, with the PLM system brokering change control and traceability. Under the hood it fuses LCA databases and EPDs for carbon, supplier quotes for price curves, plant calendars for capacity and takt-time effects, and **grid/transport models** for regional energy and logistics. The goal is not another dashboard, but direct behavioral change: immediate “what-if” deltas when swapping alloys; guardrails that prevent drifting beyond a cost or carbon envelope; and **audit-ready rationales** showing how and why each revision changed metrics. When budgets flow through the design graph, each change event becomes a moment to learn, adjust, or defend a choice. High-performing teams end up with cleaner releases, fewer ECOs due to budget overruns, and documentation that explains tradeoffs rather than hiding them. Critically, the overlay must be fast, minimally intrusive, and consistent across contributors, so that individual preferences do not Balkanize the discipline. With that foundation, the organization can scale from a pilot part family to a portfolio, from a single plant to a global footprint, all while preserving the integrity of targets and the fidelity of data.

- In-tool overlay: inline metrics, thresholds, and trend arrows; no context switch to external portals.

- Connected sources: EBOM/MBOM, PLM, LCA/EPD repositories, quoting systems, route and grid services.

- Outcomes: rapid “what-if” loops, budget guardrails, and explainable change histories with **evidence links**.

- Scalability: consistent patterns across teams; role-aware views for engineers, buyers, and program managers.

Architecture and Data Plumbing for Real-Time Metrics

Event-driven pipeline

A responsive performance budget starts with an **event-driven pipeline**. The modeling tool emits change events—feature edit, material assignment, pattern count, tolerance tweak—that stream to a service that performs incremental BOM inference. That inference emits deltas to microservices that estimate mass, cost, carbon, energy, water, and lead time; their responses drive a compact HUD update inside the modeling viewport. To keep interactions smooth, the pipeline uses subscription semantics over a message bus and caches stable subassemblies, so routine edits do not trigger full recomputes. The orchestration is optimized for **sub-200 ms updates**: a dependency graph identifies the smallest affected set of parts and processes, dirty nodes are scheduled in parallel, and results are memoized with content-addressable keys tied to geometry hashes and parameter fingerprints. The system gracefully degrades—if a heavy estimator misses the budgeted time box, a surrogate or last-known-good result feeds the HUD, annotated with uncertainty. This architecture turns the model history into a metric stream where each action is quickly reflected in the budget status, preserving the cognitive loop between intent, effect, and decision. Rather than waiting for nightly builds or manual refreshes, designers experience a living model whose economic and environmental posture is always visible and actionable.

- Inputs: feature deltas, property changes, configuration toggles, assembly operations, route selections.

- Core services: BOM inference, mass/geometry estimators, process route selector, cost/carbon calculators, lead-time forecaster.

- Transport: publish/subscribe messaging with backpressure and QoS for interactive latency.

- Optimization: incremental recomputation, parallel execution, and aggressive memoization on hash-stable subgraphs.
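
The graceful-degradation behavior described above (a time box per estimator, with a last-known-good fallback) can be sketched with Python's `asyncio`. This is an assumption-laden illustration: the estimator functions, the event payload fields, and the time-box values are all hypothetical stand-ins, not a real service.

```python
import asyncio


async def estimate_with_fallback(estimator, payload, last_known_good, time_box_s):
    """Run an estimator inside a time box; use the cached value on timeout."""
    try:
        value = await asyncio.wait_for(estimator(payload), timeout=time_box_s)
        return {"value": value, "source": "fresh"}
    except asyncio.TimeoutError:
        # The HUD can annotate this result as stale or uncertain.
        return {"value": last_known_good, "source": "last_known_good"}


async def fast_mass(payload):
    # Cheap estimate: volume times density, converted from grams to kilograms.
    return payload["volume_cm3"] * payload["density_g_cm3"] / 1000.0


async def slow_cycle_time(payload):
    await asyncio.sleep(0.5)  # stands in for a heavy simulation
    return 42.0


async def handle_event(event):
    """Fan one change event out to estimators and gather a HUD update."""
    mass, cycle = await asyncio.gather(
        estimate_with_fallback(fast_mass, event, None, time_box_s=0.2),
        estimate_with_fallback(slow_cycle_time, event, 40.0, time_box_s=0.05),
    )
    return {"mass_kg": mass, "cycle_time_s": cycle}


hud = asyncio.run(handle_event({"volume_cm3": 100.0, "density_g_cm3": 2.7}))
```

Here the mass estimate returns fresh, while the slow estimator misses its 50 ms box and the HUD receives the previous value tagged `last_known_good`. A production pipeline would sit behind a message bus rather than a direct function call.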

Dynamic BOM and feature extraction

Accurate budgets depend on a smart, **dynamic EBOM** derived from geometry and metadata. Mass is computed from kernel queries or adaptive tessellations, adjusted for hollows, foams, and lattices using heuristics or template annotations. Surface area and edge length inform finishing operations, while bounding boxes and draft analysis suggest manufacturability constraints. Multi-body logic maps solids to likely **process routes**—a thick billet-like block might favor CNC; a thin-walled complex interior might trigger DfAM with a specific support strategy; a high-volume symmetric shell points to molding. Material stacks are explicit: base alloy, heat treatment, primer, coating, adhesive layers—each with thickness, coverage, and cure energy. Fasteners and COTS parts are recognized using feature signatures and ML classifiers trained on vendor catalogs, enabling realistic cost and lead-time pulls. The extractor tags features like threaded holes, tight tolerances, surface finish classes, and overhang angles that feed estimators without a human in the loop. Because geometric fidelity matters, the extractor balances speed and precision by caching results for unchanged bodies and only re-evaluating affected metrics when thresholds are crossed. The result is a living BOM that represents not just parts, but their manufacturing intent and finishing realities, the very levers that drive cost and carbon.

- Geometry-derived properties: volume, mass, surface area, edge length, thin features, overhangs.

- Process mapping signals: tolerance bands, wall thickness histograms, n-up potential, draft presence, material rheology constraints.

- Material stacks: base material plus treatments, primers, coatings, and adhesives with coverage rules.

- Recognition: fasteners, bearings, motors, and other COTS via geometry + metadata + ML classifiers.
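
As a rough illustration of how an explicit material stack feeds the mass estimate, the sketch below computes base mass from volume and density and adds each layer from surface area, coverage, and thickness. The `Layer` class, the `mass_breakdown` function, and all property values are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One entry in a material stack (primer, coating, adhesive, ...)."""
    name: str
    thickness_mm: float
    density_kg_m3: float
    coverage: float = 1.0  # fraction of the surface area covered, 0..1


def mass_breakdown(volume_m3, base_density_kg_m3, surface_area_m2, layers,
                   fill_fraction=1.0):
    """Return per-contributor mass in kg plus the total.

    fill_fraction scales the solid volume for hollow, foam, or lattice bodies.
    """
    breakdown = {"base": volume_m3 * base_density_kg_m3 * fill_fraction}
    for layer in layers:
        thickness_m = layer.thickness_mm / 1000.0
        breakdown[layer.name] = (
            surface_area_m2 * layer.coverage * thickness_m * layer.density_kg_m3
        )
    breakdown["total"] = sum(breakdown.values())
    return breakdown


# Hypothetical part: 100 cm^3 of an aluminum-like alloy with two finish layers.
stack = [
    Layer("primer", thickness_mm=0.02, density_kg_m3=1200.0),
    Layer("coating", thickness_mm=0.05, density_kg_m3=1400.0, coverage=0.8),
]
masses = mass_breakdown(1e-4, 2700.0, 0.05, stack)
```

Keeping each layer as its own line item means a coating change shows up as its own delta instead of being buried in the base material.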

Data sources and normalization

Trustworthy numbers require trustworthy data with transparent lineage. For carbon, the pipeline integrates **EPDs**, **LCI databases**, regional **grid factors**, and transport modes and routes with time-varying energy mixes. For cost, it draws on **should-cost models** that decompose material, process, and overhead; supplier **price curves**; commodity, currency, and **inflation indices**; and logistics tariffs. Every factor is normalized to consistent units and stamped with geography, date, and version, so the estimator can assemble a coherent picture with uncertainty bounds. Where there are gaps, conservative defaults are flagged with their provenance, and the UI distinguishes between measured values and inferred surrogates. Because energy mixes and logistics evolve, the data service tracks temporal validity and can re-estimate upon date changes, a critical feature for programs that span multiple quarters. By harmonizing units and taxonomies—materials, processes, finishes, and transport modes—the system prevents hidden conversions and misclassification. Designers see not just a number, but the **provenance** behind it, which builds trust and enables audits. Crucially, the data service supports per-customer curation, allowing organizations to pin approved EPDs, vetted suppliers, and preferred process cards while still benefiting from global updates and corrections in a controlled manner.

- Carbon inputs: EPD references, LCI datasets, plant grid mixes, logistics route factors with time stamps.

- Cost inputs: material commodity indices, supplier breaks and MOQs, labor rates, machine rates, currency and inflation series.

- Normalization: canonical units (kg, kWh, L), taxonomy mapping, and uncertainty ranges (mean, p5–p95).

- Lineage: source, version, geography, validity window, and policy that chose each factor.
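
A minimal sketch of normalization plus lineage might look like the following: energy is converted to a canonical unit, a grid factor is selected by geography and validity window, and the result carries the factor's provenance. The `GridFactor` record, the region name, the source label, and the factor values are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date

# Conversion to the canonical energy unit (1 kWh = 3.6 MJ).
TO_KWH = {"kWh": 1.0, "MJ": 1.0 / 3.6, "Wh": 0.001}


@dataclass(frozen=True)
class GridFactor:
    kg_co2e_per_kwh: float
    geography: str
    valid_from: date
    valid_to: date
    source: str
    version: str


def pick_factor(factors, geography, on):
    """Choose the factor valid for this geography on the given date."""
    matches = [f for f in factors
               if f.geography == geography and f.valid_from <= on <= f.valid_to]
    if not matches:
        raise LookupError(f"no factor for {geography} on {on}")
    return max(matches, key=lambda f: f.valid_from)


def electricity_carbon(value, unit, factors, geography, on):
    """Return kgCO2e for an energy amount, with the lineage of the factor used."""
    f = pick_factor(factors, geography, on)
    kwh = value * TO_KWH[unit]
    return {
        "kg_co2e": kwh * f.kg_co2e_per_kwh,
        "lineage": {"source": f.source, "version": f.version,
                    "geography": f.geography,
                    "valid": (f.valid_from.isoformat(), f.valid_to.isoformat())},
    }


# Hypothetical factors for an unnamed region; the values are placeholders.
FACTORS = [
    GridFactor(0.40, "REGION_A", date(2024, 1, 1), date(2024, 12, 31), "demo-grid", "2024.1"),
    GridFactor(0.35, "REGION_A", date(2025, 1, 1), date(2025, 12, 31), "demo-grid", "2025.1"),
]
result = electricity_carbon(36.0, "MJ", FACTORS, "REGION_A", date(2025, 6, 1))
```

Re-running the same call with a 2024 date selects the older factor, which is the re-estimation-on-date-change behavior the paragraph above describes.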

Compute and integration choices

A practical deployment blends **hybrid edge/cloud** computation. On-device geometry processing ensures responsiveness and IP containment; the cloud handles ML surrogates for expensive physics (e.g., AM print time, toolpath-aware cycle estimates) and multi-source data fusion. Well-defined API contracts keep the system evolvable: stateless “estimate” endpoints accept a compact descriptor of geometry hashes, materials, and candidate routes, return summary statistics (mean, p5–p95), and embed **data lineage**. Endpoints expose both batch and streaming modes, the latter optimized for interactive edits. Security enforces least privilege and **role-based access control**, with immutable logs that link design revisions, estimator versions, inputs, and outputs; these logs dovetail with PLM change workflows, providing traceable approvals and audit trails. Integration adapters for major CAD/BIM/DfAM tools minimize friction, and a common schema bridges EBOM to MBOM, translating modeling choices into process steps with machine and site context. Reliability patterns—circuit breakers, retries, and cached fallbacks—preserve UX when services hiccup. With these choices, the platform supports both exploratory single-user sessions and multi-branch team programs without brittle dependencies, and it scales as estimator fidelity and data richness improve over time.

- Edge: geometry queries, feature extraction, quick heuristics, and HUD rendering.

- Cloud: ML surrogates, multi-source joins, historical analytics, and portfolio-level benchmarking.

- APIs: stateless estimate endpoints, streaming updates, and schemas with uncertainty and lineage.

- Governance: RBAC, immutable logs, PLM-aligned approvals, and encrypted data at rest and in transit.
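
The stateless estimate contract described above could take a shape like the sketch below: a compact request descriptor goes in, and summary statistics with lineage come out. The field names, the `FACTORS` table, and the symmetric spread used for p5 and p95 are assumptions made for illustration, not a published schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class EstimateRequest:
    geometry_hash: str
    material: str
    route: str
    mass_kg: float


@dataclass(frozen=True)
class Stat:
    mean: float
    p5: float
    p95: float


# Hypothetical factor table: kgCO2e per kg of material with a relative spread.
FACTORS = {
    "AL6061": {"mean": 8.0, "rel_spread": 0.25, "source": "demo-lci", "version": "2025.1"},
}


def estimate(req):
    """Pure function of the request: no session state, so results are cacheable."""
    f = FACTORS[req.material]
    mean = req.mass_kg * f["mean"]
    stat = Stat(mean=mean,
                p5=mean * (1 - f["rel_spread"]),
                p95=mean * (1 + f["rel_spread"]))
    return {
        "request": asdict(req),
        "carbon_kg_co2e": asdict(stat),
        "lineage": {"source": f["source"], "version": f["version"]},
        "estimator_version": "0.1-sketch",
    }


req = EstimateRequest("sha256:abc123", "AL6061", "cnc_3axis", 0.5)
response = estimate(req)
wire = json.dumps(response, sort_keys=True)  # what would travel over the API
```

Because the response echoes the request and names the estimator version, an immutable log of these payloads is enough to reconstruct why a number appeared in a given revision.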

Estimation Methods and Interaction Patterns That Work in Practice

Fast, layered estimators

Speed and accuracy are usually at odds, so practical systems use **layered estimators** that deliver good-enough answers quickly and refine as needed. Geometry-to-mass starts with accurate kernel volume times density, then applies heuristics for **hollow, foam, and lattice** content using metadata, detected patterns, or user overrides. Process route selection mixes rule-based filters (tolerances, wall thickness, n-up potential, volume) with ML ranking trained on historical routing decisions. Carbon models combine process-based LCA with input–output hybrids to cover data gaps; manufacturing steps pull regionalized energy mixes and yield assumptions; transport legs compute mode-specific factors from distance and load density. Cost models blend activity-based costing with parametric regressions; they include scrap rates, setup amortization, machine-hour rates, and operator takt time, interpolating supplier price curves where available. The key is composability: estimators emit not only a number but their **feature attributions**—which inputs drove the result—so the UI can explain deltas intelligibly. In the interactive loop, a fast approximation returns in tens of milliseconds, with a refinement pass following under a second; users perceive immediate guidance and then higher confidence. Because estimators are modular, teams can upgrade a specific process (e.g., die-casting energy) without destabilizing the rest of the stack.

- Mass estimation: kernel volume × density, scaled by lattice/foam fill factors; overridable with part-specific annotations.

- Route selection: rules to prune infeasible options; ML ranking to prioritize viable candidates by cost/carbon/lead time.

- Carbon estimation: hybrid LCA with regionalized energy and transport segmentation by mode and load factor.

- Cost estimation: activity-based with parametrics for cycle time, scrap/yield, setup, and capacity utilization.
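
To make the composability point concrete, here is a small sketch of two estimators that return a total together with a per-driver breakdown: an activity-based unit cost and a transport carbon sum over legs. The function names, the cost formula (sum of activities divided by the good-part fraction), and all rates and factors are simplifying assumptions with placeholder values.

```python
def unit_cost(material_kg, price_per_kg, cycle_h, machine_rate_per_h,
              setup_cost, batch_size, scrap_rate):
    """Activity-based unit cost with a breakdown usable as feature attribution."""
    parts = {
        "material": material_kg * price_per_kg,
        "machine_time": cycle_h * machine_rate_per_h,
        "setup": setup_cost / batch_size,  # setup amortized over the batch
    }
    subtotal = sum(parts.values())
    total = subtotal / (1.0 - scrap_rate)  # good parts absorb the scrapped ones
    parts["scrap_uplift"] = total - subtotal
    return total, parts


# Hypothetical emission factors in kgCO2e per tonne-km; placeholders only.
MODE_FACTORS = {"truck": 0.1, "ocean": 0.01}


def transport_carbon(mass_kg, legs):
    """Sum emissions over modular legs given as (mode, distance_km) pairs."""
    tonnes = mass_kg / 1000.0
    per_leg = [(mode, tonnes * km * MODE_FACTORS[mode]) for mode, km in legs]
    return sum(v for _, v in per_leg), per_leg


cost, cost_parts = unit_cost(0.3, 4.0, 0.05, 80.0, 200.0, 100, 0.10)
carbon, carbon_legs = transport_carbon(1.0, [("truck", 500.0), ("ocean", 10000.0)])
```

Returning the breakdown alongside the total is what lets a UI say which driver moved after an edit, rather than only reporting that the number changed.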

Uncertainty and sensitivity

Design decisions under uncertainty require tools that quantify and communicate it. Each input (density tolerance, scrap rate, energy factor, supplier price curve) carries a distribution, not a point. Estimators propagate these using analytical approximations or Monte Carlo samples to produce **mean and p5–p95 bands**, which the HUD renders as subtle error bars and traffic-light thresholds. Rather than hiding uncertainty, the interface makes it explanatory: a top-drivers panel reveals which materials, operations, or routes dominate variance, guiding where to tighten assumptions or seek quotes. One-click sensitivity scripts test **“±10% material density”** or **“change supplier region”**, showing deltas in cost and carbon along with elasticities. Designers learn whether a choice is robust or fragile and where a quick experiment could bring confidence. When a metric straddles a threshold, the HUD explains whether it’s within error tolerance or a genuine overrun. For program managers and buyers, uncertainty-aware budgets translate to fewer surprises and clearer negotiation targets; for sustainability teams, they provide the evidence to attach ranges, not false precision, to external statements. Over time, as actuals flow back from production and logistics, distributions narrow, closing the loop between modeled intent and realized performance.

- Propagation: analytical approximations for speed; Monte Carlo for complex, non-linear cases.

- Presentation: error bands, sensitivity bars, and top-driver rankings embedded in the modeling viewport.

- Actions: one-click toggles for material, route, and region; saved scenarios with named assumptions.

- Learning: feed actuals to recalibrate priors and reduce estimator variance continuously.
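
The propagation and sensitivity steps above can be sketched with the standard library alone. The example assumes a deliberately simple carbon model (volume × density × emission factor), a normal distribution for density, and a triangular distribution for the factor; those distribution choices and all parameter values are illustrative assumptions.

```python
import random
import statistics


def carbon_kg(volume_m3, density_kg_m3, factor_kg_per_kg):
    """Toy model: part mass times a per-kg emission factor."""
    return volume_m3 * density_kg_m3 * factor_kg_per_kg


def monte_carlo(volume_m3, n=5000, seed=7):
    """Propagate input distributions and summarize as mean and p5-p95."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        density = rng.gauss(2700.0, 27.0)        # 1% standard deviation
        factor = rng.triangular(6.0, 10.0, 8.0)  # low, high, mode
        samples.append(carbon_kg(volume_m3, density, factor))
    samples.sort()
    return {
        "mean": statistics.fmean(samples),
        "p5": samples[int(0.05 * n)],
        "p95": samples[int(0.95 * n)],
    }


def density_sensitivity(volume_m3, density, factor, rel_step=0.10):
    """One-click style test: shift density by +/- rel_step and report elasticity."""
    base = carbon_kg(volume_m3, density, factor)
    low = carbon_kg(volume_m3, density * (1 - rel_step), factor)
    high = carbon_kg(volume_m3, density * (1 + rel_step), factor)
    elasticity = ((high - low) / base) / (2 * rel_step)
    return {"low": low, "base": base, "high": high, "elasticity": elasticity}


band = monte_carlo(1e-4)
sens = density_sensitivity(1e-4, 2700.0, 8.0)
```

In this toy model the elasticity to density is 1 because the relationship is linear; in a real estimator, non-linear effects such as yield or route changes are where sensitivity runs earn their keep.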

Real-time performance techniques

Responsiveness is a product of algorithmic choices and pragmatic engineering. An incremental recompute graph identifies dependencies between geometry features, BOM items, and estimator inputs, allowing **dirty-node scheduling** so only affected metrics recompute. Subassemblies are memoized with content-addressable keys so minor edits avoid expensive recalculations. For heavy physics—toolpath simulation, thermal modeling, AM print time—lightweight **ML surrogates** trained on high-fidelity runs return results in milliseconds while flagging when predictions fall outside their training domain. Budget thresholds compile into lightweight predicates that provide instant **pass/fail** feedback before full recompute finishes, preserving the flow. Caches are versioned and tenancy-aware to keep IP boundaries intact. On the client, rendering uses a compact HUD and avoids layout thrash; updates push small deltas rather than full redraws. The system monitors its own latency budget, adapting sampling rates when the user is in a high-edit phase versus slower inspection. By treating performance as a requirement equal to accuracy, teams achieve the holy grail: a budget that feels alive, trustworthy, and unobtrusive, turning metric awareness into a natural part of modeling rather than a chore that interrupts it.

- Graph-based recompute: dependency edges across features, BOM lines, and estimator inputs.

- Memoization: content-addressable caches keyed by geometry and parameter hashes.

- Surrogates: ML approximations with out-of-distribution guards and automatic fallback to full physics when needed.

- Immediate predicates: compiled thresholds for instant HUD signals independent of full estimator completion.
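
A compact way to illustrate incremental recompute is content-addressed memoization over a dependency graph: each node's cache key is a hash of its name and input values, so only nodes whose inputs actually changed are re-evaluated. The `RecomputeGraph` class and the toy metric functions below are hypothetical; a real system would also schedule dirty nodes in parallel, which this sketch omits.

```python
import hashlib
import json


class RecomputeGraph:
    """Dependency graph with a content-addressable result cache."""

    def __init__(self):
        self.inputs = {}      # leaf name -> value
        self.nodes = {}       # node name -> (dependency names, function)
        self.cache = {}       # content key -> computed value
        self.evaluations = 0  # counts real function calls, for illustration

    def set_input(self, name, value):
        self.inputs[name] = value

    def add_node(self, name, deps, fn):
        self.nodes[name] = (deps, fn)

    def value(self, name):
        if name in self.inputs:
            return self.inputs[name]
        deps, fn = self.nodes[name]
        args = [self.value(d) for d in deps]
        # Key depends only on the node and its input content, not on edit history.
        key = hashlib.sha256(json.dumps([name, args]).encode()).hexdigest()
        if key not in self.cache:
            self.cache[key] = fn(*args)
            self.evaluations += 1
        return self.cache[key]


g = RecomputeGraph()
g.set_input("volume_m3", 1e-4)
g.set_input("density", 2700.0)
g.set_input("coating_area_m2", 0.05)
g.add_node("mass", ["volume_m3", "density"], lambda v, d: v * d)
g.add_node("material_carbon", ["mass"], lambda m: m * 8.0)       # placeholder factor
g.add_node("coating_carbon", ["coating_area_m2"], lambda a: a * 0.5)
g.add_node("total_carbon", ["material_carbon", "coating_carbon"], lambda a, b: a + b)

first = g.value("total_carbon")
evals_after_first = g.evaluations          # all four nodes computed
g.set_input("coating_area_m2", 0.06)       # a minor edit to one branch
second = g.value("total_carbon")
evals_after_edit = g.evaluations           # only two nodes recomputed
```

After the coating edit, `mass` and `material_carbon` are served from the cache, and only `coating_carbon` and `total_carbon` run again. Undoing the edit would cost nothing, since the earlier keys are still cached.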

UX patterns inside modeling tools

Good UX makes budgets usable; great UX makes them influential. A compact, always-on **heads-up display** shows current versus target, trend arrows, and traffic-light states; hovering reveals delta-to-last-change annotations that explain why the number moved. Scenario toggles let designers evaluate alternate materials, processes, and sites side-by-side with cost/carbon **waterfalls** that break down the contributions of materials, energy, logistics, and yield. Guardrails combine soft nudges—gentle notifications when drift begins—with hard gates at release when a metric violates policy; the system can auto-suggest replacements ranked by a **multi-objective score** that balances cost, carbon, and lead time. Collaboration flows allow per-branch budgets, threaded comments bound to metric deltas, and decision capture with links to evidence—EPDs, quotes, and approvals—so knowledge survives handoffs. Role-aware views tailor detail: CAD-centric overlays for designers; rollups and variance drivers for program managers; supplier and route comparators for buyers. By aligning interaction patterns with the rhythms of modeling, the budget becomes an ally to creativity, not a bureaucratic overlay. The UX respects focus, provides clarity, and invites exploration, so better choices happen naturally and earlier.

- HUD essentials: target vs. actual, trend, error bands, and last-change attribution in context.

- Scenario kits: material/process/site toggles with side-by-side comparisons and saved alternatives.

- Guardrails: soft nudges during exploration; hard gates and auto-suggest at release checkpoints.

- Team workflows: branch-level budgets, threaded rationale, and evidence attachments aligned with PLM.
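
The text does not specify how the multi-objective score is built. One simple possibility, shown here purely as an assumption, is a weighted sum of each metric normalized by its target, with lower scores ranking first. The option names, targets, and weights are invented.

```python
def score(option, targets, weights):
    """Weighted sum of metric/target ratios; lower is better."""
    return sum(weights[m] * option[m] / targets[m] for m in weights)


def rank(options, targets, weights):
    """Return option names ordered from best to worst score."""
    return sorted(options, key=lambda name: score(options[name], targets, weights))


# Hypothetical alternatives for one part.
options = {
    "alloy_A_local": {"cost": 10.0, "carbon": 4.0, "lead_time": 20.0},
    "alloy_B_recycled": {"cost": 12.0, "carbon": 2.0, "lead_time": 20.0},
    "alloy_A_offshore": {"cost": 9.0, "carbon": 5.0, "lead_time": 30.0},
}
targets = {"cost": 12.0, "carbon": 4.0, "lead_time": 21.0}
weights = {"cost": 0.4, "carbon": 0.4, "lead_time": 0.2}

ranking = rank(options, targets, weights)
```

With these weights the recycled alloy ranks first despite the highest cost, because its carbon is half the target. Changing the weights changes the ranking, so the weights themselves belong in the recorded rationale.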

Conclusion

From retrospective to actionable

Embedding live cost and **carbon metrics** into modeling shifts decisions upstream, precisely when leverage is highest. A budget that updates in response to feature edits, material swaps, and route choices turns static accounting into a creative constraint—one that teaches, guides, and justifies. The **winning stack** is not a single product but a composition: event-driven architecture for responsiveness; geometry-aware inference for reliable EBOMs; trustworthy, versioned data for carbon, cost, energy, and logistics; and layered estimators that balance speed with fidelity and express uncertainty, not false precision. When this stack is wrapped in a clear UX that explains tradeoffs—why mass fell but cycle time rose, how transport mode choice eclipses material differences—the conversation changes from “how did we miss?” to “which path dominates our budget and why?” The practical effect is fewer late ECOs, tighter supplier negotiations grounded in **should-cost** evidence, and sustainability claims that survive audit because boundary and allocation choices are explicit. Most importantly, the budget becomes a shared language across engineering, procurement, manufacturing, and sustainability teams, aligning incentives and shortening the distance from intent to outcome. Numbers alone don’t change behavior; live, explainable, and contextual metrics do.

- Shift: from post-hoc reporting to in-context guardrails and feedback loops during modeling.

- Stack: event-driven pipeline, dynamic BOM, curated data with lineage, fast estimators with uncertainty.

- Outcome: better, earlier tradeoffs; traceable rationales; resilient releases that meet cost and carbon targets.

Practical adoption path and long-term vision

Start small, then scale with confidence. Choose one product line, a curated set of materials and processes, and a few clear targets; wire the **live overlay** in the modeling tool and connect a minimum viable data backbone with versioned EPDs and supplier curves. Iterate on estimator fidelity as adoption grows, capturing actuals to tighten uncertainty and tuning UX to fit team habits. Expand across routes and regions only when data quality and governance can keep pace. Over time, the live performance budget evolves from a helpful overlay to a **governance backbone**: it closes the loop from design intent to compliant, cost-efficient, and low-carbon products at scale. Immutable logs align with PLM workflows, approvals are tied to metric states, and policy gates are enforced consistently across programs. Portfolio analytics roll up savings and emissions pathways, informing strategy and supplier development. As grids decarbonize and supply networks shift, the system’s temporal and geographic awareness keeps decisions current. The north star is simple: make the right choice the easy choice by putting cost, carbon, energy, water, and lead-time truth in the designer’s line of sight, updated as fast as creativity moves. When that happens, budgets stop being retrospective scorecards and become instruments of design.

- Adopt: pilot scope, curated data, tight UX; grow by feedback and actuals-driven learning.

- Govern: role-based access, immutable logs, PLM alignment, and policy gates anchored in live metrics.

- Scale: from part to portfolio, site to network, with trustworthy data and estimators that improve continuously.

Also in Design News

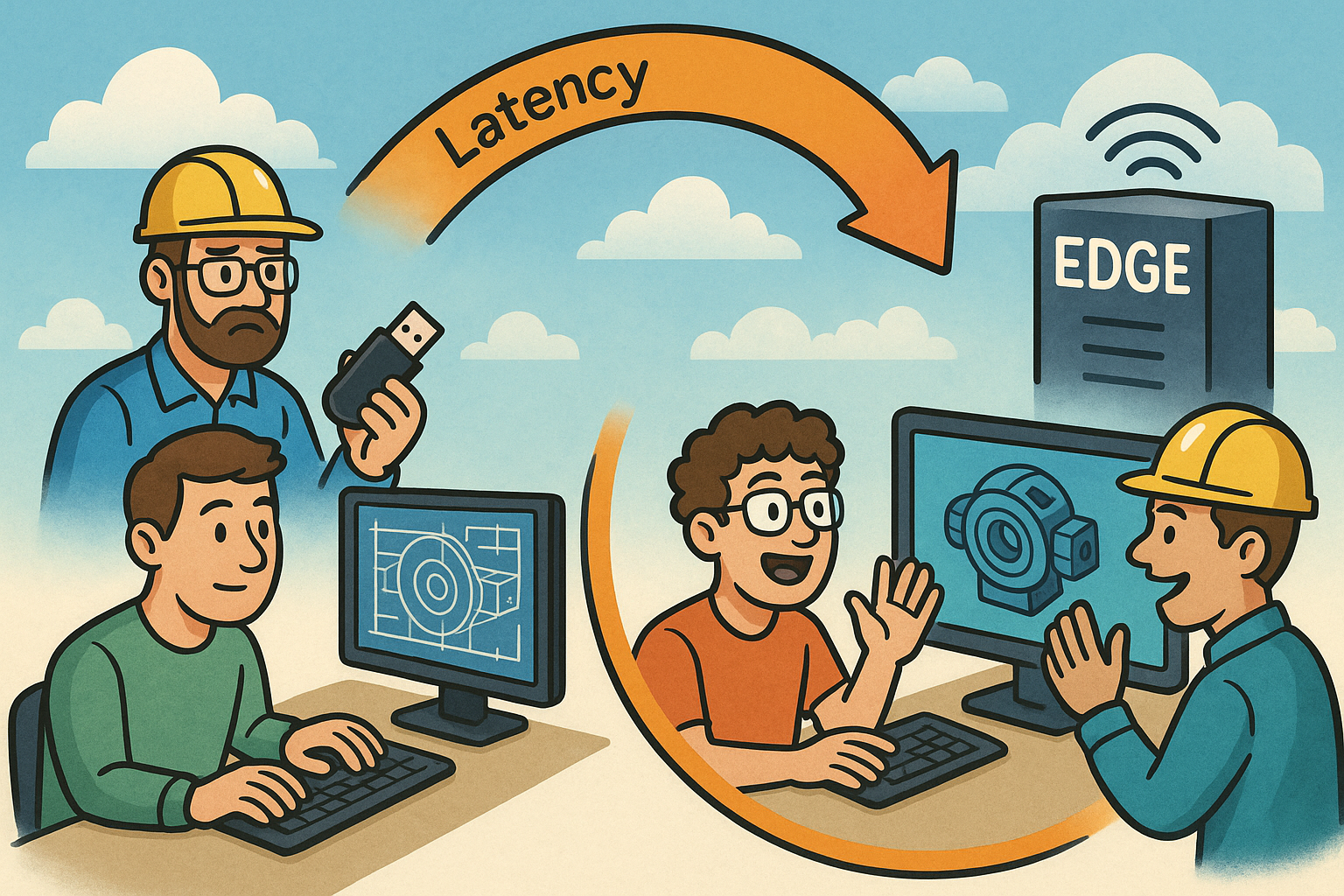

Design Software History: From File-Shuttling to Edge: The Latency Evolution of Collaborative CAD and Real-Time Visualization

December 11, 2025 13 min read

Read More

Cinema 4D Tip: Cinema 4D Soft Body Quick Setup & Parameter Tuning

December 11, 2025 2 min read

Read More

AutoCAD Tip: Annotate and Plot from Paper Space for Consistent Sheets

December 11, 2025 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …