Tamper-Evident Design Histories: Cryptographic Provenance, Append-Only Logs, and Deterministic Rebuilds

January 22, 2026 11 min read

Introduction

Context and intent

The design history of modern products is no longer a collection of editable documents; it is a continuous stream of decisions, parameter tweaks, tolerance edits, simulation reruns, manufacturing adjustments, and stakeholder approvals. In regulated domains, that stream must serve as **auditable, tamper-evident evidence** rather than a narrative reconstructed from memory and screenshots. Yet, many organizations still rely on conventional PDM/CAD histories and scattered PDFs, which yield brittle, unverifiable trails. This article presents a practitioner’s blueprint for turning design activity into **cryptographically verifiable provenance**, with minimal disruption to engineering flow. We will map the regulatory drivers and the practical threat model, then outline a reference architecture centered on **append-only event logs**, **content addressing**, and **per-event digital signatures**. Finally, we translate these ideas into product workflows—from CAD instrumentation and deterministic rebuilds to Part 11-compliant approvals and human-centered diff views—and close with pragmatic rollout steps and success metrics. The goal is not hype, but a system that lets engineers work quickly while giving compliance teams and auditors machine-verifiable proof of integrity, authorship, and timing. With the right stack, **compliance, trust, and velocity** can rise together.

Why auditable, tamper-evident change logs matter in regulated design

Regulatory drivers and audit expectations

Across regulated industries, expectations converge on traceable, controlled design history that stands as legal-grade evidence. Medical device teams face the trio of **FDA 21 CFR Part 11** (electronic records and signatures), **21 CFR 820** (design controls), and **ISO 13485**, all of which imply a lineage from requirements to design outputs, verification, and validation with **e-signatures that capture intent**. Aerospace and defense introduce **AS9100** quality management, plus development assurance and traceability under **DO-254/DO-178C**, with EASA Part 21 reinforcing airworthiness evidence needs. Automotive organizations align with **IATF 16949** and **ISO 26262** where the safety case must trace hazards to requirements, implementation, test results, and change impacts. Meanwhile, Model-Based Definition (MBD) emphasizes **ASME Y14.41**, treating **PMI and GD&T as controlled records**, not annotations you can casually move or reinterpret.

In practice, auditors now expect: unbroken evidence chains; repeatable exports (STEP/3MF) that reproduce identical content digests; change impact links to requirements and risk analyses; and **trusted timestamps** to anchor approvals. The implications are clear. Engineering tools must produce and preserve: clear semantic diffs, authorship and intent, ordering, and event-level integrity. If the record can be silently altered, backdated, or lost in coarse versioning, it will not withstand scrutiny. Teams that elevate design history into a cryptographically verifiable asset find their audits become faster, more predictable, and less contentious, because **proof replaces persuasion**.

Where conventional PDM/CAD histories fall short

Traditional file-based versioning and PDM check-ins were not designed to serve as **tamper-evident evidence**. Mutable feature trees allow retroactive edits that are difficult to detect, and metadata can be overwritten or “corrected” without a forensic trail. Many systems track at the document level, missing the granularity of changes—parameter deltas, mate edits, PMI updates, or alterations in CAM and FEA derivatives—that matter to quality and safety. Weak identity handling can blur authorship, while timestamping without trusted anchors enables backdating. And while screenshots, PDFs, or email threads feel reassuring, they are brittle: ambiguous, unauthenticated, and easy to spoof or misfile.

Common failure patterns include:

- Coarse versioning that treats all edits as file-level events, obscuring “what actually changed.”

- Absence of canonical hashing for payloads (B-Rep, PMI, toolpaths), making content comparison unreliable.

- Rewrites of historical entries via admin powers; insufficient WORM protections to prevent retroactive edits.

- Fragmented derivative tracking: CAM post changes or FEA boundary adjustments uncaptured by PDM.

- Approval logs that record “who clicked” but not **intent, reason, and cryptographically bound signatures**.

When these gaps surface in audits, teams spend cycles reconstructing context from artifacts that were never meant to be evidentiary. A robust solution makes **fine-grained, canonicalized events** first-class citizens and binds them with **signatures and hash links**, so that omissions or silent edits show up as broken links or failed signature checks the moment the log is verified.

Threat model and scope of proof

Design history must resist both adversarial and accidental failure modes. The threat model spans insider edits without approval, backdated entries, silent deletes, “lost” versions due to migration, and tampering with derivative artifacts like CAM or simulation decks. It also includes misconfigured time sources, ambiguous identity mapping, and weak policy enforcement that allows exports of uncontrolled geometry. Against these risks, the system should guarantee: **integrity** (no undetected edits), **authorship** (provable signer identity), **ordering** (immutable sequencing), **time anchoring** (trusted timestamps), and **revocation/auditability** (visibility into key or approval revocations).

To meet these guarantees, the log must capture more than “document changed.” It should record:

- Parametric edits, feature operations, datum and reference changes, and suppression state transitions.

- Assembly constraints and mating graphs; PMI/GD&T updates and **material and tolerance changes**.

- Derivatives: CAM toolpath parameters, build orientations/supports for AM, and FEA setups and results.

- Exports: STEP, 3MF, JT, STL, with canonicalized digests and exporter configuration fingerprints.

Finally, scope the proof boundary realistically. Not every pixel of a screenshot needs logging; what matters are **canonical, reproducible representations** and their semantics. When auditors ask, “Who changed the hole pattern count and why?” the system should surface an **append-only event** with a semantic diff, a signature, a trusted timestamp, and links to the requirement, risk, and test evidence impacted by that change.

Tamper-evidence building blocks and a reference architecture

Core primitives

At the heart of tamper-evidence is an **append-only event log**. Each per-artifact stream forms a hash chain (every event references the previous event’s digest), and cross-artifact integrity is maintained with a **Merkle DAG** that captures assemblies, derivatives, and dependent records. All payloads—B-Rep geometry, meshes, PMI, BOM, CAM, and FEA decks—are addressed by content via **canonical hashing** so identical content has identical digests regardless of file name or storage path. Every event is signed by the actor’s private key, producing **per-event digital signatures** that bind the signer’s identity, the payload digests, and the event metadata (intent, reason codes). To anchor time, attach **RFC 3161-compliant trusted timestamps**, optionally committing Merkle roots to a public transparency log or blockchain for independent verifiability.
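
The per-artifact hash chain described above can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: the `GENESIS` placeholder, the event body fields, and the function names are assumptions made for the example, and signing and timestamping are deliberately left out.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder digest for the first event in a stream


def event_digest(prev_digest: str, body: dict) -> str:
    """Hash the previous digest together with a canonical JSON form of the body."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_digest + canonical).encode("utf-8")).hexdigest()


def append_event(log: list, body: dict) -> dict:
    """Append an event that references the digest of the previous entry."""
    prev = log[-1]["digest"] if log else GENESIS
    entry = {"prev": prev, "body": body, "digest": event_digest(prev, body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every link; an edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["digest"] != event_digest(prev, entry["body"]):
            return False
        prev = entry["digest"]
    return True


log = []
append_event(log, {"actor": "alice", "change": "hole pattern count 6 -> 8"})
append_event(log, {"actor": "bob", "change": "tolerance 0.05 -> 0.02"})
chain_ok_before = verify_chain(log)

log[0]["body"]["change"] = "hole pattern count 6 -> 7"  # simulated silent edit
chain_ok_after = verify_chain(log)
```

Note that a chain on its own cannot reveal truncation of the most recent events; that is one reason the latest digest is anchored externally with timestamps or a public commitment.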

Keys deserve first-class treatment. Assign per-user keys housed in HSMs or secure enclaves; rotate keys using forward-secure or hierarchical schemes; maintain certificate chains and revocation lists; and enforce dual-control for safety-critical approvals. These primitives are not hype; they are the minimal cryptographic toolbox required to answer: “Did this event happen in this order, by this person, over this content, no later than this time?” A trusted timestamp proves that the signed digest existed at or before the stamped time, so a record created later cannot claim an earlier anchor. The answer should be machine-verifiable, reducing audit friction and **elevating trust from policy to proof**.

Data and storage design

Tamper-evidence collapses without **canonicalization**. Use deterministic kernel exports; normalize units, tolerances, and attribute ordering; and stabilize identifiers so that identical geometry produces identical digests across sessions and hosts. Store large artifacts in chunked, deduplicated object storage; delta strategies for assemblies can capture structural changes without re-storing the world. Maintain the log index on **WORM storage** with immutability enforcement and retention policies that match regulatory obligations. This is where engineering meets operations: stability and reproducibility become infrastructure concerns as much as UI concerns.

Design considerations include:

- Canonical serialization of PMI/GD&T and material definitions to avoid spurious diffs.

- Chunking that aligns with geometric or topological boundaries to maximize deduplication effectiveness.

- Snapshot-plus-event reconciliation to detect drift between expected and actual exports.

- Separate encryption domains for payload confidentiality vs. public digest verifiability.

The result is a storage layer where **content addresses content**, deduplication keeps costs sane, and immutability features eliminate backdoor edits. This is the substrate upon which the **append-only log** derives its practical value.
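
To make the canonicalization point concrete, here is a small sketch of content addressing for a tolerance record. The record fields, the unit table, and the six-decimal rounding policy are illustrative assumptions; a real system would canonicalize kernel exports and PMI with far more care.

```python
import hashlib
import json

UNIT_TO_MM = {"mm": 1.0, "cm": 10.0, "in": 25.4}  # illustrative unit table


def canonicalize(record: dict) -> bytes:
    """Normalize units to millimetres, fix precision, and sort keys."""
    scale = UNIT_TO_MM[record["unit"]]
    normalized = {
        "feature": record["feature"],
        "unit": "mm",
        # Fixed-precision strings avoid float formatting differences across hosts.
        "nominal": f"{record['nominal'] * scale:.6f}",
        "tolerance": f"{record['tolerance'] * scale:.6f}",
    }
    return json.dumps(normalized, sort_keys=True, separators=(",", ":")).encode("utf-8")


def content_address(record: dict) -> str:
    """Derive the storage address from the canonical bytes only."""
    return "sha256:" + hashlib.sha256(canonicalize(record)).hexdigest()


class ContentStore:
    """Toy content-addressed store: identical canonical content is kept once."""

    def __init__(self):
        self.blobs = {}

    def put(self, record: dict) -> str:
        address = content_address(record)
        self.blobs.setdefault(address, canonicalize(record))
        return address


store = ContentStore()
# Same hole, expressed in different units and key order.
addr_a = store.put({"feature": "hole_1", "unit": "mm", "nominal": 25.4, "tolerance": 0.0254})
addr_b = store.put({"tolerance": 0.001, "nominal": 1.0, "unit": "in", "feature": "hole_1"})
# A genuinely different tolerance gets a different address.
addr_c = store.put({"feature": "hole_1", "unit": "mm", "nominal": 25.4, "tolerance": 0.05})
```

Because the address depends only on canonical bytes, the first two records deduplicate to a single blob while the real tolerance change produces a new address.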

Ledger and database choices, identity, and policy

There is no one-size ledger. A pragmatic approach is a cryptographically verifiable log atop a relational/OLTP core (think **PostgreSQL plus cryptographic accumulators** or Merkleized indices) to preserve query flexibility while delivering provable history. Managed ledger databases such as Amazon **QLDB** have offered built-in verification and history queries, though AWS has announced end of support for QLDB, so confirm current availability before building on one. For multi-organization collaboration or supplier boundaries, a permissioned blockchain (e.g., **Hyperledger Fabric**) can establish shared trust without exposing sensitive payloads. Regardless of the primary store, publish periodic Merkle roots to a **certificate-transparency-style public anchor** for third-party verifiability without leaking design data.
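
As a rough sketch of what publishing a Merkle root buys you, the following builds a tree over a batch of event digests and checks an inclusion proof against the root. The tree layout (unpaired nodes promoted unchanged, with `0x00`/`0x01` domain-separation prefixes) is an assumption chosen for brevity, not the exact construction used by any particular transparency log, and the event digests are hypothetical.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(event_digest: str) -> bytes:
    return _h(b"\x00" + event_digest.encode("utf-8"))


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def build_levels(leaves: list) -> list:
    """Return all tree levels, leaves first. Assumes at least one leaf."""
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        current, nxt = levels[-1], []
        for i in range(0, len(current), 2):
            if i + 1 < len(current):
                nxt.append(node_hash(current[i], current[i + 1]))
            else:
                nxt.append(current[i])  # unpaired node is promoted unchanged
        levels.append(nxt)
    return levels


def inclusion_proof(levels: list, index: int) -> list:
    """Collect (sibling_hash, sibling_is_left) pairs from leaf to root."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling < len(level):
            proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify_inclusion(leaf: bytes, proof: list, root: bytes) -> bool:
    acc = leaf
    for sibling, sibling_is_left in proof:
        acc = node_hash(sibling, acc) if sibling_is_left else node_hash(acc, sibling)
    return acc == root


event_digests = ["evt-a", "evt-b", "evt-c"]  # hypothetical digests from the event log
leaves = [leaf_hash(d) for d in event_digests]
levels = build_levels(leaves)
root = levels[-1][0]  # only this value would be published

proof_c = inclusion_proof(levels, 2)
included = verify_inclusion(leaves[2], proof_c, root)
forged = verify_inclusion(leaf_hash("evt-x"), proof_c, root)
```

A third party holding only `root` can confirm that a given event was part of the batch without ever seeing the other events or any design payload.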

Identity and policy close the loop. Integrate SSO, map **RBAC/ABAC** to PLM roles, and enforce **dual-control** for critical approvals. A policy engine should gate high-risk actions, such as: “no export without approver signature,” “tolerance edits trigger re-approval,” or “safety-related constraints require multi-sig.” Preserve an immutable linkage to ECO/ECR/CAPA and maintain a provenance graph aligned with **W3C PROV**, so every design element can trace back to its sources, transformations, and responsible actors. In effect, you convert policy from guidelines into **enforceable, evidenced workflows**.
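
The three example policies quoted above can be expressed as small rule functions. This is a toy sketch under assumed names (`Action`, the role strings, and the rule set are all hypothetical); a production policy engine would evaluate verified signatures rather than a plain role-to-signer mapping.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str    # "export", "tolerance_edit", or "safety_constraint_edit"
    actor: str
    signatures: dict = field(default_factory=dict)  # role -> signer id


def export_needs_approver(action):
    if action.kind == "export" and "approver" not in action.signatures:
        return "no export without approver signature"
    return None


def tolerance_needs_reapproval(action):
    if action.kind == "tolerance_edit" and "approver" not in action.signatures:
        return "tolerance edits trigger re-approval"
    return None


def safety_needs_multisig(action):
    if action.kind != "safety_constraint_edit":
        return None
    required = {"design", "quality"}
    signers = {action.signatures.get(role) for role in required}
    # Dual control: every required role is present and held by distinct people.
    if None in signers or len(signers) < len(required):
        return "safety-related constraints require multi-sig"
    return None


RULES = (export_needs_approver, tolerance_needs_reapproval, safety_needs_multisig)


def evaluate(action: Action) -> list:
    """Return violated rules; an empty list means the action may proceed."""
    return [msg for msg in (rule(action) for rule in RULES) if msg]


blocked = evaluate(Action("export", "alice"))
allowed = evaluate(Action("export", "alice", {"approver": "carol"}))
same_person = evaluate(
    Action("safety_constraint_edit", "alice", {"design": "alice", "quality": "alice"})
)
two_people = evaluate(
    Action("safety_constraint_edit", "alice", {"design": "alice", "quality": "dave"})
)
```

Keeping each rule as a separate function makes the violation messages auditable in their own right: the log can record which rule blocked which action.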

From CAD UI events to auditable evidence: productizing the workflow

Instrumentation and event semantics

The path from a designer’s click to **auditable evidence** begins with instrumentation. SDK hooks should fire at the feature, parameter, and constraint level; assembly views must emit events for mate graph changes and suppression states; and MBD updates need explicit PMI/GD&T deltas rather than opaque file saves. Events must encode canonical semantic diffs such as “constraint type changed,” “tolerance ±0.05 → ±0.02,” or “hole pattern count 6 → 8,” with stable identifiers for affected features and faces. Coverage must extend to derivatives: CAM toolpath parameters, tool libraries, post-processor versions, additive build orientation/support strategies, and FEA boundary conditions, meshing parameters, and solver versions. Treat these as first-class payloads with **content addressing** and signatures so that manufacturing or analysis changes cannot travel under the radar.

For clarity and adoption, define a domain-specific vocabulary of change types and reference schemas. Practical tips include:

- Emit both “before” and “after” digests and include a small human-readable summary string.

- Record motive metadata (intent and reason codes) at source to eliminate brittle later annotations.

- Associate events with requirement IDs and risk items at capture time, not after the fact.

- Batch micro-events when needed, but maintain a cryptographic digest that preserves each atomic step.

With these semantics, you replace narrative reconstruction with **machine-inspectable facts** aligned to engineering language.
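
One way to picture such an event is a small record carrying before and after digests, a readable summary, and the motive metadata captured at source. The field names, the `DESIGN_OPTIMIZATION` reason code, and the `REQ-112` requirement ID below are hypothetical, chosen only to mirror the tips above.

```python
import hashlib
import json
from dataclasses import dataclass


def digest(obj) -> str:
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ChangeEvent:
    feature_id: str         # stable identifier of the affected feature
    change_type: str        # entry from the change-type vocabulary
    before_digest: str
    after_digest: str
    summary: str            # short human-readable description
    reason_code: str        # motive captured at source
    requirement_ids: tuple  # links recorded at capture time


def semantic_diff(feature_id, before, after, reason_code, requirement_ids):
    """Emit one event per parameter that actually changed."""
    events = []
    for key in sorted(set(before) | set(after)):
        old, new = before.get(key), after.get(key)
        if old == new:
            continue
        events.append(ChangeEvent(
            feature_id=feature_id,
            change_type=f"{key}_changed",
            before_digest=digest({key: old}),
            after_digest=digest({key: new}),
            summary=f"{key} {old} -> {new}",
            reason_code=reason_code,
            requirement_ids=tuple(requirement_ids),
        ))
    return events


before = {"count": 6, "tolerance_mm": 0.05}
after = {"count": 8, "tolerance_mm": 0.05}
events = semantic_diff("hole_pattern_7", before, after, "DESIGN_OPTIMIZATION", ["REQ-112"])
```

Here only the `count` parameter changed, so a single event is emitted; the unchanged tolerance produces nothing, which keeps the log free of spurious diffs.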

Determinism and reproducibility

Auditors and safety teams do not want “similar.” They want “identical under defined conditions.” That standard hinges on **rebuild determinism**. Seed geometric solvers, fix tolerance strategies, and pin versioned dependencies to guarantee that rebuilding from event history reproduces the same B-Rep and PMI digests. When you export STEP, 3MF, JT, or STL, run **reproducible pipelines** with stable identifiers and normalized attribute ordering. Maintain a snapshot-plus-event reconciliation: if a snapshot deviates from the event-derived digest, flag it as drift and surface a remediation flow.

Determinism also applies to derivatives:

- CAM: pin post-processor versions, spindle and feed tables, tool libraries, and material models.

- Additive: capture build plate coordinates, orientation algorithms, and support generation parameters.

- FEA: record boundary conditions, mesh seeds, solver versions, and random seeds for stochastic steps.

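Finally, embed **trusted timestamps** into builds and exports. A replay should verify content digests, order proofs, signatures, and time anchors. When two teams independently rebuild an artifact from the same event history and produce matching digests, both rebuilds verify against the signatures already recorded in the log. That is the powerful property: **reproducible builds yield identical digests**, so existing signatures keep verifying, which shrinks audit cycles and eliminates ambiguity. The signature bytes themselves need not match across signers, since they depend on each signer's key and, in many schemes, on per-signature randomness.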
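
Snapshot-plus-event reconciliation can be sketched as replaying the event history into a state, hashing that state together with the pinned environment, and comparing against the stored snapshot digest. The event shape (`op`, `param`, `value`) and the `PINNED_ENV` contents are assumptions for illustration; a real rebuild would run the geometry kernel rather than update a dictionary.

```python
import hashlib
import json

# Hypothetical pinned toolchain; in practice this would name real kernel,
# exporter, and solver versions.
PINNED_ENV = {"kernel": "example-kernel 1.0", "exporter": "example-step 2.3", "solver_seed": 42}


def state_digest(state: dict, env: dict) -> str:
    """Digest the rebuilt state together with the environment that produced it."""
    doc = {"env": env, "state": state}
    data = json.dumps(doc, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def replay(events: list) -> dict:
    """Apply events in order; the result depends only on the event history."""
    state = {}
    for event in events:
        if event["op"] == "set":
            state[event["param"]] = event["value"]
        elif event["op"] == "delete":
            state.pop(event["param"], None)
        else:
            raise ValueError(f"unknown op: {event['op']}")
    return state


def reconcile(events: list, snapshot_digest: str, env: dict = PINNED_ENV) -> dict:
    """Compare the event-derived digest with a stored snapshot digest."""
    rebuilt = state_digest(replay(events), env)
    return {"rebuilt": rebuilt, "snapshot": snapshot_digest, "drift": rebuilt != snapshot_digest}


events = [
    {"op": "set", "param": "hole_count", "value": 6},
    {"op": "set", "param": "tolerance_mm", "value": 0.05},
    {"op": "set", "param": "hole_count", "value": 8},
]

# Two sites rebuilding independently from the same history and environment.
site_a = state_digest(replay(events), PINNED_ENV)
site_b = state_digest(replay(list(events)), dict(PINNED_ENV))

clean = reconcile(events, site_a)
drifted = reconcile(events, state_digest({"hole_count": 7, "tolerance_mm": 0.05}, PINNED_ENV))
```

Including the environment in the digest means a silent toolchain upgrade surfaces as drift instead of passing as an identical rebuild.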

Approvals and e-signatures that pass audits

An approval is more than a click; it is a legal assertion. Implement **Part 11-compliant e-signatures** that capture intent (approve, review, reject), reason (with controlled vocabularies), and a challenge-response (re-authentication) bound to the event digests. For safety-critical changes, require multi-signature workflows—e.g., design, manufacturing, and quality must jointly sign a Merkle root representing a batch of atomic changes. Time-stamp review gates to define the earliest moment at which approval is valid, and link changes to requirements, hazards, mitigations, and test evidence, so the approval embeds the safety case context.

Approval UX should reduce cognitive load:

- Show **human-centered diffs**: geometry overlays, PMI delta callouts, and assembly constraint comparisons.

- Display impacted requirements and risks with quick navigation to prior evidence events.

- Allow structured comments that are cryptographically bound to approval records.

- Enforce policy via the engine: tolerance edits trigger re-approval; exports require approver signature; revocations cascade to dependent artifacts.

When approvals produce cryptographic receipts tied to content digests and timestamps, they stop being administrative overhead and become **provable, reviewable control points** in the design lifecycle.
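
The multi-signature gate can be illustrated as follows. Loud assumption: HMAC with per-role demo secrets stands in for real asymmetric e-signatures purely so the sketch runs on the standard library, and a flat hash over the ordered event digests stands in for the Merkle root. Neither substitution is suitable for a Part 11 system, and the role names and demo keys are hypothetical.

```python
import hashlib
import hmac
import json

# ASSUMPTION: demo secrets standing in for per-user private keys held in an HSM.
DEMO_KEYS = {
    "design": b"design-demo-key",
    "manufacturing": b"manufacturing-demo-key",
    "quality": b"quality-demo-key",
}
REQUIRED_ROLES = ("design", "manufacturing", "quality")


def batch_digest(event_digests: list) -> str:
    """Flat digest over the ordered batch (stand-in for a Merkle root)."""
    return hashlib.sha256("\n".join(event_digests).encode("utf-8")).hexdigest()


def _payload(record: dict) -> bytes:
    unsigned = {k: v for k, v in record.items() if k != "mac"}
    return json.dumps(unsigned, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_approval(role: str, signer: str, intent: str, reason: str, root: str) -> dict:
    """Bind signer, intent, and reason to the batch digest."""
    record = {"role": role, "signer": signer, "intent": intent, "reason": reason, "root": root}
    record["mac"] = hmac.new(DEMO_KEYS[role], _payload(record), hashlib.sha256).hexdigest()
    return record


def verify_approval(record: dict, root: str) -> bool:
    expected = hmac.new(DEMO_KEYS[record["role"]], _payload(record), hashlib.sha256).hexdigest()
    return (
        hmac.compare_digest(expected, record["mac"])
        and record["root"] == root
        and record["intent"] == "approve"
    )


def batch_approved(records: list, root: str) -> bool:
    """The batch passes only when every required role has a valid approval."""
    valid_roles = {r["role"] for r in records if verify_approval(r, root)}
    return set(REQUIRED_ROLES) <= valid_roles


root = batch_digest(["d1", "d2", "d3"])  # hypothetical event digests
approvals = [
    sign_approval(role, f"{role}-lead", "approve", "ECO review complete", root)
    for role in REQUIRED_ROLES
]
fully_approved = batch_approved(approvals, root)
partially_approved = batch_approved(approvals[:2], root)

tampered = dict(approvals[0], reason="edited later")
tamper_detected = not verify_approval(tampered, root)
```

Because the reason and intent are inside the signed payload, editing a comment after the fact invalidates the approval instead of quietly changing the record.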

Performance, UX, and offline realities

Evidence should be invisible until you need it. Hashing, signing, and timestamping can run in the background with adaptive batching that preserves atomicity—group micro-edits into a composite event while retaining a sub-digest for each step. Stream digests to the ledger in near-real time, but avoid blocking the UI on external services. For offline work, capture events in a **secure enclave** with monotonic counters; on reconnect, publish the chain with proofs and obtain delayed TSA anchors that maintain time-order integrity without trusting local clocks.

Make the UI an ally:

- Inline indicators for “uncommitted local events,” “anchored with TSA,” and “publicly anchored.”

- Diff views designed for engineers: compare PMI text and callouts, overlay meshes, and animate assembly constraint changes.

- Context-aware prompts that surface policy requirements just-in-time rather than as generic warnings.

These choices keep performance high and mental overhead low. Engineers continue to design, while the system unobtrusively amasses **cryptographic evidence** that can be queried, verified, and presented in seconds when scrutiny arrives.
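
Adaptive batching with per-step sub-digests, combined with a monotonic counter for offline capture, might look like the sketch below. The in-memory counter is only a stand-in for a hardware-backed monotonic counter, and the class and field names are assumptions.

```python
import hashlib
import json


def digest(obj) -> str:
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


class OfflineBuffer:
    """Collects micro-edits locally and numbers them with a monotonic counter."""

    def __init__(self):
        self._counter = 0  # stand-in for a hardware-backed monotonic counter
        self.pending = []

    def record(self, edit: dict) -> dict:
        self._counter += 1
        step = {"seq": self._counter, "edit": edit}
        step["digest"] = digest({"seq": step["seq"], "edit": edit})
        self.pending.append(step)
        return step

    def flush(self) -> dict:
        """Fold pending micro-edits into one composite event, keeping each sub-digest."""
        if not self.pending:
            raise ValueError("nothing to publish")
        sub_digests = [step["digest"] for step in self.pending]
        composite = {
            "first_seq": self.pending[0]["seq"],
            "last_seq": self.pending[-1]["seq"],
            "sub_digests": sub_digests,
            "digest": digest(sub_digests),
        }
        self.pending = []
        return composite


def verify_composite(composite: dict, steps: list) -> bool:
    """Check that the steps are contiguous and match the committed sub-digests."""
    expected_seqs = list(range(composite["first_seq"], composite["last_seq"] + 1))
    if [s["seq"] for s in steps] != expected_seqs:
        return False
    recomputed = [digest({"seq": s["seq"], "edit": s["edit"]}) for s in steps]
    return recomputed == composite["sub_digests"] and digest(recomputed) == composite["digest"]


buffer = OfflineBuffer()
steps = [buffer.record({"param": "fillet_radius_mm", "value": v}) for v in (1.0, 1.5, 2.0)]
composite = buffer.flush()  # published on reconnect, then anchored by the TSA

all_steps_ok = verify_composite(composite, steps)
missing_step_ok = verify_composite(composite, steps[:2])
```

The ledger receives one composite event, yet each atomic step remains individually provable, and a dropped or reordered step fails verification without relying on the local clock.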

Governance and security nuances

Security and privacy add real-world complexity. Use **selective disclosure**: encrypt sensitive payloads, publish only digests for public anchoring, and provide zero-knowledge inclusion proofs where needed. Reconcile GDPR “right-to-erasure” with immutability by practicing **cryptographic erasure**—destroy encryption keys for personal data fields while preserving the log’s structural integrity and verifiability. Plan migrations: import legacy versions as a signed **genesis batch** with attestations about completeness; mark uncertainty explicitly rather than inventing provenance you cannot justify. Roll out in phases, progressively hardening the controls and expanding coverage from core CAD to CAM/FEA to supplier handoffs.

Governance pointers include:

- Define retention classes that align with product criticality and regulatory regimes.

- Split roles so no single admin can both alter policy and approve high-risk changes.

- Continuously monitor for revocations: compromised keys, withdrawn approvals, or invalidated tools.

- Maintain a **W3C PROV-compatible** graph so external auditors can consume provenance through standard vocabularies.

With these measures, you preserve evidence, respect privacy, and handle the messiness of real organizations without sacrificing the core **tamper-evident guarantees**.

Conclusion

From editable narratives to cryptographic evidence

Auditable, tamper-evident logs transform design history from editable narratives into **cryptographic evidence**. In doing so, they satisfy regulatory demands without shackling engineers to paperwork. The winning approach is not a monolithic blockchain fantasy; it is a practical stack that blends a **ledgered event log**, **canonical content addressing**, **strong identities with per-event signatures**, and **trusted timestamps**—all governed by policy-aware approvals and provenance graphs. When every design action is treated as an evidence event, you get unambiguous answers to the questions auditors actually ask: “What changed, who changed it, when, why, and what else does it affect?” More importantly, engineering teams gain confidence that their decisions will stand the test of time, personnel changes, toolchain upgrades, and scrutiny from regulators or customers. By reducing ambiguity, you accelerate reviews, compress certification timelines, and transform compliance from a cost center into a product capability. In short, the path forward is clear: **treat provenance as a first-class artifact**, and allow trust and velocity to reinforce each other rather than trade off.

Priorities for rollout, success metrics, and the strategic takeaway

Rollout succeeds when it’s incremental and grounded in current workflows. Start inside your existing PLM: capture **canonical semantic diffs**, sign every event, and enforce WORM retention. Next, tighten reproducibility: deterministic rebuilds and exports with stable IDs; bind them with **TSA anchoring** and periodic public commitments of Merkle roots. Expand coverage to CAM/FEA and supplier handoffs; wire evidence links to ECO/CAPA and requirements so approvals operate with full context. Use metrics to prove value: zero unexplained changes, lower median review times, reproducible builds producing identical digests that verify against recorded signatures, fewer nonconformances tied to history gaps, and shortened certification cycles because auditors can verify machine-readable proofs rather than parsing screenshots. These are not abstract aspirations; they are measurable outcomes that justify investment.

Practical next steps include:

- Start with canonical diffs, per-event signatures, and WORM retention in your current PLM.

- Enforce deterministic exports and rebuilds; add trusted timestamping and periodic public anchoring.

- Extend to CAM/FEA and supplier exchanges; integrate ECO/CAPA and requirement links.

- Adopt human-centered diff views to reduce cognitive load and accelerate approvals.

Track success by watching:

- Zero “unexplained” changes during audits.

- Median review time trending down as diff clarity improves.

- Reproducible builds that yield identical digests across sites and verify against the recorded signatures.

- Reduced nonconformances associated with history gaps; faster certification through **machine-verifiable proofs**.

The strategic takeaway is simple and powerful: **treat every design action as an evidence event**. With provenance elevated and enforced through cryptographic mechanisms, you don’t slow engineers—you free them to move fast with confidence, while giving regulators what they need: integrity, authorship, ordering, and time anchoring, backed by proofs rather than promises.

Also in Design News

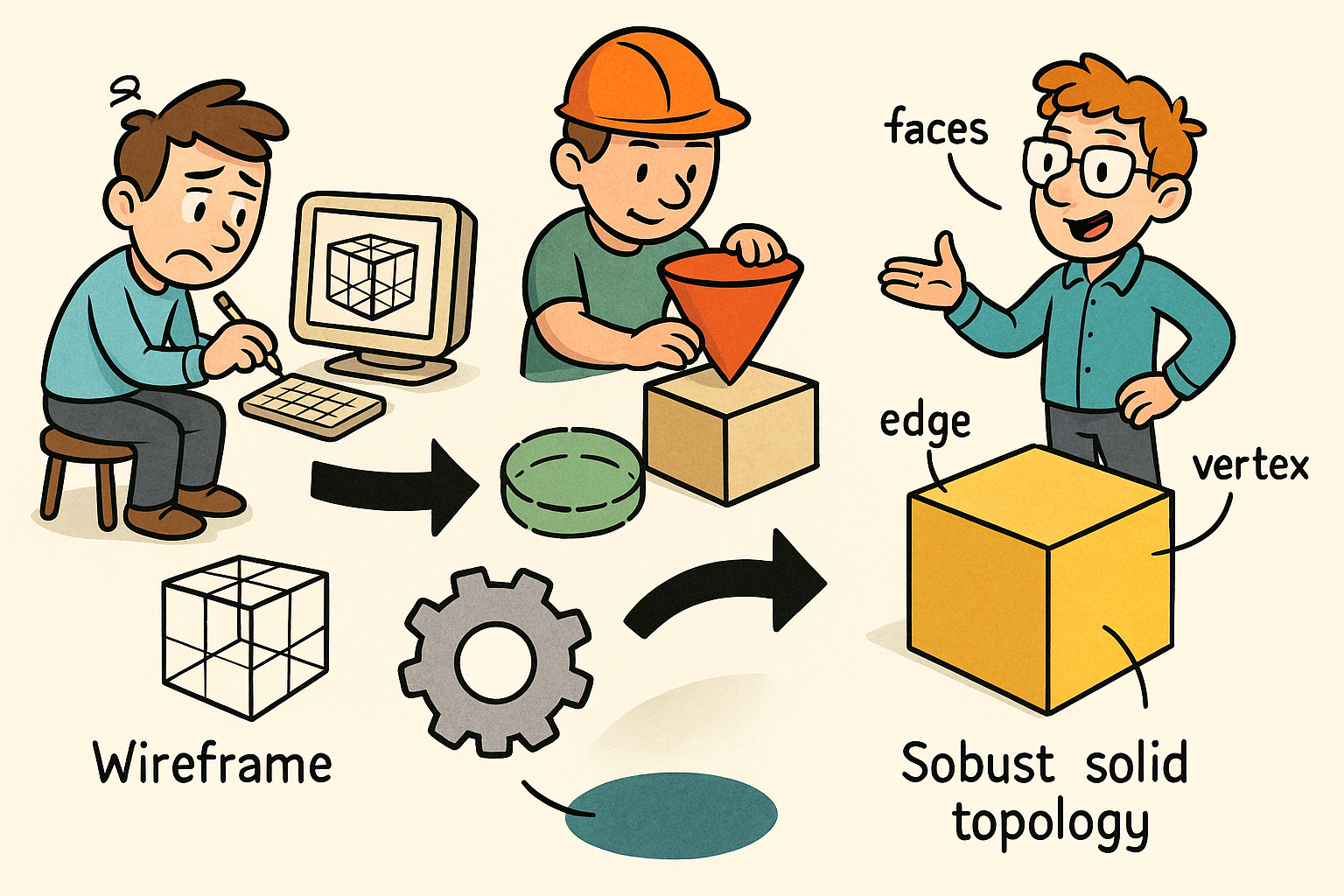

Design Software History: Why B-rep Was Invented: From Wireframe and CSG to Kernels, Topology, and Robust Solid Modeling

January 22, 2026 13 min read

Read More

Cinema 4D Tip: MatCap Shading for Rapid Form and Topology Feedback

January 22, 2026 2 min read

Read More

V-Ray Tip: Stylized Colored Rim Lights for Physically Plausible Silhouette Readability

January 22, 2026 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …