Simulation-Driven Automated Tolerancing for Capability-Aware GD&T

December 10, 2025

Why automated tolerancing matters now

The cost of guesswork

Design teams have long paid a quiet tax for imprecise tolerance decisions. When dimensions are tightened “just in case,” **over‑tolerancing** inflates machining cycle time, drives special fixturing and tool wear, and multiplies **inspection operations** that add no functional value. Conversely, if tolerances are loosened without understanding sensitivity to variation, **under‑tolerancing** erodes yield, triggers rework and concessions, and amplifies warranty exposure through field failures that are hard to trace back to specific geometric deviations. The true cost is nonlinear: a single overly strict positional tolerance can push a part from standard milling into grinding and from sample inspection into 100% CMM checks, while one overlooked flatness zone can cascade through an assembly stack‑up to cause sealing, NVH, or fatigue problems. In an era where lead times are compressed and mass customization is rising, guesswork becomes a structural liability that undermines both throughput and product confidence.

Breaking that cycle requires a shift from rules of thumb to **simulation-informed tolerance allocation**. Rather than treating GD&T as a documentation step performed late in the program, an automated pipeline can quantify how each feature’s permissible deviation moves key performance indicators and how those tolerances intersect with **process capability (Cp/Cpk)** and inspection reach. The impact is immediate: tolerance bands are justified against performance targets; inspection effort is proportional to risk; and cost becomes a controllable variable rather than an unpleasant surprise after PPAP. Typical hidden costs of guesswork include:

- Secondary finishing and additional setups to chase microns that do not move performance.

- Escalation to high-end CMM routines when simpler gauging would suffice with smarter datum strategies.

- Scrap and rework from unmodeled stack-ups interacting with thermal or preload effects.

- Latent failures in service when variability couples with operational loads outside assumed nominal conditions.

Gaps in current workflows

Most organizations still separate tolerance decisions from **functional performance simulations**. Manual stack‑ups, often in spreadsheets, live in a different universe than FEA, CFD, or EM models. The result is a brittle handoff: analysts work at nominal geometry while designers make GD&T calls by experience, and manufacturing tries to hit a moving target constrained by ambiguous datum schemes. There’s also a persistent disconnect between GD&T symbols and **capability data**: how a profile tolerance interacts with a supplier’s achievable Cp/Cpk under specific batch sizes, materials, or toolpaths is rarely explicit. Inspection constraints are similarly siloed; a callout that passes an engineering check can be **unmeasurable with the planned fixtures** or demand probing orientations that blow up cycle time. Lastly, tolerances are frequently frozen late, when geometry is already committed, leaving minimal room to balance requirements without costly ECOs.

These gaps accumulate into systemic inefficiency. **Manual stack-ups** often ignore kinematic mobility and realistic contact mechanics; **datum selections** fail to reflect assembly sequence; and **thermal or hygroscopic effects** are treated as afterthoughts. Without a quantitative link between feature deviations and performance, decision makers default to worst-case logic, which is safe but expensive, or to optimistic assumptions, which are cheap but risky. An automated, model-driven approach addresses these pain points by embedding GD&T intent within the simulation stack, surfacing **capability-aware ranges** during concept development, and verifying inspection feasibility alongside design choices. Closing the loop early lets teams trade microns for dollars and seconds for sigma, with traceability that stands up to quality audits and regulatory scrutiny.

Target outcomes

The immediate aim is to translate tolerance decisions into measurable business outcomes. That starts with explicit, early **yield vs. cost trade‑offs**. If a design can achieve 99.5% yield at a per-unit cost increase of $0.40 by tightening a profile tolerance, or instead maintain 98.7% yield with simpler machining and one additional inline check, decision makers can choose deliberately rather than intuitively. The engine should synthesize **simulation‑driven sensitivity** with supplier capability and inspection burden to propose GD&T that meets functional targets at minimal total cost. Equally important, it should provide **traceable and explainable rationales**, embedding the logic in **Model‑Based Definition (MBD)** so that PLM captures not just the numbers but the why behind them.
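To make that comparison concrete, here is a minimal sketch of the arithmetic. The two yields and the $0.40 increase come from the example above; the $10.00 base unit cost, the $0.15 inline check, and the cost-per-good-part metric itself are illustrative assumptions, not figures from any real program.

```python
def cost_per_good_part(unit_cost: float, yield_fraction: float) -> float:
    """Spread the cost of every part built over the parts that pass."""
    if not 0.0 < yield_fraction <= 1.0:
        raise ValueError("yield_fraction must be in (0, 1]")
    return unit_cost / yield_fraction


BASE_COST = 10.00     # assumed base fabrication cost per unit, in dollars
INLINE_CHECK = 0.15   # assumed cost of the one additional inline check

# Option 1: tighter profile tolerance, +$0.40 per unit, 99.5% yield.
tight = cost_per_good_part(BASE_COST + 0.40, 0.995)
# Option 2: simpler machining plus one inline check, 98.7% yield.
loose = cost_per_good_part(BASE_COST + INLINE_CHECK, 0.987)

print(f"tight: {tight:.2f}  loose: {loose:.2f}")
```

Under these assumed numbers the looser option comes out cheaper per good part (about 10.28 versus 10.45). The ranking can flip once downstream costs such as warranty exposure from escaped defects are priced in, which is why the engine needs the full cost model rather than this single ratio.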

Success looks like a workflow where every tolerance is a small contract: what it protects, what it costs, and how likely it is to be met by real processes. Concrete outcomes include:

- Automated tolerance suggestions grounded in sensitivity to variation across load cases, temperatures, and assembly conditions.

- Quantified yield projections with confidence bounds, tied to assumed Cp/Cpk and inspection strategy.

- In‑CAD visualization of **feature criticality**, enabling rapid what‑if exploration with real‑time cost and risk deltas.

- Persistent provenance in PLM: versioned GD&T, contributing analyses, applicable standards, and human approvals.

- Continuous calibration from shop‑floor metrology that updates capability assumptions and refines future recommendations.

Sensitivity analysis foundations for tolerancing

Sources of variation to model

Robust tolerancing begins by enumerating the ways parts deviate from ideal geometry and how those deviations couple with loads and constraints. **Dimensional variation** spans size, position, orientation, and form errors as formalized in ASME Y14.5 and ISO 1101. Practical models should include feature‑level deviations such as cylindricity lobing, flatness bow, and positional drift relative to datum frames. **Process and material variation** introduces additional spread: modulus and density scatter, fiber orientation in composites, grain direction in forgings, and **thermal expansion anisotropy** in additive builds. Surface roughness affects friction, sealing, and heat transfer, shaping functional margins in ways dimensional stack‑ups alone cannot predict.

Assemblies bring their own complexity. **Contact and clearance** distributions define load paths; **preload scatter** in fasteners, shrink fits, and adhesives shifts stiffness and resonance; and operational environments—temperature gradients, humidity, and vibration spectra—change behavior away from metrology‑room conditions. Modeling must consider assembly sequence and datum mobility, because the order in which constraints are applied impacts the realized geometry under load. Capturing this breadth demands a unified variable set that maps **GD&T‑controlled degrees of freedom to simulation parameters**, enabling analyses that respect both geometric conventions and physics‑based behavior across the product’s lifecycle.

Analysis techniques

Once sources are defined, analysis proceeds along two complementary tracks: local and global. **Local sensitivities** compute derivatives of performance with respect to feature deviations, using finite differences or adjoint methods to estimate d(response)/d(error). These are efficient for exploring design tweaks and informing gradient‑based optimizers. **Global methods** characterize interactions and nonlinear effects over broader ranges: Sobol indices quantify variance contributions and interactions, **Morris screening** identifies influential factors with few runs, and **polynomial chaos expansions** or sparse Gaussian processes provide fast surrogates for Monte Carlo‑level fidelity. Sampling strategies such as Latin hypercube balance coverage and efficiency when model evaluations are expensive.
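The local track can be sketched in a few lines. The example below estimates d(response)/d(error) by central finite differences and then ranks features by a linearized variance share. The `clearance` response, the feature names, and the sigma values are hypothetical stand-ins for a real simulation, and the variance share is a first-order approximation that assumes independent inputs; it is not a Sobol index.

```python
from typing import Callable, Dict

Params = Dict[str, float]


def central_difference_sensitivities(
    response: Callable[[Params], float], nominal: Params, step: float = 1e-4
) -> Params:
    """Estimate d(response)/d(parameter) at the nominal point."""
    grads: Params = {}
    for name in nominal:
        upper = dict(nominal)
        lower = dict(nominal)
        upper[name] += step
        lower[name] -= step
        grads[name] = (response(upper) - response(lower)) / (2.0 * step)
    return grads


def linearized_variance_shares(grads: Params, sigmas: Params) -> Params:
    """Share of response variance per input, assuming independence and linearity."""
    contributions = {k: (grads[k] * sigmas[k]) ** 2 for k in grads}
    total = sum(contributions.values())
    return {k: v / total for k, v in contributions.items()}


def clearance(x: Params) -> float:
    """Hypothetical KPI: radial pin-in-hole clearance reduced by position offset."""
    return 0.5 * (x["hole_dia"] - x["pin_dia"]) - x["pos_offset"]


nominal = {"hole_dia": 10.1, "pin_dia": 10.0, "pos_offset": 0.0}
sigmas = {"hole_dia": 0.01, "pin_dia": 0.005, "pos_offset": 0.02}  # assumed, mm

grads = central_difference_sensitivities(clearance, nominal)
shares = linearized_variance_shares(grads, sigmas)
for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name}: gradient {grads[name]:+.3f}, variance share {share:.1%}")
```

In this toy case the position offset dominates the variance, so it would be the first candidate for attention. For nonlinear or interacting responses the linearized shares can mislead, which is where the global methods named above earn their cost.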

Robustness needs metrics. Yield is the probability of meeting performance thresholds; **Cpk** expresses the distance from the process mean to the nearest specification limit in units of three standard deviations; **Taguchi loss** prices deviation from target even inside spec; and the **reliability index** (β) measures the margin against failure in standard deviations. Tolerance decisions can be framed as worst-case, statistical, or reliability-based allocations. The choice should reflect safety criticality and available capability data. A practical toolkit combines: fast local gradients for direction, global indices for hierarchy and interactions, and Monte Carlo (or polynomial chaos) for **distribution-aware decisions**, all wrapped in automation that is transparent about assumptions and numerical error.
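These metrics are simple to compute once a distribution is assumed. The sketch below uses the standard definitions of Cpk, the quadratic Taguchi loss, and a first-order reliability index; the normal-distribution assumption, the spec limits, and the loss coefficient `k` are illustrative choices, not values from a real process.

```python
from statistics import NormalDist


def cpk(mean: float, sigma: float, lsl: float, usl: float) -> float:
    """Distance from the mean to the nearest limit, in units of 3 sigma."""
    return min(usl - mean, mean - lsl) / (3.0 * sigma)


def normal_yield(mean: float, sigma: float, lsl: float, usl: float) -> float:
    """Fraction of parts inside the limits, assuming a normal process."""
    dist = NormalDist(mean, sigma)
    return dist.cdf(usl) - dist.cdf(lsl)


def taguchi_loss(value: float, target: float, k: float) -> float:
    """Quadratic loss: cost grows with squared deviation, even inside spec."""
    return k * (value - target) ** 2


def reliability_index(margin_mean: float, margin_sigma: float) -> float:
    """Beta: mean safety margin expressed in standard deviations."""
    return margin_mean / margin_sigma


# Assumed example: a 10.00 +/- 0.04 mm feature from a centered process.
print(f"Cpk   = {cpk(10.0, 0.01, 9.96, 10.04):.2f}")
print(f"Yield = {normal_yield(10.0, 0.01, 9.96, 10.04):.5%}")
print(f"Loss  = {taguchi_loss(10.02, 10.0, k=100.0):.3f}")
print(f"Beta  = {reliability_index(0.06, 0.02):.1f}")
```

Real feature distributions are often skewed or truncated, so the normal yield here should be read as a first estimate to be replaced by sampled or fitted distributions when metrology data is available.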

Modeling pipeline

To connect GD&T to physics, the pipeline must translate symbolic callouts into numerical perturbations. Start by deriving **feature Jacobians** that map each geometric tolerance to degrees of freedom in the model relative to **datum reference frames**. For example, a position tolerance on a hole becomes a parametric shift and rotation of the feature’s axis within the allowed zone, while a profile tolerance maps to a field of normal offsets governed by a correlation structure. These perturbations propagate into FEA/CFD/EM models via morphing, meshless embeddings, or parametric CAD drivers. Because high‑fidelity models are expensive, introduce surrogates: **Gaussian processes** with derivative observations, **polynomial chaos expansions** for smooth responses, **physics‑informed neural networks (PINNs)** where governing equations constrain the fit, and reduced‑order models built from modal or Krylov subspaces.
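As a small illustration of turning a callout into numerical perturbations, the sketch below samples in-plane offsets of a hole axis inside a cylindrical position tolerance zone. Axis tilt is ignored, and the uniform-over-the-zone distribution is an assumption made for simplicity; a real process would more likely cluster near nominal, and the function name is hypothetical.

```python
import math
import random
from typing import List, Tuple


def sample_position_offsets(
    zone_diameter: float, count: int, seed: int = 0
) -> List[Tuple[float, float]]:
    """Draw (dx, dy) axis offsets uniformly inside a circular tolerance zone."""
    rng = random.Random(seed)
    radius = zone_diameter / 2.0
    offsets = []
    for _ in range(count):
        # sqrt() on the radial draw keeps the density uniform over the disc area.
        r = radius * math.sqrt(rng.random())
        theta = 2.0 * math.pi * rng.random()
        offsets.append((r * math.cos(theta), r * math.sin(theta)))
    return offsets


# Assumed callout: position tolerance of diameter 0.1 mm relative to the datum frame.
samples = sample_position_offsets(zone_diameter=0.1, count=1000)
worst = max(math.hypot(dx, dy) for dx, dy in samples)
print(f"largest sampled radial offset: {worst:.4f} mm")
```

Each sampled offset would then drive a parametric CAD update or mesh morph before the physics model is evaluated, typically through a surrogate once enough runs exist to train one.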

Validation is not optional. Close the loop with targeted physical tests and **metrology‑fed model updating**. Use CMM or optical scans to measure feature deviations and calibrate correlation lengths and noise levels in the variation model. Quantify bias between prediction and observation, and carry that uncertainty forward into yield calculations. Document **V&V artifacts**—mesh convergence, surrogate error bounds, and parameter posteriors—to support credibility in safety‑critical contexts. The result is a pipeline that is both fast enough for design iteration and trustworthy enough for decisions that carry cost and risk implications.

Decision signals produced

The outcome of sensitivity analysis should be actionable, not just analytical. A **feature criticality ranking** exposes which tolerances dominate performance variance, enabling engineers to focus effort where it matters. **Contribution plots** show how each feature’s dispersion maps into KPI spread, while **sensitivity heatmaps** painted on CAD geometry reveal where profile zones can be relaxed without harm and where datum mobility drives instability. These visualizations invite collaboration: designers see geometry, analysts see physics, and manufacturing sees capability demands in context.

Trade space tools complete the picture. **Pareto fronts** make explicit the cost-yield-inspection triad: tightening a flatness callout may shift the process from milling to grinding (cost up), raise the Cpk the process must hold and the measurement effort needed to verify it (inspection up), and reduce rework (yield up). Presenting these fronts with confidence intervals helps keep decisions robust to model uncertainty. Operational outputs include:

- Priority lists of tolerance candidates for tightening/loosening with estimated cost and yield deltas.

- Recommendations for datum re‑selection to stabilize stack‑ups and reduce measurement uncertainty.

- Flags for inspection infeasibility and suggested alternative strategies (e.g., functional gaging vs. CMM probing).

- Expected scrap/rework rates under proposed allocations, conditioned on current Cp/Cpk and batch sizes.
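The Pareto front mentioned above is a non-dominated filter over candidate allocations. The sketch below shows one, with all three objectives minimized; the candidate names and their cost, scrap, and inspection figures are invented for illustration.

```python
from typing import List, Tuple

# (name, unit cost, expected scrap rate, inspection seconds): all minimized.
Candidate = Tuple[str, float, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every objective and better on one."""
    pairs = list(zip(a[1:], b[1:]))
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


candidates = [
    ("balanced", 10.0, 0.020, 30.0),
    ("strict", 12.0, 0.010, 45.0),
    ("over-specified", 12.5, 0.020, 50.0),  # worse than "balanced" everywhere
    ("economy", 9.0, 0.050, 20.0),
]

for name, cost, scrap, seconds in pareto_front(candidates):
    print(f"{name}: cost {cost:.2f}, scrap {scrap:.1%}, inspection {seconds:.0f} s")
```

This pairwise filter is quadratic in the number of candidates, which is fine for the dozens of allocations an engineer would review. It also treats the objective values as exact, so the confidence intervals discussed above would need to be layered on top.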

Architecture and workflow of an automated tolerance suggestion engine

Inputs and knowledge sources

An effective engine sits at the intersection of geometry, physics, capability, and standards. It ingests **CAD with PMI/MBD**, reading features, datum schemes, and current GD&T semantics, not just drawings. It couples these with **simulation models and response targets**: stiffness, NVH peaks, leak rates, thermal margins, RF coupling—whatever success means for the product. It references **manufacturing and inspection capability libraries** that encode machine/process tolerance bands, cost curves versus tolerance (including setup and cycle penalties), and gauge strategies with R&R performance. It also loads **standards and rule libraries** (ASME/ISO) together with supplier‑specific constraints and preferred datum templates, allowing the engine to respect corporate conventions and quality systems.

Data quality determines outcomes. PMI must be **semantically rich**, not just annotated text. Simulation models require clean parameter interfaces to accept geometric perturbations. Capability libraries must reflect reality, which means they are living resources updated from shop-floor data. Finally, user intent matters: response targets, safety classifications, and acceptable risk levels inform optimization objectives and constraints. By unifying these inputs, the engine gains the context to propose tolerances that are standards-compliant, physically justified, and economically sensible.

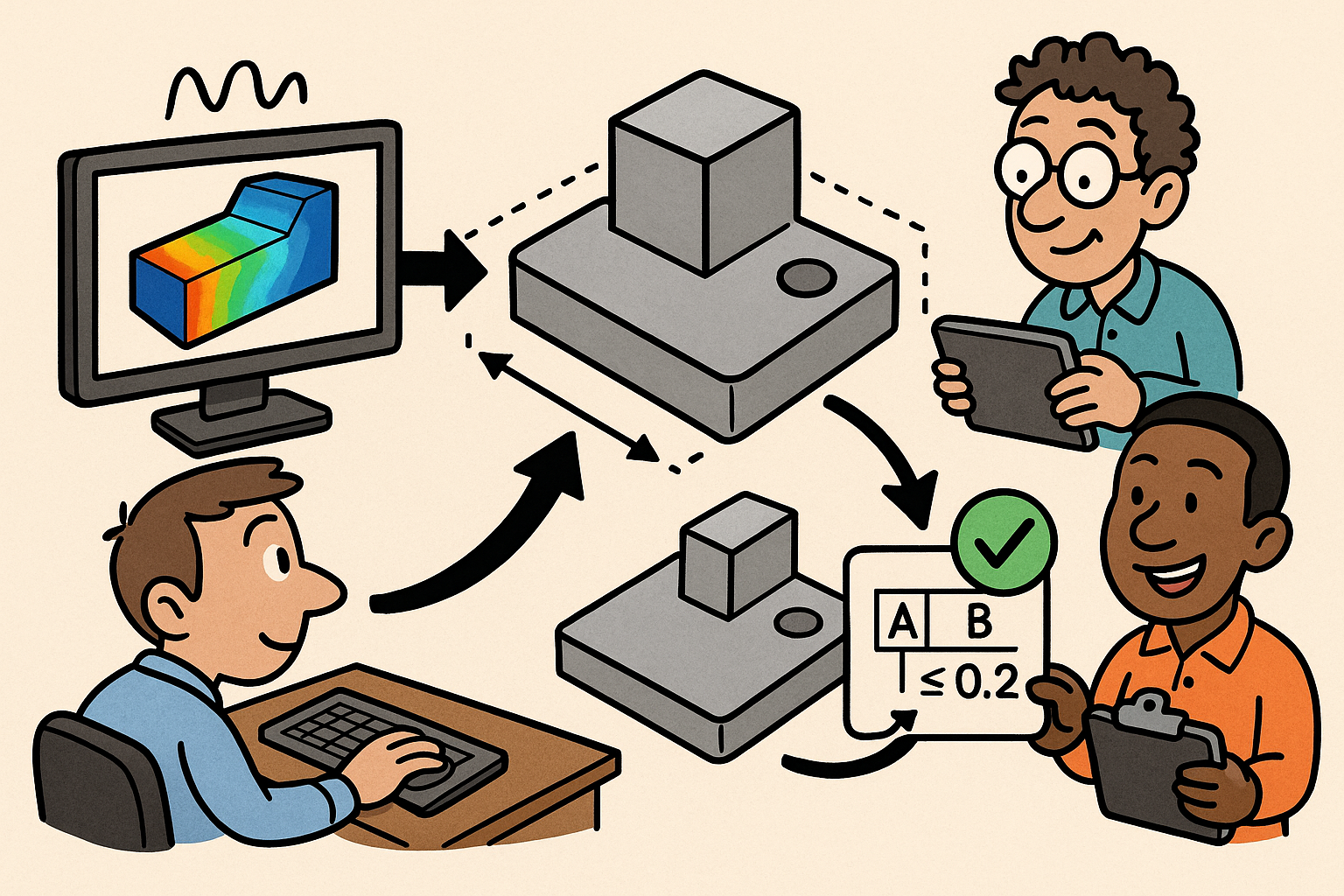

Core loop

The core loop executes a cycle of interpretation, analysis, and optimization. First, the engine **extracts features and semantic intent** from MBD: which surfaces are functional, how datums are established, and what relations exist among features. It then enumerates **candidate GD&T callouts** consistent with standards and design intent—e.g., choosing between position vs. profile for location control or exploring alternate datum precedence to reflect assembly sequence. Next, it runs the sensitivity pipeline to compute **feature‑level importance** and **stack‑up effects**, mapping tolerances to performance variance and locating nonlinear interactions that may confound naive allocations.

Armed with sensitivities and capability data, the engine solves a mixed optimization problem to allocate tolerances. The **objective** is to minimize total cost (fabrication + inspection + expected scrap/rework) subject to yield/performance constraints and measurement feasibility. Decision variables mix discrete choices (tolerance grades, callout types, datum strategies) with continuous bounds (numerical tolerance values). Solvers range from **mixed‑integer programming** and **constraint programming** to stochastic search guided by surrogate gradients. The loop supports **worst‑case** and **statistical allocations**, enforces datum mobility and kinematic constraints, and tracks inspection time models to keep suggestions measurable within takt. The result is a candidate set of allocations that are Pareto‑optimal under current assumptions.
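A full mixed-integer formulation is beyond a short example, but the continuous core of the allocation can be sketched with a deliberately simplified model. The code below assumes a reciprocal cost model (cost_i = a_i / t_i), a root-sum-square stack-up constraint weighted by sensitivities, and no discrete choices. Under those assumptions the Lagrange conditions give a closed form, t_i proportional to (a_i / s_i^2)^(1/3). The feature names and coefficients are hypothetical.

```python
import math
from typing import Dict


def allocate_rss(
    cost_coeffs: Dict[str, float],
    sensitivities: Dict[str, float],
    assembly_tol: float,
) -> Dict[str, float]:
    """Minimize sum(a_i / t_i) subject to sqrt(sum((s_i * t_i)^2)) == assembly_tol."""
    # Stationarity of the Lagrangian gives t_i proportional to (a_i / s_i^2)^(1/3).
    weights = {
        k: (cost_coeffs[k] / sensitivities[k] ** 2) ** (1.0 / 3.0)
        for k in cost_coeffs
    }
    # Scale the weights so the statistical stack-up exactly meets the budget.
    stack = math.sqrt(sum((sensitivities[k] * w) ** 2 for k, w in weights.items()))
    scale = assembly_tol / stack
    return {k: scale * w for k, w in weights.items()}


cost_coeffs = {"bore": 8.0, "face": 1.0, "slot": 1.0}     # assumed cost coefficients
sensitivities = {"bore": 1.0, "face": 1.0, "slot": 2.0}   # assumed d(KPI)/d(feature)

tolerances = allocate_rss(cost_coeffs, sensitivities, assembly_tol=0.10)
for name, tol in tolerances.items():
    print(f"{name}: +/- {tol:.4f}")
```

The result follows the intuition in the text: features that are expensive to tighten receive looser tolerances, and features with high sensitivity receive tighter ones. Callout types, datum strategies, and tolerance grades are discrete choices that this closed form cannot represent, which is why the engine described here needs mixed-integer or constraint solvers.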

Generate suggestions

Outputs must be concrete and auditable. The engine proposes **specific GD&T callouts**—profile, position, flatness, runout—with recommended values, zones (e.g., UZ), modifiers (MMC/LMC), and **datum schemes** aligned to assembly reality. Each suggestion links to an **inspection plan**: feasible CMM probe vectors and reorientations, functional gage options, expected cycle time, and **gauge R&R** risk estimates. Wherever a callout risks non‑measurability, the engine flags it and offers alternatives along with expected quality impacts. Explanations are integral: contribution plots, side‑by‑side sensitivity comparisons for alternate datums, and **confidence bounds** derived from surrogate error and capability uncertainty, all embedded within the MBD so reviewers can interrogate the logic.

To support decision making, suggestions arrive with context and options. For instance, the engine can package “strict,” “balanced,” and “economy” allocations, each meeting a defined **yield target** with varying inspection burdens. It includes “why not” reasons for rejected strategies (e.g., datum A at MMC leads to unstable assembly under thermal gradient), directs attention to features whose tolerances dominate cost, and surfaces **quick wins** such as replacing a tight parallelism callout with a functionally equivalent profile zone. Every suggestion is versioned, ready for ECO creation, and traceable to the analyses and assumptions that produced it.

Experience and integration

Adoption hinges on experience quality inside the tools engineers already use. In‑CAD overlays show **sensitivity heatmaps** on faces and edges, while sliders let users tighten or loosen tolerances and see real‑time **yield and cost deltas**. A side panel summarizes inspection feasibility and R&R risk, turning measurement into a design parameter. “What‑if” scenarios snapshot alternative datum strategies or process selections, with the engine recalculating capability‑aware impacts in seconds through surrogates. Approved suggestions trigger **automatic ECO generation**, with GD&T updates captured in PLM along with traceable explanations and reviewer comments. The engine also supports batch operations, enabling teams to refresh GD&T across families of parts when processes or suppliers change.

A **feedback loop** connects the digital and physical worlds. Shop‑floor metrology streams update capability distributions, correction factors for **thermal drift**, and probe contact models. The engine ingests this data to recalibrate Cp/Cpk priors and surrogate models, improving future allocations and highlighting suppliers whose performance drifts from commitments. Integration with QMS and SPC systems enables auto‑alerts when control charts indicate shifts that jeopardize tolerance assumptions. Over time, the organization builds a **reusable tolerance knowledge base** that evolves with products, processes, and people.
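One small piece of that feedback loop, re-estimating capability from fresh measurements and flagging drift against the assumed value, might look like the sketch below. The measurement values, the spec limits, the assumed Cpk of 1.33, and the function name are all illustrative; a production system would also account for sample size, subgrouping, and gauge error.

```python
from statistics import mean, stdev
from typing import Dict, List


def recalibrate_capability(
    measurements: List[float], lsl: float, usl: float, assumed_cpk: float
) -> Dict[str, float]:
    """Estimate Cpk from measured parts and compare it with the assumed value."""
    mu = mean(measurements)
    sigma = stdev(measurements)  # sample standard deviation
    observed = min(usl - mu, mu - lsl) / (3.0 * sigma)
    return {
        "mean": mu,
        "sigma": sigma,
        "observed_cpk": observed,
        "drifted": float(observed < assumed_cpk),  # 1.0 when capability fell short
    }


# Assumed CMM readings for a 10.00 +/- 0.05 mm feature.
readings = [10.01, 10.02, 9.99, 10.00, 10.03, 10.01, 9.98, 10.02]
report = recalibrate_capability(readings, lsl=9.95, usl=10.05, assumed_cpk=1.33)
print(report)
```

With only eight readings the estimate carries wide uncertainty, so a flag like this is better treated as a prompt for review than as an automatic change to the capability library.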

Edge considerations

Real products stretch beyond linear, isotropic, and stationary assumptions. **Nonlinear contact** and friction can flip sensitivity rankings when gaps close under load; **thermo‑mechanical coupling** introduces path dependence; and hybrid assemblies mix metallic, polymeric, and composite behaviors. **Additive manufacturing** adds anisotropy, scan‑strategy imprinting, and support‑removal distortions that demand spatially varying tolerances or **conformance zones** aligned to function. Assembly sequence matters: constraint application order and fixturing can produce different realized geometries from the same nominal GD&T. A credible engine must encode these realities, or at least flag regimes where its assumptions weaken and conservative buffers are warranted.

Credibility requires rigor. Maintain **uncertainty budgets** that track model form error, surrogate approximation, capability estimation variance, and measurement noise. Curate **V&V artifacts**—mesh independence, surrogate cross‑validation, and posterior predictive checks—so that recommendations stand up in audits and **safety‑critical certification**. Provide controls to switch from statistical to worst‑case allocations where fail‑safe behavior is required, and document the basis. By acknowledging and managing edge cases rather than hiding them, the engine earns trust and expands the domain where **automated tolerancing** can responsibly replace intuition with evidence.
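An uncertainty budget can start as simply as a table of contributors combined by root-sum-square. The sketch below assumes the four sources named above are independent and already expressed as standard uncertainties in the same units as the KPI; the magnitudes are placeholders.

```python
import math
from typing import Dict


def combined_uncertainty(budget: Dict[str, float]) -> float:
    """Root-sum-square of independent standard uncertainties."""
    return math.sqrt(sum(u * u for u in budget.values()))


def variance_shares(budget: Dict[str, float]) -> Dict[str, float]:
    """Fraction of the combined variance contributed by each source."""
    total = sum(u * u for u in budget.values())
    return {name: u * u / total for name, u in budget.items()}


# Assumed standard uncertainties on a KPI, in mm.
budget = {
    "model_form": 0.004,
    "surrogate": 0.003,
    "capability_estimate": 0.002,
    "measurement_noise": 0.001,
}

print(f"combined: {combined_uncertainty(budget):.4f} mm")
for name, share in variance_shares(budget).items():
    print(f"  {name}: {share:.0%} of variance")
```

The variance shares show where additional validation effort would pay off most. Correlated contributors would need covariance terms that this sketch leaves out.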

Conclusion

Closing the loop between function and manufacturability

Automated, simulation‑informed tolerancing fuses **functional performance** with manufacturability, replacing ad‑hoc decisions with quantified trade‑offs. It elevates GD&T from a documentation burden to a design variable, and it ties tolerance choices to **yield, cost, and inspection burden** in a way that is explainable and traceable. When feature sensitivities and process capabilities share a common language, engineering can confidently relax non‑critical zones to save time and money while fortifying the features that truly govern performance. The payoff is tangible: fewer late ECOs, shorter PPAP cycles, reduced scrap and rework, and higher field reliability with clear provenance for every decision. In short, **automated tolerancing** turns microns into margin—economic, operational, and reputational—by making variability a first‑class citizen of the design process.

The broader impact is cultural. Teams coordinate around data instead of opinions, suppliers engage with transparent targets instead of surprises, and inspectors measure what matters rather than what tradition dictates. The digital thread records not only what was specified, but why, letting organizations learn across product lines and over time. That is how quality becomes scalable and how costs shrink without sacrificing confidence.

Preconditions for success

Technology cannot compensate for weak foundations. **Trustworthy models** are the bedrock; without well‑posed simulations, calibrated surrogates, and credible uncertainties, the engine may be precise but wrong. **High‑quality PMI/feature semantics** are essential so the engine understands intent—GD&T must be machine‑readable, consistent, and connected to functional surfaces and assembly references. Current and accurate **process capability data** is non‑negotiable; stale Cp/Cpk or generic catalogs will misprice trade‑offs and misguide allocations. Equally, inspection capability and **gauge R&R** realities must be encoded so that suggestions remain measurable on the shop floor, not just in software.

Organizations should treat these enablers as strategic assets. Invest in cleaning and enriching MBD, standardize datum strategies, and align simulation parameterization with GD&T semantics. Establish data pipelines from metrology and SPC into capability libraries, with governance that tracks provenance and drift. And cultivate human oversight: quality engineers, designers, analysts, and suppliers must be in the loop to sanity‑check suggestions, document exceptions, and teach the system edge cases that only experience reveals.

Near‑term actions

Momentum comes from targeted wins. Start with a **pilot on one product line** where simulation fidelity is strong and metrology feedback is accessible. Use that sandbox to prove the link between sensitivity, capability, and GD&T, and to refine user experience inside CAD. In parallel, build **cost and capability libraries** with key suppliers, capturing tolerance‑versus‑cost curves, preferred processes, and measurement capacity under realistic takt. Standardize **datum strategies** and feature ontologies so recommendations are consistent and understandable across teams. Finally, embed **explainability and human‑in‑the‑loop approvals** in the workflow, ensuring that every automated suggestion is reviewable, annotatable, and versioned in PLM.

These actions reduce risk and accelerate learning:

- Define success metrics (yield increase, cost reduction, ECO reduction) and track them from day one.

- Instrument the shop floor to stream metrology to the engine; close the loop on capability assumptions.

- Create review rituals where designers, analysts, and manufacturing vet suggestions and capture tribal knowledge.

- Document exceptions and edge rules to grow the standards/rules library with each iteration.

Long‑term vision

The destination is a living system where tolerances adapt with evidence. **Continuous calibration** from in‑process and inline inspection keeps capability models current, allowing **adaptive tolerancing** that shifts across batches, machines, or environmental conditions without compromising quality. Enterprise PLM becomes a **tolerance knowledge graph**, linking features to performance sensitivities, capability histories, inspection strategies, and field returns, so designs inherit hard‑won wisdom instead of rediscovering it. Over time, the engine can coordinate allocations across assemblies, optimizing at the system level rather than per part, and enabling **global Pareto balancing** of yield, cost, and throughput across the factory network.

With that digital thread in place, organizations can move faster with more confidence. New variants launch with pre‑validated tolerance templates; supplier onboarding becomes data‑driven; and compliance evidence is generated as a by‑product of normal work. The long‑term payoff is a competitive advantage built on **simulation‑informed, capability‑aware GD&T**, where every micron is assigned a purpose and every dollar is tied to measurable risk reduction.
