Scene-Aware LOD: Perception-Driven Fidelity for Scalable Design Visualization

December 25, 2025 12 min read

Why scene-aware LOD matters in design visualization

Use cases

Design visualization has outgrown simplistic decimation tricks because teams now interrogate models that rival flight simulators in geometric and material complexity. In interactive design reviews, it is common to orbit a consolidated CAD/BIM scene with tens of assemblies, millions of triangles, and hundreds of physically based materials that stack layered BRDFs, clearcoats, decals, and displacement. AR/VR walkthroughs raise the bar further: to maintain presence and avoid discomfort, headsets must sustain 90+ FPS (a frame budget of roughly 11 ms) with tight motion-to-photon latency, while high-dynamic-range lighting and glossy surfaces expose any LOD misstep. Cloud and edge streaming add heterogeneity and volatility; the renderer must adapt to phones, HMDs, and browsers over fluctuating networks without drifting from the intended look. In all these cases, the question shifts from “how many triangles can we drop” to “how do we keep the right details, at the right time, for the right user task.” That is where **scene-aware LOD**, an approach that couples geometry, materials, and lighting importance, becomes the backbone of scalable fidelity.

- Interactive design reviews: massive CAD/BIM scenes (millions of triangles, hundreds of PBR materials) examined from unpredictable camera paths.

- AR/VR walkthroughs: strict low-latency loops (90+ FPS) and **tight motion-to-photon** budgets that punish temporal instability and aliasing.

- Cloud/edge streaming: adaptation to heterogeneous clients and links with controlled bandwidth, while preserving perceptual coherence for multi-user sessions.

Limits of naive LOD

Distance-only heuristics were serviceable when scenes were static and mostly diffuse, but they break down immediately inside interiors, on specular and refractive materials, and around thin functional parts where silhouette fidelity is critical. A distance threshold ignores object size, field of view, and display resolution: a small bolt head just beyond a distance cutoff can still span dozens of pixels when a reviewer zooms in with a narrow field of view, so the rule demotes exactly the detail under inspection. Likewise, a glass panel may reflect distant geometry that dominates the viewer’s perception even if that geometry occupies few pixels directly. Distance rules also ignore task context: a reviewer highlighting a safety zone or measuring a chamfer needs details preserved regardless of proximity. Worse, naive transitions cause temporal popping and specular instability as LODs flip with camera jitter, eroding trust in visual decisions. If the highlight on a clearcoated surface shimmers due to normal-map demotion or mip thrash, users question whether they are seeing genuine material behavior or artifacts of the pipeline. The cumulative effect is hesitation and rework: teams hedge decisions until an offline render confirms them, defeating the purpose of real-time review.

- Interiors and occluders scramble world-space proximity; **screen-space footprint** matters more than nominal distance.

- Reflective/refractive materials propagate distant importance; reflections exaggerate the harm of overly coarse LODs.

- Temporal instability (popping, mip shimmer) destroys confidence, especially during head motion in XR.
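To make the screen-space point concrete, here is a minimal sketch that estimates the on-screen radius of an object's bounding sphere. It uses the standard perspective approximation r_px = r / (d * tan(fov_y / 2)) * (H / 2); the function name and the example numbers are illustrative assumptions, not part of any particular engine.

```python
import math


def projected_radius_px(radius_m: float, distance_m: float,
                        fov_y_deg: float, viewport_h_px: int) -> float:
    """Approximate on-screen radius, in pixels, of a bounding sphere.

    Uses r_px = r / (d * tan(fov_y / 2)) * (H / 2), which is reasonable
    when the sphere is well in front of the camera.
    """
    if distance_m <= radius_m:
        # Camera inside or touching the bounds: treat as filling the viewport.
        return float(viewport_h_px)
    half_fov = math.radians(fov_y_deg) / 2.0
    return radius_m / (distance_m * math.tan(half_fov)) * (viewport_h_px / 2.0)


# Same 1 cm bolt head at the same 2 m distance; only the field of view differs.
wide = projected_radius_px(0.01, 2.0, fov_y_deg=60.0, viewport_h_px=1080)
zoomed = projected_radius_px(0.01, 2.0, fov_y_deg=10.0, viewport_h_px=1080)
print(f"wide: {wide:.1f} px, zoomed: {zoomed:.1f} px")
```

At identical distance the zoomed view gives the bolt roughly 30.9 px of radius versus about 4.7 px in the wide view, a difference that a distance-only rule cannot see.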

What scene-aware adds

Scene-aware LOD reframes fidelity as an optimization over attention, semantics, and light transport under budget constraints. The system computes a per-object or per-cluster importance score that blends screen-space area, semantic tags (critical fasteners, safety zones, label text), interaction likelihood (hover rays, selection heatmaps), motion, and lighting contribution (shadow casting, reflection visibility, caustic potential). It then allocates geometry, material, and lighting quality where it best supports the current task and human perception. Task-aware quality budgets distinguish “review” versus “measure” versus “marketing,” while **contrast sensitivity** and **eye-tracked foveation** bias resources toward where the user looks. Multi-channel constraints (GPU time in milliseconds, VRAM residency, network bitrate, and even power on mobile) are tracked concurrently so the renderer can preempt non-critical work, defer streaming of low-value textures, or replace ray-traced effects with prefiltered probes where the difference is imperceptible. The result is a renderer that behaves less like a brute-force rasterizer and more like a responsive assistant: aware of what matters, why it matters, and how to hit the frame target within budget without compromising the substance of the design.

- Importance scoring: blends screen coverage, semantics, interaction, motion, and **light transport impact** into a single priority.

- Task-aware budgets: modulate quality based on review goals and human visual sensitivity (fovea versus periphery).

- Multi-channel constraints: maintain targets for GPU time, **VRAM**, network bandwidth, and device power simultaneously.

Core techniques: from importance scoring to hybrid ray-traced pipelines

Importance modeling

Pragmatic importance modeling starts with a simple, differentiable formula and evolves with telemetry. A common baseline is a per-object or per-cluster score S = w1*area_on_screen + w2*semantic_priority + w3*motion + w4*lighting_contribution + w5*user_focus. The area_on_screen term updates cheaply from bounding spheres or meshlet visibility results. The semantic_priority term reflects CAD metadata (part class, PMI, safety tags) and user annotations. The motion term accounts for relative velocity between object and camera to guard against temporal artifacts when things cross the fovea. The lighting_contribution term estimates how changes in an object will affect what the viewer sees: shadow caster coverage on receivers, reflection/refraction hit probability, and reuse statistics from **ReSTIR reservoirs**. The user_focus term encodes gaze rays, dwell time, and UI cues. For eye-tracked devices, the renderer combines **Variable Rate Shading (VRS)** or Multi-Resolution Shading with the score, tightening LODs and shading rates inside the foveal radius and easing them smoothly into the periphery with a perceptually tuned falloff. Critically, importance should be temporally filtered: apply hysteresis to prevent thrash, use blue-noise jitter to de-correlate decisions across pixels and frames, and then aggregate through TAA that respects reactive masks for specular regions.

- Lighting contribution approximations: shadow-importance maps, reflection visibility cones, and DI/GI sampling stats from ReSTIR to guide where fidelity affects pixels.

- Gaze-aware control: finer LOD and higher shading rate in fovea; gentle, masked transitions outward to avoid awareness of change.

- Semantic signals: never reduce below thresholds for safety-critical or measurement-relevant features.
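The baseline score S can be sketched in a few lines. This is a minimal illustration under stated assumptions: the weights, the 5° foveal radius, the 20° smoothstep falloff used for user_focus, and the moving-average factor are all hypothetical placeholders that a real system would tune from telemetry.

```python
from dataclasses import dataclass


@dataclass
class ClusterSignals:
    """Per-cluster inputs; all but eccentricity assumed pre-normalized to [0, 1]."""
    area_on_screen: float
    semantic_priority: float
    motion: float
    lighting_contribution: float
    eccentricity_deg: float  # angle between the gaze ray and the cluster center


# Hypothetical weights (w1..w5); real values would be tuned from telemetry.
WEIGHTS = (0.35, 0.25, 0.10, 0.15, 0.15)


def user_focus(eccentricity_deg: float, foveal_radius_deg: float = 5.0,
               falloff_deg: float = 20.0) -> float:
    """1.0 inside the foveal radius, smoothstep falloff to 0.0 in the periphery."""
    t = (eccentricity_deg - foveal_radius_deg) / falloff_deg
    t = min(1.0, max(0.0, t))
    return 1.0 - t * t * (3.0 - 2.0 * t)


def importance(s: ClusterSignals, weights=WEIGHTS) -> float:
    """S = w1*area + w2*semantics + w3*motion + w4*lighting + w5*user_focus."""
    w1, w2, w3, w4, w5 = weights
    return (w1 * s.area_on_screen + w2 * s.semantic_priority + w3 * s.motion
            + w4 * s.lighting_contribution + w5 * user_focus(s.eccentricity_deg))


def temporally_filtered(previous: float, current: float, alpha: float = 0.2) -> float:
    """Exponential moving average that damps frame-to-frame score noise."""
    return previous + alpha * (current - previous)


# A safety-tagged fastener near the gaze point versus the same part in the periphery.
bolt = ClusterSignals(0.02, 1.0, 0.1, 0.3, eccentricity_deg=2.0)
peripheral_bolt = ClusterSignals(0.02, 1.0, 0.1, 0.3, eccentricity_deg=40.0)
```

Keeping the score linear keeps it cheap and easy to inspect; the semantic floor for safety-critical parts is better enforced as a hard constraint in the budget solver than folded into the weights.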

Geometry and material LOD

Geometry costs scale brutally in massive scenes, so a layered approach is essential. Start with quadric error metrics (QEM) for decimation that preserve curvature and creases, then assemble meshlets to feed mesh shaders efficiently. Build **HLOD** clusters that can cull whole subtrees and swap to impostors—sparse voxel grids, depth/normal atlases, or parallax-corrected billboards—when an object’s projected error stays below a silhouette deviation cap. For CAD integrity, use feature-aware decimation that recognizes sharp edges, fillets, and holes, locking minimum ring counts and edge chamfer fidelity. Materials deserve equal attention: establish BRDF complexity tiers so a clearcoat stack can degrade to a single lobe where imperceptible, demote normal maps to bump or reduce microflake models to pre-baked LUTs at distance. Tie texture mip selection to anisotropic prefiltering and camera motion to suppress shimmer. Lighting quality scales similarly: adapt probe density to importance, allocate cascaded shadow resolution based on screen coverage and receiver salience, and blend reflection probes where acceptable while reserving ray tracing for **highly salient** regions and materials that collapse under approximation.

- Geometry: QEM decimation, meshlets, clustered HLOD, and high-quality impostors with depth/normal atlases.

- CAD-preserving rules: protect functional edges/holes and enforce silhouette and dimension caps.

- Materials: BRDF tiers, **clearcoat-to-specular fallback**, normal-to-bump demotion, microflake LUTs, and anisotropic mip control.

- Lighting: adaptive probe grids, importance-driven shadow cascades, and selective RT for reflections/refractions.
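The reason QEM decimation preserves creases can be shown with the Garland-Heckbert quadric itself: each vertex accumulates one quadric p p^T per adjacent face plane, and the cost of moving it is the sum of squared distances to those planes. The sketch below is a pure-Python illustration of that error term only (no edge-collapse loop); the helper names are hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Quadric = List[List[float]]  # 4x4 symmetric matrix


def plane_from_triangle(a: Vec3, b: Vec3, c: Vec3) -> Tuple[float, float, float, float]:
    """Unit-normal plane (nx, ny, nz, d) satisfying nx*x + ny*y + nz*z + d = 0."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    length = sum(x * x for x in n) ** 0.5
    if length == 0.0:
        raise ValueError("degenerate triangle has no plane")
    n = [x / length for x in n]
    d = -sum(n[i] * a[i] for i in range(3))
    return (n[0], n[1], n[2], d)


def plane_quadric(p: Sequence[float]) -> Quadric:
    """Fundamental error quadric K_p = p p^T."""
    return [[p[i] * p[j] for j in range(4)] for i in range(4)]


def add_quadrics(qs: Iterable[Quadric]) -> Quadric:
    total = [[0.0] * 4 for _ in range(4)]
    for q in qs:
        for i in range(4):
            for j in range(4):
                total[i][j] += q[i][j]
    return total


def quadric_error(q: Quadric, v: Vec3) -> float:
    """v^T Q v for homogeneous v = (x, y, z, 1): sum of squared plane distances."""
    h = (v[0], v[1], v[2], 1.0)
    return sum(h[i] * q[i][j] * h[j] for i in range(4) for j in range(4))


# A vertex on a flat face versus a vertex at a corner where three faces meet.
flat = plane_quadric(plane_from_triangle((0, 0, 0), (1, 0, 0), (0, 1, 0)))
corner = add_quadrics(plane_quadric(p) for p in [(1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0)])
```

Sliding the flat vertex within its plane costs nothing, while the same move at the corner costs the squared distance to each crossed plane, so collapses naturally avoid corners and creases. Feature-aware variants add extra penalty planes along flagged edges.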

Ray tracing with LOD

Ray tracing amplifies the cost of detail because rays sample content outside primary visibility, so LOD must be ray-type aware. Build BVHs whose leaves reference multiple representations—full mesh, simplified proxy, or impostor—and select a level per ray based on type (primary, shadow, reflection, refraction) and cone angle. Primary rays can demand higher geometric fidelity than soft shadow rays; glossy reflections can select a mid-LOD consistent with roughness, while very rough lobes can sample probes. Integrate **ReSTIR DI/GI** with LOD-aware candidate selection and reuse so that spatiotemporal sampling respects current representations and avoids surfacing stale high-fidelity samples when budgets shrink. Temporal stability hinges on controlled transitions: apply hysteresis to LOD promotion/demotion, add blue-noise dithering to spread change across frames, and gate TAA with reactive masks around specular highlights to prevent ghosting when normal or roughness LODs change. The aim is not to eliminate change but to make it perceptually smooth, synchronized with gaze when available, and consistent with the material’s expected temporal frequency response.

- Multi-representation BVH leaves with per-ray, per-cone selection rules tied to roughness and visibility.

- LOD-aware ReSTIR: candidate filtering that aligns to current LODs and prevents reuse conflicts or bias.

- Temporal guards: hysteresis bands, blue-noise toggles, and **TAA with reactive masks** for specular regions.
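Per-ray LOD selection can be sketched as choosing the coarsest representation whose geometric error fits inside the ray cone's footprint at the hit point, with looser tolerances for ray types that are less sensitive to geometry. The tolerance table and the roughness cutoff for falling back to probes are hypothetical values chosen for illustration; the caller is assumed to widen `spread_angle_rad` for rougher lobes.

```python
import math
from enum import Enum
from typing import Optional, Sequence


class RayType(Enum):
    PRIMARY = "primary"
    SHADOW = "shadow"
    REFLECTION = "reflection"
    REFRACTION = "refraction"


# Hypothetical multipliers: how much geometric error, relative to the cone
# footprint, each ray type may tolerate before the result visibly changes.
ERROR_TOLERANCE = {
    RayType.PRIMARY: 0.5,
    RayType.REFRACTION: 0.75,
    RayType.REFLECTION: 1.0,
    RayType.SHADOW: 2.0,
}

PROBE_ROUGHNESS = 0.7  # hypothetical: rougher reflection lobes sample probes instead


def cone_footprint(hit_distance: float, spread_angle_rad: float) -> float:
    """Width of a ray cone with the given full spread angle at the hit distance."""
    return 2.0 * hit_distance * math.tan(spread_angle_rad / 2.0)


def select_lod(ray: RayType, hit_distance: float, spread_angle_rad: float,
               lod_errors: Sequence[float], roughness: float = 0.0) -> Optional[int]:
    """Return the coarsest LOD index whose error fits the footprint, or None for a probe.

    lod_errors[i] is the maximum geometric deviation of LOD i (0 = full mesh),
    assumed to increase with i.
    """
    if ray is RayType.REFLECTION and roughness >= PROBE_ROUGHNESS:
        return None
    allowed = ERROR_TOLERANCE[ray] * cone_footprint(hit_distance, spread_angle_rad)
    chosen = 0
    for i, err in enumerate(lod_errors):
        if err > allowed:
            break
        chosen = i
    return chosen


errors = [0.0, 0.001, 0.01, 0.1]  # metres of deviation per LOD (illustrative)
```

With these numbers, a primary ray hitting at 10 m with a 1 mrad cone keeps LOD 1, a shadow ray with the same cone can drop to LOD 2, and a very rough reflection skips geometry entirely.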

Budget control and QA

Achieving a frame target reliably requires a live budgeter, not wishful thinking. Use a real-time knapsack or gradient-based solver to pick LODs per object/cluster so the sum of predicted costs—raster time, ray cost, shading, and bandwidth—fits the frame. Feed the solver with learned cost models built from GPU counters (primitive throughput, cache misses), VRAM residency, and network telemetry. Preempt low-importance draws via secondary command lists to shave spikes, and make streaming incremental so that textures and meshlets arrive in priority order. Quality assurance must be objective: track FPS, frame-time variance, and residency, but also perceptual metrics like **LPIPS** or SSIM relative to a max-LOD baseline for the same camera path. Capture user task metrics—time to locate a part, measurement precision, error rate—to ensure optimizations do not sabotage outcomes. Bake guardrails into the solver: minimum LODs for safety classes, silhouette deviation caps, and dimension drift limits in measurement modes. When the solver must drop quality, it should do so where LPIPS deltas are smallest and user tasks least affected, then revert gracefully as headroom returns.

- Live budget solver: selects LODs to meet frame time, VRAM, and bandwidth targets, with preemption of non-critical work.

- Metrics: FPS, variance, **VRAM residency**, LPIPS/SSIM deltas, and user task success/time to validate perceptual equivalence.

- Guardrails: per-part minimums, silhouette and dimension caps, and mode-specific constraints to maintain trust.
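One simple way to realize the live budgeter is a greedy heuristic for the multiple-choice knapsack: start every cluster at the coarsest LOD its guardrail allows, then repeatedly apply the one-step refinement with the best importance-weighted quality gain per extra millisecond until the budget is spent. This is a sketch under assumptions (per-LOD costs and quality values are given by some cost model, and only frame time is budgeted); it is not the only or necessarily the best solver.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Cluster:
    name: str
    importance: float
    lods: List[Tuple[float, float]]  # (predicted_cost_ms, quality in [0, 1]); 0 = finest
    coarsest_allowed: int            # guardrail: never select an index above this


def solve_budget(clusters: List[Cluster], budget_ms: float) -> Tuple[Dict[str, int], float]:
    """Greedy LOD assignment; guardrail minimums win even if they exceed the budget."""
    by_name = {c.name: c for c in clusters}
    choice = {c.name: c.coarsest_allowed for c in clusters}
    total = sum(c.lods[c.coarsest_allowed][0] for c in clusters)

    def next_step(c: Cluster) -> Optional[Tuple[float, str, float]]:
        i = choice[c.name]
        if i == 0:
            return None  # already at full detail
        extra = c.lods[i - 1][0] - c.lods[i][0]
        gain = c.importance * (c.lods[i - 1][1] - c.lods[i][1])
        ratio = gain / extra if extra > 0 else float("inf")
        return (-ratio, c.name, extra)  # negated so heapq pops the best ratio first

    heap = [s for s in (next_step(c) for c in clusters) if s is not None]
    heapq.heapify(heap)
    while heap:
        _, name, extra = heapq.heappop(heap)
        if total + extra > budget_ms:
            continue  # this refinement does not fit; cheaper ones elsewhere might
        choice[name] -= 1
        total += extra
        step = next_step(by_name[name])
        if step is not None:
            heapq.heappush(heap, step)
    return choice, total


scene = [
    Cluster("A", 1.0, [(4.0, 1.0), (2.0, 0.7), (1.0, 0.4)], coarsest_allowed=2),
    Cluster("B", 0.2, [(3.0, 1.0), (1.5, 0.8), (0.5, 0.3)], coarsest_allowed=1),
]
```

Because the guardrail sets the starting point rather than a soft penalty, a too-small budget degrades only what is allowed to degrade and reports the overrun instead of silently dropping protected parts.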

Pipeline architecture for scalable photorealistic rendering

Asset prep and interoperability

Scalable fidelity starts at ingestion. Convert CAD to renderable meshes with tessellation tuned to curvature and downstream LOD strategies; carry parametric feature data forward so that feature-aware decimation can recognize fillets, holes, and sharp edges. Automate UV generation or leverage chartless methods where possible, then standardize material translation via MDL, MaterialX, or **OpenPBR** so shading intent survives renderer swaps. Bake derived textures (AO, bent normals, thickness, curvature) to stabilize GI and enable BRDF tier demotion without breaking the look. LOD generation should be a first-class batch step: geometry HLODs built from clustering and QEM, impostor atlases for mid/far distances, and material simplification tiers enumerated explicitly. Preserve semantics (PMI, part class, safety tags, fastener roles) through the LOD stack so runtime importance can reference them. For scene formats, **USD** is a strong hub: variants encode LOD stacks and task modes, and relationships carry semantic bindings. Where glTF is the delivery target, use EXT_meshopt_compression and MSFT_lod to reduce size while keeping deterministic LOD groups. Hydra render delegates abstract the renderer choice, so teams can swap hybrid raster/RT backends without reauthoring content, and the same USD scene can drive desktop, XR, and cloud variants consistently.

- CAD-to-render: curvature-based tessellation, UVs, material standardization (MDL/MaterialX/OpenPBR), and auxiliary baking (AO, bent normals).

- Automated LODs: clustered HLODs, impostor baking, and material tiers with preserved **semantic metadata**.

- Interchange: USD variants for LOD and modes, glTF with meshopt and LOD extensions, and Hydra for renderer abstraction.
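Curvature-tuned tessellation usually starts from a chord-height (sagitta) tolerance. For a circular edge of radius r, a chord spanning angle θ deviates from the arc by s = r(1 − cos(θ/2)), so the segment count follows directly from the tolerance. The sketch below applies that geometry and clamps to a minimum ring count so small holes keep their shape; the default of 8 segments is an assumed value.

```python
import math


def circle_segments(radius: float, chord_tolerance: float, min_segments: int = 8) -> int:
    """Segments for a circular edge so no chord deviates from the arc by more
    than chord_tolerance, clamped to a minimum ring count."""
    if chord_tolerance >= radius:
        return min_segments
    # Invert s = r * (1 - cos(theta / 2)) for the largest allowed segment angle.
    theta = 2.0 * math.acos(1.0 - chord_tolerance / radius)
    return max(min_segments, math.ceil(2.0 * math.pi / theta))


def sagitta(radius: float, segments: int) -> float:
    """Maximum chord-to-arc deviation when a circle is split into equal segments."""
    return radius * (1.0 - math.cos(math.pi / segments))
```

With a 0.01 tolerance, a radius-10 flange needs 71 segments while a radius-0.5 hole needs 16, and a tiny hole falls back to the minimum ring count instead of collapsing.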

Runtime systems

At runtime, a GPU-driven architecture is essential. Use bindless resources and mesh shaders to let the GPU cull, cluster, and issue indirect draws from priority queues that reflect importance. A residency manager treats textures and meshlets as pages with priorities that fluctuate with user focus; it predicts future needs from camera velocity and gaze vectors. Hybrid raster plus RT is often optimal: rasterize primary visibility for throughput, then deploy ray tracing for shadows/reflections only where importance warrants it. Expose LOD-specific ray flags and per-ray shading-rate control so rough reflections sample proxies and soft shadows consult lower-res BVHs when acceptable. For streaming, break the scene into patches with progressive LOD; adapt bitrate via congestion signals, and add forward error correction where retransmits would violate latency. Ensure deterministic LOD selection across clients to keep multi-user sessions in perceptual sync, even if network quality differs. XR specifics round out the stack: use late latching for head pose, eye-tracked foveated transport to save pixels and bits, and thin-object safeguards so handrails or cables never vanish due to aggressive decimation or culling in the periphery.

- GPU-driven core: bindless, mesh shaders, indirect dispatch, and priority-based residency of meshlets and textures.

- Hybrid raster/RT: primary via raster, ray-traced shadows/reflections gated by importance with **per-ray LOD**.

- Streaming: patch-based progressive LOD, network-aware adaptation (bitrate/FEC), deterministic LOD for multi-user sync.

- XR: late latching, foveated transport, and rules that protect thin, safety-relevant geometry from disappearance.
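A residency manager that predicts needs from camera velocity can be sketched as a priority ranking against an extrapolated camera position, filled greedily into a memory budget. The priority formula (importance divided by one plus squared distance to the predicted camera), the 0.25 s lookahead, and the pinning flag are hypothetical choices; gaze direction could be folded into the priority the same way.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Page:
    page_id: str
    size_bytes: int
    center: Vec3
    importance: float     # from the scene-aware importance score
    pinned: bool = False  # e.g. safety-critical meshlets that must stay resident


def predict_camera(pos: Vec3, vel: Vec3, lookahead_s: float) -> Vec3:
    """Linear extrapolation of the camera position."""
    return tuple(p + v * lookahead_s for p, v in zip(pos, vel))


def priority(page: Page, cam: Vec3) -> float:
    d2 = sum((a - b) ** 2 for a, b in zip(page.center, cam))
    return page.importance / (1.0 + d2)


def plan_residency(pages: List[Page], cam_pos: Vec3, cam_vel: Vec3,
                   budget_bytes: int, lookahead_s: float = 0.25) -> Set[str]:
    """Choose resident pages: pinned pages first, then by predicted priority."""
    predicted = predict_camera(cam_pos, cam_vel, lookahead_s)
    resident: Set[str] = set()
    used = 0
    for p in pages:
        if p.pinned:  # pins win even if they alone exceed the budget
            resident.add(p.page_id)
            used += p.size_bytes
    ranked = sorted((p for p in pages if not p.pinned),
                    key=lambda p: priority(p, predicted), reverse=True)
    for p in ranked:
        if used + p.size_bytes <= budget_bytes:
            resident.add(p.page_id)
            used += p.size_bytes
    return resident


pages = [
    Page("ahead", 100, (2.0, 0.0, 0.0), 1.0),
    Page("behind", 100, (-2.0, 0.0, 0.0), 1.0),
    Page("handrail", 100, (50.0, 0.0, 0.0), 0.1, pinned=True),
]
```

With the camera at the origin moving at 8 m/s along +x, the page the camera is heading toward wins the remaining budget over an equally important page behind it, while the pinned handrail stays resident regardless.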

Collaboration, validation, and modes

Scene-aware rendering must align to the purpose of a session and remain consistent across collaborators. Provide explicit modes: a “review” mode that applies aggressive LOD with perceptual guardrails; a “measure/inspect” mode that locks critical edges and disables impostors for targeted parts; and a “marketing” mode that pushes fidelity, optionally invoking offline denoisers on RT passes. To keep distributed teams aligned, store shared state (camera bookmarks, annotations, selected parts, gaze-derived points of interest) in a CRDT-backed layer so edits converge without conflicts. Seed LOD decisions with the same random sources across clients to avoid perceptual mismatch: two users looking at the same car hood should not see different flake noise or mip seams. Validation automates the unglamorous but vital checks: per-part-class decimation caps, limits on dimension drift versus CAD, silhouette deviation thresholds, and warnings when material demotion alters intended appearance (e.g., losing a safety color in a decal downsampling). When the system must compromise, it should explain its decisions, surfacing which constraints drove trade-offs so reviewers understand that fidelity was preserved where it matters most.

- Task modes: review, measure/inspect, and **marketing** with different quality budgets and effect mixes.

- Consistency: CRDT-backed scene state, shared seeds to synchronize stochastic decisions and LOD micro-structure.

- Automated checks: part-class rules, dimension drift limits, and silhouette deviation caps enforced preflight and at runtime.
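Shared seeds only help if every client derives the same random value for the same decision. A minimal sketch, assuming a session-wide integer seed and string object IDs: hash the tuple with a fixed cryptographic hash (Python's built-in `hash()` is salted per process for strings, so it would diverge across clients) and use the result to drive a stochastic LOD cross-fade. The function names are hypothetical.

```python
import hashlib
import struct


def shared_random(session_seed: int, object_id: str, frame: int, channel: str = "lod") -> float:
    """Deterministic uniform value in [0, 1) that every client computes identically."""
    key = f"{session_seed}:{channel}:{object_id}:{frame}".encode("utf-8")
    digest = hashlib.blake2b(key, digest_size=8).digest()
    (value,) = struct.unpack(">Q", digest)
    # Keep the top 53 bits so the float division is exact and never reaches 1.0.
    return (value >> 11) / 2.0 ** 53


def dithered_lod(base_lod: int, blend: float, u: float) -> int:
    """Stochastic transition: choose the next-coarser LOD with probability `blend`."""
    return base_lod + 1 if u < blend else base_lod


u = shared_random(42, "car_hood", frame=7)
```

Because the value depends only on the session seed, object, frame, and channel, two users at different frame rates or network quality still agree on which LOD the hood shows at a given frame.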

Conclusion: achieving fidelity at scale

Key takeaways

Scaling photorealism is no longer about raw triangle throughput; it is about aligning computation with perception and intent. **Scene-aware LOD** ties fidelity to what actually influences decisions: screen-space footprint, semantics, interaction likelihood, motion, and light transport. Hybrid raster plus RT pipelines let you spend rays where they change the image, while probes, impostors, and material tiering carry the rest with grace. Temporal stability is a first-class requirement; without hysteresis, blue-noise dithering, and TAA that respects specular reactivity, even correct LOD choices can feel wrong. The pipeline is as much organizational as technical: standardize assets with USD/MaterialX/OpenPBR, codify task modes with explicit guardrails, and measure outcomes with both performance and perceptual metrics. When these pieces align, teams stop arguing with the renderer and start arguing productively about the design, confident that what they are seeing—whether on a desktop, in a headset, or over a streamed session—is faithful to the intent where it matters most.

- Fidelity follows relevance: importance scoring + perception beats distance-only rules, especially indoors and on specular content.

- Hybrid raster/RT with LOD-aware sampling delivers photorealism within strict budgets when paired with robust temporal strategies.

- Standards and automation make consistency possible across devices, users, and modes.

Measurable outcomes

Organizations that operationalize scene-aware LOD report strong, repeatable gains because they trim what does not matter rather than indiscriminately cutting detail. Across representative models, a well-tuned pipeline reduces triangle and texture footprints by 2–4x with negligible task impact, thanks to feature-aware decimation, clustered HLODs, and material tiering that respect appearance. The headroom reclaimed—often 20–40% of frame time—pays for accurate ray-traced shadows on salient receivers, higher-resolution reflections in the fovea, or simply higher framerates that stabilize XR comfort. In cloud review sessions, bandwidth falls meaningfully when streaming prioritizes needed meshlets and texture mips while de-emphasizing out-of-view or low-importance content; the session feels sharper, not blurrier, because bits are spent where eyes dwell. Crucially, these wins are validated with objective metrics: LPIPS/SSIM deltas stay under agreed thresholds relative to max-LOD baselines, and user task metrics—time-to-find, measurement error—hold steady or improve as temporal stability reduces second-guessing. The benefit compounds at scale: larger scenes, more devices, and more simultaneous collaborators all fit within budgets without diluting trust.

- 2–4x reductions in triangles and texture memory with **negligible task impact**.

- 20–40% frame time headroom reclaimed for RT effects, higher framerate, or power savings.

- Lower network bandwidth for cloud/edge sessions via progressive, importance-driven streaming.
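A perceptual gate against a max-LOD baseline can be illustrated with SSIM. Standard SSIM averages its statistic over local (usually Gaussian-weighted) windows; the sketch below uses a single global window on flattened grayscale images purely to show the formula and the gating logic, and the 0.98 threshold is an assumed example value.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 1.0) -> float:
    """Single-window SSIM of two same-sized grayscale images (flattened)."""
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    c1 = (0.01 * data_range) ** 2  # standard K1 = 0.01
    c2 = (0.03 * data_range) ** 2  # standard K2 = 0.03
    mx = sum(x) / n
    my = sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))


def passes_gate(baseline: Sequence[float], candidate: Sequence[float],
                min_ssim: float = 0.98) -> bool:
    """Accept an LOD configuration only if it stays close to the max-LOD render."""
    return global_ssim(baseline, candidate) >= min_ssim


baseline = [0.1, 0.5, 0.9, 0.3]
washed_out = [0.5, 0.5, 0.5, 0.5]  # all structure lost
```

In practice the same camera path is rendered at max LOD and at the tuned configuration, and the gate runs per frame or per tile so a regression points at a specific view.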

Common pitfalls

Scene-aware does not mean carefree. Over-decimation of thin or functional features is the most common sin; losing a safety rail or softening an edge intended for measurement undermines the entire exercise. Material LODs can alter design intent if color-critical decals, microflake sparkle, or clearcoat behaviors are demoted without perceptual checks—and specular instability from aggressive normal-map mip selection induces shimmer that reviewers misread as surface defects. Temporal instability is the silent killer: if LOD scores oscillate frame-to-frame, even imperceptible geometric changes become perceptible as popping or crawling speculars, especially during head motion in XR. Finally, pipelines sometimes treat network and power as afterthoughts; streaming overload or thermal throttling can quietly erase the benefits of careful GPU budgeting. The antidote is to encode non-negotiables in rules (minimum LODs, silhouette caps, colorimetry checks), apply hysteresis and blue-noise in transitions, and measure perceptual impact continuously so regressions are caught early and automatically, not in the demo room.

- Protect thin and functional features; enforce measurement-safe decimation and silhouette limits.

- Guard material intent: validate color, gloss, and microstructure when demoting BRDF tiers or textures.

- Control temporal behavior: add hysteresis, blue-noise, and reactive TAA to avoid **LOD thrashing**.

- Account for bandwidth and power budgets alongside GPU time to prevent backdoor degradations.
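Hysteresis is the simplest defense against LOD thrashing. In the sketch below (the thresholds and band width are assumptions), a score maps to an LOD through descending thresholds, but the LOD only changes when the score clears a boundary by a margin, so a score jittering around a threshold leaves the current LOD alone.

```python
from typing import Sequence


def select_lod_with_hysteresis(score: float, current_lod: int,
                               thresholds: Sequence[float], band: float = 0.05) -> int:
    """Map an importance score to an LOD index (0 = finest) with a dead band.

    thresholds[i] is the minimum score for LOD i, in descending order; scores
    below all thresholds map to the coarsest LOD, len(thresholds).
    """
    def raw_lod(s: float) -> int:
        for i, t in enumerate(thresholds):
            if s >= t:
                return i
        return len(thresholds)

    promoted = raw_lod(score - band)  # finer LOD only if it survives a lower score
    if promoted < current_lod:
        return promoted
    demoted = raw_lod(score + band)   # coarser LOD only if it survives a higher score
    if demoted > current_lod:
        return demoted
    return current_lod


THRESHOLDS = [0.8, 0.5, 0.2]


def simulate(scores, start_lod=1):
    lod, history = start_lod, []
    for s in scores:
        lod = select_lod_with_hysteresis(s, lod, THRESHOLDS)
        history.append(lod)
    return history
```

A score oscillating between 0.47 and 0.53 around the 0.5 boundary keeps LOD 1 every frame, whereas a plain threshold would flip between LOD 1 and LOD 2 on each oscillation. Blue-noise dithering then smooths the transitions that do happen.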

Roadmap

The next wave of scalability will come from learned and standardized intelligence. Importance predictors trained on interaction telemetry can anticipate where users will look or click, guiding prefetch and LOD promotion before the need is visible. CAD semantics fused with **3D Gaussian Splatting** or NeRF variants can paint distant context at extreme efficiency while preserving near-field CAD fidelity for measurement. Industry schemas should evolve: standard USD and glTF LOD schemas that include semantics-aware stacks, material tier mappings, and intent constraints would make pipelines interoperable out of the box. On the rendering side, expect deeper per-ray controls: shading-rate maps bound to gaze and roughness, reservoir resampling that respects material tiers, and BVH nodes that encode energy bounds to pick proxies safely. Most importantly, invest in mode-specific guardrails and objective QA: codify what must never change, what may change only beyond a threshold X, and what may freely adapt, and pair those rules with LPIPS/SSIM gates and user task A/B harnesses. With those in place, teams can scale scenes and audiences aggressively, confident that **faithful perception** remains the invariant while the machinery beneath adapts fluidly to the moment.

- Learned importance from gaze and interaction telemetry to drive proactive LOD and streaming.

- Fusion of CAD semantics with Gaussian Splatting/NeRF for efficient distant context.

- Standardized USD/glTF LOD schemas with semantics and material tier intent.

- Mode-specific guardrails and continuous perceptual QA to maintain trust at scale.
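The “never change / change only beyond X / freely adapt” split can be codified as a small rule table keyed by mode and part class. This sketch is hypothetical throughout: the rule entries, the use of projected error in pixels as the X threshold, and the conservative default for unlisted combinations are illustrative choices.

```python
from enum import Enum
from typing import Dict, Tuple


class Policy(Enum):
    NEVER_CHANGE = "never change"
    CHANGE_BEYOND = "change only beyond X"
    FREELY_ADAPT = "freely adapt"


# Hypothetical rule table keyed by (mode, part_class).
RULES: Dict[Tuple[str, str], Policy] = {
    ("measure", "fastener"): Policy.NEVER_CHANGE,
    ("review", "fastener"): Policy.CHANGE_BEYOND,
    ("review", "context"): Policy.FREELY_ADAPT,
}


def clamp_lod(mode: str, part_class: str, requested_lod: int,
              projected_error_px: float, max_error_px: float = 1.0) -> int:
    """Return the LOD actually allowed (0 = authored full detail).

    Unlisted (mode, part_class) pairs default to the conservative CHANGE_BEYOND.
    """
    policy = RULES.get((mode, part_class), Policy.CHANGE_BEYOND)
    if policy is Policy.NEVER_CHANGE:
        return 0
    if policy is Policy.CHANGE_BEYOND and projected_error_px > max_error_px:
        return 0
    return requested_lod
```

Keeping the rules as data rather than code paths makes them reviewable alongside the scene, and the same table can drive both preflight validation and the runtime budget solver.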

Also in Design News

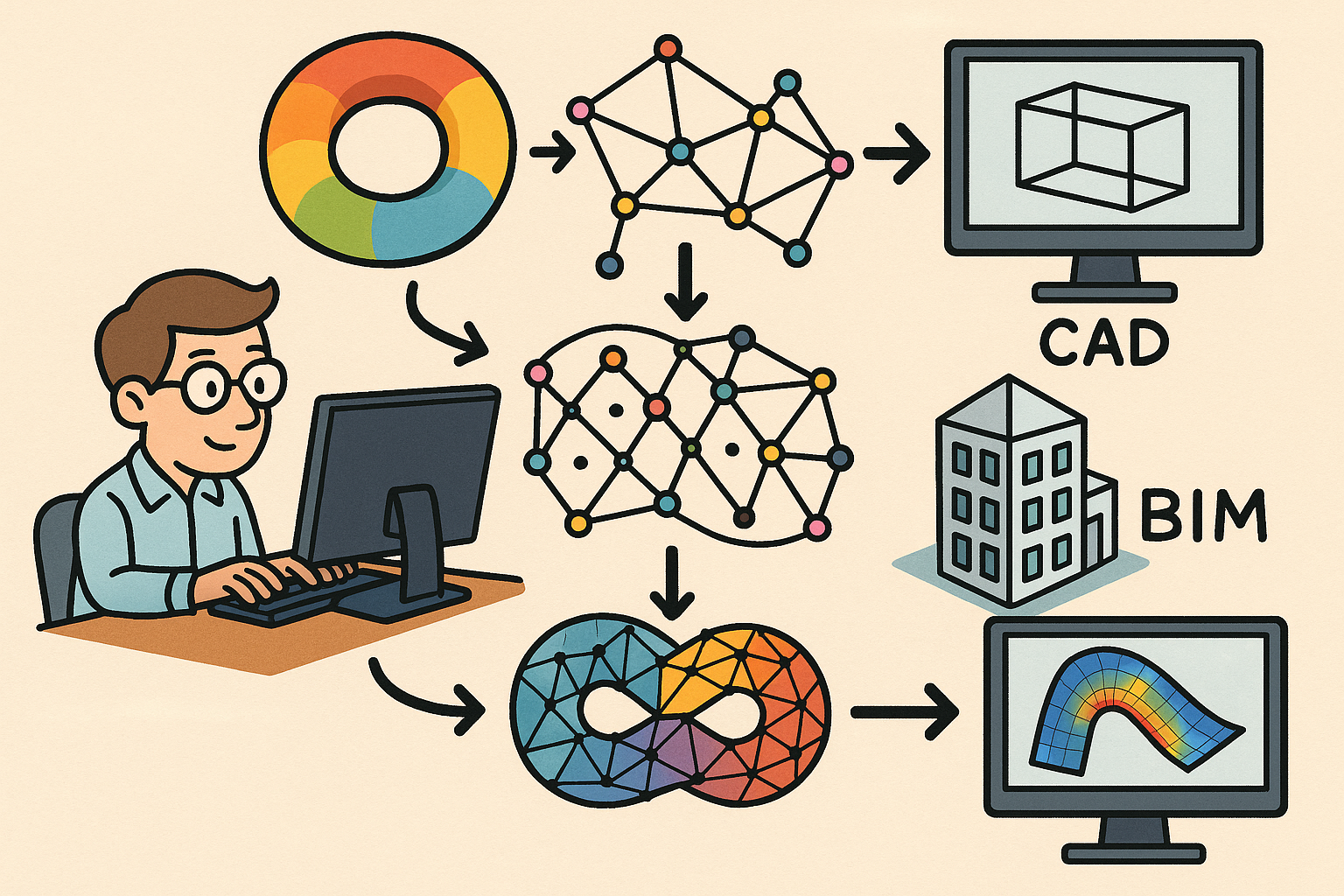

Design Software History: From Computational Topology to Design Software: Integrating TDA into CAD, BIM and CAE Workflows

December 25, 2025 10 min read

Read More

Cinema 4D Tip: Hair-to-Spline Workflow for Stylized NPR Ribbons in Cinema 4D

December 25, 2025 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …