Your Cart is Empty

Customer Testimonials

-

"Great customer service. The folks at Novedge were super helpful in navigating a somewhat complicated order including software upgrades and serial numbers in various stages of inactivity. They were friendly and helpful throughout the process.."

Ruben Ruckmark

"Quick & very helpful. We have been using Novedge for years and are very happy with their quick service when we need to make a purchase and excellent support resolving any issues."

Will Woodson

"Scott is the best. He reminds me about subscriptions dates, guides me in the correct direction for updates. He always responds promptly to me. He is literally the reason I continue to work with Novedge and will do so in the future."

Edward Mchugh

"Calvin Lok is “the man”. After my purchase of Sketchup 2021, he called me and provided step-by-step instructions to ease me through difficulties I was having with the setup of my new software."

Mike Borzage

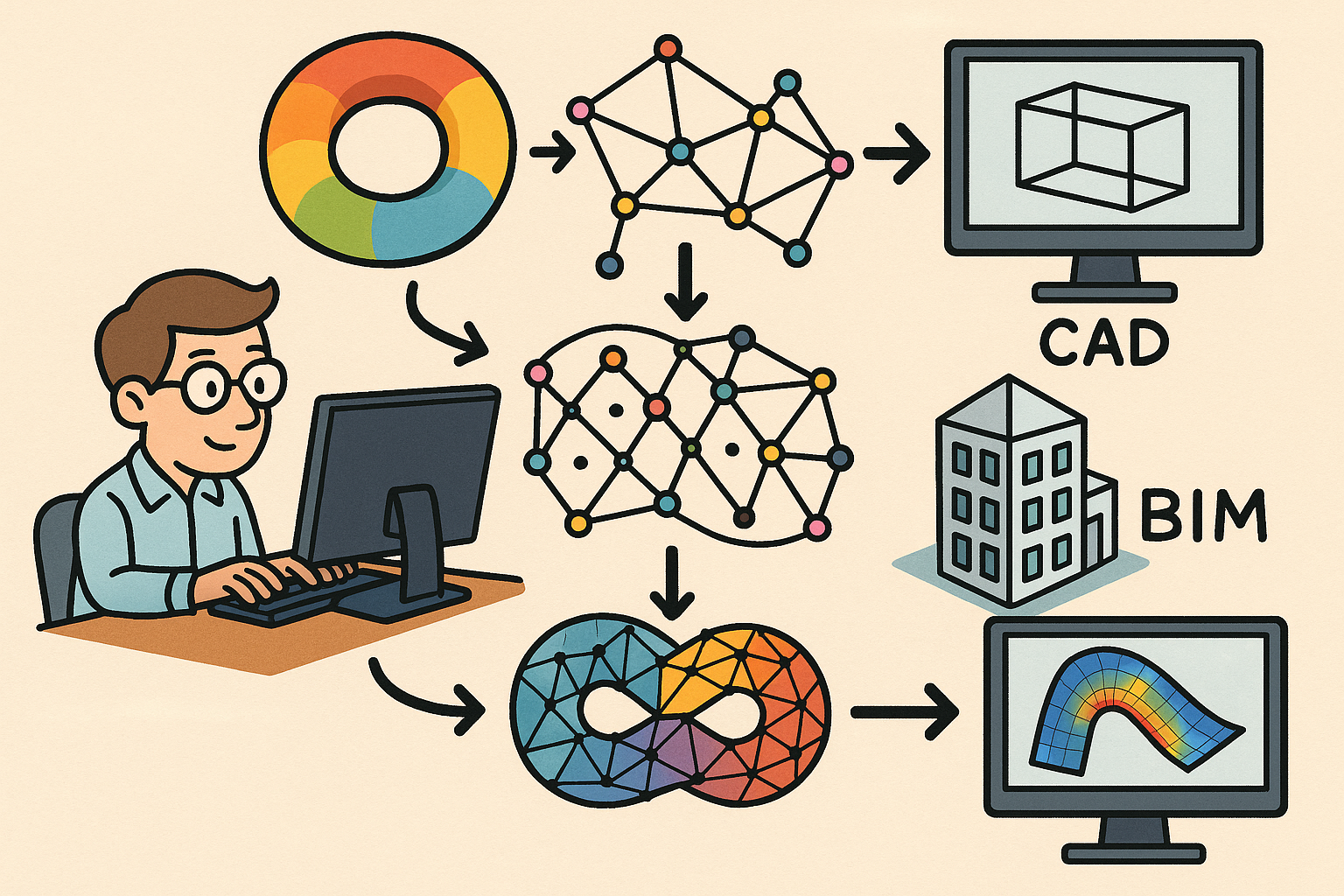

Design Software History: From Computational Topology to Design Software: Integrating TDA into CAD, BIM and CAE Workflows

December 25, 2025 10 min read

From computational topology to design software: early roots and milestones

Early theoretical pillars (1991–2010)

Design software’s embrace of Topological Data Analysis (TDA) came late, but it did not appear overnight; it emerged from a sequence of mathematical ideas that matured across the 1990s and 2000s before moving into CAD/BIM/CAE pipelines. A widely cited starting point is 1991, when Yoshihisa Shinagawa, Tosiyasu Kunii, and Yannick Kergosien introduced Reeb graph–based segmentation for shapes, repurposing a classic topological construct into a practical tool for partitioning models by how level sets of a function connect across a surface. That move made topology operational: design geometry could be decomposed into meaningful parts (protrusions, tunnels, and caps) using the connectivity encoded by a scalar field such as height or geodesic distance. Soon after, the 1994 introduction of three-dimensional alpha shapes by Herbert Edelsbrunner and Ernst Mücke turned Delaunay-Voronoi scaffolding into a bridge between point clouds and inferred topology, laying the groundwork for scan-driven reconstruction workflows where one must infer watertightness and connectivity from incomplete samples. Alpha complexes became the language of reverse engineering from scattered points, a theme that would return with the rise of consumer and industrial 3D scanning.

The next decisive step arrived in 2002 with Herbert Edelsbrunner, David Letscher, and Afra Zomorodian’s computational formalization of persistent homology, which assigns lifetimes to topological features as a shape or field is swept by a filtration. This yielded stable, multiscale invariants (barcodes and diagrams) that summarize “what topology exists and at what scale.” In 2007, Gurjeet Singh, Facundo Mémoli, and Gunnar Carlsson introduced the Mapper algorithm, which converts functions on high-dimensional data into navigable graph abstractions supporting interactive visual analytics. In 2009, Carlsson’s survey “Topology and Data” popularized “TDA” as a banner for applying these ideas beyond pure geometry, emphasizing robustness to sampling and noise. By 2010, the monograph “Computational Topology” by Herbert Edelsbrunner and John Harer provided the standard reference, aligning theory with algorithms. Together, these milestones yielded a vocabulary (Reeb graphs, alpha complexes, persistent homology, Mapper) that translated readily into shape processing, simulation analytics, and ultimately CAD-adjacent software stacks.

- 1991: Reeb graph–based segmentation (Shinagawa, Kunii, Kergosien) operationalizes topology for shape partitioning.

- 1994: Alpha shapes (Edelsbrunner, Mücke) connect point clouds to topology via Delaunay geometry.

- 2002–2010: Persistent homology and “Computational Topology” standardize stable, multiscale topological descriptors.

- 2007–2009: Carlsson’s TDA and the Mapper algorithm link topology with interactive analytics.

Open stacks and early industrial touchpoints (2010s)

With theory in place, the 2010s became about open implementations and their first industrial footprints. INRIA’s GUDHI project, initiated by Jean-Daniel Boissonnat and developed by the DataShape team (whose members include Frédéric Chazal and Steve Oudot), delivered a comprehensive C++/Python library for filtrations, persistent homology, alpha complexes, and geometric inference. In parallel, Dmitriy Morozov’s Dionysus and Ulrich Bauer’s Ripser targeted speed for Vietoris–Rips persistence, the latter inspiring GPU-accelerated variants such as Ripser++ and contributing to a wave of performance-oriented persistence pipelines. On the visualization side, Julien Tierny and collaborators released the Topology ToolKit (TTK), which embedded Reeb graphs, Morse–Smale complexes, and persistence computations directly into VTK/ParaView via Kitware’s ecosystem. The Python community further lowered barriers through scikit-tda (Nathaniel Saul, Chris Tralie) and giotto-tda, making persistence diagrams, landscapes, and Mapper available to data scientists and computational designers who prefer notebooks over C++ build chains.

These stacks catalyzed early industry exploration. Carlsson’s team co‑founded Ayasdi to commercialize Mapper for high‑dimensional analytics across finance, healthcare, and manufacturing, demonstrating that topology could compress complex datasets into actionable shape‑of‑data summaries. In CAE and scientific visualization, ParaView + TTK spread into research labs, turbomachinery groups, and energy companies to dissect CFD/FEA fields, revealing bifurcations and regime changes that scatterplots obscured. Meanwhile, R&D units at Dassault Systèmes, Siemens Digital Industries Software, and Autodesk Research began prototyping topological descriptors for shape retrieval, mesh defeaturing, and robustness checks in simulation workflows. This period also aligned TDA with reverse engineering and additive manufacturing: alpha and witness complexes helped quantify porosity and connectivity in lattices, while homology‑based checks informed watertightness and defect detection. By the late 2010s, the message was clear: topology was not merely theoretical; it was a practical, open, and performance‑aware layer ready to be threaded through CAD/BIM/CAE toolchains.

What TDA measures in shapes and why it matters for CAD/BIM/CAE

Stable multiscale signatures and segmentation

At its core, TDA equips designers and engineers with stable multiscale signatures of shape. Betti numbers count connected components, tunnels, and voids, but the real power arrives when these counts are tracked across a filtration to form persistence diagrams and barcodes. In practice, one selects a scalar function—height, geodesic distance, thickness, curvature, or a simulation variable—and constructs lower‑star, alpha, or Rips filtrations. Features that persist across a range of thresholds are deemed meaningful; those that flicker briefly are treated as noise. These summaries can be transformed into persistence landscapes and images for machine learning, striking a balance between mathematical rigor and computational convenience. Crucially, stability theorems guarantee that small perturbations in geometry or sampling induce small changes in the diagrams, which is essential when dealing with remeshing, scanning noise, or parameterized design sweeps that do not alter function.
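To make the filtration idea concrete, here is a minimal sketch in plain Python (no TDA library) of 0-dimensional persistence for the sublevel sets of a sampled 1D profile, such as a thickness or height signal along a path. The function name `sublevel_persistence_0d` and the sample values are illustrative assumptions, not an API of any library named in this article; tools such as GUDHI or TTK handle meshes and higher-dimensional features.

```python
def sublevel_persistence_0d(values):
    """Return (birth, death) pairs of connected components as the
    threshold sweeps upward over a 1D signal (lower-star filtration
    on a path graph)."""
    n = len(values)
    if n == 0:
        return []
    parent = [None] * n   # None means "vertex not yet in the filtration"
    birth = {}            # component root -> value at which it was born

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    pairs = []
    # Add vertices in order of increasing function value.
    for i in sorted(range(n), key=lambda k: values[k]):
        parent[i] = i
        birth[i] = values[i]
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] is not None:
                ri, rj = find(i), find(j)
                if ri == rj:
                    continue
                # Elder rule: the younger component (larger birth) dies.
                young, old = (ri, rj) if birth[ri] > birth[rj] else (rj, ri)
                if birth[young] < values[i]:  # skip zero-persistence pairs
                    pairs.append((birth[young], values[i]))
                parent[young] = old
    # The oldest component never dies.
    pairs.append((birth[find(0)], float("inf")))
    return sorted(pairs)


profile = [0, 3, 1, 4, 2, 5]
print(sublevel_persistence_0d(profile))  # [(0, inf), (1, 3), (2, 4)]
```

Each pair reads as (birth, death): a local minimum starts a component, and the component dies when it merges into an older one. Discarding pairs whose `death - birth` is small is the "features that flicker briefly are noise" rule in code form.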

Segmentation, and by extension feature understanding, builds on Reeb and Morse–Smale theory. The Reeb graph collapses each connected component of a function’s level sets to a point, giving a skeletal summary of how the surface is connected; this proves invaluable for isolating protrusions, handles, and cavities that repeatedly frustrate automated feature recognition. The Morse–Smale complex further partitions a scalar field into gradient basins and separatrices, giving a structured decomposition useful for defeaturing and simplification without losing functionality. Influential work by Silvia Biasotti, Bianca Falcidieno, and Michela Spagnuolo (IMATI-CNR) advanced how Reeb-like constructs carve models into semantically meaningful parts, while Tamal Dey and collaborators focused on robust extraction from meshes and CAD surfaces in the presence of degeneracies. When combined with persistence-guided pruning, these structures yield designer-controllable segmentations that retain engineering intent and sidestep brittle rule-based approaches that can overfit to a specific tessellation or naming convention.

- Persistence diagrams summarize multiscale topology and withstand remeshing and noise.

- Reeb and Morse–Smale complexes create interpretable, controllable segmentations aligned to functional features.

- Landscapes/images enable ML without discarding interpretability; stability theorems ensure robustness.

Search, simulation, and manufacturing impact

Beyond segmentation, TDA gives CAD/BIM/CAE workflows new levers for search, matching, and simulation insight. In shape retrieval, persistence-based descriptors complement spectral signatures such as the heat kernel signature (HKS) and commute-time kernels, as well as B-rep graph methods common in mechanical part libraries. Evaluations on benchmarks such as the Princeton Shape Benchmark and the SHREC contests suggest that topological descriptors tolerate small geometric noise and tessellation changes, while still providing interpretable “what changed” explanations via feature pairings in the diagram. This is significant for parametric families of parts where fillets vary, holes migrate slightly, or lattices densify: the topological summary remains stable and searchable without brittle hand-crafted heuristics. That same interpretability carries into CAE analytics. Mapper and persistence can summarize CFD/FEA fields across parameter sweeps, highlighting bifurcations, regime transitions, and failure modes that standard KPI dashboards miss, particularly when flow topology, shock structures, or stress channeling is the salient story.

Manufacturing and inspection workflows also benefit. Homology checks can confirm watertightness and intended connectivity in complex lattices and porous structures, detecting accidental disconnections or unmanufacturable micro‑voids before print. In CT‑vs‑CAD comparisons for additive manufacturing QA, persistence can quantify where reconstructed topology diverges from the design intent, guiding targeted repair rather than global over‑smoothing. Mesh QA and repair ecosystems—Materialise Magics and Autodesk Netfabb among them—have historically focused on geometric fixes (self‑intersections, inverted normals, non‑manifold edges). A topology‑aware layer augments that playbook: persistence‑based filtering suggests denoising thresholds that do not erase functional channels; Reeb‑driven segmentation supports selective remeshing of problematic regions; and alpha‑complex diagnostics reveal undersampled features in scan‑to‑CAD. The outcome is fewer brittle decisions and more principled tolerances around what constitutes a “valid” model for simulation and manufacturing.
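A homology check of this kind can be sketched for a triangle mesh with nothing more than edge counting and the Euler characteristic. The example below is a simplified illustration, not how Magics or Netfabb work internally: the function name `mesh_topology` is hypothetical, and the Betti numbers it reports are only valid under the stated assumption that the mesh is a closed, orientable, manifold surface.

```python
from collections import Counter


def mesh_topology(triangles):
    """Report watertightness, component count, and (for closed
    orientable surfaces) Betti numbers of a triangle mesh given as
    a list of vertex-index triples."""
    edge_count = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_count[(min(u, v), max(u, v))] += 1
    vertices = {v for tri in triangles for v in tri}

    # Connected components (b0) via union-find over the mesh edges.
    parent = {v: v for v in vertices}

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for u, v in edge_count:
        parent[find(u)] = find(v)
    b0 = len({find(v) for v in vertices})

    # Watertight here means every edge is shared by exactly two triangles.
    watertight = bool(triangles) and all(n == 2 for n in edge_count.values())
    chi = len(vertices) - len(edge_count) + len(triangles)

    report = {"watertight": watertight, "b0": b0, "euler": chi,
              "b1": None, "b2": None}
    if watertight:
        # Assumes orientability: chi = b0 - b1 + b2 and b2 = b0.
        report["b1"] = 2 * b0 - chi   # twice the total genus
        report["b2"] = b0             # one enclosed void per closed shell
    return report


tetra = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
print(mesh_topology(tetra))
print(mesh_topology(tetra[:3])["watertight"])  # False: one face is missing
```

Comparing `b0` and `b1` against the values intended by the design is a cheap gate before printing: an unexpected extra component signals a disconnected strut, and an unexpected change in `b1` signals a tunnel that was opened or closed.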

- Search and matching: persistence complements spectral and B‑rep methods for robust retrieval in large repositories.

- Simulation insight: Mapper captures bifurcations and regime changes in CFD/FEA fields with interpretable structure.

- AM QA and inspection: homology verifies connectivity; persistence quantifies CT‑vs‑CAD discrepancies without blunt smoothing.

How it gets built into tools: kernels, pipelines and performance

Where it plugs in and algorithmic choices

Bringing topology into design software requires careful placement in the pipeline and judicious algorithmic choices. For B‑rep‑centric systems, one typical route is to generate scalar fields over derived meshes: curvature, thickness, signed distance to the medial axis, or a physics field, then run lower‑star filtrations to compute persistence and feed Reeb/Morse–Smale segmentation. When the input is a point cloud or voxels—common in scan‑to‑CAD or AM verification—alpha complexes, witness complexes, and Vietoris–Rips filtrations offer alternatives with different speed‑accuracy tradeoffs. For CAE parameter studies, the same principle scales to high‑dimensional outputs: compute Mapper on collections of fields or statistics, then project the resulting graph into PLM/analytics dashboards that link nodes back to simulation states. Each insertion point matches the data at hand but shares a common thread: a filtration encodes “how the structure emerges,” and persistence quantifies which parts matter.
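For the point-cloud route, the simplest case to sketch is 0-dimensional Vietoris–Rips persistence, which coincides with single-linkage clustering: every point is born at scale 0, and a component dies at the length of the edge that merges it into another. The code below is an illustrative assumption-laden sketch (brute-force pairwise distances, hypothetical function names) and not a substitute for Ripser or GUDHI, which also compute tunnels and voids and scale far better.

```python
import math
from itertools import combinations


def rips_h0_deaths(points):
    """Death scales of 0-dimensional Vietoris-Rips classes.
    Uses Kruskal's algorithm: each edge that joins two components
    kills one of them at the edge length. One class never dies."""
    n = len(points)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    edges = sorted((math.dist(points[i], points[j]), i, j)
                   for i, j in combinations(range(n), 2))
    deaths = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            deaths.append(length)
    return deaths


def clusters_at_scale(deaths, r):
    """Number of connected components once all edges of length <= r exist."""
    return 1 + sum(1 for d in deaths if d > r)


# Two well-separated clumps of scan points (made-up coordinates).
scan = [(0, 0), (1, 0), (0, 1), (10, 0), (11, 0)]
deaths = rips_h0_deaths(scan)
print(deaths)
print(clusters_at_scale(deaths, 2.0))  # 2
```

The large gap between the short deaths and the final one is the persistence signal: it says the cloud has two components over a wide range of scales, which in scan-to-CAD might indicate two separate bodies or a gap in coverage.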

Algorithmically, design teams juggle a handful of decisions. Filtration design is the first: alpha vs lower‑star vs witness reflects tradeoffs among stability, sampling density, and computational cost. Comparing shapes at scale involves whether to use exact bottleneck/Wasserstein distances—precise but expensive—or fast embeddings via persistence landscapes, silhouettes, and images that support indexing and approximate nearest neighbor search. Simplification is where topology proves its worth: persistence‑guided denoising assigns a principled threshold to remove tiny features while preserving functionally meaningful ones, avoiding arbitrary decimation ratios. When working with CAD surfaces and meshes, robust Reeb extraction (Dey et al.) and streaming lower‑star algorithms reduce sensitivity to degeneracies and non‑manifold artifacts. The result is not a monolithic “TDA mode,” but a set of composable operators—filtration selection, persistence computation, diagram simplification, and Reeb/Morse–Smale segmentation—that can be slotted into existing meshing, feature recognition, and simulation pipelines.

- From B‑rep to mesh: derive scalar fields; run lower‑star filtrations; apply persistence‑guided segmentation/simplification.

- For point clouds/voxels: use alpha, witness, or Rips filtrations to support scan‑to‑CAD and AM verification.

- For CAE studies: compute Mapper on ensembles; link nodes to states in PLM dashboards with drill‑down to fields.

- Distance vs embedding: bottleneck/Wasserstein for precision; landscapes/images for scalable search.
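Two of these operators are small enough to sketch directly: persistence-guided simplification (drop pairs below a threshold) and the persistence landscape, a fixed-length embedding of a diagram. The helper names below are assumptions for this example; libraries such as GUDHI and giotto-tda ship their own vectorization routines, and infinite death values should be clipped before using a landscape in practice.

```python
def simplify(diagram, threshold):
    """Keep only features whose persistence (death - birth) meets the threshold."""
    return [(b, d) for b, d in diagram if d - b >= threshold]


def landscape(diagram, k, t):
    """Value at t of the k-th persistence landscape (k = 1 is the outermost).
    Each pair contributes a 'tent' max(0, min(t - birth, death - t));
    the k-th landscape takes the k-th largest tent value at t."""
    tents = sorted((max(0.0, min(t - b, d - t)) for b, d in diagram),
                   reverse=True)
    return tents[k - 1] if k <= len(tents) else 0.0


def landscape_vector(diagram, ts, levels=2):
    """Sample the first `levels` landscapes on a grid to get a fixed-length vector."""
    return [landscape(diagram, k, t) for k in range(1, levels + 1) for t in ts]


diagram = [(0.0, 4.0), (1.0, 3.0), (2.0, 2.1)]
print(simplify(diagram, 0.5))                       # [(0.0, 4.0), (1.0, 3.0)]
print(landscape_vector(diagram, [1.0, 2.0, 3.0]))   # [1.0, 2.0, 1.0, 0.0, 1.0, 0.0]
```

Because the output is an ordinary vector, it can be indexed with approximate nearest neighbor search, whereas bottleneck or Wasserstein distances require solving a matching problem for every pair of diagrams.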

Libraries, acceleration, and UX patterns

The practical stack is increasingly standardized. GUDHI, Ripser/Ripser++, Dionysus, and TTK form a high‑performance core implemented in C++ with Python bindings, while scikit‑tda and giotto‑tda provide user‑friendly orchestration and visualization in notebooks. Acceleration hinges on sparsified complexes, cohomology‑based persistence, GPU kernels, and streaming updates that avoid rebuilding from scratch when fields nudge by small deltas. In large assemblies and CFD meshes, these tactics turn otherwise intractable diagrams into interactive experiences. Data plumbing matters just as much: VTK/ParaView handles heavy visualization and interaction; OCCT/OpenCascade bridges B‑rep to triangulations; and in‑house research prototypes at Dassault Systèmes, Siemens, and Autodesk knit these layers into feature recognition, mesh healing, and simulation tools without forcing users to switch contexts. The most effective workflows conceal complexity while exposing interpretable outputs—barcodes, diagrams, Reeb skeletons—that click with engineers’ mental models.

UX patterns have converged on visual analytics and node-based composition. In ParaView, linked views are standard: a persistence diagram sits beside the 3D model and a time/parameter plot; brushing a point pair highlights its spatial support on the mesh, while a range selection drives simplification or region selection. This round trip from diagram to mesh to scalar chart turns algebraic topology into concrete design action. For algorithmic assembly, Grasshopper and Dynamo provide familiar node-based workflows; emerging components expose persistence diagrams, Mapper graphs, and Reeb/Morse–Smale segmentation so designers can insert topology-aware steps between geometry creation, meshing, and evaluation. Documentation and presets are vital: default filtrations for thickness/curvature, recommended persistence thresholds for “light/medium/heavy” denoising, and example Mapper templates for CFD parameter sweeps help users get value without revisiting theory. When this UX scaffolding sits atop accelerated libraries, topology becomes a natural, fast, and interpretable extension of existing modeling and simulation practice.

- Core libraries: GUDHI, Ripser/Ripser++, Dionysus, TTK; orchestration via scikit‑tda and giotto‑tda.

- Acceleration: sparsified complexes, cohomology tricks, GPU kernels, and streaming updates.

- Plumbing: VTK/ParaView for visualization, OCCT for B‑rep/mesh bridges, and vendor prototypes wiring it all together.

- UX: linked persistence views with 3D selection; node‑based components in Grasshopper/Dynamo to encourage adoption.

Conclusion

Why topology now fits CAD/BIM/CAE

Topological data analysis gives engineering design something it has long needed: stable, multiscale metrics for “shape understanding” that survive remeshing, noise, and small parameter tweaks. Barcodes, diagrams, and landscapes distill connectivity, tunnels, and voids into concise, comparable signatures; Reeb and Morse–Smale structures carve models into functionally aligned pieces that can be defeatured or remeshed without guesswork. This coherence mirrors earlier mathematical transitions in CAD: when NURBS standardized surface representation and parametrics stabilized constraint solving, entire toolchains shifted toward reliability and reuse. The same story is unfolding here. With the maturation of Edelsbrunner and Harer’s formalism and the production‑grade quality of GUDHI, Ripser, and TTK, topology crossed from whiteboards into libraries that vendors can ship, test, and support. In visualization and analytics, ParaView and TTK demonstrated that persistence could be computed on real meshes and fields, linked to 3D selection, and acted upon—not merely plotted.

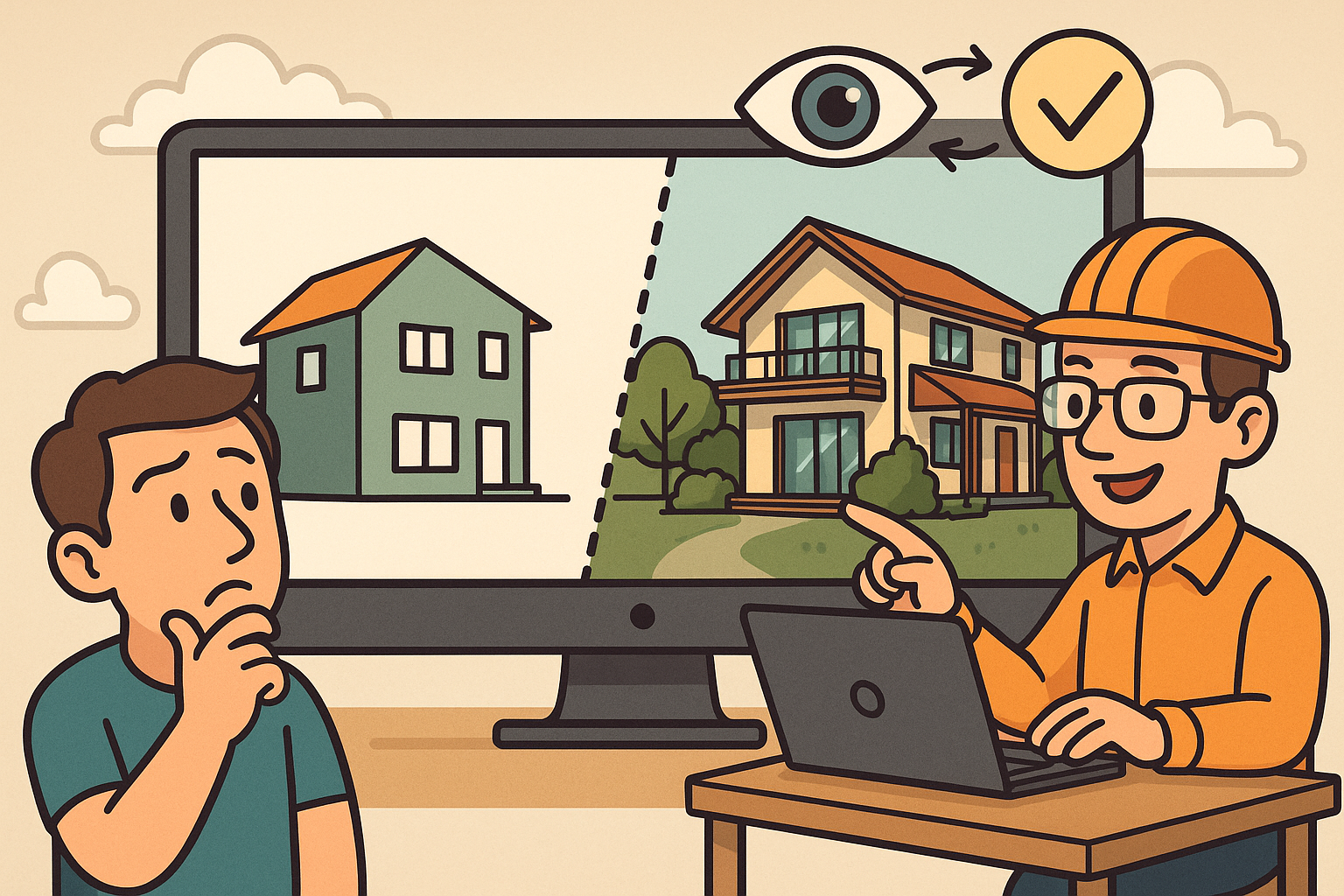

The implications are immediate across domains. In MCAD, topology‑guided segmentation and simplification reduce mesh bloat while preserving function, enabling faster analyses and cleaner manufacturing prep. In BIM, homology‑aware checks verify the intended connectivity of systems—ducts, MEP networks, and structural lattices—under aggressive model federation and LOD shifts. In CAE, Mapper compresses parameterized studies into interpretable graphs that highlight regime changes and anomalous behaviors, guiding exploration and validation. Across these contexts, the benefit is interpretability coupled with robustness: engineers can see which feature pairs define a tunnel or cavity, brush them, and confirm that the resulting edit or simplification aligns with intent. As vendors harden the integrations and add presets for common workflows, TDA becomes less a specialist’s toolkit and more a common language for design, analysis, and quality assurance.

Near-term productization and the next frontier

Expect the next phase to center on performance, standardization, and hybrid intelligence. Cloud/GPU acceleration will make real‑time persistence practical in viewports, allowing interactive denoising sliders tied to diagram thresholds and live Reeb/Morse–Smale overlays during modeling. Standardized topological descriptors will join PDM/PLM metadata alongside mass properties and curvature plots, enabling repository‑wide queries like “find parts with two through‑tunnels and a primary cavity born above thickness τ.” On the ML front, hybrid TDA+learning models will use landscapes/images as inputs for ranking and retrieval, while diagram‑aware losses regularize generative design to avoid unintended topology—no accidental holes or disconnected lattices. Simulation ensembles will gain topology‑aware surrogates: persistence‑preserving autoencoders that maintain the structure of flow or stress fields and flag when topology changes invalidate the surrogate’s predictions.
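A repository-wide query of the kind quoted above could look roughly like the following sketch. Everything here is hypothetical: no PDM/PLM system is known to expose this schema, and the catalog layout (part id mapped to per-dimension persistence diagrams computed on a thickness filtration), the part names, and the numbers are invented for illustration.

```python
def significant(diagram, min_persistence):
    """Features that persist for at least `min_persistence`."""
    return [(b, d) for b, d in diagram if d - b >= min_persistence]


def primary(diagram):
    """The most persistent feature in a diagram, or None if it is empty."""
    return max(diagram, key=lambda p: p[1] - p[0], default=None)


def find_parts(catalog, n_tunnels, min_persistence, tau):
    """Parts with exactly `n_tunnels` significant 1-dimensional features
    whose primary 2-dimensional feature (cavity) is born above `tau`."""
    hits = []
    for part_id, diagrams in catalog.items():
        tunnels = significant(diagrams.get(1, []), min_persistence)
        cavity = primary(diagrams.get(2, []))
        if len(tunnels) == n_tunnels and cavity is not None and cavity[0] > tau:
            hits.append(part_id)
    return sorted(hits)


# Hypothetical cached signatures: {part id: {homology dimension: diagram}}.
catalog = {
    "bracket-A": {1: [(0.2, 3.0), (0.3, 2.8), (1.0, 1.05)], 2: [(1.5, 4.0)]},
    "housing-B": {1: [(0.2, 3.0)], 2: [(1.5, 4.0)]},
    "plate-C": {1: [(0.1, 2.0), (0.2, 2.5)], 2: [(0.4, 3.0)]},
}
print(find_parts(catalog, n_tunnels=2, min_persistence=0.5, tau=1.0))  # ['bracket-A']
```

The persistence threshold is what keeps the query robust: the short-lived third loop on the first part is ignored, so a remeshed or lightly edited revision would still match.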

Productization inside kernels is the longer arc. Vendors will expose topological signatures as first‑class citizens—cached per feature or body, diff‑able across revisions, and enforceable in checks—so that a fillet removal that merges two cavities triggers a warning just like a violated tolerance. CAD kernels will offer built‑ins for lower‑star filtrations over curvature/thickness and provide hooks for Reeb/Morse–Smale segmentation that downstream meshing and defeaturing respect. BIM platforms will attach homology summaries to MEP networks to ensure connectivity survives model fusion. CAE environments will integrate Mapper into design‑of‑experiments dashboards, with nodes linked to simulation histories and 3D state captures. The unifying principle is simple: make topology a routine property, not an afterthought. When topological signatures sit beside parametrics, constraints, and material models, the design stack becomes more robust to noise, modification, and scale—exactly what is needed as geometry becomes more complex, data flows more continuous, and verification more demanding across MCAD, BIM, and CAE toolchains.
