Model-Based Definition: Semantic PMI, AP242/QIF Validation, and Paperless Manufacturing

January 08, 2026 13 min read

Introduction

Setting the stage for model-based definition

Design and manufacturing teams are converging on a simple, powerful idea: let the 3D model and its embedded intelligence drive everything from toolpaths to inspection to service. That is the promise of Model-Based Definition (MBD), and its broader operational manifestation, Model-Based Enterprise (MBE). While the term has existed for decades, the practical meaning of MBD has sharpened dramatically with the maturation of semantic Product Manufacturing Information (PMI), neutral data standards, and a digitally integrated shop floor. This article distills what MBD means today, which capabilities matter most for authoring and validation, and how to consume MBD across manufacturing without falling back to drawings. The focus is on making the model truly computable by downstream systems, enforcing governance so quality scales, and grounding the effort in PLM/MES effectivity and secure provenance. If you are planning pilots or leveling up existing programs, the goal here is to provide both conceptual clarity and detailed, pragmatic steps you can apply immediately to reduce interpretation risk, accelerate change, and link quality outcomes to design intent in a repeatable, auditable way.

- Why now: CAM/CMM automation thrives on machine-readable GD&T rather than graphical callouts.

- What’s different: standards like STEP AP242 and QIF enable round-trip interoperability and metrology automation.

- Where to begin: focused pilots on high-value parts and a robust validation backbone to avoid scaling faulty models.

What MBD Really Means Today

Clarifying the single source of truth with embedded PMI

MBD means the authoritative definition of the product is the 3D model enriched with Product Manufacturing Information (PMI). The model is not a visual reference; it is the specification. Geometry, topology, tolerances, materials, surface treatments, finishes, notes, and specification references live together such that design intent is both human-comprehensible and machine-actionable. Practically, this “single source of truth” implies that derived outputs—drawings, 3D PDFs, NC code, CMM programs, setup sheets—inherit from and trace to the model revision and effectivity. The model’s embedded metadata and links ensure that when dimensions or tolerances change, the connected process plans, inspection routines, and documentation update consistently. This is the core of the digital thread: minimizing opportunities for transcription error and ambiguity. To achieve this, MBD models should avoid face-only annotations that break when features move. Instead, PMI needs to bind to features and datums defined intentionally (holes, slots, bosses, patterns, and compound features) so that change propagation respects function. When that happens, the model’s role shifts from describing geometry to governing behavior across manufacturing, quality, and service.

- Authoritative source: the model carries intent, not just shape, and downstream systems rely on it directly.

- Traceability: all derived artifacts reference the same revision, effectivity, and configuration context.

- Resilience: feature-linked PMI maintains relevance through design evolution.
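To make the feature-linkage point concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory model in which each feature carries a persistent ID plus a list of face indices that a regeneration may renumber. All class and function names are illustrative, not any CAD vendor's API. PMI resolved through the feature ID still finds the hole after a rebuild. PMI bound to a raw face index silently lands on whichever face now holds that index.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class Feature:
    feature_id: str          # persistent ID assumed to survive regeneration
    kind: str                # e.g. "hole", "slot"
    face_indices: list[int]  # transient topology; a rebuild may renumber these

@dataclass
class Model:
    features: dict[str, Feature] = field(default_factory=dict)

    def face_owner(self, face_index: int) -> str | None:
        """Return the ID of the feature that currently owns a face index, if any."""
        for feat in self.features.values():
            if face_index in feat.face_indices:
                return feat.feature_id
        return None

def resolve_feature_bound(model: Model, feature_id: str) -> str | None:
    # Feature-linked PMI: resolve through the persistent feature ID.
    return feature_id if feature_id in model.features else None

def resolve_face_bound(model: Model, face_index: int) -> str | None:
    # Face-linked PMI: resolve to whichever feature owns that index now.
    return model.face_owner(face_index)

# Revision A: hole H1 owns face 4, and a position tolerance is authored on it.
rev_a = Model({"H1": Feature("H1", "hole", [4]), "S1": Feature("S1", "slot", [7])})
# Revision B: a design change renumbers faces, so face 4 now belongs to the slot.
rev_b = Model({"H1": Feature("H1", "hole", [9]), "S1": Feature("S1", "slot", [4])})

print(resolve_feature_bound(rev_b, "H1"))  # H1 (still the hole)
print(resolve_face_bound(rev_b, 4))        # S1 (silently retargeted to the slot)
```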

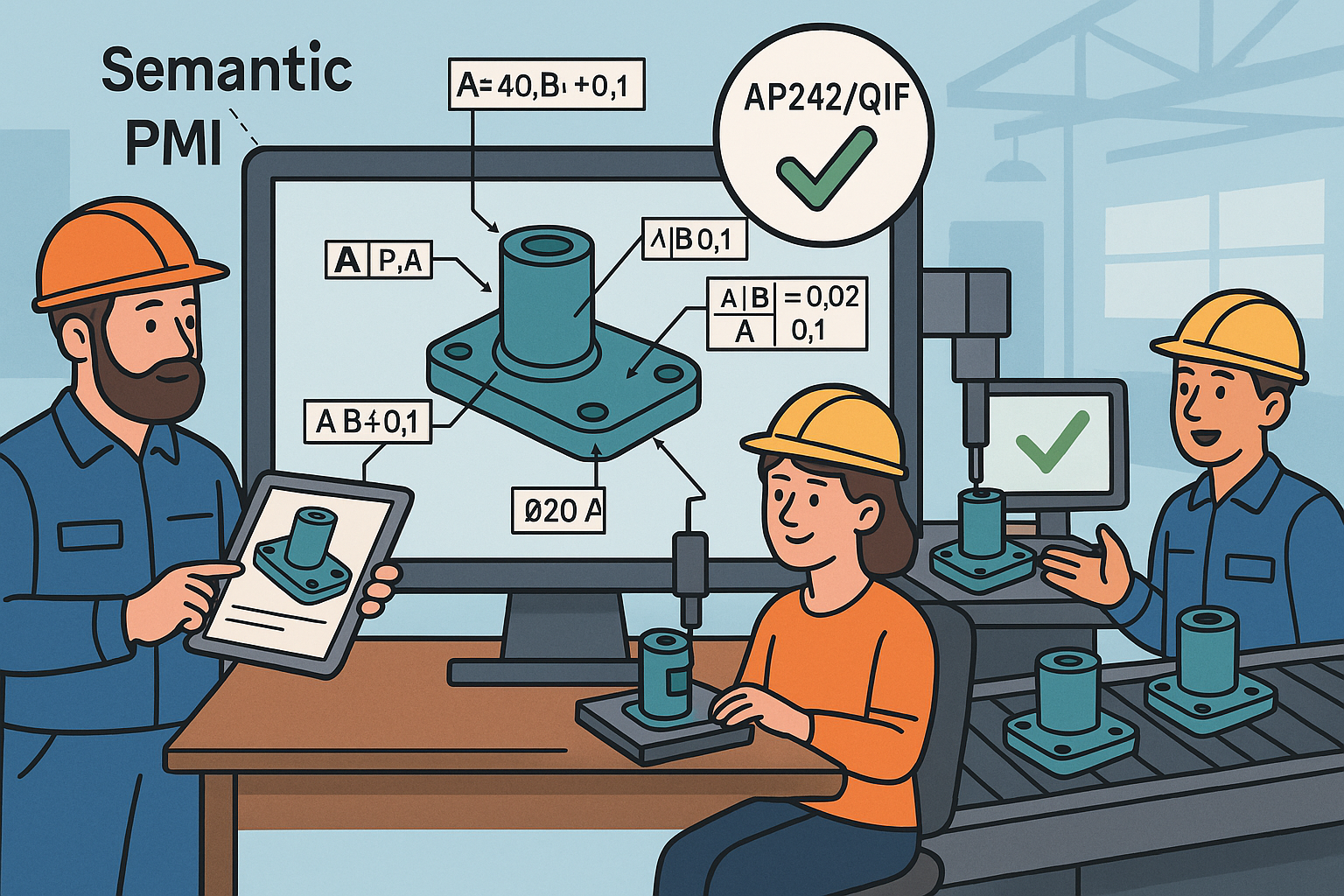

Graphical versus semantic PMI and why machine readability matters

Graphical PMI consists of visual annotations (dimension strings, symbols, leaders) rendered for people. Although it places callouts directly on the 3D geometry, graphical-only PMI leaves automation on the table: CAM and CMM software must infer meaning from pictures. In contrast, semantic PMI encodes the same information in structured, machine-readable form: datums, feature control frames, modifiers, material conditions, and surface finish are modeled as data objects tied to explicit features. This enables rule-based tolerance consumption, automatic CMM program generation, SPC feedback loops linked to features, and tolerance-aware toolpath strategies. For example, when a profile-of-a-surface tolerance applied to a pattern of complex surfaces is semantic, it can drive probing density and adaptive finishing automatically, with no manual interpretation required. Material and finish selections can similarly steer process plans: heat-treat sequences, coating allowances, or textured surface requirements become computable constraints. Combined with controlled vocabularies and schemas, semantic PMI eliminates ambiguity, enabling reliable exchange via standards and consistent consumption by CAM, CMM, and MES. The result is fewer misinterpretations and a repeatable path to automation that scales across part families.

- Graphical PMI: great for humans, insufficient for deterministic automation.

- Semantic PMI: data-driven, feature-linked, and consumable by algorithms.

- Outcome: machine-readable GD&T unlocks robust CAM/CMM automation and analytics.
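To illustrate what machine-readable GD&T buys, here is a minimal Python sketch of a position feature control frame held as a data object rather than rendered text. The class and field names are hypothetical and are not the AP242 schema. Because the material condition modifier is a field instead of a glyph, a consumer can compute the bonus tolerance directly. At MMC, the allowed position tolerance is the stated value plus the feature's departure from its MMC size. For a hole, MMC is the smallest allowed size. For a pin, it is the largest.

```python
from dataclasses import dataclass
from enum import Enum

class MaterialCondition(Enum):
    RFS = "RFS"  # regardless of feature size (no modifier)
    MMC = "MMC"
    LMC = "LMC"

@dataclass(frozen=True)
class SizeLimits:
    low: float
    high: float
    internal: bool  # True for a hole or slot, False for a pin or boss

    @property
    def mmc(self) -> float:
        # Maximum material: smallest hole, largest pin.
        return self.low if self.internal else self.high

    @property
    def lmc(self) -> float:
        return self.high if self.internal else self.low

@dataclass(frozen=True)
class PositionFCF:
    tolerance: float              # stated diametral position tolerance
    modifier: MaterialCondition
    datums: tuple[str, ...]       # datum reference frame, e.g. ("A", "B", "C")

def allowed_position_tolerance(fcf: PositionFCF, size: SizeLimits, actual: float) -> float:
    """Total position tolerance available at a measured (actual mating) size."""
    if not size.low <= actual <= size.high:
        raise ValueError("feature is outside its size limits")
    if fcf.modifier is MaterialCondition.MMC:
        bonus = abs(actual - size.mmc)  # departure from MMC
    elif fcf.modifier is MaterialCondition.LMC:
        bonus = abs(actual - size.lmc)  # departure from LMC
    else:
        bonus = 0.0
    return fcf.tolerance + bonus

hole = SizeLimits(low=10.00, high=10.10, internal=True)
fcf = PositionFCF(tolerance=0.20, modifier=MaterialCondition.MMC, datums=("A", "B", "C"))
print(round(allowed_position_tolerance(fcf, hole, actual=10.06), 3))  # 0.26
```

A CMM or SPC consumer can evaluate this rule directly from the data objects. A graphical-only callout would first have to be reinterpreted by a person.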

The standards landscape that makes MBD interoperable

The practical success of MBD depends on standards that preserve meaning across tools and time. ASME Y14.41 defines digital product definition practices, including how to present PMI on 3D models; ASME Y14.5 governs GD&T; and ISO 16792 complements these for international MBD practices. For neutral exchange, STEP AP242 Edition 2 is the cornerstone for semantic PMI, enabling systems to interpret and validate feature and tolerance semantics rather than just graphics. For visualization, JT and 3D PDF serve human consumption, while QIF (Quality Information Framework) structures metrology data (measurement plans, results, and traceability to features) for automation and analytics. Long-term archiving is covered by LOTAR EN/NAS 9300, which specifies processes for durable, verifiable storage of models and associated PMI to safeguard design intent over decades. The interplay of these standards matters: AP242 transports design semantics, JT/3D PDF communicates to people, QIF automates quality, and LOTAR ensures longevity. A disciplined bundling and validation strategy around these formats ensures that what leaves engineering is faithfully consumed by manufacturing and quality, across suppliers and tools, without reverse engineering or reinterpretation.

- Design semantics: AP242 Edition 2 for model geometry and PMI semantics.

- Visualization: JT or 3D PDF as human-readable adjuncts.

- Metrology: QIF for plans, results, and traceability to features.

- Archiving: LOTAR EN/NAS 9300 for durable, auditable records.

Business drivers and risk reduction across the digital thread

The business case for MBD crystallizes around repeatability, speed, and accountability. By enforcing a single, semantic model, you reduce interpretation errors that typically stem from drawing re-transcription and ad hoc notes. Downstream systems can auto-derive CAM and CMM content, decreasing cycle time and labor variability while increasing first-pass yield. Change also becomes faster and safer, because propagation across the digital thread keeps all derived assets synchronized with the source model. With semantic features and PMI, traceability improves: each NC program, probing routine, or inspection result can reference the exact features and tolerance statements that drove it. That yields analytics granularity (capability by feature or tolerance class) and auditability for compliance regimes. Risk is further reduced by eliminating multiple sources of truth: operations, suppliers, and inspectors consume the same governed model and effectivity. Finally, by structuring PMI and exchanges, you pave the way for automation in quoting, process planning, and SPC-driven process control. The net effect is fewer nonconformances, faster engineering changes, and a measurable reduction in the total cost of quality.

- Reduced ambiguity: fewer interpretation errors, especially at supplier boundaries.

- Automation: automated CAM/CMM and documentation from the model.

- Traceability: feature-centric analytics and closed-loop feedback.

- Change velocity: synchronized updates to all downstream artifacts.

Readiness and scoping pilots for maximum learning

Before scaling MBD, assess your organizational MBE maturity and GD&T literacy. Semantic PMI is only as good as the clarity and correctness of the underlying datum schemes and tolerance strategies. Start by selecting pilot part families where tolerance, geometry, or manufacturability risks are high—thin walls, complex freeform surfaces, tight fits, compound datum structures—so the value of automation and unambiguous intent is obvious. Evaluate CAD authoring ability for semantic PMI, CAM and CMM toolchains for AP242/QIF consumption, and PLM/MES readiness for configuration and effectivity control. Address training gaps: investing in GD&T upskilling ensures engineers express intent semantically and consistently. Define a crisp “definition of done” for pilot releases, including coverage metrics for PMI and model quality checks. Lastly, document supplier enablement tiers so you can meet partners where they are while pulling them forward: some may consume graphical PMI today while preparing for semantic exchanges tomorrow. A well-scoped pilot creates reusable authoring rules, validation gates, and exchange patterns that become your enterprise playbook.

- Choose parts with high tolerance and manufacturability risk to maximize signal.

- Measure readiness: authoring, validation, PLM/MES integration, and supplier consumption.

- Codify success: coverage metrics, rules, and checklists that scale beyond the pilot.
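The pilot-selection criteria above can be turned into a transparent ranking. The sketch below uses a hypothetical weighted risk score. The risk factors mirror the ones named in the text (thin walls, freeform surfaces, tight fits, compound datum structures). The 0 to 5 rating scale, the weights, and the part numbers are illustrative assumptions that you would calibrate against your own portfolio.

```python
from dataclasses import dataclass

# Illustrative weights (assumptions, not a published method); tune per organization.
WEIGHTS = {
    "thin_walls": 1.0,
    "freeform_surfaces": 1.5,
    "tight_fits": 2.0,
    "compound_datums": 1.5,
}

@dataclass
class Candidate:
    part_number: str
    ratings: dict[str, int]  # each factor rated 0 (none) to 5 (severe)
    annual_volume: int

def risk_score(c: Candidate) -> float:
    """Weighted sum of risk ratings; factors not rated count as 0."""
    return sum(w * c.ratings.get(k, 0) for k, w in WEIGHTS.items())

def rank_pilots(cands: list[Candidate], top_n: int = 3) -> list[str]:
    # Highest risk first; ties broken by volume so lessons repeat more often.
    ordered = sorted(cands, key=lambda c: (risk_score(c), c.annual_volume), reverse=True)
    return [c.part_number for c in ordered[:top_n]]

# Hypothetical candidates.
cands = [
    Candidate("BRKT-100", {"thin_walls": 4, "tight_fits": 2}, 500),
    Candidate("HSG-220", {"freeform_surfaces": 5, "compound_datums": 4}, 200),
    Candidate("PIN-010", {"tight_fits": 1}, 10000),
]
print(rank_pilots(cands, top_n=2))  # ['HSG-220', 'BRKT-100']
```

A plain weighted sum keeps the rationale auditable. Anyone reviewing the pilot choice can see which factor drove each score.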

The MBD Authoring and Validation Stack

CAD authoring capabilities that unlock automation

Effective MBD starts in CAD with high-fidelity, feature-linked semantics. Author semantic PMI with explicit datums, datum targets, compound features, and feature control frames that encode material conditions and modifiers. Employ rules-based GD&T libraries tied to design intent, process capabilities, and inspection strategies; for example, automatically propose positional tolerances for critical hole patterns based on fit class and selected machining process. Link PMI to features—not transient faces—so models remain resilient through parameter changes, topology optimization, and design updates. Apply built-in model quality checks to ensure naming conventions, units, coordinate systems, and layer/schema hygiene are correct; a clean model is easier to validate and exchange. Integrate tolerance analysis—stack-ups, worst-case and statistical approaches—to calibrate tolerances against both manufacturability and serviceability; semantic PMI then becomes a living link between the analysis and downstream execution. This authoring discipline turns the model from a visualization into a programmable specification, enabling reliable handoffs to CAM, CMM, and work instruction generation without manual re-annotation.

- Semantic PMI: datums, FCFs, material conditions, surface texture as data objects.

- Feature linkage: resilient PMI binding to features and datum reference frames.

- Model hygiene: standardized names, units, coordinate frames, and layers.

- Tolerance analysis: drive tolerances from quantified functional requirements.
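Tolerance analysis typically starts with a one-dimensional stack-up. The minimal sketch below compares two results for a linear chain of dimensions. The worst-case result is the arithmetic sum of tolerances. The statistical root-sum-square (RSS) result is the square root of the sum of squared tolerances. The sketch assumes symmetric ± tolerances, unit sensitivities (each contributor either adds to or subtracts from the gap), and, for RSS, independent, centered, roughly normal contributors. The dimensions are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Contributor:
    name: str
    nominal: float   # mm
    tol: float       # symmetric +/- tolerance, mm
    direction: int   # +1 if it increases the gap, -1 if it decreases it

def stack_up(chain: list[Contributor]) -> dict[str, float]:
    nominal = sum(c.direction * c.nominal for c in chain)
    worst_case = sum(c.tol for c in chain)             # every part at its limit
    rss = math.sqrt(sum(c.tol ** 2 for c in chain))    # statistical combination
    return {
        "nominal": nominal,
        "worst_case_min": nominal - worst_case,
        "worst_case_max": nominal + worst_case,
        "rss_min": nominal - rss,
        "rss_max": nominal + rss,
    }

# Hypothetical axial gap: bore depth minus bearing width minus spacer length.
chain = [
    Contributor("housing bore depth", 50.0, 0.10, +1),
    Contributor("bearing width", 20.0, 0.05, -1),
    Contributor("spacer length", 29.5, 0.05, -1),
]
result = stack_up(chain)
for key, value in result.items():
    print(f"{key}: {value:.3f}")
```

Here the nominal gap is 0.5 mm. The worst-case band is 0.3 to 0.7 mm, and the RSS band is roughly 0.378 to 0.622 mm. Comparing the two bands against the functional requirement shows where the semantic PMI tolerances have room to open up.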

Validation and continuous integration for models

No model should enter release without automated validation. Deploy semantic PMI validators to assess coverage (are all functionally relevant features toleranced?), correctness (are reference frames and modifiers valid?), and schema conformance (does the model satisfy AP242 rules?). During transition periods, use drawing-to-model parity checks to verify that legacy drawings and the model express the same GD&T and notes. In change reviews, run PMI diffing and model diffing to isolate changes to features, datums, tolerances, and notes; gate these in PLM workflows so only validated models are promoted. Execute read/write roundtrip tests for STEP AP242 and QIF across your toolchain, comparing to golden models to prevent schema regressions and vendor-specific drift. Treat model validation like software CI: nightly checks, dashboards, and fail-fast alerts. This rigor prevents silent degradation of semantic quality, catches configuration drift early, and builds trust that models are computable beyond the authoring tool. The payoff is a frictionless pathway to automation where downstream systems can consume without defensive rework.

- Automate: enforce PMI coverage and schema checks on every release.

- Compare: diff models and PMI to focus change reviews on what matters.

- Roundtrip: verify AP242/QIF read-write fidelity against golden references.

- Govern: integrate validation gates directly in PLM change workflows.
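Once PMI is semantic, two of the checks described above are simple to express: PMI coverage and PMI diffing. The sketch assumes PMI has already been extracted, by whatever AP242 reader your toolchain provides, into plain dictionaries keyed by persistent feature ID. That extraction step and the record shape are assumptions. The tolerance strings below stand in for structured feature control frame records.

```python
def pmi_coverage(functional: set[str], pmi: dict[str, list[str]]) -> tuple[float, set[str]]:
    """Fraction of functional features carrying at least one tolerance, plus the gaps."""
    if not functional:
        return 1.0, set()
    toleranced = {fid for fid, tols in pmi.items() if tols}
    missing = functional - toleranced
    return 1 - len(missing) / len(functional), missing

def pmi_diff(old: dict[str, list[str]], new: dict[str, list[str]]) -> dict[str, set[str]]:
    """Classify feature-level PMI changes between two revisions."""
    old_ids, new_ids = set(old), set(new)
    changed = {fid for fid in old_ids & new_ids if sorted(old[fid]) != sorted(new[fid])}
    return {"added": new_ids - old_ids, "removed": old_ids - new_ids, "changed": changed}

def release_gate(coverage: float, threshold: float = 1.0) -> bool:
    # Fail fast: a PLM workflow would block promotion below the threshold.
    return coverage >= threshold

# Hypothetical extracted PMI for two revisions.
rev_a = {"H1": ["POS 0.2 MMC A B C"], "S1": ["PROFILE 0.1 A B"], "B1": []}
rev_b = {"H1": ["POS 0.15 MMC A B C"], "S1": ["PROFILE 0.1 A B"], "P1": ["FLAT 0.05"]}

coverage, gaps = pmi_coverage({"H1", "S1", "B1"}, rev_a)
print(f"coverage={coverage:.2f}, untoleranced={sorted(gaps)}")
print(pmi_diff(rev_a, rev_b))
print("release allowed:", release_gate(coverage))
```

Keying everything on feature IDs rather than faces means the diff reports "H1 position tolerance changed" rather than a geometric delta. That keeps change reviews focused on what matters.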

Packaging and exchange patterns that serve humans and machines

Package data so people and software each get what they need, without creating multiple sources of truth. A common pattern is to deliver STEP AP242 + JT when visualization is needed, or STEP AP242 + QIF for design plus metrology automation. Reserve 3D PDF strictly for human-readable adjuncts, not as a machine source. Maintain configuration discipline: variant options, effectivity, and all derived outputs must trace unequivocally to a single CAD revision. Embed cryptographic signatures, watermarks, and provenance metadata so recipients can verify authenticity and integrity, which matters most in supplier networks and safety-critical programs. Normalize material and process vocabularies to avoid free-text drift across the digital supply chain. Use manifest files or container formats that bind together the model, visualization, QIF, and metadata, making package validation straightforward and auditable. This approach serves both the operators who need clear visuals and the machines that need consistent semantics, while preserving trust and preventing accidental divergence.

- Human + machine bundles: AP242 + JT or AP242 + QIF as standard kits.

- Single source: strict revision and effectivity control for all derivatives.

- Trust: signatures, watermarks, and provenance to secure IP and authenticity.

- Vocabulary control: standardized materials and processes across systems.
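A manifest that binds every file to one revision and records content hashes makes package validation mechanical. The minimal sketch below uses Python's `hashlib`. The file names, manifest fields, and JSON layout are hypothetical. A real deployment would also sign the manifest with an asymmetric key, which is not shown here. SHA-256 hashes on their own detect accidental or malicious changes, but they do not prove who produced the package.

```python
import hashlib
import json
import tempfile
from pathlib import Path

def sha256_file(path: Path) -> str:
    """Stream a file through SHA-256 so large models need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()

def build_manifest(files: dict[str, Path], part: str, revision: str, effectivity: str) -> dict:
    """Bind every file in the package to one part revision and effectivity."""
    return {
        "part": part,
        "revision": revision,
        "effectivity": effectivity,
        "files": {role: {"name": p.name, "sha256": sha256_file(p)}
                  for role, p in files.items()},
    }

def verify_package(manifest: dict, folder: Path) -> list[str]:
    """Return a list of problems; an empty list means every file matches its hash."""
    problems = []
    for role, entry in manifest["files"].items():
        p = folder / entry["name"]
        if not p.exists():
            problems.append(f"{role}: missing {entry['name']}")
        elif sha256_file(p) != entry["sha256"]:
            problems.append(f"{role}: hash mismatch for {entry['name']}")
    return problems

# Demo with throwaway placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    (folder / "bracket_revC.stp").write_text("ISO-10303-21;\nHEADER;\n")
    (folder / "bracket_revC.qif").write_text("<QIFDocument/>\n")
    manifest = build_manifest(
        {"ap242": folder / "bracket_revC.stp", "qif": folder / "bracket_revC.qif"},
        part="BRKT-100", revision="C", effectivity="SN 1001-1999",
    )
    (folder / "manifest.json").write_text(json.dumps(manifest, indent=2))
    before = verify_package(manifest, folder)
    (folder / "bracket_revC.qif").write_text("<QIFDocument>edited</QIFDocument>\n")
    after = verify_package(manifest, folder)

print(before)  # []
print(after)   # ['qif: hash mismatch for bracket_revC.qif']
```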

Long-term archiving and security by design

Durability and security underpin the credibility of an MBD program. For archiving, use LOTAR-compliant storage that preserves geometry, semantic PMI, and assembly structure with verifiable references over decades. Capture all necessary configuration context (effectivities, variants, and supplier-specific derivations) so a future retrieval recreates the intended state unambiguously. Implement redaction strategies for supplier views that hide nonessential IP while preserving the feature semantics required for manufacturing or inspection. Enforce access control and entitlement-driven visualization so users see only what they are authorized to consume, whether inside PLM portals or via lightweight viewers. Maintain traceable download and usage logs to support regulatory and customer audits, coupling access events to model revisions. Finally, harden distribution itself with signed packages, zero-trust boundaries, and tamper-evident delivery for external collaborations. When security and archiving are designed into your data flows rather than added as afterthoughts, you protect IP, sustain compliance, and maintain the continuity of the digital thread across product lifecycles.

- Archive fidelity: LOTAR storage of models, PMI, and structure with validation.

- Selective disclosure: redacted supplier views that preserve necessary semantics.

- Entitlement: access and visualization governed by role and need-to-know.

- Auditability: comprehensive logs tying usage to revision and effectivity.
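One common way to make download and usage logs tamper-evident is hash chaining. Each entry stores the hash of the previous entry, so editing any historical record breaks the chain from that point on. The minimal sketch below uses hypothetical event fields. Real entries would also carry timestamps, and a production system would sign or externally anchor the latest hash.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def append(self, user: str, action: str, part: str, revision: str, effectivity: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"user": user, "action": action, "part": part,
                "revision": revision, "effectivity": effectivity, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash and check each link to its predecessor."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append("supplier-42", "download", "BRKT-100", "C", "SN 1001-1999")
log.append("inspector-7", "view", "BRKT-100", "C", "SN 1001-1999")
intact = log.verify()               # True
log.entries[0]["revision"] = "B"    # tamper with history
tampered = log.verify()             # False
```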

Paperless Execution — Consuming MBD Across Manufacturing

CAM and process planning driven by semantic PMI

When CAM consumes semantic PMI, feature recognition becomes deterministic rather than heuristic. Holes, pockets, bosses, and freeform surfaces are identified along with their datum relationships and tolerance classes, enabling the system to auto-select strategies, cutters, and feeds. Tolerance-driven operations shift finishing passes, adapt stepovers, and introduce in-process verification where capability margins are tight. With material and surface finish semantics, CAM can account for coating allowances, microfinishes, and burr-sensitive edges. In-process probing cycles align to machine-readable GD&T: positional checks refine work offsets, profile checks trigger adaptive rework or compensation, and pattern features inherit consistent probing logic. Setup sheets, tool lists, and NC programs are generated with traceability back to model revision and effectivity, ensuring shop floor execution reflects current intent. The outcome is a reduction in manual CAM interpretation time, fewer missed tolerances, and smoother handoffs to operators who trust that the instructions align with the latest, authoritative model.

- Feature recognition: semantic features + datums inform strategy selection.

- Tolerance-aware toolpaths: finishing intensity scales with profile/position limits.

- In-process verification: probing sequences keyed to critical GD&T.

- Traceability: NC and documentation tied to model revision/effectivity.
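The idea that finishing intensity should scale with tolerance can be sketched as a simple rule. The rule compares the tolerance band carried in semantic PMI with the machine's demonstrated process spread. The function below is hypothetical, and its thresholds are illustrative, not taken from any CAM product. It computes a Cp-style margin, tolerance / (6σ), and escalates to extra finishing passes and in-process probing as that margin shrinks.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Plan:
    finishing_passes: int
    probe_in_process: bool

def plan_for_feature(tolerance_band: float, process_sigma: float) -> Plan:
    """Choose finishing intensity from a capability margin (tolerance / 6 sigma)."""
    if process_sigma <= 0:
        raise ValueError("process_sigma must be positive")
    margin = tolerance_band / (6 * process_sigma)
    if margin >= 2.0:
        return Plan(finishing_passes=1, probe_in_process=False)
    if margin >= 1.33:
        return Plan(finishing_passes=2, probe_in_process=False)
    # Tight margin: add a spring pass and verify before unclamping.
    return Plan(finishing_passes=3, probe_in_process=True)

# Hypothetical features: (tolerance band mm, machine sigma mm).
for band, sigma in [(0.10, 0.005), (0.02, 0.002), (0.01, 0.002)]:
    print(band, sigma, plan_for_feature(band, sigma))
```

The rule only works because the tolerance band is a number the CAM system can read. With graphical-only PMI, a programmer would have to supply that number by hand.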

Inspection and quality automation with QIF

Quality thrives when inspection plans are generated from the same semantics that drove manufacturing. Using QIF, CMM programs can be derived automatically from PMI: datum setups, probing paths, sampling density, and compensation logic are computed from the feature control frames and modifiers. Non-contact scanning systems likewise align measured point clouds to datum reference frames and evaluate GD&T directly against semantic tolerance definitions. Ballooning and first article inspection packages can be created without 2D drawings; features are numbered and referenced back to the model so results remain unambiguous. Close the loop by pushing measured results to PLM/MES, tying them to features, tolerances, lots, and process parameters; SPC analytics then reveal where capability falls short of assigned tolerances. Use the insights to adjust process plans or tolerance allocations—with changes governed through PLM. Correlate NC toolpaths to inspection results to spot systemic biases and machine-specific drift. This closed loop eliminates the latent mismatch between what was designed, what was machined, and what was measured, enabling fact-based improvements.

- Auto-generation: CMM and scan evaluation driven by semantic PMI.

- Paperless FAIR: ballooning and traceability without 2D drawings.

- SPC loop: results feed PLM/MES for capability analytics.

- NC/inspection correlation: detect bias and drift across machines and shifts.
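Once measured results are keyed to features, per-feature capability analytics take only a few lines of code. The sketch computes Cpk = min(USL − μ, μ − LSL) / (3σ) for each characteristic, using the sample standard deviation. It assumes roughly normal, in-control data. The flat result records are a hypothetical simplification, not the QIF results schema, and the measurements are made up for illustration.

```python
import statistics
from collections import defaultdict

def cpk(values: list[float], lsl: float, usl: float) -> float:
    """Cpk = min(USL - mean, mean - LSL) / (3 * sigma), sample sigma."""
    mu = statistics.mean(values)
    sigma = statistics.stdev(values)  # needs at least two values; zero spread would divide by zero
    return min(usl - mu, mu - lsl) / (3 * sigma)

def capability_by_feature(results: list[dict],
                          limits: dict[str, tuple[float, float]]) -> dict[str, float]:
    """results: [{'feature': 'H1', 'value': 10.04}, ...]; limits: feature -> (LSL, USL)."""
    by_feature: dict[str, list[float]] = defaultdict(list)
    for r in results:
        by_feature[r["feature"]].append(r["value"])
    return {f: cpk(vals, *limits[f]) for f, vals in by_feature.items() if len(vals) >= 2}

def flag_low_capability(caps: dict[str, float], threshold: float = 1.33) -> list[str]:
    return sorted(f for f, c in caps.items() if c < threshold)

# Hypothetical measurements for a hole diameter (H1) and a slot width (S1).
results = (
    [{"feature": "H1", "value": v} for v in (10.04, 10.05, 10.06, 10.05, 10.05)]
    + [{"feature": "S1", "value": v} for v in (5.03, 5.04, 5.02, 5.04, 5.03)]
)
limits = {"H1": (10.00, 10.10), "S1": (4.95, 5.05)}
caps = capability_by_feature(results, limits)
print({f: round(c, 2) for f, c in caps.items()})
print(flag_low_capability(caps))  # ['S1']
```

S1 is flagged because its mean sits close to the upper limit, even though its spread is small. That is the kind of finding that would feed a governed process-plan or tolerance change.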

Assembly and work instructions from model to MBOM

Assembly execution benefits when the manufacturing bill of materials (MBOM) and work instructions flow from the same semantic model. PMI-derived sequences identify precedence (press-fit then torque), clearances inform fixture design, and fastener semantics contribute to automatically populated torque specs and tool selections. Augmented reality (AR) overlays can anchor on model features and datums to guide operators, especially in dense assemblies or constrained spaces, while capturing operator feedback tied to the same features. In-process verification—visual checks, torque confirmations, gap measurements—record against semantic features, not free-text steps, building a searchable quality history. Deviations and nonconformance reports (NCRs) can be created directly on the affected features, enabling engineering to respond with updated tolerances, clearances, or process instructions that propagate through PLM to the shop in minutes. When MBOM derivation, fixture design, and instruction generation all respect the model’s semantics, you unlock consistent, paperless assembly where human execution is supported by precise, context-aware guidance.

- MBOM transformation: precedence, clearances, and torque specs from PMI.

- AR-guided assembly: feature-anchored instructions and verification.

- Closed-loop NCR: deviations linked to semantic features for targeted fixes.

- Rapid change: updated instructions propagate with effectivity control.
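Deriving an assembly sequence from precedence constraints such as "press-fit then torque" is a topological sort. The sketch uses the standard library's `graphlib` (Python 3.9+). The operations and constraints are hypothetical stand-ins for relations that would be derived from PMI and fastener semantics. Contradictory constraints surface as an error instead of producing an invalid work instruction.

```python
from graphlib import CycleError, TopologicalSorter

# Each operation maps to the operations that must finish before it (hypothetical).
precedence = {
    "press_fit_bearing": {"clean_bore"},
    "install_shaft": {"press_fit_bearing"},
    "seat_gasket": {"clean_bore"},
    "torque_cover_bolts": {"install_shaft", "seat_gasket"},
    "verify_gap": {"torque_cover_bolts"},
}

def assembly_sequence(constraints: dict[str, set[str]]) -> list[str]:
    """Return one valid operation order, or raise if the constraints form a cycle."""
    try:
        return list(TopologicalSorter(constraints).static_order())
    except CycleError as exc:
        # exc.args[1] holds the nodes involved in the detected cycle.
        raise ValueError(f"precedence cycle: {exc.args[1]}") from exc

sequence = assembly_sequence(precedence)
print(sequence)
```

Several valid orders usually exist. For example, the gasket can be seated before or after the bearing. A planner can therefore apply secondary rules, such as tool changes or fixture moves, without ever violating a precedence constraint.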

Supplier collaboration and change control in a semantic world

Suppliers span a readiness spectrum, from consuming graphical PMI to fully semantic automation. Define readiness tiers: graphical-only (JT/3D PDF), hybrid (AP242 with partial semantics), and fully semantic (AP242 + QIF). Tailor enablement by tier: provide conformance tests, sample packages, and validation tools so suppliers can prove they can parse and apply PMI correctly. For engineering changes, package ECOs so affected features and PMI diffs are explicit; ensure CAM, CMM, and work instructions at suppliers auto-regenerate or at least flag rework needs. Govern effectivity in PLM/MES so suppliers always know which configuration applies to a given lot or serial range. Use digital signatures and watermarks to protect IP and verify authenticity of what’s shared externally. By making semantics the common language and validation the contract, you reduce negotiation friction, accelerate onboarding, and increase the probability that what is produced remotely matches the design intent without multiple clarification cycles.

- Tiered enablement: meet suppliers where they are, guide them forward.

- ECO propagation: automatic updates to CAM/CMM/work instructions.

- Effectivity clarity: lot/serial applicability managed in PLM/MES.

- Trust and IP: signed, watermarked packages with clear provenance.
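Effectivity clarity ultimately means answering one question deterministically: which revision applies to this serial number? The sketch below performs a serial-range effectivity lookup and refuses overlapping ranges at load time. The record shape is a hypothetical simplification, since PLM and MES systems each model effectivity in their own way.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass

@dataclass(frozen=True)
class Effectivity:
    first_serial: int
    last_serial: int  # inclusive
    revision: str

class EffectivityTable:
    def __init__(self, ranges: list[Effectivity]):
        self.ranges = sorted(ranges, key=lambda r: r.first_serial)
        # Overlaps would make the applicable configuration ambiguous, so reject them.
        for a, b in zip(self.ranges, self.ranges[1:]):
            if b.first_serial <= a.last_serial:
                raise ValueError(f"overlapping effectivity: rev {a.revision} and rev {b.revision}")
        self._starts = [r.first_serial for r in self.ranges]

    def revision_for(self, serial: int) -> str | None:
        """Binary-search the range that starts at or before the serial."""
        i = bisect_right(self._starts, serial) - 1
        if i >= 0 and serial <= self.ranges[i].last_serial:
            return self.ranges[i].revision
        return None  # no configuration released for this serial

table = EffectivityTable([Effectivity(1001, 1499, "B"), Effectivity(1500, 9999, "C")])
print(table.revision_for(1200), table.revision_for(1500), table.revision_for(500))  # B C None
```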

KPIs to instrument during pilots

To validate outcomes and refine workflows, define metrics that reveal both speed and quality. Track time from model release to NC/CMM program readiness, and measure first-pass yield on initial production runs. Monitor NCR rates and supplier turnbacks to quantify interpretation risk reduction. Measure engineering change cycle time from ECO creation to completed downstream updates, including regeneration of CAM/CMM and work instructions; the expectation is meaningful reduction with semantic automation. For inspection, track throughput and on-time completion of FAIRs without drawings, and analyze cost of quality as semantic PMI matures. Augment with feature-level metrics: capability indices by tolerance class, rework frequency by datum structure, and inspection hit rate on critical characteristics. These KPIs, collected consistently, give evidence for scaling decisions, surface training needs, and justify continued investment in authoring rules, validation infrastructure, and supplier enablement.

- Speed: time to NC/CMM, engineering change cycle time.

- Quality: first-pass yield, NCR rate, supplier turnbacks.

- Throughput: inspection cycle time, FAIR completion without drawings.

- Cost: measurable reductions in cost of quality as automation increases.
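Several of these KPIs can be computed directly from exported records. The minimal sketch below covers first-pass yield and median engineering change cycle time. The field names (`passed_first_time`, `created`, `downstream_done`) are assumptions about what a PLM/MES export might provide, and the sample data is made up.

```python
from datetime import date
from statistics import median

def first_pass_yield(units: list[dict]) -> float:
    """Share of units that passed inspection with no rework."""
    if not units:
        return 0.0
    return sum(1 for u in units if u["passed_first_time"]) / len(units)

def median_eco_cycle_days(ecos: list[dict]) -> float:
    """Median days from ECO creation to completion of all downstream updates."""
    durations = [(e["downstream_done"] - e["created"]).days
                 for e in ecos if e.get("downstream_done")]
    return median(durations) if durations else float("nan")

# Hypothetical pilot data.
units = [{"passed_first_time": True}] * 47 + [{"passed_first_time": False}] * 3
ecos = [
    {"created": date(2025, 3, 3), "downstream_done": date(2025, 3, 10)},
    {"created": date(2025, 3, 5), "downstream_done": date(2025, 3, 8)},
    {"created": date(2025, 3, 9), "downstream_done": None},  # still open, excluded
]
fpy = first_pass_yield(units)             # 0.94
eco_days = median_eco_cycle_days(ecos)    # 5.0 (median of 7 and 3 days)
print(f"FPY={fpy:.2%}, median ECO cycle={eco_days} days")
```

Excluding open ECOs keeps the median honest about completed work. Tracking the count of open ECOs separately prevents slow changes from disappearing from view.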

Conclusion

Anchoring MBD as an enterprise capability

Modern MBD is not a file format migration; it is an enterprise capability anchored in semantic PMI, validation discipline, and integrated execution. Treat the model as the computable contract between engineering and operations, and build a validation and governance backbone—model quality checks, PMI coverage metrics, CI gates—that prevents ambiguity from escaping into production. Standardize bundles around STEP AP242 + QIF for machine readability, and use JT or 3D PDF for human consumption only. Start with focused pilots on high-value parts where tolerance and manufacturability risk is high; instrument KPIs to measure speed, quality, and cost improvements; iterate on authoring rules and downstream apps based on evidence. Invest in GD&T upskilling so engineers can express intent semantically and consistently, and stage supplier enablement with tiered conformance tests and signed, provenance-rich packages. Finally, embed MBD in PLM/MES with strict effectivity control, configuration discipline, and secure provenance to sustain a trustworthy digital thread. When you do, the payoff compounds: fewer interpretation errors, automated CAM/CMM, faster change propagation, traceable quality, and a scalable path to truly paperless manufacturing.

- Semantic PMI is the cornerstone of automation and auditability.

- Validation-first prevents scaling ambiguous models.

- Standard bundles enable reliable human and machine consumption.

- Pilots plus KPIs drive pragmatic, evidence-based scaling.

- Upskilling and supplier enablement unlock enterprise-wide benefits.
