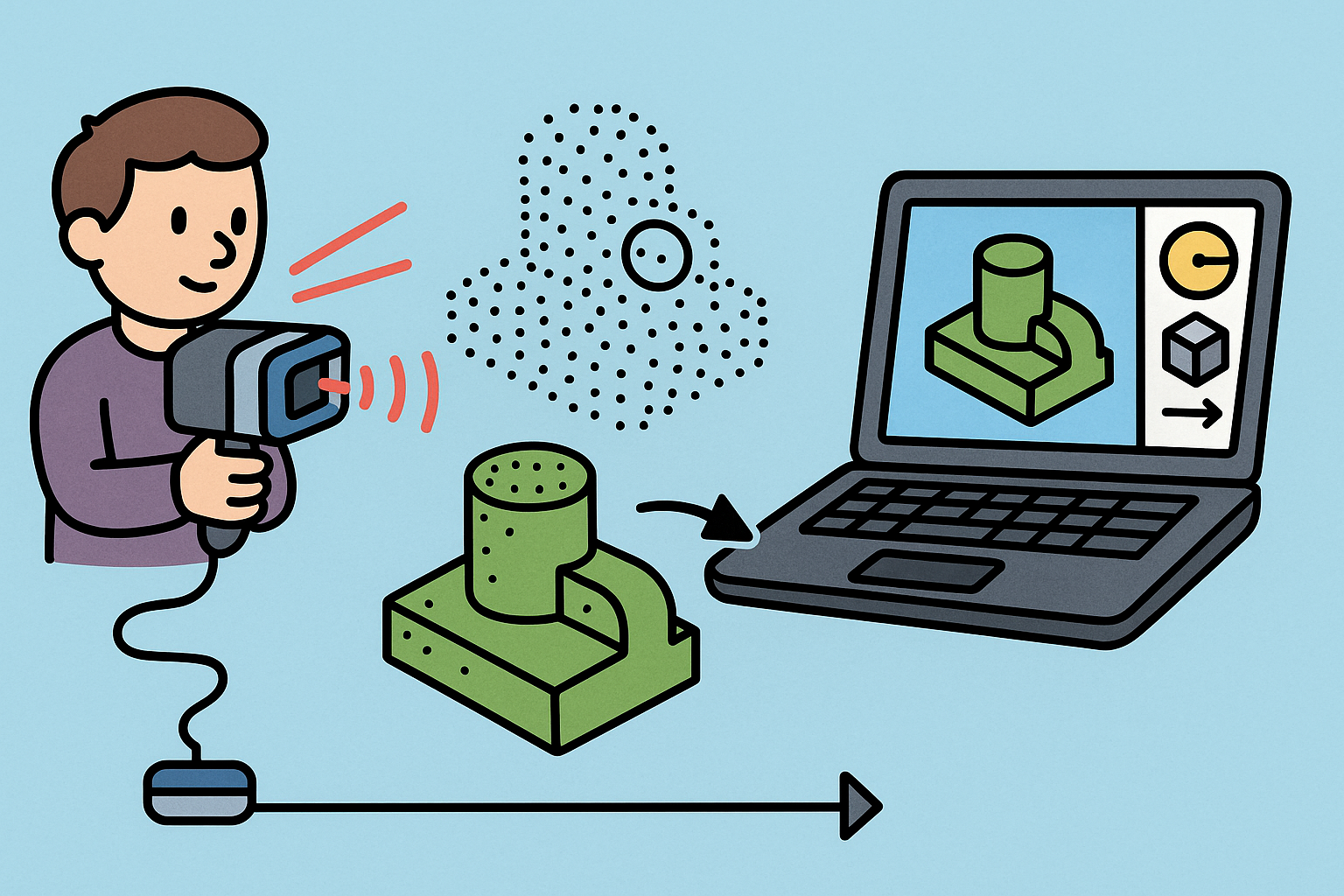

Intent-Aware Scan-to-BRep: Integrating LiDAR Point Clouds into Solid Modeling Pipelines

December 31, 2025 12 min read

Why bring LiDAR point clouds into solid modeling pipelines

Value propositions

The strategic reason to bring **LiDAR point clouds** into solid modeling pipelines is simple: compress time-to-decision without compromising geometric truth. When teams inherit complex, real-world conditions, the traditional survey-to-CAD path burns days or weeks of manual transcription and risk-laden interpretation. High-fidelity scanning eliminates that bottleneck by letting the environment speak directly through data, while the modeling stack translates it into **editable, constraint-aware B-Rep** geometry. The payoff compounds across disciplines. For retrofit work and **as-built validation**, design teams can collapse lead times by auto-seeding models with geometry inferred from points, setting tolerances that reflect measured uncertainty rather than guesswork. For brownfield design, preserving warps and non-ideal geometry exposes issues early (sagging beams, out-of-round pipes, non-orthogonal corners), so the proposed solution respects reality, not an idealized drawing. Critically, linking models to scan lineage makes every new acquisition a delta rather than a do-over, enabling **continuous digital twin** updates without redrafting. The result is a more resilient, traceable design process that treats field data as first-class input, improves coordination, and reduces rework during construction or manufacturing. Teams also benefit downstream in visualization and review: photoreal renders and MR/AR overlays gain credibility when they are grounded in accurate, spatially coherent data. Ultimately, you move from “document then design” to “sense then design,” where the model captures intent while the scan maintains context and evidence, and both evolve in lockstep as conditions change and decisions accrue.

Reality vs. intent gap

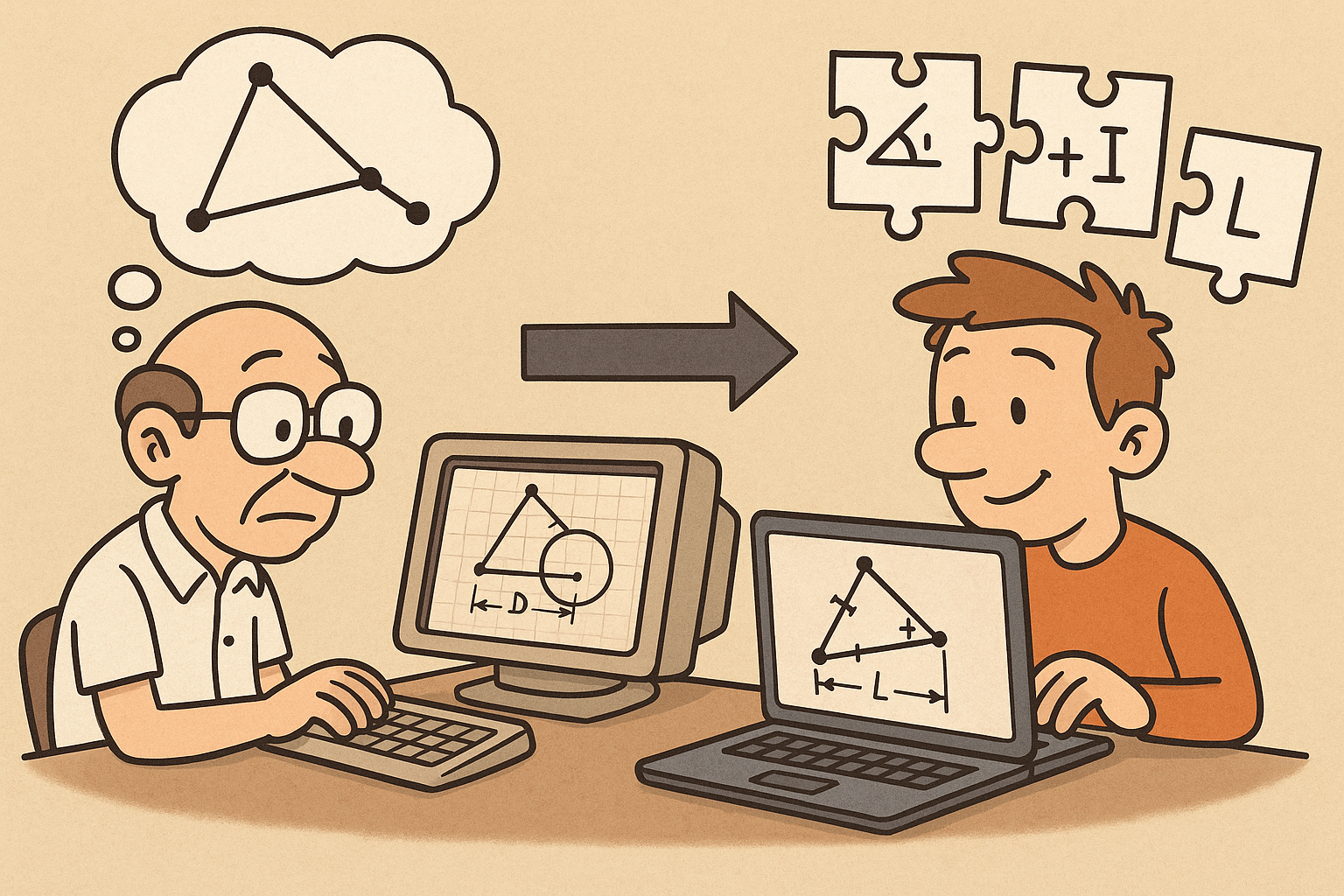
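The G0/G1 continuity conditions that B-Reps assume, mentioned in this section, can be checked numerically at the junction of two curve segments. The sketch below is a minimal illustration. It assumes each segment is summarized by its endpoint position and a non-zero tangent vector. The function name and the default tolerances (0.01 model units, 0.5°) are hypothetical and are not values taken from any modeling kernel. A G2 check would also compare curvature, and that comparison is omitted here.

```python
import math

def _norm(v):
    return math.sqrt(sum(c * c for c in v))

def continuity_class(end_a, tan_a, start_b, tan_b, lin_tol=0.01, ang_tol_deg=0.5):
    """Classify the junction between the end of curve A and the start of curve B.

    "gap": endpoints farther apart than lin_tol (not even G0).
    "G0":  positions coincide, but tangent directions differ by more than ang_tol_deg.
    "G1":  positions coincide and tangent directions agree (magnitudes may differ).
    Tolerances are illustrative assumptions, not kernel defaults.
    """
    gap = _norm([a - b for a, b in zip(end_a, start_b)])
    if gap > lin_tol:
        return "gap"
    cos_angle = sum(a * b for a, b in zip(tan_a, tan_b)) / (_norm(tan_a) * _norm(tan_b))
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return "G1" if angle <= ang_tol_deg else "G0"

# Same direction but different tangent magnitude: still G1 (geometric, not parametric, continuity).
print(continuity_class((1, 0, 0), (1, 0, 0), (1, 0, 0), (2, 0, 0)))    # G1
print(continuity_class((1, 0, 0), (1, 0, 0), (1, 0, 0), (0, 1, 0)))    # G0 (90° kink)
print(continuity_class((1, 0, 0), (1, 0, 0), (1.5, 0, 0), (1, 0, 0)))  # gap
```

In practice, the tolerances would come from the kernel and from the measured uncertainty of the fits rather than from fixed defaults.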

Reality delivers raw, unevenly sampled points; engineering delivers **watertight B-Rep** with feature history and tolerances. Bridging this gap requires acknowledging that point clouds are inherently noisy, non-watertight, and non-parametric, often with **uneven density** and significant occlusions caused by line-of-sight limitations. They capture photons, not intent. In contrast, solid models encode manufactured or constructed artifacts with boundary definitions, consistent topology, and a hierarchy of features built on datums and constraints. These facts create a mismatch: points know nothing about perpendicularity, draft angles, or reliefs, whereas B-Reps assume exact surfaces, robust **Boolean operations**, and continuity conditions (G0/G1/G2, i.e., positional, tangent, and curvature continuity). The challenge is not to force reality into an exact ideal, but to extract what matters. That means estimating uncertainty at every step, choosing reconstruction strategies that respect design semantics, and preserving anomalies where they carry downstream risk. Closing the gap involves layered understanding: from geometry (normals, curvature) to semantics (pipes, ducts, doors) to intent (this flange is symmetric; this column matches an AISC section). The pipeline must cope gracefully with over- and under-constrained configurations, imperfect fits, and coordinate-system quirks. A successful approach makes “as-built vs. as-designed” differences visible and quantifiable so teams can consciously accept, correct, or constrain them, with the **tolerance-aware** decisions recorded as part of the model’s evolving truth.

Primary use cases

The combined **scan-to-solid** path spans multiple domains, each with nuanced success criteria. In AEC, **Scan-to-BIM** accelerates modeling by converting structural and architectural elements into parametric objects, and **façade rationalization** benefits from analytic surface extraction that exposes bowing and panel misalignments. Clash detection moves beyond theoretical geometry by testing against measured envelopes, catching conflicts at the tolerances that matter in the field. In industrial plants, automated detection of MEP runs, structural steel, equipment envelopes, and access/egress zones streamlines brownfield expansions: cylinder fitting plus topology graphs transform pipe points into an editable network with specifications and offsets. In product and design engineering, **reverse engineering** demands recoverable feature intent; **QC deviation maps** quantify geometric drift against nominal CAD, while fixture design relies on trustworthy datums and symmetry inference. Field robotics and MR add a new twist: **on-site markup** against live scans creates shared situational awareness, and design decision support overlays candidate geometry directly in the environment so errors are caught where they originate. Across all cases, associativity to scans underpins traceability: if a correction is made, the data trail shows why, when, and with what measurement confidence, enabling disciplined risk management and shared accountability.

Output targets define the workflow
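The per-subsystem tolerance classes described in this section are essentially a lookup table. Residual checks consult that table instead of applying a single global threshold. The sketch below is minimal and assumes a hypothetical `TOLERANCE_CLASSES_MM` table. Its values are illustrative only and are not drawn from any standard or project.

```python
from dataclasses import dataclass

# Hypothetical tolerance classes (max RMS residual in mm); illustrative values only.
TOLERANCE_CLASSES_MM = {
    "mep_pipe": 10.0,
    "structural_steel": 5.0,
    "precision_flange": 0.5,
}

@dataclass
class FitResult:
    element_id: str
    subsystem: str
    rms_residual_mm: float

def triage_fits(fits, classes=TOLERANCE_CLASSES_MM):
    """Split fits into (accepted, flagged) ids using each subsystem's own tolerance.

    Fits from subsystems without a tolerance class are flagged, never silently accepted.
    """
    accepted, flagged = [], []
    for fit in fits:
        tol = classes.get(fit.subsystem)
        if tol is not None and fit.rms_residual_mm <= tol:
            accepted.append(fit.element_id)
        else:
            flagged.append(fit.element_id)
    return accepted, flagged

fits = [
    FitResult("P-101", "mep_pipe", 6.2),        # acceptable for a pipe run
    FitResult("F-7", "precision_flange", 0.9),  # too loose for a machined flange
    FitResult("X-1", "unclassified", 0.1),      # no class defined: route to a human
]
print(triage_fits(fits))  # (['P-101'], ['F-7', 'X-1'])
```

Flagging unclassified elements by default keeps the output contract explicit. A missing tolerance class becomes a review item rather than an implicit pass.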

Choosing the right output is half the battle. A mesh-only deliverable supports visualization, clash context, and immersive reviews; here, watertightness, decimation quality, and feature preservation are the focus, not parametric editing. A hybrid **mesh + B-Rep** path suits partial design, where some areas remain irregular while analytic regions (e.g., planar walls, cylinders, flat plates) become parametric and editable. Finally, a full **parametric B-Rep with feature tree** supports true design iteration, manufacturing, and construction documentation. The chosen target dictates everything upstream: sampling density, fitting strategies, and uncertainty thresholds. Equally important is **associativity to scans**: embedding references so future re-scans can update or revalidate geometry without rework. Consider the following practical axes: (1) tolerance classes per subsystem, so MEP pipes may accept larger residuals than precision-machined flanges; (2) feature inference depth, from surfaces only to extraction of sketches, extrusions, revolves, fillets, and patterns; (3) lineage fidelity, storing scan chunk IDs and pose metadata to enable differencing; and (4) export standards, balancing heavyweight B-Rep for editability with lightweight LODs for visualization. By deliberately setting the output contract, teams avoid over-modeling and ensure compute time is invested where it pays operational dividends.

End-to-end pipeline blueprint: from raw points to editable solids

Ingest and normalize
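This section advises aligning to a local CAD frame early, and the reason is easiest to see numerically. Large projected coordinates lose precision when stored as 32-bit floats, which are a common storage type in GPU buffers and many point-cloud tools. The sketch below uses only the standard library. The coordinates and the project origin are made-up values.

```python
import struct

def to_float32(x):
    """Round a Python float (IEEE-754 double) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def to_local_frame(points, origin):
    """Subtract a project origin so coordinates stay small; keep `origin` for auditability."""
    ox, oy, oz = origin
    return [(x - ox, y - oy, z - oz) for x, y, z in points]

def max_float32_error(points):
    """Largest absolute error introduced by storing every coordinate as float32."""
    return max(abs(to_float32(c) - c) for p in points for c in p)

# Made-up projected coordinates in metres (UTM-like magnitudes).
points = [(512345.678, 4123456.789, 152.345)]
origin = (512000.0, 4123000.0, 150.0)  # hypothetical project origin

print(max_float32_error(points))                          # about 0.039 m
print(max_float32_error(to_local_frame(points, origin)))  # below 2e-5 m
```

Near 4.1 million the float32 spacing is 0.25 m, so centimetre-scale errors are unavoidable there. After the shift, values of a few hundred metres have a spacing of about 3e-5 m.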

A resilient pipeline begins with disciplined ingestion. Point clouds arrive as E57, LAS/LAZ, PLY, or PCD, often with color, IR, reflectance, and per-point confidence. Harmonizing these into a common schema, tracking coordinate frames, and preserving sensor provenance is essential. For terrestrial scans, project coordinates may be geodetic; for mobile mapping or UAV, **time synchronization and pose metadata** from IMU/GNSS/SLAM bind each point to a trajectory. Early normalization includes unit checks, axis conventions, and intensity scaling so downstream algorithms see a consistent world. Consider also band-limiting and filtering to avoid aliasing small features or saturating reflectance during shading. Color balancing improves semantic ML performance, and reflectivity can help separate materials (e.g., metal versus drywall). Ingest is also the natural place to build **out-of-core** indices and tiles, and to apply LAZ compression for archival tiers. Practical guidance: (1) align to a local CAD frame early to minimize floating-point issues in kernels; (2) store both raw and transformed poses to maintain auditability; (3) keep an explicit uncertainty field per point if the sensor provides it. These practices ensure that later registration and fitting operate on coherent, well-posed data, and that any return to the raw measurements preserves context and fidelity.

Registration and cleaning
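This section names two cleaning steps, statistical outlier rejection and voxel-grid density homogenization, and both can be sketched in plain Python. The example below is a minimal brute-force illustration with an O(n²) neighbor search. It assumes points are `(x, y, z)` tuples. Production code would use a spatial index such as a k-d tree, and the parameter values shown are illustrative.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel):
    """Replace all points that share a cubic voxel of edge `voxel` by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel) for c in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]

def statistical_outlier_filter(points, k=3, std_ratio=1.0):
    """Keep points whose mean distance to their k nearest neighbours is at most
    mean + std_ratio * std over the whole cloud (brute force, O(n^2))."""
    mean_dists = []
    for i, p in enumerate(points):
        d = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(d[:k]) / k)
    mu = sum(mean_dists) / len(mean_dists)
    sigma = math.sqrt(sum((m - mu) ** 2 for m in mean_dists) / len(mean_dists))
    return [p for p, m in zip(points, mean_dists) if m <= mu + std_ratio * sigma]

# A 10 x 10 patch of a plane at 10 mm spacing, plus one stray return 1 m above it.
grid = [(0.01 * i, 0.01 * j, 0.0) for i in range(10) for j in range(10)]
stray = (0.05, 0.05, 1.0)

cleaned = statistical_outlier_filter(grid + [stray])
print(len(cleaned), stray in cleaned)      # 100 False
print(len(voxel_downsample(grid, 0.048)))  # 4
```

Choosing the voxel edge from the reconstruction tolerance, rather than from the raw scan resolution, is what ties this step to the downstream output contract.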

Bringing multiple scans into a single, stable frame requires a staged strategy. Global alignment can pair **feature-based matching** with RANSAC to derive robust initial transforms; for harder sets, **TEASER++** (certifiably robust to outliers) and **Go-ICP** (globally optimal via branch-and-bound) avoid the local minima that trap plain ICP. In mobile mapping, loop-closure corrections mitigate drift by re-anchoring the trajectory when revisiting known areas. Once the alignment is globally plausible, local refinement via point-to-plane **ICP variants** typically converges faster and more accurately than point-to-point ICP, particularly on planar-rich scenes. Cleaning hinges on disciplined outlier rejection (statistical filters, radius-based trimming) and smoothing that does not erase edges: feature-preserving **MLS** variants or bilateral-like schemes reduce noise while keeping sharp features. Density homogenization (voxel grids or adaptive sampling) aligns the data with downstream tolerances: there is little value in fitting a 0.1 mm fillet if the scan’s effective resolution is 3 mm. A practical checklist: remove isolated clusters, downsample to a target that matches reconstruction intent, and flag low-confidence zones created by grazing angles or occlusions. The goal is not just visual neatness but numerical stability that keeps later solvers inside their convergence basins and reduces the risk of Boolean failure cascades in solid modeling.

Semantic and geometric understanding
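RANSAC is one of the primitive detectors named in this section, and its simplest case, a single plane, is sketched below. The version is a minimal illustration. It assumes `(x, y, z)` tuples and a fixed iteration count. A practical detector would add normal-consistency checks, a least-squares refit on the inliers, and iterative extraction of multiple planes.

```python
import math
import random

def plane_from_points(p1, p2, p3):
    """Plane through three points as (unit normal n, offset d) with n.x + d = 0, or None."""
    u = [b - a for a, b in zip(p1, p2)]
    v = [b - a for a, b in zip(p1, p3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(c * c for c in n))
    if length < 1e-12:
        return None  # collinear (degenerate) sample
    n = [c / length for c in n]
    return n, -sum(a * b for a, b in zip(n, p1))

def ransac_plane(points, threshold, iterations=200, seed=0):
    """Keep the three-point plane hypothesis with the most inliers within `threshold`."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [p for p in points if abs(sum(a * b for a, b in zip(n, p)) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers

# Hypothetical scene: a 10 x 10 floor patch (z = 0) plus 20 clutter points above it.
floor = [(0.1 * i, 0.1 * j, 0.0) for i in range(10) for j in range(10)]
clutter = [(0.03 * i, 0.07 * (i % 5), 0.5 + 0.05 * i) for i in range(20)]
plane, inliers = ransac_plane(floor + clutter, threshold=0.01)
print(len(inliers), plane[0])  # expect 100 floor inliers and a normal of (0, 0, +/-1)
```

The inlier threshold plays the same role as a per-class tolerance. On real data it would be derived from the point uncertainty rather than fixed.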

Understanding precedes reconstruction. Estimating normals and curvature frames the local geometry; **planar fragment extraction** and supervoxel over-segmentation create mid-level units for reasoning. Primitive cues abound: planes, cylinders, cones, spheres, and even tori can be detected through RANSAC, Hough voting, or consensus clustering that tolerates outliers and density fluctuations. On top of geometry, ML-driven **semantic labeling** identifies pipes, ducts, beams, windows, doors, and equipment, with uncertainty scores that guide human attention and later fitting. Two practical techniques compound value: (1) fuse complementary cues (intensity, color, and local roughness) to boost class separation; (2) track uncertainty per segment and surface to prioritize operator review where confidence is low. The output of this stage is a richly annotated point cloud plus a set of candidate analytic surfaces and regions of interest. This semantic scaffold informs intent-aware decisions: treat structural steel with section libraries, fit pipes with spec-driven diameters, and keep irregular or damaged zones in mesh form. By promoting semantics to first-class data, the pipeline avoids blind reconstruction and instead steers toward a **design-intent-aware** model that naturally supports editing and verification.

Reconstruction strategies (choose by intent)
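On the primitive-first path described in this section, the 2D core of a pipe fit is a circle fit on a cross-section slice, followed by snapping to a spec diameter. The sketch below uses the algebraic (Kåsa) least-squares circle fit. That method is a swapped-in textbook technique, not one the article prescribes. The spec radii are hypothetical, and the sketch assumes the slice has already been taken perpendicular to an estimated pipe axis.

```python
import math

def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def _solve3(a, b):
    """Solve the 3x3 system a @ x = b with Cramer's rule."""
    d = _det3(a)
    if d == 0.0:
        raise ValueError("degenerate point set")
    out = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        out.append(_det3(m) / d)
    return out

def fit_circle(pts):
    """Kasa algebraic fit: least squares on x^2 + y^2 + D x + E y + F = 0.

    Points are centred first for conditioning. Returns (cx, cy, r, rms), where rms
    is the geometric (radial) residual.
    """
    mx = sum(x for x, _ in pts) / len(pts)
    my = sum(y for _, y in pts) / len(pts)
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y in pts:
        row = (x - mx, y - my, 1.0)
        rhs = -(row[0] ** 2 + row[1] ** 2)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    D, E, F = _solve3(ata, atb)
    cx, cy = -D / 2, -E / 2
    r = math.sqrt(cx * cx + cy * cy - F)
    cx, cy = cx + mx, cy + my
    rms = math.sqrt(sum((math.hypot(x - cx, y - cy) - r) ** 2 for x, y in pts) / len(pts))
    return cx, cy, r, rms

def snap_radius(r, spec_radii):
    """Snap a fitted radius to the nearest entry of a (hypothetical) pipe spec."""
    return min(spec_radii, key=lambda s: abs(s - r))

# Synthetic slice through a pipe: 12 points on a circle of radius 0.05 m centred at (2, 3).
slice_pts = [(2 + 0.05 * math.cos(2 * math.pi * k / 12),
              3 + 0.05 * math.sin(2 * math.pi * k / 12)) for k in range(12)]
cx, cy, r, rms = fit_circle(slice_pts)
print(round(cx, 6), round(cy, 6), round(r, 6))    # 2.0 3.0 0.05
print(snap_radius(0.0493, (0.025, 0.05, 0.075)))  # 0.05
```

Comparing `rms` against the class tolerance before snapping keeps a poor fit from being hidden behind a clean catalog value.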

With geometric and semantic cues in hand, choose your reconstruction strategy deliberately. A surface-first path uses screened **Poisson reconstruction**, Delaunay-based methods, or the ball-pivoting algorithm (BPA) to form meshes; Poisson produces watertight surfaces by construction, whereas BPA can leave holes in sparse or occluded regions. Here, decimation must preserve features: edge-aware simplification and curvature-adaptive remeshing prevent rounding off crisp corners or fillet transitions. When analytic editability matters, a primitive-first approach robustly fits planes, cylinders, cones, and spheres, then assembles them with constraint solving into a coherent **B-Rep**. This requires handling near-parallelism, small gaps, and over-constrained intersections by prioritizing the constraints that matter (perpendicularity, coplanarity, axis alignment) within tolerance envelopes. A hybrid approach is often best: fit analytic surfaces where confidence and intent support it (walls, columns, pipe spools), patch irregular regions with meshes, and later use NURBS surfacing to bridge and fair complex areas. Practical guidance: (1) assess residuals against per-class tolerances rather than a single global threshold; (2) keep parametric surfaces slightly conservative to avoid Boolean slivers; (3) preserve adjacency graphs between surfaces and meshes for topology healing. The litmus test is editability: can a designer change a diameter, move a wall by 50 mm, or adjust a fillet radius and have the model respond predictably?

From surfaces to solids with design intent
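This section suggests clustering fillet radii into families, and a simple 1D gap rule is one way to start. The sketch below is minimal and assumes the radii have already been measured per fillet face. The `gap_tol` value is an illustrative assumption. Single-linkage chaining can merge families whose members drift gradually, so a real tool would also cap each family's total spread.

```python
def cluster_radii(radii, gap_tol):
    """Group measured fillet radii into families.

    Sorted radii are chained into the same family while consecutive values differ
    by at most gap_tol (single linkage in 1D). Returns (mean_radius, members) pairs.
    """
    if not radii:
        return []
    ordered = sorted(radii)
    families = [[ordered[0]]]
    for r in ordered[1:]:
        if r - families[-1][-1] <= gap_tol:
            families[-1].append(r)
        else:
            families.append([r])
    return [(sum(f) / len(f), f) for f in families]

# Hypothetical measured radii in mm from a reverse-engineered part.
measured = [2.95, 3.02, 3.05, 4.98, 5.01, 2.99, 10.2]
for mean_r, members in cluster_radii(measured, gap_tol=0.2):
    print(round(mean_r, 4), members)
# 3.0025 [2.95, 2.99, 3.02, 3.05]
# 4.995 [4.98, 5.01]
# 10.2 [10.2]
```

Each family then maps to one editable parameter. The mean is only a starting value, and a designer may prefer to snap it to a nominal such as 3 mm or 5 mm.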

Turning surfaces into solids requires encoding intent explicitly. Patch layout should reflect manufacturable or constructible seams, with **NURBS fitting** tuned for G1 or G2 continuity where finish quality matters. Edge and corner inference must tolerate small gaps and out-of-square conditions, resolving them with **topology healing**, gap closing, and sliver removal that record measured deviations as metadata rather than erasing them. Robust Booleans under noise depend on kernel-aware thresholds and predicate filtering for near-coplanar cases. Feature inference pays dividends: recognize sketches, extrudes, revolves, fillets, and patterns in the reconstructed surfaces and assemble them into a **feature tree**. Where clustering of fillet radii reveals families, parameterize each family as a single editable variable. Datum restoration and symmetry detection establish a durable coordinate scheme for design changes and QC. Parameterization completes the loop: define editable dimensions, constraints, and **as-built vs. as-designed deltas** so future re-scans can flow into a coherent change set. The outcome is not merely a closed solid but a model that behaves as if it were designed, with degrees of freedom aligned to how the artifact will be modified, manufactured, or installed.

Verification and handoff
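At its core, a deviation map is the signed distance from each measured point to the reconstructed or nominal surface. The sketch below computes this for a planar face and checks the result against a symmetric tolerance band. It is a deliberate simplification that assumes a hypothetical nominal plane. It is not a standards-conformant GD&T evaluation, because it uses no datum reference frames and no minimum-zone fitting.

```python
import math

def deviation_map(points, normal, offset):
    """Signed distance from each point to the nominal plane normal . x + offset = 0."""
    length = math.sqrt(sum(c * c for c in normal))
    return [(sum(n * c for n, c in zip(normal, p)) + offset) / length for p in points]

def band_check(deviations, tol):
    """Summarise a deviation map against a symmetric +/- tol band (simplified, not GD&T)."""
    worst = max(abs(d) for d in deviations)
    return {
        "max_abs": worst,
        "rms": math.sqrt(sum(d * d for d in deviations) / len(deviations)),
        "spread": max(deviations) - min(deviations),  # crude form indicator
        "pass": worst <= tol,
    }

# Hypothetical wall whose nominal face is the plane x = 0 (units: metres).
scan = [(0.002, 0.0, 0.0), (-0.001, 1.0, 0.0), (0.004, 0.0, 2.0), (0.0, 1.0, 2.0)]
devs = deviation_map(scan, normal=(2.0, 0.0, 0.0), offset=0.0)
print(band_check(devs, tol=0.005)["pass"], band_check(devs, tol=0.003)["pass"])  # True False
```

The per-point `devs` list is what a viewer would colorize. The summary dictionary is what a report or a BCF issue would carry.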

Trust requires quantification. **Deviation maps** colorize residuals between the reconstructed model and the points; **GD&T checks** turn those residuals into actionable pass/fail calls relative to form, orientation, and location tolerances. Crucially, uncertainty propagation should accompany every step so the final model can state confidence by region and by feature. Deliverables must match stakeholders: STEP AP242 B-Rep with PMI for manufacturing edits; IFC with point cloud links for AEC model federation; JT/glTF LODs for visualization and MR; and USD for assembling heterogeneous assets with time-varying states. Maintain **associativity to scans** through external references so a re-scan can trigger targeted updates rather than global refits. For review and governance, adopt change tracking: scan-to-model differencing produces issues with snapshots and context, published via **BCF** (BIM Collaboration Format) so design, field, and management teams can converge on decisions. The handoff is not an endpoint; it is a versioned milestone in a living pipeline that keeps the digital thread taut between conditions and intent, and keeps uncertainty visible where it affects cost, schedule, or safety.

System architecture and implementation patterns

Data management and performance
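Octree-style tiling, as described in this section, often keys chunks by a Morton (Z-order) code. This keeps spatially nearby cells close in storage and turns a parent node's key into a simple bit shift. The sketch below is minimal and assumes a fixed project origin, cubic cells, and 10 bits per axis. The function names are illustrative and are not taken from any particular library.

```python
def morton3d(ix, iy, iz, bits=10):
    """Interleave the low `bits` bits of three non-negative cell indices (Z-order)."""
    code = 0
    for b in range(bits):
        code |= ((ix >> b) & 1) << (3 * b)
        code |= ((iy >> b) & 1) << (3 * b + 1)
        code |= ((iz >> b) & 1) << (3 * b + 2)
    return code

def tile_key(point, origin, cell, bits=10):
    """Morton key of the cubic cell (edge `cell`) that contains `point`."""
    idx = [int((c - o) // cell) for c, o in zip(point, origin)]
    if min(idx) < 0 or max(idx) >= 1 << bits:
        raise ValueError("point outside the indexed extent")
    return morton3d(*idx, bits=bits)

def parent_key(code, levels=1):
    """Key of the enclosing octree node `levels` steps coarser."""
    return code >> (3 * levels)

print(morton3d(1, 0, 0), morton3d(0, 1, 0), morton3d(0, 0, 1), morton3d(2, 0, 0))  # 1 2 4 8
print(parent_key(morton3d(5, 6, 7)) == morton3d(2, 3, 3))                          # True
print(tile_key((12.3, 4.5, 1.2), origin=(0.0, 0.0, 0.0), cell=2.0))                 # 88
```

Because coarser levels are prefixes of finer keys, an LOD pyramid and the on-disk chunk layout can share a single index.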

Point clouds routinely reach billions of points, demanding **out-of-core** strategies. Hierarchical octrees or k-d trees backed by memory-mapped chunks enable partial loading and indexed neighbor queries without exhausting RAM. LAZ compression and tiled storage reduce I/O costs, while multiresolution LOD pyramids let the viewport stream only what users see: nearby tiles at high detail, distant tiles as coarse aggregates. Computation shifts to the GPU where possible: parallel normal estimation, ICP, and segmentation kernels dramatically accelerate iterative passes; on the web, **WebGPU/WebGL** viewers bring interactive exploration to lightweight clients. A sensible edge–cloud split prefilters on-device (denoising, coarse registration), then offloads heavy primitive fitting and semantics to the cloud, where elastic compute and ML hardware are available. Practical concerns round out the picture: record tile lineage so segmentation and fits can be replayed deterministically; cache results across pipeline stages; and synchronize visualization LOD with analytic LOD so what users see corresponds to the data level the solver used. Performance is not just speed; it is interactivity and trust, ensuring that when a designer drags a parameter, feedback is near real time and faithful to the data actually driving decisions.

Robustness and tolerance strategy
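The per-point uncertainty field recommended in this section can start from a simple error budget. The model below is an illustrative assumption, not a sensor specification. It uses a ranging term made of a constant plus a distance-proportional (ppm) part, inflated by 1/cos(incidence) as a heuristic for grazing hits. That term is then combined in quadrature with the registration RMS. All default values are hypothetical.

```python
import math

def point_sigma(range_m, incidence_deg, sigma_const_m=0.002, ppm=20.0,
                reg_rms_m=0.003, max_incidence_deg=80.0):
    """Illustrative 1-sigma uncertainty (metres) for a single scan point.

    Hypothetical error budget: ranging noise = constant + ppm * range, inflated by
    1/cos(incidence) for grazing hits, then combined in quadrature with the
    registration RMS. Hits at or beyond max_incidence_deg are treated as unusable.
    """
    if incidence_deg >= max_incidence_deg:
        return math.inf
    ranging = sigma_const_m + ppm * 1e-6 * range_m
    grazing = ranging / math.cos(math.radians(incidence_deg))
    return math.hypot(grazing, reg_rms_m)

def low_confidence(sigmas, limit_m):
    """Indices of points whose uncertainty exceeds the fitting limit (for UI highlighting)."""
    return [i for i, s in enumerate(sigmas) if s > limit_m]

sigmas = [point_sigma(10.0, 0.0), point_sigma(10.0, 60.0), point_sigma(10.0, 85.0)]
print([round(s, 4) for s in sigmas[:2]], sigmas[2])  # [0.0037, 0.0053] inf
print(low_confidence(sigmas, limit_m=0.005))         # [1, 2]
```

Whatever the exact model, keeping it in one function makes it easy to replace with the manufacturer's published error model and to log which version produced a given field.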

Robustness comes from modeling uncertainty explicitly. Store an **uncertainty field** per point or segment, derived from sensor specs, incidence angle, and registration quality; make it visible in the UI so designers know where fits are suspect. Use kernel-aware thresholds to prevent **Boolean failure cascades**: pass tolerances to the solid modeling kernel (Open Cascade, Parasolid, ACIS) rather than relying on generic epsilon values. Predicate filtering for near-coplanar or nearly parallel surfaces keeps numerical noise from fragmenting topology. Unit and coordinate governance is non-negotiable: convert geodetic coordinates to local CAD frames early, run scale sanity checks, and preserve metadata provenance so the chain of transforms can be audited. In fitting, hedge against overconfidence: if residuals stay flat while parameters oscillate, the problem is poorly conditioned, so increase regularization or relax constraints. In the UI, rank suggestions by uncertainty so operators are guided toward the edits with the highest impact and lowest confidence. Finally, build health checks into the pipeline: if GD&T checks start failing systematically or the Boolean success rate dips below a threshold, automatically flag the batch and revert to a conservative reconstruction recipe tuned for stability over detail.

Toolchain and standards
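This section suggests referencing fitting job hashes, which only works if the hash is deterministic for identical inputs. The sketch below is minimal and computes SHA-256 over canonical JSON. The record fields (`scan_ids`, `registration_run`, `params`, `code_version`) are a hypothetical layout, not a PLM or STEP schema.

```python
import hashlib
import json

def fitting_job_hash(scan_ids, registration_run, params, code_version):
    """Deterministic SHA-256 over a canonical JSON record of a fitting job's inputs."""
    record = {
        "scan_ids": sorted(scan_ids),  # assumes scan order does not affect the result
        "registration_run": registration_run,
        "params": params,
        "code_version": code_version,
    }
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical identifiers.
h1 = fitting_job_hash(["scan-B", "scan-A"], "reg-042", {"tol_mm": 2.0, "method": "ransac"}, "1.4.0")
h2 = fitting_job_hash(["scan-A", "scan-B"], "reg-042", {"method": "ransac", "tol_mm": 2.0}, "1.4.0")
h3 = fitting_job_hash(["scan-A", "scan-B"], "reg-042", {"method": "ransac", "tol_mm": 1.5}, "1.4.0")
print(h1 == h2, h1 == h3)  # True False

# A face-level lineage record can then point back at the job that produced it.
lineage = {"face_id": "F-0193", "job_hash": h1}
```

If scan order does matter, for example in sequential registration, drop the `sorted` call so that reordering the inputs changes the hash.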

A modern toolchain balances best-of-breed geometry libraries with ML frameworks and open standards. PCL, Open3D, and **CGAL** handle point processing, meshing, and robust computational geometry. For B-Rep modeling, **Open Cascade**, **Parasolid**, or **ACIS** provide kernels with mature Booleans, topology healing, and fillet/chamfer algorithms. Semantic pipelines typically run on **PyTorch** or **TensorFlow**, with ONNX export to serve models across languages and runtimes. Interoperability is vital: maintain links from E57 scans to IFC models for AEC, use STEP AP242 with external references to bind PMI and scan lineage, and export USD, **glTF**, or JT for visualization ecosystems. Keep lineage in PLM by referencing scan IDs, registration runs, and fitting job hashes so every face, edge, and parameter can be traced to its source measurements. When possible, adopt open schemas for uncertainty to make confidence portable across applications. Standards are not bureaucracy; they are a hedge against vendor lock-in and a foundation for CI/CD on geometry, where repeatable, testable steps let you improve the pipeline while preserving trust in the output.

Automation with a human-in-the-loop
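The ranking and guardrail ideas in this section can be sketched as two small functions. The illustration rests on three assumptions. First, `uncertainty` and `impact` are hypothetical scores normalized to 0..1. Second, their product is one arbitrary choice of priority. Third, the 2x residual-spike ratio is an illustrative threshold.

```python
from dataclasses import dataclass

@dataclass
class Suggestion:
    label: str
    uncertainty: float         # hypothetical score, 0 = certain, 1 = very uncertain
    impact: float              # hypothetical downstream-impact score, 0..1
    residual_before_mm: float  # RMS residual without the suggested edit
    residual_after_mm: float   # RMS residual if the edit is accepted

def review_queue(suggestions):
    """Order suggestions so uncertain, high-impact items reach the operator first."""
    return sorted(suggestions, key=lambda s: s.uncertainty * s.impact, reverse=True)

def guardrail(s, max_spike_ratio=2.0):
    """'warn' if accepting the edit would inflate the residual beyond the ratio, else 'apply'."""
    if s.residual_after_mm > max_spike_ratio * s.residual_before_mm:
        return "warn"  # offer a softer constraint or a mesh fallback instead
    return "apply"

queue = review_queue([
    Suggestion("wall W3: planar", 0.2, 0.9, 3.0, 3.2),
    Suggestion("pipe P7: axis parallel to P6", 0.7, 0.8, 2.0, 6.5),
    Suggestion("fillets: single R3 family", 0.5, 0.3, 0.4, 0.5),
])
for s in queue:
    print(s.label, guardrail(s))
# pipe P7: axis parallel to P6 warn
# wall W3: planar apply
# fillets: single R3 family apply
```

Logging each accept, reject, or override decision alongside these scores is what turns operator clicks into the training signal the section describes.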

Automation accelerates throughput, but the last mile is human judgment. Embed **active learning** loops: when designers correct segmentation or primitive fits, capture those edits as labeled data and retrain models to reduce future manual touches. Design an intent-aware UI that suggests primitives, constraints, and feature edits ranked by uncertainty and downstream impact; surface alternative hypotheses (“plane vs. slightly warped NURBS”) with expected deviation deltas so choices are informed. Batch pipelines should run in CI: re-run fits on new scans or updated ML models, generate diffs, validate **tolerance** budgets, and flag regressions before they reach production. Integrate guardrails: if a suggested constraint would trigger a large residual spike, warn and offer a softer constraint or a mesh fallback. Human-in-the-loop review does not slow the system; it focuses expertise where algorithms are blind and converts that expertise into improved automation. The KPI is not zero clicks but meaningful clicks: fewer, more impactful interventions, each captured as training signal, templates, or parametric patterns that scale across projects and teams.

Application patterns and KPIs to track
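Several of the KPIs listed in this section reduce to ratios over per-job records. The sketch below is minimal and assumes a hypothetical `JobRecord` layout. It uses an illustrative 97% Boolean-success threshold for the health check. Time-based KPIs such as time-to-editable-solid would need timestamps and are omitted.

```python
from dataclasses import dataclass

@dataclass
class JobRecord:  # hypothetical per-job log entry
    area_m2: float
    boolean_attempts: int
    boolean_failures: int
    manual_edits: int
    features_total: int
    features_with_lineage: int
    compute_cost: float  # in whatever currency unit the team tracks

def pipeline_kpis(jobs):
    """Aggregate KPIs over a batch of jobs (ratios of sums, not means of ratios)."""
    area = sum(j.area_m2 for j in jobs)
    attempts = sum(j.boolean_attempts for j in jobs)
    failures = sum(j.boolean_failures for j in jobs)
    features = sum(j.features_total for j in jobs)
    return {
        "boolean_success_rate": (attempts - failures) / attempts if attempts else None,
        "manual_edits_per_m2": sum(j.manual_edits for j in jobs) / area,
        "associativity_integrity": (sum(j.features_with_lineage for j in jobs) / features
                                    if features else None),
        "compute_cost_per_m2": sum(j.compute_cost for j in jobs) / area,
    }

def needs_review(kpis, min_boolean_success=0.97):
    """Health check: flag the batch when the Boolean success rate drops below the threshold."""
    rate = kpis["boolean_success_rate"]
    return rate is not None and rate < min_boolean_success

batch = [JobRecord(1200, 400, 8, 60, 500, 480, 90.0),
         JobRecord(800, 300, 20, 40, 300, 240, 60.0)]
kpis = pipeline_kpis(batch)
print(kpis)                # success rate 0.96, 0.05 edits/m2, integrity 0.9, cost 0.075 per m2
print(needs_review(kpis))  # True
```

Using ratios of sums weights large jobs by their actual volume. A mean of per-job ratios would let one tiny job swing the batch KPI.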

Domain-specific playbooks deliver repeatable wins. For pipes/MEP, build topology graphs from cylinder fits, then apply spec-driven diameters, offsets, and slope constraints; infer branch types and support locations while keeping uncertain segments as mesh placeholders. For structural steel, use library-driven section fitting (**AISC/EN**), detect splices and joints from local geometry and bolt-hole patterns, and anchor frames to consistent grids. For mechanical parts, emphasize datum restoration and symmetry detection to seed coordinate frames; cluster fillet radii to parameterize families and preserve feature intent. Across these patterns, track KPIs that reflect both quality and economics: (1) time-to-editable-solid from ingest; (2) **Boolean success rate** and topology healing interventions; (3) average deviation by class with uncertainty bands; (4) manual edit count per square meter or cubic meter; and (5) compute cost per m²/m³ so budgets scale predictably. Add two meta-KPIs: associativity integrity (percentage of features with scan lineage) and re-scan assimilation time (how quickly a new scan propagates into updated models). When teams see these numbers trending in the right direction, confidence grows, and the pipeline becomes a strategic asset rather than a one-off project tool.

Conclusion

Intent-aware reconstruction as the guiding principle

Bringing LiDAR into solid modeling succeeds when reconstruction is governed by intent. Fit what matters; **mesh what doesn’t**; and quantify uncertainty everywhere. The objective is not a pristine digital twin that denies imperfections, but an editable, trustworthy model that captures where precision counts and where reality resists idealization. Hybrid pipelines (semantic segmentation plus **primitive fitting** plus selective surfacing) yield **B-Reps** that behave like designed objects while respecting measured anomalies. With associativity to scans, every future acquisition becomes a versioned signal rather than a restart, knitting re-scans into the same feature space where designers live. Maintain a relentless focus on tolerances and kernel-aware thresholds to keep Booleans stable and features editable; use deviation maps and GD&T to turn residuals into decisions rather than decoration. In short, intent-aware reconstruction elevates point clouds from pictures of reality to instruments of design, compressing time-to-confidence and strengthening the handshake between field conditions and engineering judgment.

Architect for scale, portability, and continuous alignment

Scale is architectural, not incidental. **Out-of-core data**, tiled storage, and GPU acceleration keep pipelines interactive even at campus or plant scales. Standards-based interop—STEP AP242, IFC, USD, glTF—keeps assets portable and lineage intact across toolchains and organizations. An edge–cloud split aligns computation with context: do the fast, local pruning near the sensor, and push heavy fitting and ML to elastic infrastructure. Meanwhile, automation with a **human-in-the-loop** closes the learning loop, steadily reducing manual edits by harvesting corrections as training fuel. The win is continuous reality alignment: every re-scan lands as a diff, tolerance-aware and reviewable via BCF, tightening the digital thread from measurement to model to decision. As projects evolve, the system gets faster, smarter, and more predictable, converting LiDAR from episodic survey to operational telemetry for design. When that happens, retrofits, brownfields, and field-driven engineering stop being exceptions that disrupt process; they become first-class citizens of a pipeline designed to embrace complexity, quantify it, and turn it into competitive advantage.