Integrating Motion Capture into Animation Design: Enhancing Workflow and Creativity

June 09, 2025 11 min read

Motion Capture Data Acquisition and Processing

Motion capture data acquisition and processing stands as a pivotal stage in contemporary animation workflows, fundamentally influencing the overall output quality and creative depth. In this section, we explore the intricate processes involved in capturing and preparing motion data, beginning with an overview of **motion capture systems** and sensor technologies that are at the forefront of this transformative field. Modern systems use high-resolution sensors, infrared cameras, and inertial measurement units to capture even the subtlest of human body movements in three-dimensional space. These systems rely on a comprehensive network of sensors that can include optical markers, reflective surfaces, and embedded accelerometers. This technological sophistication allows for the recording of detailed skeletal movements and facial expressions, driving advances in character animation and simulation.

Overview of Sensor Technologies

The sensor technologies that underpin motion capture systems have evolved dramatically over the past decades. For instance, passive optical systems use retro-reflective markers that are illuminated by external light sources, enabling cameras to detect motion with extremely high accuracy. Conversely, active systems incorporate markers that emit signals autonomously, enhancing precision in challenging environments. Both methodologies are commonly utilized in environments ranging from state-of-the-art film studios to research labs exploring biomechanics. The integration of **high-fidelity motion capture** in digital workflows has spurred industry adoption for virtual production and immersive media, where even minute discrepancies in data capture can significantly alter the final animation output. Advanced systems now integrate machine learning to predict and refine marker trajectories when occlusions or lost data points occur, ensuring that the resultant dataset is robust and usable for downstream applications.

Techniques for Capturing High-Fidelity Motion Data

To ensure high fidelity, various techniques have been developed for motion capture data acquisition. Practitioners often implement controlled lighting conditions, pre-calibrated sensor arrays, and dynamic range adjustments to mitigate common interference issues. The process requires a careful setup to ensure markers are optimally placed for capturing a full range of movement, whether on human actors or animated objects. The synchronization of multiple cameras using precise timing protocols ensures all angles capture simultaneous data points, a crucial requirement for reconstructing three-dimensional movements. Utilizing dense marker sets further enhances detail retrieval, supporting complex animations where subtleties in motion contribute directly to the narrative's impact. Important supporting measures include comprehensive environmental controls and preliminary test recordings, which are indispensable for defining accurately parameterized capture sequences before engaging in live recording sessions.

Data Cleaning and Pre-Processing Methods

Following the acquisition, data cleaning and pre-processing represent critical steps necessary to prepare the raw motion data for integration into animation pipelines. This stage involves algorithms that address issues such as data noise, latency, and jitter introduced during capture. Practitioners employ robust filtering techniques—including Kalman filters and spline interpolation—to smooth out motion trajectories without compromising the natural feel of the recorded movements. Additionally, pre-processing involves the alignment and synchronization of multiple data streams, a process that ensures compatibility with subsequent layers of animation design. Data cleaning workflows typically consist of several key steps, such as the identification and removal of outliers, gap-filling where marker occlusions occur, and the normalization of movement sequences. Computational tools designed specifically for post-processing enable the efficient transformation of raw positional data into structured formats that are standardized across industry practices. This results in a dataset that is both accurate and adaptable, ready for incorporation into advanced software environments that drive realistic and fluid animations.
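The gap-filling and smoothing steps described above can be sketched for a single marker coordinate as follows. This is a minimal illustration under stated assumptions, not a production pipeline: the function names `fill_gaps` and `kalman_smooth` are hypothetical, linear interpolation stands in for the spline interpolation mentioned above, and the Kalman filter uses a simple constant-position model with illustrative variance values.

```python
from typing import List, Optional


def fill_gaps(samples: List[Optional[float]]) -> List[float]:
    """Fill missing samples (None), e.g. frames where a marker was occluded.

    Interior gaps are linearly interpolated between the nearest valid
    neighbours; gaps at either end hold the nearest valid value.
    """
    known = [i for i, v in enumerate(samples) if v is not None]
    if not known:
        raise ValueError("no valid samples to interpolate from")
    filled = list(samples)
    for i, value in enumerate(samples):
        if value is not None:
            continue
        prev = max((k for k in known if k < i), default=None)
        nxt = min((k for k in known if k > i), default=None)
        if prev is None:
            filled[i] = samples[nxt]
        elif nxt is None:
            filled[i] = samples[prev]
        else:
            t = (i - prev) / (nxt - prev)
            filled[i] = samples[prev] + t * (samples[nxt] - samples[prev])
    return filled


def kalman_smooth(samples: List[float],
                  process_var: float = 1e-3,
                  measurement_var: float = 1e-2) -> List[float]:
    """Scalar Kalman filter assuming the coordinate changes slowly.

    process_var and measurement_var are illustrative tuning values,
    not recommendations for any particular capture system.
    """
    estimate = samples[0]
    error = 1.0
    smoothed = []
    for measurement in samples:
        error += process_var                      # predict step
        gain = error / (error + measurement_var)  # Kalman gain
        estimate += gain * (measurement - estimate)
        error *= 1.0 - gain                       # update step
        smoothed.append(estimate)
    return smoothed


if __name__ == "__main__":
    raw = [0.0, None, None, 3.0, 3.2, 2.9]
    print(kalman_smooth(fill_gaps(raw)))
```

Raising `measurement_var` relative to `process_var` smooths more aggressively but makes the output lag behind fast movements, which is the trade-off between noise removal and natural feel noted above.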

Integration Framework for Animation Design Software

Integrating motion capture pipelines into animation design software signifies a major leap forward in how animated content is produced and iterated upon. This section delves into the architectural overview of such integration and outlines how **motion capture technology** is harmoniously combined with digital art and design software to create seamless workflows. The integration framework starts with establishing software protocols and APIs that ensure robust data exchange between hardware capture systems and the digital workstations where motion data is translated into lifelike animations. Working towards complete compatibility means addressing multiple layers of technical detail, such as adjusting data formats, real-time synchronization, and adopting a modular approach that accommodates both off-line and live feed processing. Industry experts emphasize that the ability to quickly optimize and troubleshoot these connections is essential for maintaining continuous production pipelines, particularly in high-demand environments like gaming, virtual reality, and cinematic productions.

Architectural Overview of Integration Framework

At the core of this integration framework is an architectural model that leverages both client-server and event-driven paradigms to manage the large streams of motion data flowing between capture systems and animation platforms. A well-designed architecture exchanges sensor outputs through standardized formats such as BVH and FBX, so that motion data translates cleanly into tool-specific animation timelines. The adoption of **API-driven development** reflects a broader industry trend where interoperability is paramount; sophisticated APIs offer endpoints that allow for two-way communication, feedback loops, and error correction in real time. This modularity is essential because it allows different components to be swapped or upgraded without disrupting the overall system integrity, which helps future-proof the animation infrastructure. Moreover, system resilience is achieved through robust error handling and failover strategies, so that even if one component fails or delivers corrupted data, the broader pipeline remains operational. In summary, this approach relies on:

- Use of standardized data formats (e.g., BVH, FBX)

- Implementation of modular, API-driven components

- Real-time error handling and fallback mechanisms

- Synchronized client-server architectures
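To make the role of a standardized format concrete, the sketch below reads the structural information from a BVH file: the joint names, the number of channels per joint, the frame count, and the frame time. It is a simplified illustration that assumes well-formed input; the names `BvhSummary`, `summarize_bvh`, and `SAMPLE_BVH` are hypothetical, and the sample skeleton is invented for the example.

```python
from dataclasses import dataclass, field
from typing import Dict

# A tiny, made-up BVH document used only for illustration.
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0.0 5.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 5.0 0.0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.0333333
0 0 0 0 0 0 0 0 0
0 1 0 0 0 0 0 10 0
"""


@dataclass
class BvhSummary:
    joints: Dict[str, int] = field(default_factory=dict)  # name -> channel count
    frame_count: int = 0
    frame_time: float = 0.0


def summarize_bvh(text: str) -> BvhSummary:
    """Collect joint names, channel counts, and timing from BVH text."""
    summary = BvhSummary()
    current_joint = None
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] in ("ROOT", "JOINT") and len(tokens) >= 2:
            current_joint = tokens[1]
            summary.joints[current_joint] = 0
        elif tokens[0] == "End":
            current_joint = None  # "End Site" blocks carry no channels
        elif tokens[0] == "CHANNELS" and current_joint is not None:
            summary.joints[current_joint] = int(tokens[1])
        elif tokens[0] == "Frames:":
            summary.frame_count = int(tokens[1])
        elif tokens[:2] == ["Frame", "Time:"]:
            summary.frame_time = float(tokens[2])
    return summary


if __name__ == "__main__":
    info = summarize_bvh(SAMPLE_BVH)
    print(info.joints, info.frame_count, info.frame_time)
```

Because the hierarchy and timing are declared in plain text ahead of the motion rows, a receiving tool can check that each row has as many values as the summed channel counts (nine in this sample) before it imports any animation data.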

Software Protocols, APIs, and Data Format Compatibility Considerations

Delving deeper into the integration process, one of the essential facets concerns software protocols and API implementations designed to support consistent data interpretability across different platforms. This involves adopting data encapsulation methods, where the information captured by sensors is structured into a standardized format before being transmitted over the network. API protocols are crafted to handle varying data loads, provide secure transmission, and ensure that all middleware components understand and appropriately process the incoming data. This step is indispensable, particularly when dealing with **real-time animation integration**, where lags or data mismatches could lead to significant production delays or quality compromises. For smooth interoperability, the integration framework must account for diverse software environments and operating systems, thus requiring robust error-detection logic and support for a variety of data formats. Practitioners often favor open-source libraries and industry-standard SDKs to reduce compatibility issues, facilitating rapid debugging and iterative development workflows. Key compatibility considerations include maintaining synchronization across different pipelines, preserving data integrity, and ensuring that the dynamic ranges associated with sensor outputs are accurately represented in the digital timelines. The following principles help clarify these requirements:

- Standardization of data format across capture and animation systems

- Use of RESTful or socket-based communications for real-time data exchange

- Robust version control and backward compatibility

- Support for multi-platform operations and cross-software integration
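The ideas of data encapsulation, versioning, and error detection can be illustrated with a small binary frame format suitable for sending over a socket. Everything here is an assumption made for the example: the layout, the `PROTOCOL_VERSION` constant, and the `encode_frame` and `decode_frame` names do not correspond to any specific vendor protocol.

```python
import struct
import zlib
from typing import List, Tuple

Marker = Tuple[float, float, float]

PROTOCOL_VERSION = 1
# Little-endian header: version (1 byte), frame index (4 bytes),
# timestamp in seconds (8 bytes), marker count (2 bytes).
HEADER = struct.Struct("<BIdH")
MARKER = struct.Struct("<3f")  # x, y, z as 32-bit floats
CRC = struct.Struct("<I")


def encode_frame(frame_index: int, timestamp: float,
                 markers: List[Marker]) -> bytes:
    """Pack one capture frame and append a CRC32 checksum."""
    body = HEADER.pack(PROTOCOL_VERSION, frame_index, timestamp, len(markers))
    for x, y, z in markers:
        body += MARKER.pack(x, y, z)
    return body + CRC.pack(zlib.crc32(body))


def decode_frame(packet: bytes) -> Tuple[int, float, List[Marker]]:
    """Validate and unpack a frame produced by encode_frame."""
    if len(packet) < HEADER.size + CRC.size:
        raise ValueError("packet too short")
    body = packet[:-CRC.size]
    (expected_crc,) = CRC.unpack(packet[-CRC.size:])
    if zlib.crc32(body) != expected_crc:
        raise ValueError("checksum mismatch: packet corrupted")
    version, frame_index, timestamp, count = HEADER.unpack_from(body, 0)
    if version != PROTOCOL_VERSION:
        raise ValueError(f"unsupported protocol version {version}")
    if len(body) != HEADER.size + MARKER.size * count:
        raise ValueError("marker count does not match packet length")
    markers = [MARKER.unpack_from(body, HEADER.size + MARKER.size * i)
               for i in range(count)]
    return frame_index, timestamp, markers


if __name__ == "__main__":
    packet = encode_frame(7, 0.25, [(0.5, 1.25, -2.0)])
    print(decode_frame(packet))
```

Putting the version byte first lets a receiver reject or adapt to frames from a newer sender, which is one simple way to approach the backward-compatibility principle listed above.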

Workflow Synchronization: Real-Time Versus Post-Processed Animation Integration

Workflow synchronization in modern animation production comes down to a choice between real-time integration and post-processed workflows. Real-time animation integration transfers data from capture systems directly into design software, enabling artists to view and manipulate motion capture data as it is recorded. This approach can significantly cut down production timelines and foster a more iterative, experimental creative process. Alternatively, post-processed workflows allow the data to be recorded, refined, and meticulously calibrated at a later stage before being embedded into final productions. Both methods have their merits: real-time systems offer immediacy and interactivity, but they often require more specialized hardware and faster network infrastructure. In contrast, post-processed systems afford more flexibility for detailed data analysis and thorough correction of artifacts, which can be indispensable when the captured data contains unexpected noise or errors. The choice between the two typically depends on the project’s scope, the desired fidelity of the animation output, and the technical capabilities of the studio. The benefits and limitations of both approaches are summarized as follows:

- Real-Time Integration:

  - Enhanced interactivity and immediate feedback

  - Accelerated production times

  - Higher demand on computing resources

- Post-Processed Integration:

  - Greater control over data refinement

  - Opportunity for extensive error correction

  - Slightly lengthier production cycles
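One way to see the trade-off is to compare a filter that can run live with one that needs the whole recording. In the sketch below, the function names and parameter values are illustrative assumptions: `smooth_realtime` uses exponential smoothing, which looks only at past samples, while `smooth_offline` uses a centered moving average, which also looks at future samples and therefore only works on recorded data.

```python
from typing import List


def smooth_realtime(samples: List[float], alpha: float = 0.5) -> List[float]:
    """Exponential smoothing: causal, so usable on a live stream, but it lags."""
    smoothed = []
    state = None
    for x in samples:
        state = x if state is None else alpha * x + (1.0 - alpha) * state
        smoothed.append(state)
    return smoothed


def smooth_offline(samples: List[float], radius: int = 1) -> List[float]:
    """Centered moving average: needs future samples, so it suits recordings."""
    smoothed = []
    for i in range(len(samples)):
        lo = max(0, i - radius)
        hi = min(len(samples), i + radius + 1)
        window = samples[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


if __name__ == "__main__":
    step = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]  # a sudden movement at frame 3
    print("real-time:", smooth_realtime(step))
    print("offline:  ", smooth_offline(step))
```

On the step input, the real-time output is still approaching the new value several frames after the movement, whereas the offline output is symmetric around the change. That lag is the cost of processing frames as they arrive.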

Enhancing Animation Quality and Efficiency

Enhancing the quality and efficiency of animation production through motion capture is a multifaceted endeavor that draws on both technical mastery and creative innovation. By leveraging **motion capture technology** as a central component in animation workflows, studios and individual creators can significantly accelerate production timelines while enhancing realism and fluidity within digital animations. Motion capture data streamlines the initial phases of animation, providing a robust basis for developing rich, lifelike character movements and interactions that would otherwise require extensive manual effort. Combining automated computational correction with artist-driven intervention lets these techniques serve both efficiency and artistic expression. Animated storytelling benefits from improvements in both the temporal and spatial domains of production. A closer look at these benefits shows how iterative technology and human creativity converge to produce animation that is not only technically sound but also visually compelling.

Leveraging Motion Capture to Accelerate Animation Workflows

The core advantage of modern motion capture integration lies in its ability to accelerate animation workflows by significantly reducing the manual labor traditionally involved in animating complex movements. Automated capture systems record natural human dynamics with a level of detail that would be painstakingly laborious to achieve by hand. This results in rich datasets that can be transferred into design software, where interpolation and retargeting algorithms map the motion onto various character rigs. Accelerated workflows reduce the time spent iterating on animation cycles, allowing artists and animators to dedicate more resources to creative storytelling and visual innovation. Additionally, data synchronization and processing techniques further shorten overall project turnaround times. The main advantages of this integration include:

- Minimization of manual keyframe adjustments

- Significant reduction in overall production time

- Sophisticated data retargeting techniques for multiple character models

- Enhanced synchronization leading to smoother transitions
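Retargeting to multiple character models can be illustrated with one of its simplest ingredients: scaling the root (hip) translation so that a character with different proportions covers a proportionate distance. The sketch below assumes joint rotations are copied across unchanged and handles only the root path; the function name `retarget_root` and the use of hip height as the scale reference are assumptions for the example, and production retargeting tools do considerably more.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def retarget_root(root_positions: List[Vec3],
                  source_hip_height: float,
                  target_hip_height: float) -> List[Vec3]:
    """Scale per-frame root positions by the ratio of hip heights.

    A shorter target character takes proportionally shorter steps,
    which helps reduce foot sliding when rotations are reused as-is.
    """
    if source_hip_height <= 0:
        raise ValueError("source_hip_height must be positive")
    scale = target_hip_height / source_hip_height
    return [(x * scale, y * scale, z * scale) for x, y, z in root_positions]


if __name__ == "__main__":
    captured = [(0.0, 1.0, 0.0), (0.0, 1.0, 2.0)]  # actor walks 2 units forward
    print(retarget_root(captured, source_hip_height=1.0, target_hip_height=0.5))
```

Applying the same captured path to several rigs then only requires a different `target_hip_height` per character.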

Benefits and Challenges in Enhancing Quality and Efficiency

One of the most compelling advantages of integrating motion capture with animation software is the dual benefit of increased realism and improved artist efficiency. The detailed capture of nuanced physical behaviors allows digital characters to move in ways that are both naturalistic and expressive, forging a stronger connection with audiences and enriching narrative immersion. This increased realism is bolstered by automated processes that free artists from the need to craft every frame manually, thereby opening up fresh creative opportunities for exploration and experimentation. However, the path to these enhancements is not without significant technical challenges. Data noise, resulting from imperfect sensor conditions or external interferences, remains a pervasive issue that must be addressed through advanced filtering and cleaning techniques. Latency issues can arise when handling high-frequency data streams, which may lead to noticeable delays in real-time applications, thereby disrupting the fluidity of motion and impacting workflow reliability. Furthermore, the processing of vast amounts of motion data demands substantial computational resources, necessitating high-performance computing infrastructures and efficient algorithmic designs. The central challenges to address include:

- Minimizing data noise without sacrificing authenticity

- Managing and reducing latency for real-time applications

- Ensuring sufficient computational power for rapid data processing

- Balancing the trade-off between automated corrections and artistic intent

Conclusion

In conclusion, the integration process between motion capture technologies and state-of-the-art animation design software represents a profound convergence of creative artistry and technical sophistication. The journey from data acquisition—with its emphasis on **high-fidelity motion capture systems**, sensor precision, and robust pre-processing pipelines—to the intricate integration framework that enables both real-time and post-processed animation reflects a major leap forward for the industry. The architectural design that supports this integration, built around standardized protocols, dynamic APIs, and versatile data-format compatibility, has opened up new avenues for accelerating production workflows and enhancing the overall quality of animated content. Studios and individual creators benefit immensely from these advancements, as automated pipelines and efficient processing methods minimize manual input while preserving the authenticity and expressiveness of captured movements.

Recap of the Integration Process and Its Impact on Modern Animation Design

Throughout this discussion, we have examined the multi-layered processes that underpin effective motion capture integration. From the initial capture of complex human movements using sophisticated sensor technologies to the subsequent data cleaning and synchronization that primes the motion data for editorial pipelines, each step is critical in transforming raw inputs into a polished animation product. The architectural strategies that unify different software platforms, and the nuanced negotiations between real-time and post-processed workflows, have collectively contributed to elevating both the technical and creative standards across the industry. The capacity to rapidly translate natural movement into lifelike animation has not only enhanced realism but also provided artists with greater opportunities for creative exploration, transforming what was once a labor-intensive process into one that is far more automatic and refined. As such, the integration of these methodologies continues to reshape the landscape of animation design, offering new standards in quality, speed, and versatility.

Future Outlook on Emerging Motion Capture Innovations in Animation Software

Looking ahead, the evolution of motion capture innovations will undoubtedly continue to reshape the animation landscape. Emerging developments such as AI-driven predictive modeling, enhanced sensor miniaturization, and further improvements in data processing algorithms are poised to offer even greater precision and fluidity. Moreover, the ongoing convergence of technologies in virtual reality, augmented reality, and mixed reality is creating additional opportunities for real-time interaction, making the creative process more immersive than ever before. Future systems will likely provide even tighter integration across various platforms, ensuring that data captured in one environment is immediately and accurately reflected in another. This cross-pollination of creative tools will enable designers and directors to adapt rapidly and iterate dynamically on projects, thereby balancing the technical demands of high-resolution motion capture with expansive creative freedom. As the industry landscape evolves, the commitment to maintaining and advancing these integrated pipelines will remain a core focus for professionals seeking to push the boundaries of what is technically achievable and aesthetically inspiring.

Final Thoughts on Balancing Technical Implementation with Creative Freedom

In the final analysis, the successful convergence of motion capture systems with animation design tools is not solely a matter of overcoming technical challenges; it is also a delicate balancing act between meticulous engineering and unfettered creativity. Technological advancements have empowered artists to realize their visions with unprecedented detail and authenticity, yet these benefits are most valuable when matched with the creative insights of designers who understand the nuances of storytelling. Striking an optimal balance between automation and artistic control remains a critical demand for every production pipeline. As the landscape continues to evolve, studios must remain agile, investing not only in cutting-edge hardware and efficient integration frameworks but also in cultivating creative talent that can harness these technologies in innovative ways. With consistent enhancements in data capture, processing, and synchronization methods, the next generation of animation tools is set to empower creators to produce works that not only reflect high technical achievement but also resonate deeply on an emotional level. The future of animation is as much about elevating the human element as it is about refining the computing process, creating a symbiotic relationship where technology amplifies art, and art, in turn, inspires further technological innovation.

Also in Design News

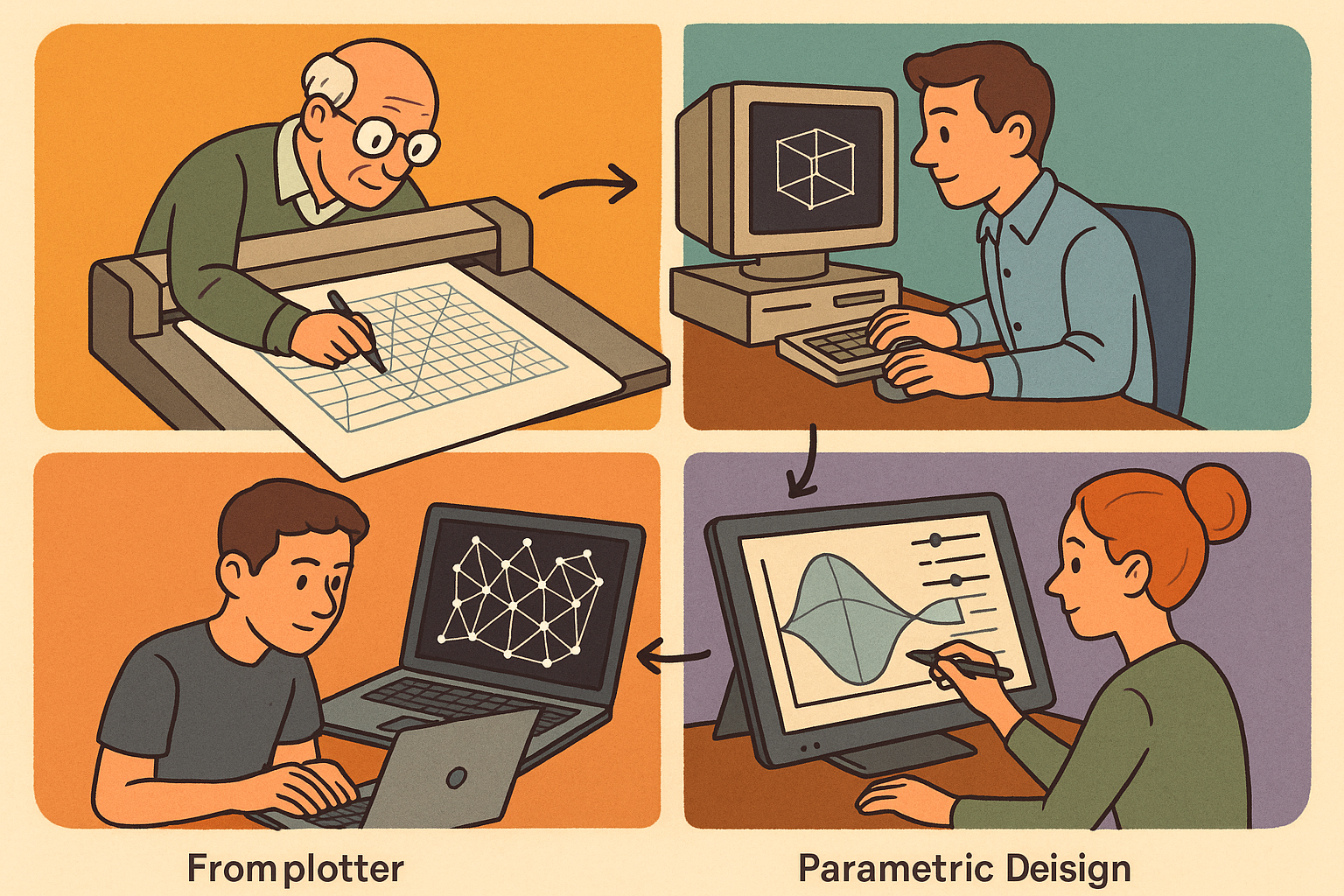

Design Software History: From Plotters to Procedural Intent: A Technical History of Generative and Parametric Design Software

January 04, 2026 13 min read

Read More

Semantic Meshes: Enabling Analytics-Ready Geometry for Digital Twins

January 04, 2026 12 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …