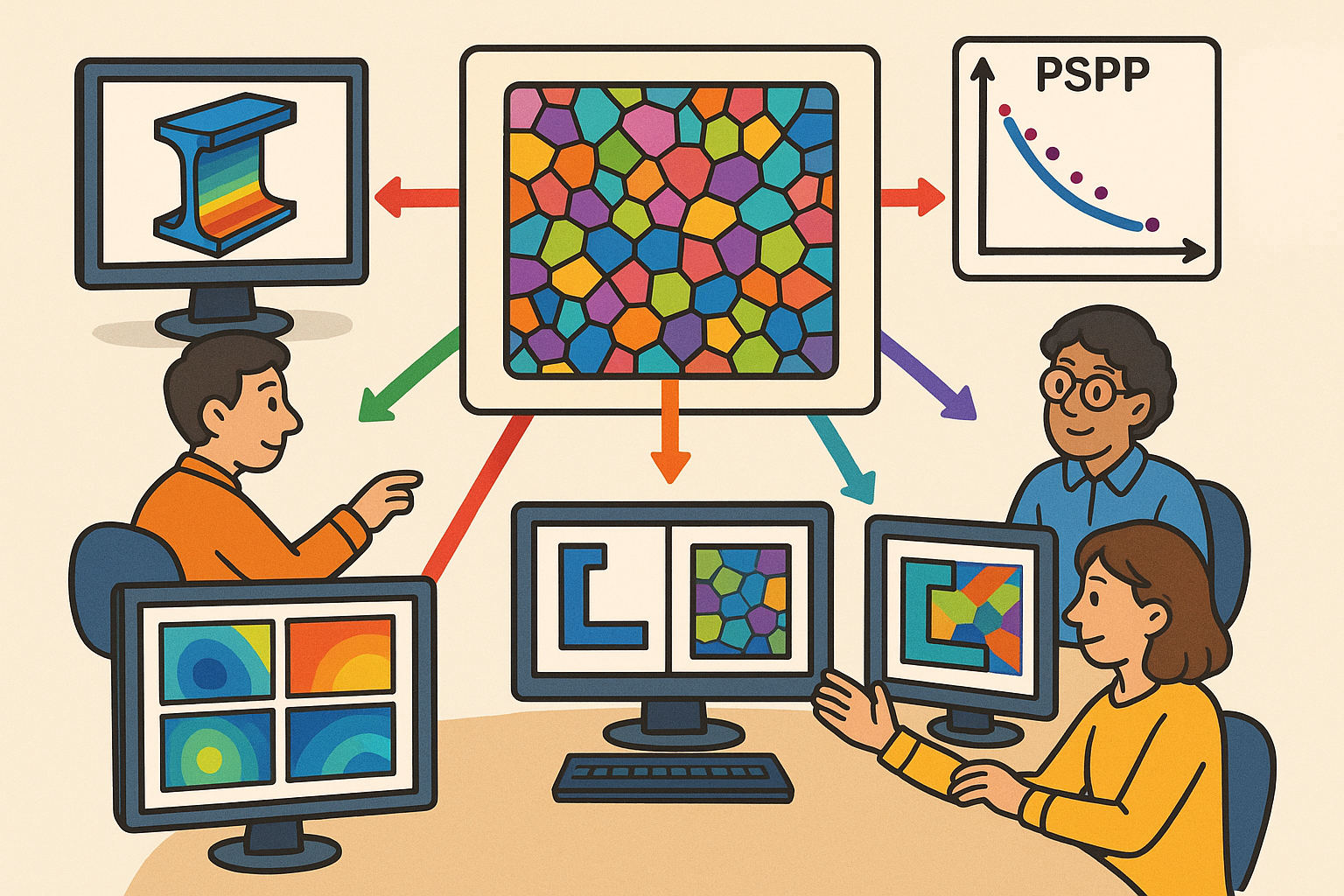

Multiscale Design Software: PSPP Integration and Microstructure-Aware Co-Optimization

November 24, 2025

Why Multiscale Modeling Matters Now

The Process–Structure–Property–Performance Promise

The central value of modern engineering design is no longer just predicting stiffness or checking yield. It is closing the loop between how parts are made and how they perform in the field. The **process–structure–property–performance** chain, often abbreviated PSPP, is the connective tissue that lets a designer trace a click in CAD to a knob on the manufacturing line and to a metric in service. In this framing, **multiscale modeling** is not an academic luxury; it is the engine that maps heat input, cooling rate, deformation path, and chemistry to texture, phase fractions, grain size, precipitates, porosity, and residual stresses—then carries those microstructural features up to anisotropic moduli, cyclic plasticity, fracture resistance, and ultimately durability under mission loads. That climb through scales—process to microstructure to properties to performance—ensures design decisions reflect what is actually buildable and reliable, not what an idealized material card assumes.

Design teams can operationalize this promise with a few practical shifts: treat microstructure as a design variable, make manufacturing parameters first-class citizens in optimization, and expose local property tensors to the structural solver rather than blanket isotropy. When done well, this elevates material selection into **microstructure design**, where targets such as grain aspect ratio, orientation distribution function (ODF), or precipitate size distribution are tuned to the part’s feature-level stress states and thermal history. The result is fewer surprises after build, fewer emergency heat-treat tweaks, and confidence that the computed margin—including uncertainty bands—survives reality.

- Connect sliders in CAD/CAE to process controls: scan speed, beam power, toolpath topology, soak times.

- Represent microstructure explicitly: ODFs, voxelized porosity fields, phase maps, and calibrated residual stresses.

- Propagate uncertainty: include variability in grain size and void fraction as random fields feeding fatigue life models.

Where Single-Scale Models Break Down in Practice

Classical single-scale assumptions—uniform properties, isotropy, and temperature-independent behavior—break when processes imprint the material with structure. Additive manufacturing is a canonical example: melt pool dynamics and layerwise reheating generate columnar grains, texture gradients, lack-of-fusion voids, and **residual stress** patterns that are not captured by a single isotropic material card. Parts then show directional stiffness, asymmetric yielding, and location-dependent crack initiation, defeating predictions that ignore microstructure. Likewise, heat-treated or formed metals develop local textures and precipitate morphologies that strongly govern fatigue life and ratcheting, especially near fillets and holes where multiaxial stress states align with preferred slip systems. In composites, tow waviness and voids shift lamina stiffness and alter failure envelopes, moving knee points in compression-after-impact and changing the mode mixity that drives delamination growth.

These gaps are not minor nuisances; they create large and systematic errors. Engineers see conservative safety factors eroded by unexpected brittleness or, conversely, overdesign because models do not credit beneficial textures. The missed physics compels expensive redesign loops late in programs. **Multiscale modeling** corrects this by embedding microstructural state variables—ODF, grain size distributions, precipitate volume fraction, porosity topology—into the constitutive response and by letting the macro model query local RVE behavior as load paths evolve. That step restores predictive power where single-scale models saturate.

- Additive parts: anisotropy, lack-of-fusion porosity, and layerwise residual stress require anisotropic and history-dependent laws.

- Heat-treated and formed metals: texture and precipitation kinetics must inform cyclic plasticity and fatigue initiation.

- Composites: tow waviness, in situ consolidation porosity, and cure gradients alter lamina properties and failure modes.

Design Questions Unlocked by Multiscale Thinking

When the PSPP linkage is active, the design conversation shifts from “Which alloy?” to “Which microstructure and process path deliver the target KPI with the least cost and risk?” That opens design questions that were previously intractable or too slow to evaluate. Instead of assuming a fixed property card, the solver can treat **build orientation**, scan strategy, and hatch spacing as optimization variables and compute their influence on stiffness-to-weight, buckling margins, and plastic shakedown. Heat-treatment schedules are no longer guessed; they become tunable controls whose effects on yield, **fatigue**, and creep are evaluated at notch roots and thin ribs where gradients are strongest. For composites, ply-level microstructure (fiber misalignment distributions, void statistics, resin-rich pockets) informs lamina properties, which roll up to laminate performance and failure envelopes.

Concrete questions become answerable in day-to-day design cycles:

- Which build orientation and scan strategy maximize stiffness-to-weight for a given alloy and geometry while respecting thermal distortion and support constraints?

- How do quench delays and temper temperatures shift yield strength, cyclic hardening, and creep rates at critical features?

- Which microstructural targets—grain size, phase fraction, ODF—achieve the KPIs with the lowest cost and lowest variability risk?

The payoff is not only better parts; it is faster iteration with guardrails. **Material-aware generative design** avoids brittle, unmanufacturable results by embedding process feasibility and microstructural realism. The design space expands, but the failure space shrinks.

Quantified Business Impact and Decision Speed

The business case for multiscale modeling is speed with confidence. Programs bleed time when late-stage tests invalidate assumptions baked into single-scale models. By integrating microstructure into the design workflow, teams reduce the probability of late failures and shorten redesign loops. Quantified uncertainty—through variability in microstructure and process—enables tighter safety factors without loss of trust, freeing weight and cost. Moreover, integrating manufacturing realities into generative design avoids concepts that would crack, creep, or distort, saving build cycles and rework. These benefits are tangible and compounding across portfolios.

Translating those gains into operational practice relies on three levers:

- Fewer late-stage test failures: predictive models flag microstructure-sensitive hot spots early, enabling targeted mitigations.

- Tighter safety factors via **quantified uncertainty**: models propagate random fields of porosity and texture to life estimates, revealing what truly drives risk.

- Material-aware generative design: design spaces are constrained by process windows and microstructural feasibility, reducing dead-ends and build scrap.

Organizations that adopt these capabilities report improved first-time-right rates, better alignment between design and manufacturing, and a cultural shift from chasing properties to engineering microstructures. The critical ingredient is traceability: each decision connects back to calibrated models, test data, and process parameters, making the results auditable and repeatable. In other words, **decision quality** rises alongside velocity.

Methods That Link Microstructure to Macro Behavior

RVEs, Homogenization, and FFT Spectral Solvers

At the foundation are representative volume elements (RVEs) that encode grains, phases, voids, and inclusions. From these, homogenization computes effective anisotropic properties for use at the component scale. First-order homogenization delivers average fields and effective tensors, sufficient for many elastic and monotonic regimes, while second-order schemes capture gradients and length-scale effects important for localization and shear bands. For periodic or statistically homogeneous microstructures, **FFT-based spectral solvers** excel: they solve the Lippmann–Schwinger equation in Fourier space on a regular voxel grid, need no meshing, and scale well on GPUs. High phase contrasts (e.g., pores vs. metal) slow the basic fixed-point scheme, so accelerated or conjugate-gradient variants are the usual choice in that regime. The combination of a voxelized microstructure and an FFT solver enables fast sweeps over load cases to produce property surfaces parameterized by texture and porosity.

Homogenization is not a monolith; the choices matter. Periodic boundary conditions reduce boundary artifacts, but for nonperiodic textures, windowed FFT or hybrid schemes with finite elements can be preferable. When inelasticity or contact between phases is present, spectral solvers need careful regularization and stabilized schemes to maintain convergence. To convert RVE responses into usable macro inputs, designers build lookup tables or reduced-order models of the RVE map from strain/temperature to stress and tangent. That map becomes the **anisotropic material model** in the structural analysis, calibrated to the microstructure at hand, not an average from a datasheet.

- Use voxel volumes (HDF5) or level sets to represent RVE geometry; maintain alignment with ODFs from EBSD.

- Apply periodic BCs for FFT solvers; consider second-order homogenization when gradients matter.

- Export consistent tangents so that Newton iterations in the macro finite element solve keep their quadratic convergence.
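To make the fixed-point idea behind FFT homogenization concrete, here is a minimal sketch of the basic scheme on a 1D scalar conduction problem. It is a toy under stated assumptions, not a production solver: the function name `fft_homogenize_1d`, the choice of reference conductivity, and the two-phase laminate are all illustrative. In 1D the exact effective conductivity is the harmonic mean, which gives a convenient check.

```python
import numpy as np

def fft_homogenize_1d(k, E=1.0, tol=1e-10, max_iter=10_000):
    """Effective conductivity of a periodic 1D cell via the basic FFT scheme.

    k : conductivity per voxel; E : prescribed mean gradient.
    Assumption: reference medium k0 is the midpoint of min/max conductivity.
    """
    k = np.asarray(k, dtype=float)
    k0 = 0.5 * (k.min() + k.max())
    e = np.full_like(k, E)                     # initial guess: uniform gradient
    for _ in range(max_iter):
        tau_hat = np.fft.fft((k - k0) * e)     # polarization in Fourier space
        e_hat = -tau_hat / k0                  # 1D Green operator for nonzero frequencies
        e_hat[0] = E * k.size                  # zero frequency carries the prescribed mean
        e_new = np.fft.ifft(e_hat).real
        converged = np.max(np.abs(e_new - e)) < tol
        e = e_new
        if converged:
            break
    return np.mean(k * e) / E                  # mean flux divided by mean gradient

# Toy laminate: half the voxels at conductivity 1, half at 10 (illustrative values).
phases = np.where(np.arange(64) < 32, 1.0, 10.0)
k_eff = fft_homogenize_1d(phases)
k_harmonic = 1.0 / np.mean(1.0 / phases)
print(k_eff, k_harmonic)
```

The contraction factor of this iteration is (k_max - k_min) / (k_max + k_min), so the iteration count grows as the contrast between phases increases.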

Crystal Plasticity FEA for Texture-Sensitive Plasticity

When plasticity is texture-dependent, **crystal plasticity finite element methods (CPFEM)** are the gold standard. They treat slip system kinetics at the grain level, linking ODF to directional yield, hardening, and cyclic ratcheting. CPFEM captures how grains with favorable orientation yield first, redistribute load, and accumulate damage, explaining anisotropy in yield loci, Bauschinger effects, and mean-stress relaxation under thermomechanical cycles. This is indispensable for additive and formed metals where columnar grains and deformation paths create strong textures. CPFEM also supports advanced features such as kinematic hardening with backstress evolution, twinning, and creep on separate mechanisms, allowing robust simulation of mission profiles with dwell and hold times.

CPFEM’s computational cost is real but manageable with smart strategies. Use polycrystal RVEs with representative grain counts and mesh quality adequate for local gradients. Then accelerate with GPU solvers or **hyper-reduction** techniques that preserve essential modes while trimming degrees of freedom. Precomputing CPFEM response snapshots across strain paths and temperatures paves the way for fast online surrogates in system-level analyses. Integration with fatigue indicators—such as critical plane micro-slip metrics—provides more faithful initiation predictions than scalar stress-life approaches. The result is a constitutive engine that speaks the language of microstructure and cycles, directly addressing the questions designers care about.

- Calibrate slip kinetics from EBSD-informed ODFs and cyclic tests; propagate uncertainties via Bayesian posteriors.

- Leverage reduced-order manifolds of CPFEM states for rapid RVE queries during FE2 or design sweeps.

- Feed micro-slip or energy-based fatigue indicators from CPFEM into life models aligned with observed crack orientations.
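The claim that favorably oriented grains yield first can be illustrated with Schmid factors, the simplest orientation measure underlying crystal plasticity. The sketch below assumes an FCC crystal with the twelve {111}<110> slip systems and uniaxial loading expressed in the crystal frame; the helper names are hypothetical, and a real CPFEM code adds hardening and rate effects on top of this geometry.

```python
import numpy as np

def fcc_slip_systems():
    """Return the 12 FCC slip systems as (unit plane normal, unit slip direction)."""
    normals = [(1, 1, 1), (-1, 1, 1), (1, -1, 1), (1, 1, -1)]
    directions = [(1, 1, 0), (1, -1, 0), (1, 0, 1),
                  (1, 0, -1), (0, 1, 1), (0, 1, -1)]
    systems = []
    for n in normals:
        for d in directions:
            if np.dot(n, d) == 0:              # slip direction must lie in the plane
                systems.append((np.array(n) / np.sqrt(3.0),
                                np.array(d) / np.sqrt(2.0)))
    return systems

def schmid_factors(load_dir):
    """Schmid factor |cos(phi) cos(lambda)| per slip system for uniaxial loading."""
    load = np.asarray(load_dir, dtype=float)
    load = load / np.linalg.norm(load)
    return np.array([abs(np.dot(load, n) * np.dot(load, d))
                     for n, d in fcc_slip_systems()])

m_001 = schmid_factors([0, 0, 1]).max()
m_111 = schmid_factors([1, 1, 1]).max()
print(m_001, m_111)
```

A grain loaded along [001] has a higher maximum Schmid factor than one loaded along [111], so it reaches its critical resolved shear stress at a lower applied stress.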

Phase-Field and Precipitation/Transformation Kinetics

Static microstructure is the exception; evolution is the rule. **Phase-field models** describe grain growth, phase transformations, and precipitate nucleation/growth/coarsening under thermal and mechanical fields. They resolve interfaces via diffuse order parameters and couple to diffusion and elasticity, capturing how curvature, misfit strain, and local chemistry drive morphology. In heat-treated alloys, phase-field outputs—precipitate size distributions, number densities, and phase fractions—feed directly into strength models via Orowan looping or shearing mechanisms. Under additive thermal histories, phase-field can predict retained phases, martensite lenses, or δ-phase formation that embrittles certain nickel alloys.

These kinetic models enable time-temperature-process maps grounded in physics rather than lookup tables. Designers can explore how soak times, quench delays, and temper schedules push the microstructure toward targets that optimize yield, creep, or fatigue life. Coupling to process simulations—where temperature fields come from moving heat sources or forming work—closes the loop. When computational budgets are tight, **neural operators** and Gaussian-process surrogates approximate phase-field dynamics across parameter spaces, predicting distributions in milliseconds. The benefit is practical: microstructure evolution becomes a tunable, simulated control knob with quantified confidence, and its outputs integrate cleanly into CPFEM or homogenization stages.

- Use CALPHAD-informed free energies to anchor phase-field thermodynamics.

- Extract precipitate statistics for strength models: size, spacing, and volume fraction trajectories.

- Surrogate the evolution with physics-informed neural networks (PINNs) trained on high-fidelity runs across thermal paths.
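As a much-reduced stand-in for the kinetics-to-strength handoff described above, the sketch below chains a cubic (LSW-type) coarsening law to a simplified Orowan estimate. Everything numeric is a placeholder rather than measured data. The spacing formula is one common simplified estimate for spherical particles, and the function names are hypothetical.

```python
import numpy as np

def lsw_radius(t, r0, K):
    """Mean precipitate radius from the cubic coarsening law r^3 = r0^3 + K t."""
    return np.cbrt(r0**3 + K * np.asarray(t, dtype=float))

def orowan_increment(r, f, G, b):
    """Simplified Orowan strengthening, delta_tau ~ G b / L.

    Assumption: L is the planar centre-to-centre spacing r * sqrt(2 pi / (3 f))
    for spherical particles at fixed volume fraction f.
    """
    spacing = r * np.sqrt(2.0 * np.pi / (3.0 * f))
    return G * b / spacing

# Placeholder inputs (SI units), chosen only to exercise the functions.
hours = np.array([0.0, 1.0, 4.0, 16.0])
radius = lsw_radius(hours * 3600.0, r0=5e-9, K=1e-29)
d_tau = orowan_increment(radius, f=0.05, G=80e9, b=0.25e-9)
for h, r, s in zip(hours, radius, d_tau):
    print(f"{h:5.1f} h  r = {r * 1e9:6.2f} nm  delta_tau = {s / 1e6:7.1f} MPa")
```

At fixed volume fraction, longer soaks coarsen the particles, widen their spacing, and reduce the Orowan contribution, which is the kind of trend a heat-treatment slider would expose.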

Scale Coupling Patterns: FE2, Offline-to-Online, and Downscaling

Linking micro to macro requires patterns that balance fidelity and cost. FE2 (finite element squared) nests an RVE solve at each macro quadrature point, letting the macro model query micro response on-the-fly—a powerful but expensive approach. Acceleration comes from **caching**, hyper-reduction, and batched GPU queries. Offline-to-online strategies precompute RVE response surfaces or snapshot manifolds, then deploy **reduced-order models** during system analysis, retaining accuracy over the expected strain/temperature envelope. Downscaling maps macro fields—strain, temperature, rate—back to microstructural state updates (texture rotation, phase fraction evolution) that then update the macro constitutive model. The glue between them is robust field mapping, consistent tangents, and error estimators that trigger refinement when excursions leave the trained domain.

Choosing a coupling pattern is a design decision. Early concept work benefits from surrogates and snapshot models for speed; late-stage verification can spend cycles on FE2 in critical regions. Hybrid approaches are common: use offline ROMs for most of the model and switch to live RVEs at hotspots with large uncertainty or high sensitivity. These patterns elevate **progressive fidelity** as a core UX principle: quick answers early, high-confidence answers at release, all within one pipeline. With the right orchestration, teams can make this seamless to the designer, hiding complexity behind material models that adapt their fidelity to the task at hand.

- FE2 for high-criticality regions; ROMs for bulk regions.

- Error estimators trigger RVE refresh when macro states leave surrogate validity.

- Downscaling updates ODF and phase fractions in response to macro loading history.
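The hybrid pattern (surrogate in the bulk, live RVE where the surrogate is out of its depth) can be sketched in a few lines. This is a toy under loud assumptions: `rve_stress` is a made-up saturating law standing in for an expensive RVE solve, the "error estimator" is a plain range check on the training envelope, and the class name `HybridMaterial` is hypothetical.

```python
import numpy as np

def rve_stress(strain):
    """Stand-in for an expensive RVE solve (hypothetical saturating law)."""
    return 200e3 * strain / (1.0 + abs(strain) / 0.01)

class HybridMaterial:
    """Offline table inside the trained strain range, cached live solves outside it."""

    def __init__(self, train_limit=0.02, n_train=41):
        self.train_limit = train_limit
        self.grid = np.linspace(-train_limit, train_limit, n_train)
        self.table = np.array([rve_stress(e) for e in self.grid])  # offline stage
        self.cache = {}
        self.rve_calls = 0                     # counts online fallback solves only

    def stress(self, strain):
        if abs(strain) <= self.train_limit:    # inside the surrogate's validity range
            return float(np.interp(strain, self.grid, self.table)), "surrogate"
        key = round(strain, 6)                 # nearby states share one cached solve
        if key not in self.cache:
            self.cache[key] = rve_stress(strain)
            self.rve_calls += 1
        return self.cache[key], "rve"

mat = HybridMaterial()
print(mat.stress(0.005))    # answered by the offline table
print(mat.stress(0.05))     # outside the envelope: triggers a live solve
print(mat.stress(0.05))     # same state again: served from the cache
print("live RVE solves:", mat.rve_calls)
```

A production estimator would look at more than the strain range (temperature, rate, path history), but the control flow stays the same.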

Data and Inference: Sources, Descriptors, and Parameter Estimation

Microstructure enters the pipeline through data. EBSD yields ODFs, grain sizes, and misorientations; micro-CT provides porosity shapes and distributions; in-situ and offline process simulations produce thermal histories and cooling rates. From these sources, statistical descriptors—two-point correlation functions, lineal-path and chord-length distributions, and graph-based grain topology—compress structure into parameters usable by models and surrogates. The descriptors enable learning mappings from process controls to structure and from structure to properties. To make models predictive rather than descriptive, **Bayesian calibration** estimates kinetic and constitutive parameters from images and tests, exposing posterior uncertainty that carries into performance predictions.
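Of the descriptors listed above, the two-point correlation is the easiest to show in code. The sketch below computes the periodic two-point autocorrelation of a binary phase image with FFTs; the function name and the striped test image are illustrative assumptions, and real micrographs would first need segmentation.

```python
import numpy as np

def two_point_autocorrelation(phase):
    """Periodic two-point autocorrelation S2 of a phase indicator array.

    S2[r] is the probability that two points separated by lag r (with periodic
    wrap-around) both fall in the phase; S2 at zero lag is the volume fraction.
    """
    phase = np.asarray(phase, dtype=float)
    F = np.fft.fftn(phase)
    return np.fft.ifftn(F * np.conj(F)).real / phase.size

# Toy image: an 8x8 cell whose first four columns belong to the phase.
img = np.zeros((8, 8))
img[:, :4] = 1.0
S2 = two_point_autocorrelation(img)
print(S2[0, 0], S2[0, 4], S2[1, 0])
```

Because the result is a fixed-size array regardless of how the grains happen to be arranged, it is a convenient input for the process-to-structure and structure-to-property mappings discussed here.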

Data plumbing matters as much as algorithms. Microstructure datasets live best in versioned, queryable stores with provenance: process parameters, heat histories, sample orientation, and test conditions. During calibration, priors reflect physics (e.g., diffusion-limited coarsening exponents), and likelihoods consider measurement noise and segmentation errors. The outputs are not single numbers but distributions, arming designers with confidence intervals. In practice, these methods unlock calibrated, reusable **material models** that reflect the real factory, not a generic handbook, and that stay current as new data arrives. The reward is traceable predictions and faster convergence between models and measurements.

- EBSD for ODF and grain metrics; micro-CT for void topology; thermography and process sims for thermal histories.

- Descriptors: two-point correlations, chord-lengths, and grain graphs that capture morphology compactly.

- Bayesian parameter estimation with credible intervals that propagate to life and stiffness predictions.
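To show what "distributions, not single numbers" looks like in the smallest possible case, the sketch below calibrates one rate constant on a grid. The assumptions are deliberately simple: a linear model `y = K t` (for instance, cubed radius growth under a cubic coarsening law), Gaussian noise of known size, a flat prior over the grid, and synthetic data with a fixed perturbation pattern instead of random noise. All names are hypothetical.

```python
import numpy as np

def grid_posterior(t, y, sigma, k_grid):
    """Posterior over K on a grid for y = K t + Gaussian noise, with a flat prior."""
    resid = y[None, :] - k_grid[:, None] * t[None, :]
    log_like = -0.5 * np.sum((resid / sigma) ** 2, axis=1)
    post = np.exp(log_like - log_like.max())   # subtract the max for numerical stability
    return post / post.sum()

def credible_interval(k_grid, post, level=0.95):
    """Equal-tailed credible interval read off the discrete posterior CDF."""
    cdf = np.cumsum(post)
    lo = k_grid[np.searchsorted(cdf, (1.0 - level) / 2.0)]
    hi = k_grid[np.searchsorted(cdf, (1.0 + level) / 2.0)]
    return lo, hi

# Synthetic data (arbitrary units): true K = 2, alternating +/- sigma perturbation.
t = np.arange(1.0, 9.0)
sigma, k_true = 0.5, 2.0
y = k_true * t + sigma * np.where(np.arange(t.size) % 2 == 0, 1.0, -1.0)

k_grid = np.linspace(0.0, 4.0, 4001)
post = grid_posterior(t, y, sigma, k_grid)
k_mean = float(np.sum(k_grid * post))
lo, hi = credible_interval(k_grid, post)
print(f"posterior mean {k_mean:.3f}, 95% credible interval [{lo:.3f}, {hi:.3f}]")
```

With more than a handful of parameters the grid gives way to MCMC or variational methods, but the output has the same shape: a posterior whose width carries forward into life and stiffness predictions.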

Surrogates, Order Reduction, and Uncertainty Quantification

To keep design loops fast, surrogates approximate expensive RVEs and evolution models. **Neural operators**, PINNs, and Gaussian processes map from microstructure descriptors and loading paths to stress responses or property tensors. Model order reduction—POD/PGD for state compression and hyper-reduction techniques like DEIM and ECSW—trims the cost of evaluating constitutive updates. The trick is to train across the region of the state space the product will visit and to quantify extrapolation risk. Uncertainty quantification wraps the whole stack: random fields represent microstructural variability across the part, and global sensitivity analysis ranks drivers, revealing whether porosity, ODF skew, or precipitate spacing dominates a KPI.

Surrogate governance is crucial. Version each surrogate with its training data, validation metrics, and domain of validity; include a **confidence score** at runtime that decays as the query drifts from the training manifold. For design space exploration (DSE), batch RVE queries to GPUs, cache repeated states, and use active learning to place new high-fidelity runs where the surrogate is uncertain. The outcome is a responsive, trustworthy loop: interactive sliders adjust grain size or porosity; live plots show stiffness, life, and uncertainty bands; and the system automatically enriches itself when the designer ventures into novel territory.

- POD/PGD plus DEIM/ECSW for fast constitutive updates within Newton solves.

- Random-field microstructure with Karhunen–Loève expansions for spatial variability.

- Sobol indices to rank microstructural drivers of performance; inform robust optimization.
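The state-compression half of this toolbox, POD, amounts to a truncated SVD of a snapshot matrix. The sketch below assumes synthetic snapshots built from two spatial modes, so two POD modes should reproduce them to round-off; the function name and the data are illustrative, and hyper-reduction (DEIM/ECSW) is not shown.

```python
import numpy as np

def pod_basis(snapshots, r):
    """Leading r POD modes (left singular vectors) of a snapshot matrix.

    snapshots : array of shape (n_dof, n_snapshots), one state per column.
    """
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :r], s

# Synthetic snapshots: every column is a mix of the same two spatial shapes.
x = np.linspace(0.0, 1.0, 50)
a = np.linspace(0.5, 2.0, 10)
b = np.linspace(-1.0, 1.0, 10)
snapshots = np.outer(np.sin(np.pi * x), a) + np.outer(x, b)

basis, sing_vals = pod_basis(snapshots, r=2)
coeffs = basis.T @ snapshots                   # reduced coordinates (2 per snapshot)
recon_error = np.max(np.abs(basis @ coeffs - snapshots))
print(sing_vals[:4], recon_error)
```

In practice the singular-value decay tells you how many modes to keep, and the retained basis defines the reduced space in which constitutive updates are evaluated.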

Implementing Multiscale Pipelines in Design Software

Data Architecture and Interoperability Foundations

Practical adoption starts with a data spine that carries microstructure and process information through the stack. Representations span voxel volumes (HDF5) holding phase/porosity fields, signed distance functions (SDF) representing inclusions or voids implicitly, and parametric statistics such as ODFs and grain size distributions. Toolchains like DREAM.3D, OOF2, and MTEX produce and analyze these microstructures; their outputs must integrate with CAE solvers that consume **anisotropic and cyclic material models**. Interoperability hinges on stable schemas that carry units, frames, and provenance, and on adapters that translate between voxel grids and finite element meshes while preserving statistics critical to the constitutive response.

Metadata is nonnegotiable. Each dataset should tie back to process parameters (scan speed, power, path), heat histories, calibration tests, and sample orientations, all versioned and linked to PLM and model-based definition (MBD). This lets a designer trace a property outlier to a specific build file or furnace profile and roll forward fixes. Material cards become more than curves: they are bundles of surrogates, descriptors, and RVE references with V&V reports. With this foundation, microstructure-aware analysis becomes repeatable and auditable, and teams can safely scale from pilot projects to product lines without losing control of the details.

- Standardize on HDF5 for volumetric microstructure and JSON/YAML for descriptor sets with units and frames.

- Bridge tools: DREAM.3D/OOF2/MTEX for structure; export to CAE with tensors and consistent tangents.

- Embed provenance and link to PLM/MBD so changes to process or calibration propagate through the stack.
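A descriptor set that carries units, frame, and provenance could look like the sketch below. The field names and example values are hypothetical and do not follow any published schema; the point is only that the record round-trips through JSON without losing the context needed to interpret it.

```python
import json
from dataclasses import dataclass, asdict, field

@dataclass
class MicrostructureDescriptor:
    """Hypothetical descriptor record; every field name here is illustrative."""
    sample_id: str
    frame: str                                 # coordinate frame the values refer to
    grain_size_um: float
    porosity_fraction: float
    units: dict = field(default_factory=lambda: {
        "grain_size_um": "micrometre", "porosity_fraction": "1"})
    provenance: dict = field(default_factory=dict)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "MicrostructureDescriptor":
        return cls(**json.loads(text))

record = MicrostructureDescriptor(
    sample_id="coupon-A",
    frame="build (z along build direction)",
    grain_size_um=42.0,
    porosity_fraction=0.003,
    provenance={"process": {"scan_speed_mm_s": 900, "power_W": 280},
                "source": "EBSD + micro-CT"},
)
restored = MicrostructureDescriptor.from_json(record.to_json())
print(restored == record)
```

Large voxel fields would live in HDF5 alongside such a record, with the JSON holding the identifiers that link the two.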

Workflow Patterns Built on the PSPP Loop

A robust pipeline structures work around the PSPP loop—Process → Structure → Property → Performance—with clear contracts at each hop. For additive manufacturing, the path is: scan strategy and build orientation drive thermal simulation; thermal histories feed microstructure prediction (texture, porosity, phases); those descriptors parameterize an anisotropic material model; and the structural solver verifies margins under service loads with uncertainty bands. Metals processing follows a parallel thread: forming and heat-treat simulations produce texture and precipitate kinetics that inform cyclic plasticity models and **fatigue life** near notches. Composites chain ply microstructure to lamina properties, laminate stacking, and buckling/failure checks, with tow waviness and voids explicitly influencing the outcome.

Successful teams codify these patterns as templates that run end-to-end and expose just the right controls to designers. Examples of controls that belong on the dashboard:

- AM: build orientation, hatch spacing, power/speed pairs, preheat; outputs include anisotropic stiffness and residual stress maps.

- Metals: quench delay, temper set-points, strain path in forming; outputs include ODF evolution, precipitate metrics, and cyclic parameters.

- Composites: cure cycle, compaction pressure, tow path; outputs include lamina stiffness/failure envelopes adjusted for waviness/voids.

With these loops operational, the **design space** becomes process-aware. Optimization can co-vary shape and process parameters to hit KPIs while respecting manufacturability. The final step is governance: each loop has V&V gates that compare predicted structures/properties to measurements, preventing drift and ensuring guardrails remain sturdy.

Associativity in CAD/CAE and Material Tensors

Microstructure is not uniform; it is feature- and process-dependent. Associativity in CAD/CAE ties microstructure variants to geometric features (ribs, bosses, thin walls) and to local processes (welds, HAZ, scan re-melts). Build orientation becomes a first-class parameter in the model tree; change it, and the toolpath updates, thermal fields change, the ODF rotates, and the **material tensors** in the structural model are rotated and rescaled accordingly. This is not just convenience; it is correctness, ensuring that edits propagate to the simulation assumptions. Similarly, associativity allows localized refinements: designate a critical fillet to use live RVE response while the rest of the part runs on a surrogate, maintaining speed without losing fidelity where it matters most.

Implementing associativity requires consistent coordinate frames and clear data ownership. Microstructure tensors need to be attached to elements or regions with transformations that reflect the CAD feature’s orientation. Field mappers handle mesh changes during optimization, preserving microstructure alignment. For welded or heat-affected zones, process-aware regions can carry distinct kinetics and constitutive parameters. When designers see material behavior update dynamically with their edits, they build intuition about **microstructure–performance linkages** and avoid designs that fight the process. It is where UX and physics meet to create leverage.

- Attach ODF-based tensors to CAD features with frame-aware transformations.

- Propagate edits: orientation changes trigger updates to toolpaths, thermal fields, and constitutive inputs.

- Localize fidelity: hotspots get live RVEs; other regions use validated surrogates for throughput.
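The frame-aware transformation in the first bullet is, at its core, a fourth-order tensor rotation. The sketch below builds a cubic stiffness tensor and rotates it about the z axis; the elastic constants are roughly copper-like and purely illustrative, and the function names are hypothetical.

```python
import numpy as np

def cubic_stiffness(c11, c12, c44):
    """Fourth-order stiffness tensor of a cubic crystal in its own axes."""
    d = np.eye(3)
    C = (c12 * np.einsum("ij,kl->ijkl", d, d)
         + c44 * (np.einsum("ik,jl->ijkl", d, d) + np.einsum("il,jk->ijkl", d, d)))
    for a in range(3):
        C[a, a, a, a] += c11 - c12 - 2.0 * c44   # cubic anisotropy term
    return C

def rot_z(angle_deg):
    """Rotation matrix whose rows are the new axes expressed in the old frame."""
    c, s = np.cos(np.radians(angle_deg)), np.sin(np.radians(angle_deg))
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

def rotate_stiffness(C, R):
    """C'_ijkl = R_ia R_jb R_kc R_ld C_abcd."""
    return np.einsum("ia,jb,kc,ld,abcd->ijkl", R, R, R, R, C)

C = cubic_stiffness(168.0, 121.0, 75.0)        # GPa, illustrative values
C45 = rotate_stiffness(C, rot_z(45.0))
C90 = rotate_stiffness(C, rot_z(90.0))
print(C[0, 0, 0, 0], C45[0, 0, 0, 0], C90[0, 0, 0, 0])
```

A 90 degree turn leaves a cubic tensor unchanged while a 45 degree turn raises the axial stiffness component to (c11 + c12) / 2 + c44, which is exactly the kind of orientation sensitivity that has to follow a CAD edit into the solver.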

Compute and Orchestration for Throughput

Multiscale pipelines only fly with the right compute backbone. Co-simulation couples solvers via FMI/FMU or gRPC, while field mappers handle mesh/scale transfers with conservation and smoothing as needed. Performance comes from GPU-accelerated FFT solvers and CPFEM kernels, distributed RVE farms that answer batched queries, and **caching** of RVE states to avoid redundant work. In design space exploration, batch and vectorize: run hundreds of RVE calls in a single GPU pass; exploit identical microstructures across elements with rotated frames instead of re-solving from scratch. Continuous integration (CI) for models—version control, regression tests on V&V datasets, and automatic performance checks—keeps the stack reliable as it evolves.

Orchestration is also about economics. Schedule cheap precomputations overnight on spot capacity; reserve live FE2 for late-stage signoff. Use active learning to direct expensive high-fidelity runs where surrogates are uncertain or where sensitivity is high. With these tactics, teams deliver interactive UX even with heavy physics under the hood: sliders for grain size, porosity, or scan spacing respond in seconds, supported by batched **surrogate queries**. The effect is a step-change in DSE throughput without sacrificing traceability or quality.

- Couple via FMI/FMU or gRPC; enforce consistent units and frames across solvers.

- GPU-accelerate FFT/CPFEM; distribute RVEs; cache and reuse states; batch queries for large DSE sweeps.

- CI for material models and surrogates with regression tests anchored to V&V datasets.
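The "cache and reuse states; batch queries" tactic can be sketched as a de-duplication step in front of a batched solver. In this toy, `toy_rve_batch` is a hypothetical stand-in for a GPU RVE farm, and states are treated as identical once rounded to a fixed number of decimals, which is an assumption a real pipeline would tune.

```python
import numpy as np

def toy_rve_batch(states):
    """Stand-in for a batched RVE solve (hypothetical linear response)."""
    return 2.0 * 80e3 * states

def batched_unique_eval(states, solve_batch, decimals=6):
    """Solve each distinct (rounded) state once, then scatter results back."""
    keys = np.round(np.asarray(states, dtype=float), decimals)
    unique_states, inverse = np.unique(keys, axis=0, return_inverse=True)
    unique_results = solve_batch(unique_states)          # one batched call
    return unique_results[inverse.reshape(-1)], len(unique_states)

# 1000 element states that repeat only four distinct strain vectors.
base = np.array([[1e-3, 0.0, 0.0],
                 [0.0, 2e-3, 0.0],
                 [0.0, 0.0, 3e-3],
                 [1e-3, 1e-3, 0.0]])
states = np.tile(base, (250, 1))
results, n_solved = batched_unique_eval(states, toy_rve_batch)
print(results.shape, n_solved)
```

The same idea extends to elements that share a microstructure but differ by orientation: solve once in the crystal or build frame, then rotate the result per element.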

Decision Quality and UX for Trustworthy Adoption

Designers adopt tools they trust and that respect their time. **Progressive fidelity** is a core principle: early-stage flows use fast surrogates with visible confidence bands; near release, the system automatically escalates fidelity in critical regions with FE2 or high-resolution RVEs. Interfaces should expose microstructural “knobs” (grain size, ODF sharpness, porosity fraction, precipitate size) as sliders, showing live impact on KPIs—stiffness, yield, fatigue life—and the attendant uncertainty. Risk-aware outputs matter: rather than a single number, show distributions and explain contributors via sensitivity bars so teams see how microstructure variability affects margin. This turns uncertainty from a threat into a managed quantity.

Good UX also tells the truth about scope. Warnings appear when a query leaves a surrogate’s training envelope; buttons let users trigger targeted high-fidelity refresh. Traceability is accessible: a click opens the provenance of a material tensor—its EBSD file, calibration fits, and V&V status. The aim is to make **microstructure-aware design** feel natural: no special workflows, just smarter material models and richer sliders. When teams can iterate quickly and see why the model says what it says, they move faster with fewer surprises, and the organization accrues confidence that computed margins are real.

- Interactive sliders for microstructural controls with live KPIs and confidence bands.

- Clear indicators of surrogate validity and one-click escalation to higher fidelity.

- Risk visuals: distributions and sensitivity ranks guiding robust design decisions.

Conclusion

From Material Selection to Microstructure Design

Multiscale modeling elevates design from choosing a grade to engineering a microstructure. By embedding manufacturing reality—the thermal histories, deformation paths, and kinetics—into the constitutive behavior, the PSPP chain becomes executable, not aspirational. The winning stack blends trusted physics—homogenization, **CPFEM**, phase-field—with calibrated surrogates, robust data plumbing, and GPU-scale compute, all traceable in PLM. It yields confidence bands alongside KPIs, allowing tighter safety factors and fewer late-stage surprises. Most crucially, the approach reframes the optimization problem: shape, process, and material co-evolve, and the solution is a region in that space where manufacturability, cost, and performance align with acceptable risk.

Adoption does not require boiling the ocean. Start pragmatic: identify critical features where microstructure dominates margin; deploy **offline RVE surrogates** tuned to those hotspots; wire process simulators to structural solvers with clear V&V gates. Build associativity so changes in build orientation or heat treatment propagate automatically to material tensors. Establish model CI to keep the stack trustworthy as data accumulates. As competence grows, expand the scope: broader uncertainty models, live RVEs for signoff, and richer microstructure descriptors. The path is incremental but compounding, and the returns show up quickly in first-time-right rates and design agility.

- Blend physics and data: CPFEM and phase-field anchored by experiments, accelerated by surrogates.

- Instrument the data spine: provenance, descriptors, and validated cards as first-class assets in PLM.

- Aim for progressive fidelity: fast early, rigorous late, always with uncertainty in view.

Design Software at the Horizon: Grain-Up Co-Optimization

The horizon is compelling: **microstructure as a parametric feature** in CAD, with generative search exploring process paths as readily as it explores shapes. Toolpaths and heat treatments become optimization variables, subject to constraints learned from factory data. In-situ sensors stream temperatures and melt pool signals that continuously calibrate surrogates and update uncertainty, creating a living material model that mirrors the line. With this, design software becomes a co-optimizer of shape, process, and material, searching the PSPP space for solutions that are strong, light, robust, and buildable. Decision support matures from pass/fail to risk-aware trade studies presented as frontier curves with confidence bands.

Getting there means solidifying today’s practices: interoperable microstructure representations, validated coupling patterns, and UX that reveals physics without burdening the user. The destination is not just better predictions; it is better organizations—ones that treat materials as programmable, processes as design variables, and uncertainty as managed information. When those ingredients converge, **multiscale modeling** ceases to be a niche capability and becomes the default lens through which products are conceived. The outcome is a competitive edge written in grains and phases, delivered through software that finally reasons from the microstructure up.

- Generative co-optimization over shape, toolpath, and heat treatment with manufacturability and reliability constraints.

- Continuous calibration from in-situ sensors to keep surrogates honest and uncertainty current.

- Microstructure-aware PLM that closes the loop from CAD edits to factory parameters to field performance.
