Harnessing AI in Design Automation: Balancing Innovation and Ethical Responsibility

November 19, 2024 7 min read

Introduction to AI in Design Automation

Artificial Intelligence (AI) has become a cornerstone of design automation, changing how designers conceptualize, develop, and execute projects in sectors such as engineering, architecture, and product development. Integrating AI into design processes lets complex algorithms and data-driven insights produce sophisticated designs with greater efficiency and precision. Using machine learning and deep learning techniques, AI systems can analyze large datasets to identify patterns, optimize parameters, and generate candidate solutions that human designers might overlook. This partnership between human creativity and AI's computational power makes rapid prototyping, iterative testing, and optimization more streamlined and accessible.

The advantages of incorporating AI in design are manifold. Designers can tackle more complex challenges, reduce time-to-market, and cut costs by automating routine tasks. AI-driven tools help explore a broader design space, surfacing novel solutions and improvements in product performance. AI also enables customization and personalization at scale, meeting diverse user needs more effectively.

However, as AI becomes more deeply embedded in the design landscape, it raises ethical concerns that must be addressed thoughtfully. Reliance on AI systems brings to the forefront issues such as bias in algorithmic decision-making, ownership of AI-generated intellectual property, and the need for transparency and accountability in how AI systems operate. These considerations matter because they affect not only the integrity of the design process but also societal trust and the equitable distribution of technology's benefits. The design community must engage proactively with these challenges to ensure that AI integration benefits both the industry and society at large.

Bias and Representativity

One of the most pressing ethical considerations in AI-driven design is bias and representativity in AI algorithms. AI systems learn from data, and if that data is skewed or unrepresentative of the diverse populations who will interact with the resulting designs, the AI's decisions and suggestions can perpetuate or even amplify existing biases. Such biases can be encoded into AI models inadvertently, through training datasets that reflect historical prejudices or systemic inequalities. For example, an AI system for architectural design trained primarily on data from affluent urban areas may not generate solutions suitable for rural or lower-income communities. This lack of representativity can produce designs that are inaccessible or unsuitable for significant portions of the population, resulting in products or environments that exclude or disadvantage certain groups.

Addressing bias in AI requires a multifaceted approach. Designers and developers must critically assess their data sources and ensure that datasets are comprehensive and inclusive, which means collecting data that spans a wide range of demographics, geographies, and socioeconomic backgrounds. Biases must also be identified and corrected on an ongoing basis as they emerge. Fairness-aware machine learning techniques, which measure and constrain disparities in model outcomes across groups, can help mitigate bias in algorithms. Involving diverse teams in the development process also helps, since multiple perspectives make it easier to spot potential biases and address them proactively.

Failure to address bias and representativity not only undermines the ethical integrity of AI-driven design but can also carry legal and reputational consequences. As awareness of these issues grows, stakeholders are increasingly holding organizations accountable for biased outcomes. Integrating strategies that ensure fairness and representativity is therefore both an ethical imperative and a practical necessity for the sustainable use of AI in design.
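The dataset audit and fairness measurement described in this section can be made concrete with two simple checks. The Python below is a minimal sketch under stated assumptions, not a standard library API: `representation_gaps` compares each group's share of a training set with a reference population share, and `demographic_parity_difference` reports the largest gap in positive-outcome rate between groups. The function names, the "urban"/"rural" labels, and the 60/40 reference split are hypothetical illustrations.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Dataset share minus reference share for each group.

    samples: one group label per training example (hypothetical labels).
    reference_shares: group -> share of the population the design must serve.
    Negative values flag under-represented groups.
    """
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("samples must not be empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-outcome rate between any two groups.

    predictions: 0/1 model outputs, e.g. 1 = "design option recommended".
    groups: the group label for each prediction, in the same order.
    A value of 0 means every group receives positive outcomes at the same rate.
    """
    positives = Counter()
    totals = Counter()
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    if not totals:
        raise ValueError("predictions must not be empty")
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


if __name__ == "__main__":
    # Toy data: 90 urban and 10 rural training examples versus a 60/40 population.
    data = ["urban"] * 90 + ["rural"] * 10
    gaps = representation_gaps(data, {"urban": 0.6, "rural": 0.4})
    print({g: round(v, 2) for g, v in gaps.items()})  # {'urban': 0.3, 'rural': -0.3}

    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    labels = ["urban"] * 4 + ["rural"] * 4
    print(demographic_parity_difference(preds, labels))  # 0.5
```

Demographic parity is only one of several fairness criteria; which metric is appropriate, and what gap is acceptable, depends on the design context and should be decided with the stakeholders affected.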

Intellectual Property Concerns

The advent of AI-generated designs introduces complex intellectual property questions that challenge traditional notions of ownership and authorship. In conventional settings, intellectual property rights are relatively straightforward: creators own the rights to their creations. When an AI system generates a design autonomously, however, ownership is unclear. Does it belong to the developer of the AI software, to the user who supplied the input parameters, or does the output fall into the public domain?

Current intellectual property laws are poorly equipped to address these nuances. Many legal frameworks require human authorship to grant intellectual property rights, leaving AI-generated works in a gray area. This ambiguity can lead to disputes and hinder the commercialization of AI-generated designs. For instance, if a company uses AI to create a new product design with little human creative input, it may be unable to claim protection, and competitors might then be able to replicate or modify the design without infringing.

To navigate these challenges, it is crucial to analyze existing laws and consider reforms that acknowledge AI's role in creation. Legal scholars and policymakers are debating several approaches, such as attributing authorship to the humans involved in developing and using the AI, or creating new categories of rights for AI-generated works. Until clear legal guidelines are established, organizations should:

- Establish explicit agreements that define ownership and rights over AI-generated designs.

- Implement internal policies that address the handling of AI-created intellectual property.

- Stay informed about legislative developments and be prepared to adapt their strategies accordingly.

Transparency and Accountability

Ensuring transparency and accountability in AI systems is essential for maintaining trust in AI-driven design processes. AI algorithms, particularly those based on deep learning, can function as "black boxes" that produce outputs without understandable explanations. This opacity is problematic in design contexts where understanding the rationale behind a decision is critical for validation, safety, and ethical compliance.

Transparency means making the workings of AI systems interpretable to users and stakeholders. Explainable AI (XAI) techniques aim to make AI decision-making more understandable while preserving as much performance as possible. For designers, this means seeing not just what an AI suggests but why, so they can make informed decisions about whether to accept, modify, or reject AI-generated designs.

Accountability concerns the mechanisms that hold parties responsible for the outcomes of AI systems. When a flawed design leads to harm or failure, determining who is accountable (the designer, the AI developer, or the end-user) becomes complex. Clear accountability structures are needed to assign responsibility appropriately and to give all parties an incentive to adhere to ethical standards. Strategies for ensuring transparency and accountability include:

- Implementing audit trails that record AI decision-making processes and interactions.

- Adopting standards and certifications that require AI systems to meet transparency criteria.

- Engaging in interdisciplinary collaboration to assess AI outputs from multiple perspectives.

- Providing education and training to users on the capabilities and limitations of AI tools.
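An audit trail is most useful when later tampering can be detected. The Python below is a minimal, in-memory sketch of one common approach, a hash-chained append-only log, in which each entry stores the SHA-256 hash of the previous entry so that editing any earlier record breaks verification. The `AuditTrail` class, the actor and action strings, and the design IDs are hypothetical; a production system would also persist entries to durable storage and control who can write to it.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log; each entry carries the hash of the one before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def record(self, actor, action, details):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,      # e.g. a designer ID or an AI model version
            "action": action,    # e.g. "suggested", "accepted", "modified"
            "details": details,  # must be JSON-serializable
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash, with a stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("model:layout-gen-v2", "suggested", {"design_id": "D-17"})
    trail.record("designer:alice", "modified", {"design_id": "D-17", "note": "widened corridor"})
    print(trail.verify())  # True
    trail.entries[0]["details"]["design_id"] = "D-99"  # simulate tampering
    print(trail.verify())  # False
```

Recording both the AI's suggestion and the human decision that followed is what lets a later review reconstruct who accepted or changed a design, which supports the accountability structures described above.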

Best Practices for Ethical AI Implementation

Balancing innovation with ethical responsibility requires the adoption of best practices for ethical AI implementation within design automation. Establishing frameworks that integrate ethical considerations into every stage of AI development and deployment is essential. These frameworks should be rooted in core ethical principles such as respect for autonomy, non-maleficence, beneficence, justice, and explicability. To operationalize these principles, organizations can:

- Develop Ethical Guidelines: Create comprehensive policies that outline acceptable practices, prohibited actions, and ethical expectations for AI use.

- Engage Stakeholders: Involve a diverse range of stakeholders, including designers, clients, end-users, and ethicists, in the AI development process to gain multiple perspectives.

- Perform Ethical Impact Assessments: Evaluate potential ethical risks and implications of AI systems before deployment, akin to environmental impact assessments.

- Ensure Continuous Monitoring: Establish processes for ongoing evaluation of AI systems to detect and address ethical issues as they arise.

- Foster a Culture of Ethics: Promote an organizational culture that values ethical considerations as much as technical performance, encouraging employees to voice concerns and suggestions.
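Continuous monitoring often starts with checking whether the inputs an AI system sees in production still resemble the data it was validated on. The article does not name a specific technique; the Python below swaps in one common drift check, the population stability index (PSI), as a minimal sketch. The floor-area feature, the bin edges, and the 0.25 alert threshold (an often-cited rule of thumb, not a standard) are hypothetical choices for illustration.

```python
import math


def population_stability_index(baseline, recent, edges, eps=1e-6):
    """Population stability index (PSI) of one numeric feature.

    baseline: values seen when the model was validated.
    recent: values seen in production since then.
    edges: sorted bin boundaries; len(edges) + 1 bins are used, with values
    below edges[0] in the first bin and values >= edges[-1] in the last.
    """
    def bin_shares(values):
        if not values:
            raise ValueError("each sample must contain at least one value")
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # index = edges at or below v
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    # Hypothetical feature: floor area (m^2) of sites submitted to a layout model.
    baseline = [80] * 40 + [120] * 40 + [200] * 20
    recent = [80] * 10 + [120] * 40 + [200] * 50
    psi = population_stability_index(baseline, recent, edges=[100, 150])
    # Often-cited rule of thumb: PSI above about 0.25 signals a major shift.
    print(f"PSI = {psi:.3f}", "-> review model" if psi > 0.25 else "-> stable")
```

A drift alert does not prove the model has become biased or unsafe; it flags that its outputs deserve a fresh review, for example by re-running the fairness checks from the bias section on recent data.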

Regulatory Landscape and Industry Standards

The evolving regulatory landscape and industry standards play a critical role in shaping the ethical use of AI in design automation. Governments and international bodies increasingly recognize the need for regulations that address the unique challenges posed by AI technologies. The European Union's General Data Protection Regulation (GDPR) protects individual rights and data privacy, including rights related to automated decision-making, and AI-specific legislation such as the EU Artificial Intelligence Act adds transparency and risk-management obligations for AI systems. Professional organizations and standardization bodies are developing industry standards that provide guidelines for safe and ethical AI deployment. These standards cover aspects such as:

- Data Management: Guidelines for responsible data collection, storage, and usage to prevent misuse and breaches.

- Algorithmic Fairness: Standards for detecting and reducing bias and discrimination in algorithms.

- Transparency and Explainability: Requirements for AI systems to be interpretable and for decision-making processes to be accessible to users.

- Accountability Mechanisms: Protocols for assigning responsibility and handling grievances related to AI outputs.

Professional organizations and industry bodies also support ethical AI use by:

- Advocating for ethical practices and influencing policy development.

- Providing training and certification programs that emphasize ethical competencies.

- Facilitating collaboration among stakeholders to align industry practices with societal values.

Conclusion

Integrating AI into design automation offers transformative potential, enabling new levels of innovation, efficiency, and customization. That potential can be fully realized only if the ethical considerations inherent in AI deployment are addressed with diligence and foresight. Key concerns such as bias and representativity, intellectual property, and transparency and accountability highlight the complex challenges that accompany AI-driven design. Balancing innovation with ethical responsibility is not merely a matter of regulatory compliance but a fundamental aspect of sustainable and socially responsible practice. By adopting best practices for ethical AI implementation, engaging with the evolving regulatory landscape, and adhering to industry standards, designers and organizations can ensure that their use of AI contributes positively to society.

The call to action is clear: designers, developers, and industry leaders must prioritize ethical standards in AI-driven automation to secure a responsible and inclusive future for design. This means addressing current ethical challenges while also anticipating future dilemmas and preparing to meet them proactively. By doing so, the design industry can harness AI to create solutions that are not only innovative and efficient but also equitable, transparent, and accountable, upholding the highest professional and ethical standards for the benefit of all.

Also in Design News

Design Software History: From Usenet to Cloud: How Forums, Tutorials, and Open Libraries Transformed CAD Practice

February 07, 2026 13 min read

Read More

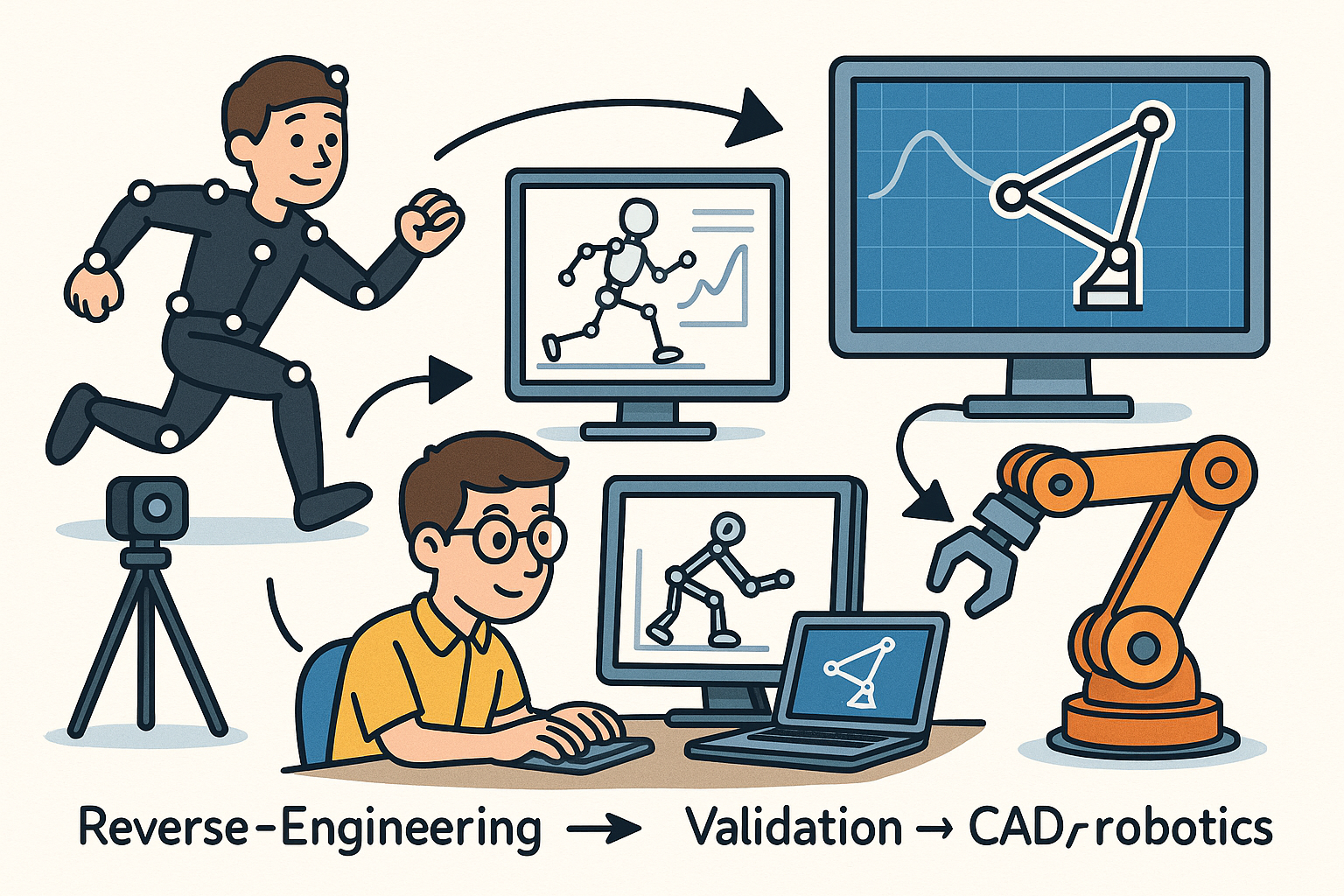

Reverse-Engineering Mechanisms from Motion Capture: Kinematic Identification, Validation, and CAD/Robotics Export

February 07, 2026 12 min read

Read More

Cinema 4D Tip: Guide-First Hair Grooming Workflow for Cinema 4D

February 07, 2026 2 min read

Read MoreSubscribe

Sign up to get the latest on sales, new releases and more …