Your Cart is Empty

Customer Testimonials

-

"Great customer service. The folks at Novedge were super helpful in navigating a somewhat complicated order including software upgrades and serial numbers in various stages of inactivity. They were friendly and helpful throughout the process.."

Ruben Ruckmark

"Quick & very helpful. We have been using Novedge for years and are very happy with their quick service when we need to make a purchase and excellent support resolving any issues."

Will Woodson

"Scott is the best. He reminds me about subscriptions dates, guides me in the correct direction for updates. He always responds promptly to me. He is literally the reason I continue to work with Novedge and will do so in the future."

Edward Mchugh

"Calvin Lok is “the man”. After my purchase of Sketchup 2021, he called me and provided step-by-step instructions to ease me through difficulties I was having with the setup of my new software."

Mike Borzage

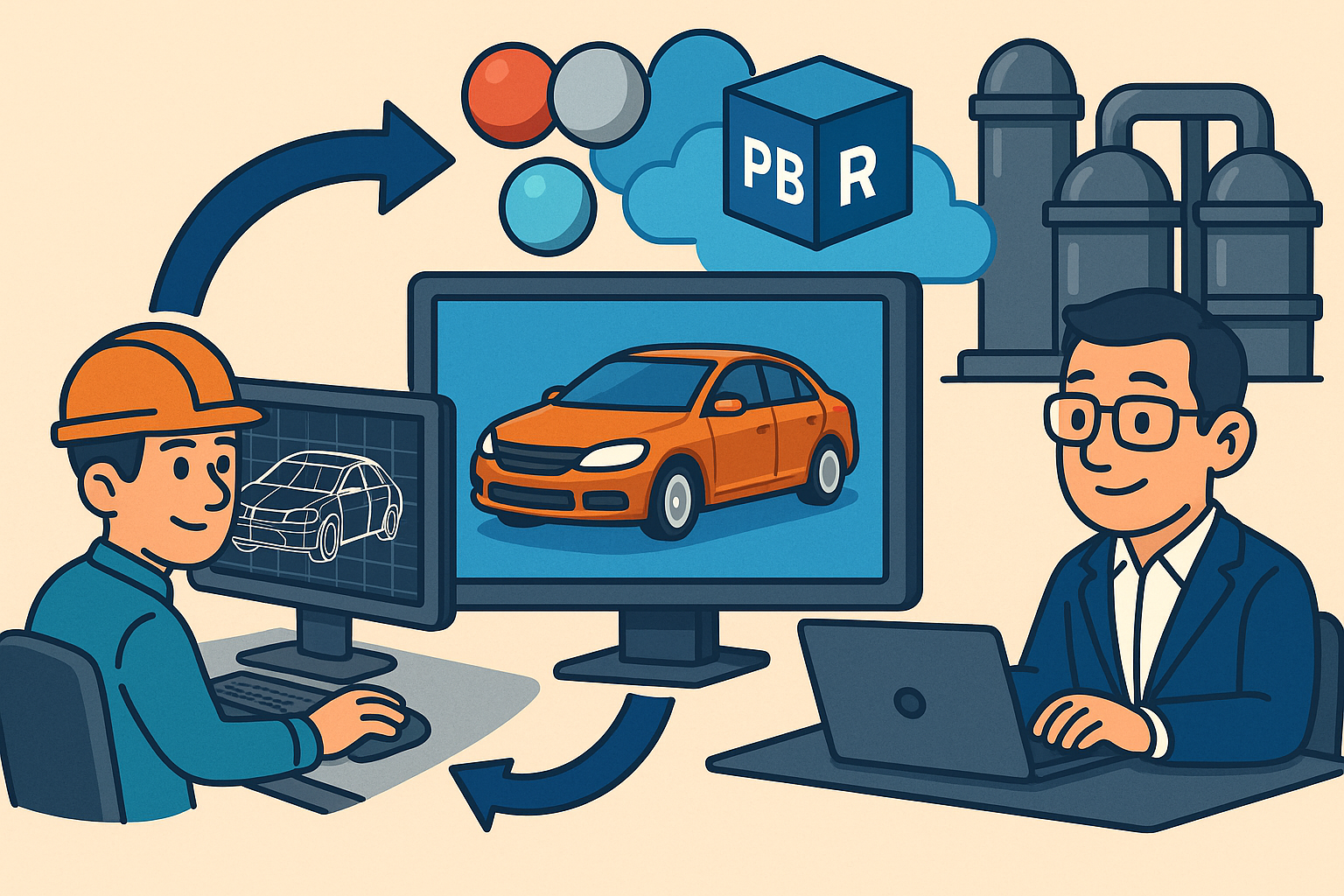

Design Software History: Real-Time Engines Transform Product Visualization: CAD Ingest, PBR, and Enterprise Pipelines

November 27, 2025 14 min read

Why visualization studios pivoted to real time

Market pressures for interactivity

The shift from offline renderers to game engines in visualization studios was not ideological; it was economic and experiential. By the mid‑2010s, brands had to meet customers in contexts where imagery couldn’t be pre-baked: always‑on configurators, retail kiosks with limited compute, and live event installations that had to adapt to crowds and unpredictable inputs. Pipelines anchored in V‑Ray, Mental Ray, Arnold, or KeyShot were exceptional for hero stills and filmic sequences, yet they were brittle at scale when confronted with real‑time interaction. The demands of AR/VR pilots, born in the Oculus DK2 era and nourished by experiential marketing budgets, elevated frame rate over final‑frame fidelity. Creative directors discovered that when an audience could move around a car or sneaker, the ability to maintain 60 fps mattered more than a 12‑hour render per angle. For procurement and marketing leaders, the new KPI was reliable responsiveness across platforms rather than exhaustive shader tricks visible only at 4K. The result was a collision of expectations: global marketing calendars needed thousands of product variations and languages, while IT sought uniform deployability across web, mobile, and kiosk. Real-time engines promised an escape hatch: one project, many outputs. Studios recognized that the opportunity was not merely speed but interactivity as a core storytelling axis, turning visualization from a passive asset pipeline into a living application that could be updated, analyzed, and A/B tested like any other digital product.

Automotive sets the tempo

Automotive brands such as BMW, Audi, and Porsche compressed this transition into an unavoidable mandate. Their goal was deceptively simple: a single vehicle definition should yield imagery that is consistent across web configurators, showrooms, press kits, and trade‑show screens—except now the imagery had to react to the user. This requirement stressed the old boundary between marketing CG and engineering truth. OEMs demanded that real‑time experiences reflect the underlying CAD, color and trim definitions, and feature constraints, so a customer who built a car online would see the same result at the dealership. The expectation of consistent imagery at interactive speeds catalyzed a wave of production changes in agencies and visualization suppliers. The best offline lookdev practices—studio HDRI domes, measured materials, layered coatings for metallic paints—had to be translated into PBR‑based engine workflows without sacrificing brand correctness. Furthermore, automotive contained within it the hardest edge cases: glossy coats with orange peel, carbon weave anisotropy, leather with measured sheen and micro‑normal variation, and refractive optics in headlights. If engines could satisfy these, consumer electronics, footwear, and furniture would follow effortlessly. Automotive also forced SKU sanity: each model year brought a variant explosion, compelling studios to move from a handful of hero shots to a parametric image factory driven by rule sets and product structure. This crucible forged the idea that real‑time engines weren’t just renderers; they were configuration runtimes bound to enterprise data.

Pre‑engine incumbents and the groundwork

Before engines took center stage, RTT DeltaGen (later Dassault Systèmes 3DEXCITE) and Autodesk VRED established that raster‑accelerated and raytraced real‑time could meet the marketing bar, especially for automotive. Their success wasn’t just technical; it was procedural. DeltaGen and VRED encoded the ritual of the automotive studio: light rigs inspired by real cycloramas, turntables, material overrides, and BOM‑aware variant hierarchies. Meanwhile, Mackevision (later acquired by Accenture) and 3DEXCITE built the production and data pipelines that proved the business case for massive product‑line visualization. They wrangled PLM feeds, CAD updates, and regional packaging differences to create a repeatable system for generating tens of thousands of images. This was the template that game engines would later generalize: a data‑driven scene graph wherein material libraries, camera presets, and variant rules could be reapplied across model years and campaigns. Incumbent success validated two critical ideas. First, real‑time interactivity was not inherently at odds with high fidelity; it simply required precise material definitions and predictable lighting. Second, the pipelines that paid the bills were less about pixels and more about governance: mapping CAD assemblies to marketing choices, ensuring SKU legality across markets, and keeping a coherent audit trail from PLM to final media. When engines arrived, they had to inherit these expectations, not discard them, and this is precisely why the earliest engine adoptions hailed from teams who had previously mastered DeltaGen and VRED shopcraft.

The inflection—engines meet PBR and enterprise

The material fidelity gap narrowed abruptly when Unity 5 (2015) and Unreal Engine 4 normalized physically based shading models that echoed Disney’s “principled” approach. Suddenly, the same HDRI and metal‑roughness workflows from film lookdev carried over, allowing Substance 3D assets and X‑Rite scans to look plausible in runtime. ZeroLight—spun out from Eutechnyx under CEO Darren Jobling—dramatically broadcast this potential by delivering Unreal‑based car configurators and early cloud streaming that decoupled client devices from GPU horsepower. In parallel, IKEA’s pivot from offline CGI catalogs to interactive and AR experiences (culminating in IKEA Place) signaled to every consumer brand that engines could underpin mainstream retail storytelling. Sensing a new enterprise market, Epic Games (Tim Sweeney, Marc Petit) and Unity Technologies (during the John Riccitiello era) spun up dedicated automotive/enterprise tracks, with solution engineers, service partners, and ingestion tools tailored to CAD and PLM realities. The pitch became irresistible: retain the best of VRED/DeltaGen predictability, add engine‑grade extensibility, and deploy to web, mobile, retail, and XR from a single project. Engines stopped being “game tools borrowed by agencies” and became platforms for product visualization, complete with licensing, support, and a growing ecosystem of plugins for tessellation, variant logic, and analytics.

The new toolchain: from CAD to engine to everywhere

Ingest and tessellation—controlling complexity

Real products start as NURBS or B‑rep surfaces, not hero meshes, which means the first battle is translation. PiXYZ (commercialized in partnership with Unity), Unreal Datasmith for CAD and 3ds Max, InstaLOD, and Simplygon formed a front line for tessellation, LOD generation, and variant explosion control. With BOMs that might enumerate thousands of parts per vehicle or hundreds per consumer electronics device, naive triangulation is a dead end. What studios needed, and what these tools delivered, was a way to preserve hierarchy, metadata, and naming while producing meshes that fit a frame‑time budget. This goes beyond decimation; it involves weld logic, instancing strategies for repeated parts (wheels, screws, stitch patterns), and UV generation robust enough for triplanar and UDIM‑style lookdev. Crucially, tessellation had to be repeatable so engineering changes could be re‑ingested without re‑authoring. Datasmith’s non‑destructive philosophy and PiXYZ’s rule‑based processing pipelines enabled “press‑to‑update” workflows where a nightly PLM export produced fresh engine builds with minimal human intervention. Instead of hand‑massaging meshes for every SKU, teams codified ingest as a series of declarative steps, turning geometry prep from artisanal labor into automated manufacturability for pixels. That discipline made real‑time viable for massive catalogs, not just single hero products.
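
The idea of ingest as a series of declarative steps can be sketched in a few lines of Python. This is an illustration only: the `Part` record, the two rules, and the triangle numbers are hypothetical and do not reflect the PiXYZ or Datasmith APIs. The point is that each rule is a pure function, so re-running the pipeline on the same export gives the same result and part identifiers survive untouched.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Part:
    """Hypothetical stand-in for one tessellated CAD node."""
    part_id: str                       # identifier carried over from the PLM export
    name: str
    triangles: int
    instance_of: Optional[str] = None  # id of the part whose mesh is reused


Rule = Callable[[List[Part]], List[Part]]


def instance_repeated_parts(parts: List[Part]) -> List[Part]:
    """Point repeated parts (same name) at the first occurrence's mesh."""
    first_seen = {}
    out = []
    for p in parts:
        if p.name in first_seen:
            # Reuse the existing mesh: no unique triangles for this node.
            out.append(replace(p, instance_of=first_seen[p.name], triangles=0))
        else:
            first_seen[p.name] = p.part_id
            out.append(p)
    return out


def cap_triangles(max_per_part: int) -> Rule:
    """Build a rule that clamps each part to a triangle ceiling (decimation stub)."""
    def rule(parts: List[Part]) -> List[Part]:
        return [replace(p, triangles=min(p.triangles, max_per_part)) for p in parts]
    return rule


# The pipeline is data: an ordered list of rules applied to every export.
PIPELINE: List[Rule] = [instance_repeated_parts, cap_triangles(50_000)]


def ingest(parts: List[Part], pipeline: List[Rule] = PIPELINE) -> List[Part]:
    for rule in pipeline:
        parts = rule(parts)
    return parts


export = [
    Part("P-001", "wheel", 120_000),
    Part("P-002", "wheel", 120_000),
    Part("P-003", "door_panel", 30_000),
]
result = ingest(export)
total = sum(p.triangles for p in result)
```

With these made-up inputs the second wheel becomes an instance of `P-001`, the first wheel is clamped to the ceiling, and `total` comes out at 80,000 unique triangles. A real pipeline would replace the clamp with actual decimation, but the shape (ordered, repeatable rules that never rename parts) is what makes nightly re-ingest practical.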

Mapping product structure to runtime logic

Geometry alone does not make a configurator; product logic does. The jump from CAD assemblies to runtime requires careful preservation of structure: grouping by functional systems, variant sets, and legality rules. Studios built schemas where CAD node names and IDs mapped to human‑readable options, while constraints—mutual exclusivity, dependency chains, regional restrictions—were expressed in rule engines embedded in Unity or Unreal, or externalized to CPQ and PIM systems. The aim was a single source of truth: a variant matrix in PLM or PIM that the engine consumes to drive UI toggles, visibility switches, and material swaps. This mapping also solved analytics and operations problems. Because parts and options retained identifiers, clickstreams revealed which trims and colors received attention, feeding back into merchandising. To prevent combinatorial explosions, studios implemented “variant explosion control” at ingest: limiting mesh duplication and using instanced materials with parameter overrides to avoid generating thousands of unique assets. With BOM‑aware hierarchies intact, an engine scene became a living object model—one that could support pricing hooks, stock availability, and region‑specific bundles without duplicating scenes per market. Over time, teams learned to test these rules inside the engine editor itself, using in‑scene QA panels rather than spreadsheet gymnastics, compressing the distance between engineering truth and marketing experience.
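
As a rough sketch of how the three constraint types named above (mutual exclusivity, dependency chains, regional restrictions) might be checked before the runtime applies a selection, consider the following Python. The `RuleSet` class and every option name are invented for illustration; a production system would load these rules from PLM, PIM, or CPQ rather than hard-code them.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class RuleSet:
    """Hypothetical container for configurator legality rules."""
    exclusive_groups: List[Set[str]] = field(default_factory=list)
    requires: Dict[str, Set[str]] = field(default_factory=dict)
    region_blocked: Dict[str, Set[str]] = field(default_factory=dict)

    def violations(self, selected: Set[str], region: str) -> List[str]:
        """Return human-readable problems; an empty list means the build is legal."""
        problems = []
        # Mutual exclusivity: at most one option per group.
        for group in self.exclusive_groups:
            clash = sorted(group & selected)
            if len(clash) > 1:
                problems.append("mutually exclusive: " + ", ".join(clash))
        # Dependencies: a selected option needs all of its prerequisites.
        for option, needed in self.requires.items():
            if option in selected:
                missing = sorted(needed - selected)
                if missing:
                    problems.append(f"{option} requires: " + ", ".join(missing))
        # Regional restrictions: some options are not sold in some markets.
        blocked = sorted(self.region_blocked.get(region, set()) & selected)
        if blocked:
            problems.append(f"not offered in {region}: " + ", ".join(blocked))
        return problems


rules = RuleSet(
    exclusive_groups=[{"paint_red", "paint_blue"}],
    requires={"sport_seats": {"leather_trim"}},
    region_blocked={"EU": {"tinted_headlights"}},
)

legal = rules.violations({"paint_red", "sport_seats", "leather_trim"}, "EU")
illegal = rules.violations(
    {"paint_red", "paint_blue", "sport_seats", "tinted_headlights"}, "EU"
)
```

Here `legal` is an empty list, while `illegal` reports one problem per rule type. Because the check works on option identifiers rather than scene objects, the same function could back a UI toggle, an in-editor QA panel, or an automated sweep over many combinations.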

Materials and lighting—standardization and fidelity

PBR standardization transformed lookdev from intuition into a measurable discipline. The Disney‑inspired metal‑roughness workflow aligned engines with film/VFX material models, allowing cross‑pollination of assets and knowledge. Allegorithmic’s Substance (later Adobe Substance 3D) became the lingua franca for authoring parameterized materials, while measured and exchangeable material formats (X‑Rite’s AxF for scanned appearance data, NVIDIA’s MDL for portable material definitions) and laboratory‑captured BRDFs anchored plastics, leathers, and multicoat paints to brand‑accurate baselines. Automotive lighting discipline carried over wholesale: calibrated HDRI/IBL domes, fill and rim strategies adapted to runtime, and tone‑mapping curves harmonized with photography. Studios also embraced Quixel Megascans (acquired by Epic in 2019) for scene dressing, trading bespoke prop modeling hours for physically scanned assets that played nicely with PBR assumptions. The key was intent: materials were authored to be robust under a range of environments, so interactive users couldn’t “break” a scene by rotating the sun or moving a light. Teams captured real materials as roughness, metallic, and normal maps that matched measured values, and every shader went through brand sign‑off. As engines added clearcoat lobes, anisotropy, and thin‑film interference, the fidelity gap closed further, enabling marketing‑grade realism without abandoning the performance envelope demanded by web and mobile deployment.
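
To make the metal‑roughness convention concrete, here is a minimal Python sketch of how a base color and a metallic value are commonly split into diffuse and specular terms. It follows the mixing described in the glTF 2.0 metallic‑roughness material model (a fixed 0.04 reflectance for non‑metals, and roughness squared as the microfacet parameter); individual engines differ in detail, and the function names here are illustrative rather than any engine's API.

```python
from typing import Tuple

RGB = Tuple[float, float, float]

# Reflectance at normal incidence that glTF 2.0 assumes for non-metals.
DIELECTRIC_F0 = 0.04


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def split_base_color(base_color: RGB, metallic: float) -> Tuple[RGB, RGB]:
    """Return (diffuse_color, specular_f0) for a metal-roughness material.

    Non-metals keep their base color as diffuse and reflect about 4%.
    Metals have no diffuse term and tint reflections with the base color.
    """
    diffuse = tuple(lerp(c, 0.0, metallic) for c in base_color)
    f0 = tuple(lerp(DIELECTRIC_F0, c, metallic) for c in base_color)
    return diffuse, f0


def microfacet_alpha(roughness: float) -> float:
    """Map artist-facing roughness to the microfacet alpha (roughness squared)."""
    return roughness * roughness


red_plastic = split_base_color((0.8, 0.1, 0.1), metallic=0.0)
red_metal = split_base_color((0.8, 0.1, 0.1), metallic=1.0)
```

The same three inputs (base color, metallic, roughness) describe both the plastic and the metal, which is much of why one material library can travel between authoring tools, engines, and web viewers.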

Animation, interaction, and deployment vectors

Real‑time production matured when animation and interactivity traveled cleanly between tools. FBX remained the workhorse for skeletal animation, Alembic carried high‑fidelity caches, and USD began to supply robust scene interchange for complex hierarchies. Control rigs inside engines exposed hinges, seats, doors, and convertible mechanisms as intuitive parameters for UI, replacing pre‑rendered turntables with confident, physics‑aware motion. On the frontend, UI layers evolved from ad hoc screens to configurable frameworks that bound to CPQ, PIM, and PLM, so pricing and availability felt native to the experience. Deployment multiplied: Windows kiosk builds, iOS/Android AR via ARKit/ARCore, lightweight WebGL/WebGPU browser targets, and Unreal Pixel Streaming for maximum fidelity without a local GPU. Studios learned to provision cloud GPUs or on‑prem clusters to scale peak traffic, wrapping them with analytics that observed conversion funnels and scene engagement. Success here depended on a harmonious loop: a runtime that exposed state (current trim, materials, options), an API that mirrored enterprise data, and a deployment fabric that stretched from retail floor to pocket device. In this world, “render” gave way to “build,” and content delivery adopted software release practices (versioning, canary deploys, and feature flags) so creative changes could land with the predictability of a modern web app.

Rendering breakthroughs that unlocked parity

Parity with offline rendering arrived through hardware and algorithmic leaps. NVIDIA’s RTX (2018) mainstreamed GPU ray tracing, and denoisers like NVIDIA’s OptiX denoiser and Intel’s Open Image Denoise (OIDN) turned noisy path‑traced frames into usable images in milliseconds. Engines seized this: Unreal’s path tracer and Unity HDRP’s ray tracing modes gave studios the option to produce hero stills and cinematic passes directly from the same runtime assets. At the same time, fully dynamic experiences benefited from Lumen in UE5 and the rise of screen/mesh‑based GI, which tightened the realism of bounced light and reflection coherence without baking. Upscaling technologies such as NVIDIA DLSS and AMD FSR preserved 4K interaction budgets, especially on kiosks and mid‑tier GPUs. The net effect was workflow simplification. Instead of maintaining parallel asset trees for offline and real‑time, teams could author once and choose rendering modes by deliverable: raster for web, hybrid GI for interactive kiosks, path tracing for print‑grade stills. This collapsed the feedback loop between lookdev and deployment: discoveries in real‑time lighting could immediately inform high‑res imagery, and measured material updates propagated everywhere from a single canonical shader. The creative conversation shifted from “can the engine do it?” to “which rendering mode best serves the moment?”, a better question for budgets and outcomes alike.
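
The arithmetic behind frame budgets and upscaling is simple enough to sketch. The Python below computes the time available per frame at a target frame rate, and the fraction of output pixels actually shaded when a frame is rendered at a lower internal resolution and then upscaled. The 1440p‑to‑4K pairing is only an illustrative assumption; the internal resolutions that DLSS or FSR really use depend on the chosen quality mode.

```python
from typing import Tuple

Resolution = Tuple[int, int]  # (width, height) in pixels


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to produce one frame at the target frame rate."""
    return 1000.0 / target_fps


def shaded_pixel_fraction(output: Resolution, internal: Resolution) -> float:
    """Share of output pixels shaded before upscaling (1.0 means native)."""
    out_w, out_h = output
    in_w, in_h = internal
    return (in_w * in_h) / (out_w * out_h)


budget_60 = frame_budget_ms(60)   # about 16.67 ms per frame
budget_30 = frame_budget_ms(30)   # about 33.33 ms per frame

# Illustrative case: render at 2560x1440, present at 3840x2160.
fraction = shaded_pixel_fraction((3840, 2160), (2560, 1440))  # about 0.44
```

In this example fewer than half of the 4K pixels are shaded per frame, which is the headroom that lets a mid‑tier GPU hold a 60 fps budget. The upscaler itself also costs time, so the real saving is smaller than the pixel ratio alone suggests.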

Key vendor timelines that shaped adoption

Adoption rarely hinges on a single release; it accrues through a chain of enabling moves and ecosystem bets. The late‑2010s were decisive because engine vendors explicitly targeted design visualization, hardened CAD ingest, and opened interchange pathways that enterprise teams could bet careers on. The following milestones crystallized that trajectory and connected the dots from authoring to deployment, giving CTOs and heads of production the confidence to standardize on engine‑based pipelines while staying interoperable with incumbent DCC tools and PLM stacks. Observers sometimes fixate on marquee demos, but the quieter work—importers stabilizing, connectors multiplying, and material standards coalescing—did the heavy lifting. Standards efforts outside the engines mattered, too: glTF’s rise under Khronos leadership (with Neil Trevett’s advocacy) carved out a portable runtime asset format that slotted neatly into web and mobile strategies. Together, these moves rewired expectations about what “real‑time ready” meant, and why the same product graph should drive kiosk, AR, web, and broadcast without bespoke forks.

- 2017: Unreal Datasmith beta targets design/arch‑viz.

- 2019: Epic acquires Twinmotion, seeding AEC‑friendly workflows and simplified lighting rigs; Unreal Pixel Streaming (first shipped in late 2018) sees wider use; Epic acquires Quixel, making scanned assets ubiquitous; enterprise partnerships expand with Audi and Porsche for HMI and visualization.

- 2020: Unity Forma productizes configurators; Unity + PiXYZ tighten CAD ingest at scale with rules‑driven tessellation and metadata preservation.

- 2021–2023: UE5’s path tracer and Lumen mature; USD importers stabilize in both engines; NVIDIA Omniverse Connectors court enterprise interchange; Khronos glTF momentum accelerates under Neil Trevett.

How studios reorganized around game‑engine production

New roles and evolving skills

Organizational charts changed as much as toolchains. Traditional CG departments—modeling, lookdev, lighting, compositing—expanded to include technical artists, real‑time shader developers, and gameplay‑adjacent engineers who could build editor tools, UI components, and data bindings. Lookdev itself became Substance‑first, with measured references and spectrophotometer captures informing AxF/MDL asset creation. Pipeline engineers, once focused on render‑farm orchestration, pivoted to Perforce or Git‑LFS for large binary tracking, build automation via CI, and content validation at ingest to catch topology defects, missing UVs, and illegal materials before they poisoned the runtime. QA evolved, too: instead of verifying image buckets, testers validated frame budgets, shader variant permutations, and memory footprints across target devices. Material librarians emerged as a distinct function, curating brand‑accurate, parameterized libraries that survived model years and regional trims, and running governance over how and when materials could be modified. Producer roles blended software and content sensibilities: sprint planning for scenes, release trains for kiosks, and lifecycle support for cloud streaming stacks. Hiring reflected the hybrid nature of the work: Houdini fluency alongside Blueprints/C# proficiency, USD literacy next to PIM/PLM awareness. Studios that invested early in these hybrid profiles found they could maintain pace as scope expanded, because their teams thought in terms of systems and assets—not just frames.

Process shifts toward continuous preview

Process matured from “final‑frame queue” to continuous preview. In offline eras, art direction relied on a slow loop: publish to farm, wait hours, review stills, iterate. Engines inverted that cadence. Directors began to art‑direct inside interactive viewports, composing cameras and lighting while watching frame budgets and variant rules live. Scene reviews became multiplayer: a creative director, a shader TD, and a pipeline engineer could sit together, toggling trims and time‑of‑day while discussing tone curves and reflections in real time. QA moved from spreadsheet matrices to in‑scene validation tools that exercised combinations via automated scripts, surfacing illegal bundles and missing assets. KPI dashboards shifted accordingly: iteration latency, average ms per frame, runtime crash rates, and configurator conversion took precedence over render throughput. Marketing alignment deepened as CPQ integrations validated pricing on the fly, analytics recorded user journeys, and A/B tests evaluated which lighting rigs or camera behaviors drove engagement. Because builds became deployable artifacts, creative changes inherited software rigor: semantic versioning for scenes, feature flags for experimental UI, and staged rollouts for kiosks and cloud streams. The psychological change was profound. Artists were no longer submitting to an invisible farm; they were shipping software, and their decisions were evaluated by telemetry and retail outcomes, not just aesthetic consensus.

Cost models and IP security in the runtime era

Financial and security models adjusted to a world where experiences run continuously. Licensing costs transitioned from render‑node counts to runtime licensing, support contracts, and cloud GPU spend that resembled ad budgets with peak‑traffic multipliers. Studios learned to forecast operational costs around events, launches, and holidays, pre‑warming autoscaling groups for Pixel Streaming or provisioning on‑prem GPU clusters for show floors. Meanwhile, IP posture hardened. CAD at scale is a crown jewel; moving it into public‑facing contexts required obfuscation, aggressive LODs, and mesh redaction. Teams embedded watermarking, disabled debug overlays in production, and instrumented content delivery networks to throttle scraping. For web delivery, glTF pipelines favored simplified topology and removed tolerancing detail irrelevant to marketing visualization. Even kiosk deployments gained secure boot strategies and file‑system hardening. Contract language evolved as well, clarifying which materials and shader graphs constituted brand IP and setting policies for how third‑party vendors could touch or store assets. The moral was consistent: interactivity magnified exposure, so studios treated runtime builds with the same care as source code. By moving costs and risks into the open, leaders achieved predictability: they could justify GPUs as media spend, and defend IP rigor with auditable controls spanning ingest to deployment.
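
A back‑of‑the‑envelope version of that forecasting can be written down directly. The Python sketch below sizes a streaming fleet for a traffic peak and prices it for the length of an event. Every number in the example (sessions per GPU, hourly rate, peak multiplier) is a placeholder assumption, not a vendor figure, and the model deliberately ignores warm‑up time, idle capacity, and bandwidth.

```python
import math
from typing import Tuple


def gpus_needed(concurrent_sessions: int, sessions_per_gpu: int) -> int:
    """GPU instances required to serve the given number of live sessions."""
    return math.ceil(concurrent_sessions / sessions_per_gpu)


def event_cost(
    baseline_sessions: int,
    peak_multiplier: float,
    sessions_per_gpu: int,
    hourly_rate: float,
    hours: float,
) -> Tuple[int, float]:
    """Return (gpu_count, total_cost) for a fleet pre-warmed to peak demand."""
    peak_sessions = math.ceil(baseline_sessions * peak_multiplier)
    gpus = gpus_needed(peak_sessions, sessions_per_gpu)
    return gpus, gpus * hourly_rate * hours


# Placeholder inputs: 40 typical concurrent sessions, 3x launch-day peak,
# 2 sessions per GPU, 1.50 currency units per GPU-hour, 8-hour event.
gpus, cost = event_cost(
    baseline_sessions=40,
    peak_multiplier=3.0,
    sessions_per_gpu=2,
    hourly_rate=1.50,
    hours=8,
)
```

With these inputs the fleet is 60 GPUs and the event costs 720.0 units. Sizing to the peak for the whole window is the conservative case; autoscaling would lower the bill at the price of slower session starts when demand spikes.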

Notable trajectories across industries

Automotive remained the crucible, where Mackevision and 3DEXCITE pipelines coexisted with Unity and Unreal deployments. ZeroLight championed cloud‑streamed configurators with photoreal visuals, while OEMs experimented with engine‑based HMI and showroom apps that respected the same asset graph as web configurators. Consumer products adapted the pattern with different emphases: Nike‑ and Adidas‑style campaigns blended engines for retail interactives with offline renderer outputs for hero print, ensuring that measured materials and camera language synchronized across billboards and browsers. Furniture and home goods learned from IKEA’s journey: CAD‑accurate models, standardized PBR libraries, and AR placements that respected real‑world lighting were not nice‑to‑haves; they were table stakes. Cross‑pollination flowed from VFX and virtual production back into product visualization. USD scene graphs, variant sets, and stagecraft ideas—LED wall lighting, IBL‑first philosophy—enhanced product shoots and live demos. Even broadcast absorbed these pipelines as on‑air graphics for automotive launches leaned on engine builds. The common denominator was a canonical product graph that described geometry, materials, and rules once, then fanned out to touch web, kiosk, AR, and broadcast. Studios that organized around that canon enjoyed compounding returns: every new channel became an increment, not a reinvention.

Conclusion

From render‑and‑wait to iterate‑and‑deploy

Game engines shifted product visualization’s center of gravity. The old model—queue frames, wait, review—made sense when output was a small set of stills or a hero film. But when audiences started spinning cars on phones, dropping sofas into living rooms, and exploring options at kiosks, the latency budget for creativity collapsed. Engines delivered a live preview of intent: art direction, technical decisions, and business rules converged in one place, visible at frame rate. This didn’t banish offline rendering; it reframed it. Teams still harvest path‑traced stills for print, but they do so from the same assets that drive web and AR. The deeper transformation is organizational: visualization became software. Builds, telemetry, feature flags, and staged rollouts turned campaigns into evolving products. The reward is alignment: creatives, engineers, and marketers now iterate together, guided by the same runtime truth instead of divergent proxy pipelines. The phrase “deploy everywhere” stopped being a slogan and became a practice, as the same project delivered to web, mobile, retail, and broadcast with only mode changes, not re‑authoring.

The three converging threads

Adoption succeeded when three strands locked: robust CAD ingest that preserved structure and metadata (PiXYZ, Datasmith, InstaLOD/Simplygon); standardized PBR/material capture through Substance 3D, X‑Rite AxF, NVIDIA MDL, and measured BRDFs; and GPU breakthroughs (RTX ray tracing, OptiX/OIDN denoising, and modern GI like UE5’s Lumen) that collapsed the fidelity gap. Each thread mattered on its own, but their concurrence was catalytic. Without deterministic ingest, variant logic collapses. Without standardized materials, lighting consistency across devices is a mirage. Without performant GI and upscaling, interactivity buckles under fidelity demands. Engine vendors and partners recognized this interdependence and invested accordingly: CAD connectors hardened, material pipelines gained enterprise features, and rendering modes diversified to suit deliverables from kiosk to print. The result is a durable foundation rather than a fad: a repeatable way to translate engineering truth into marketing expressiveness with minimal lost information along the way, and with performance envelopes that respect the realities of browsers, phones, and retail PCs.

Asset graphs and omnichannel reuse

The most durable competitive advantage to emerge is the canonical product graph. Studios that invested in runtime‑ready asset graphs—geometry, materials, variants, constraints, and metadata—discovered they could amortize costs across channels that used to require bespoke teams. A car’s paint material, validated once against lab captures, surfaces identically in showroom kiosks, AR apps, and path‑traced print. A hinge animation authored for a door becomes an HMI gesture, a broadcast reveal, and a QA tool. With automated ingest and validation, overnight PLM changes roll into engine scenes without human triage, and with analytics bound to variant states, marketers learn continuously which trims and bundles resonate. This omnichannel reuse has governance benefits: there’s only one place to fix errors, only one library to secure, only one set of contracts to audit. It also accelerates creative experimentation: because assets are parameterized and rules‑driven, teams can spin up limited‑time editions, regional bundles, and seasonal lighting without touching the core. Over time, the graph becomes an institutional memory, outlasting campaigns and carrying brand accuracy forward as a living standard.

Next frontiers

What’s next follows the same logic of convergence. USD‑native pipelines will reduce friction between DCC, PLM, and engines, especially as Omniverse connectors and engine USD readers stabilize around variants, layers, and live collaboration. Neural techniques—neural rendering and NeRFs—will slash capture and lookdev time for materials and environments, offering instant variants and view synthesis for configurators that feel photographic even on modest hardware. WebGPU will unlock higher‑fidelity browser experiences without server streaming, while cloud rendering evolves beyond Pixel Streaming into elastic, multi‑tenant stacks tuned for commerce, not just demos. Expect tighter PLM/CPQ bindings, where pricing, availability, and supply signals flow into runtime in near‑real time, and expect brand‑accurate materials to be guarded by cryptographic provenance so that measured assets carry proofs across partners. The north star remains consistent: iterate live and deploy everywhere. Studios that continue investing in real‑time talent, asset libraries, and automation will turn product graphs into full experiential commerce, where configuration, storytelling, and transaction collapse into a single, responsive surface powered by engines—and aligned, at last, with how markets actually move.
