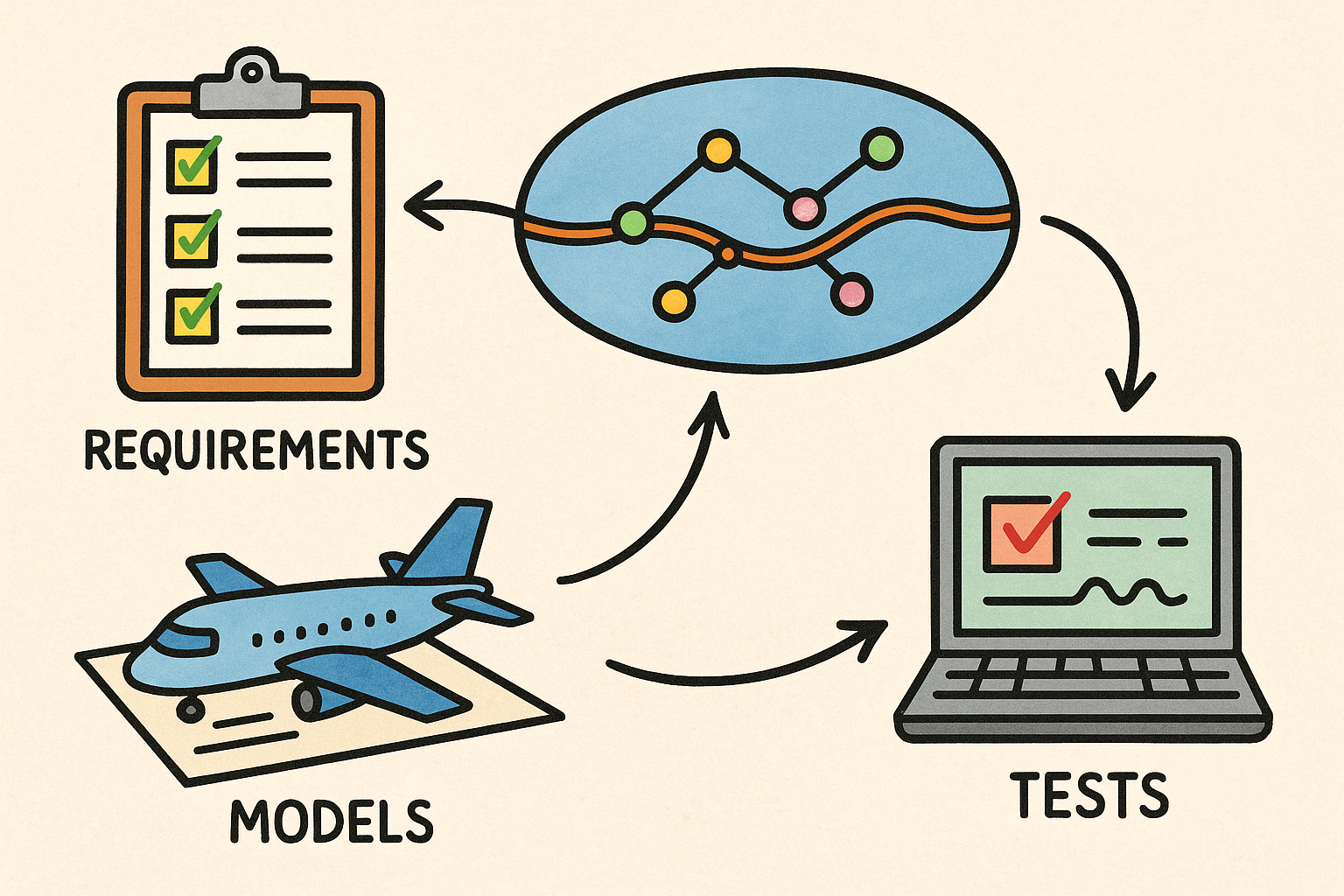

Semantics-First Digital Thread: Linking Requirements, Models, and Tests for Traceable Engineering

February 17, 2026 12 min read

Defining the digital thread linking requirements, models, and tests

Clarify scope and vocabulary

The goal of a modern engineering organization is to make intent, embodiment, and evidence move in lockstep. The mechanism that enables this cohesion is the digital thread: a semantics-first mesh of links that ties requirements to models to tests, across time and across variants. It differs fundamentally from a digital twin, which is a high-fidelity virtual counterpart of a particular physical asset or fleet. Where the twin represents behavior and state, the thread guarantees continuous traceability across the lifecycle—from stakeholder intent through system allocation, design detailing, verification, and ultimately operational feedback. In practice, the thread must embrace heterogeneity. It spans requirements (stakeholder, system, and derived), model artifacts (MBSE/SysML structures and behaviors; CAD for geometry and MBD with PMI; CAE such as CFD and FEA; control and plant models), and tests (simulation suites, unit and integration tests, HIL/SIL campaigns, V&V evidence). Each artifact lives in its native tool yet becomes part of a single, queryable fabric.

For a team starting the journey, three outcomes define success. First, traceability coverage ensures every requirement is linked to at least one implementing model element and one verifying test. Second, impact analysis accelerates decisions by answering, within minutes, which models and tests a change affects and what risk remains. Third, compliance evidence shifts from after-the-fact document hunts to on-demand, reproducible lineage with immutable baselines and e-signatures that withstand audits. To make this work without overwhelming engineers, the thread must be selective—capturing relationships that inform design and risk—while being precise about configuration context so that variant-appropriate truth is always what you see.

Success criteria

Quantifying the health of a digital thread is essential to sustain investment and steer improvements. Start with measurable coverage: the percentage of requirements linked to implementing model elements and verified by at least one test with passing evidence for each relevant variant. Break this down by criticality to avoid averages that hide risk; safety-critical and mission-critical items should approach 100% before lower-criticality items do. Next, track cycle time: the elapsed time from approval of a requirement change to restoration of a fully validated baseline. This metric rewards automation, good modularity, and the ability to recompute only what changed. Finally, ensure auditability: immutable lineage, cryptographic e-signatures, and cross-baseline diffs that explain who changed what, when, why, and with what downstream effects.
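As a rough illustration of coverage sliced by criticality, the sketch below counts a requirement as covered only when it is both implemented and verified. The `Requirement` fields and the sample data are assumptions made for this example, not a schema from any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    req_id: str
    criticality: str   # hypothetical classes, e.g. "safety" or "standard"
    implemented: bool  # linked to at least one implementing model element
    verified: bool     # at least one passing test for the variant in scope


def coverage_by_criticality(requirements):
    """Return {criticality: percent of requirements implemented and verified}."""
    totals = defaultdict(int)
    covered = defaultdict(int)
    for req in requirements:
        totals[req.criticality] += 1
        if req.implemented and req.verified:
            covered[req.criticality] += 1
    return {c: 100.0 * covered[c] / totals[c] for c in totals}


# Illustrative data only: 3 of 4 requirements are covered (75% overall).
reqs = [
    Requirement("R-1", "safety", True, True),
    Requirement("R-2", "safety", True, False),
    Requirement("R-3", "standard", True, True),
    Requirement("R-4", "standard", True, True),
]
coverage = coverage_by_criticality(reqs)
print(coverage)  # {'safety': 50.0, 'standard': 100.0}
```

The overall figure of 75% looks acceptable, yet the per-class view shows that the safety class sits at 50%, which is the kind of gap an average hides.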

Practical dashboards commonly include: (a) coverage by requirement class and variant; (b) unverified or at-risk items (e.g., requirements linked to deprecated models or flaky tests); (c) verification debt per release candidate; and (d) revalidation burn-down after a change request. To prevent gaming the metrics, align incentives with engineering reality. For example, do not count a requirement as verified by a simulation unless the simulation is tagged with correct model and parameter versions, has a passing status, and its numerical tolerances satisfy the acceptance criteria. Similarly, do not regard a baseline as auditable unless its link graph includes provenance for both link creation and result attachment. These criteria turn dashboards into decision tools rather than vanity displays.
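The anti-gaming rule for simulation evidence can be expressed as a single predicate. This is a minimal sketch: the `SimulationEvidence` fields and the version strings are hypothetical, and a real pipeline would read them from test result metadata.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SimulationEvidence:
    model_version: Optional[str]      # version tag of the simulated model
    parameter_version: Optional[str]  # version tag of the parameter set
    status: str                       # "pass" or "fail"
    measured_error: float             # deviation reported by the run
    tolerance: float                  # acceptance tolerance for that deviation


def counts_as_verified(evidence):
    """Apply all three rules; any single failure disqualifies the evidence."""
    tagged = bool(evidence.model_version) and bool(evidence.parameter_version)
    passed = evidence.status == "pass"
    within_tolerance = abs(evidence.measured_error) <= evidence.tolerance
    return tagged and passed and within_tolerance


good = SimulationEvidence("m-1.4.0", "p-7", "pass", 0.02, 0.05)
untagged = SimulationEvidence("m-1.4.0", None, "pass", 0.02, 0.05)
loose = SimulationEvidence("m-1.4.0", "p-7", "pass", 0.08, 0.05)

print(counts_as_verified(good), counts_as_verified(untagged), counts_as_verified(loose))
```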

Common failure modes to avoid early

Three pitfalls repeatedly undermine digital thread initiatives. The first is treating the thread as file synchronization. Synchronizing files across tools or folders does not create meaning; it merely clones bytes. A resilient thread encodes semantic linking—“requirement R-102 is satisfied by SysML block B-PowerModule, implemented by CAD assembly A-43, and verified by HIL test T-HV-StartUp”—using link types that analysis and policy engines can reason about. The second pitfall is relying on heroic manual link maintenance. Without automation and policy, links rot as fast as the design evolves. The right posture is assisted authoring: suggestions proposed by heuristics or ML, curated by engineers, and checked by nightly jobs that detect orphans, cycles, and broken effectivity. The third pitfall is losing configuration context. Variants, options, serial number effectivity, software versions, and calibration sets matter. A link that lacks effectivity is at best ambiguous and at worst misleading, producing false coverage and brittle releases.
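To make the difference between file synchronization and semantic linking concrete, the sketch below stores the example chain as typed links and runs two of the nightly checks mentioned above: orphan detection and cycle detection. The tuple layout, the link directions, and the artifact `T-Unlinked` are assumptions for illustration.

```python
# Each link is (source, link_type, target).
LINKS = [
    ("B-PowerModule", "satisfies", "R-102"),
    ("A-43", "implements", "B-PowerModule"),
    ("T-HV-StartUp", "verifies", "R-102"),
]
ARTIFACTS = ["R-102", "B-PowerModule", "A-43", "T-HV-StartUp", "T-Unlinked"]


def find_orphans(artifacts, links):
    """Artifacts that appear in no link at all."""
    linked = {s for s, _, _ in links} | {t for _, _, t in links}
    return sorted(set(artifacts) - linked)


def has_cycle(links, link_type):
    """Depth-first search for a cycle among links of one type."""
    graph = {}
    for source, kind, target in links:
        if kind == link_type:
            graph.setdefault(source, []).append(target)

    in_progress, done = set(), set()

    def visit(node):
        in_progress.add(node)
        for nxt in graph.get(node, []):
            if nxt in in_progress:
                return True  # back edge: nxt is already on the current path
            if nxt not in done and visit(nxt):
                return True
        in_progress.discard(node)
        done.add(node)
        return False

    return any(node not in done and visit(node) for node in list(graph))


print(find_orphans(ARTIFACTS, LINKS))  # ['T-Unlinked']
print(has_cycle(LINKS, "refines"))     # False
```

Because the link type is data, a policy can treat a cycle among refines links as an error while ignoring link types where cycles are harmless.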

Additional caution areas include: (a) creating link types ad hoc without a controlled vocabulary, which hinders cross-tool integration; (b) deferring provenance modeling, which later makes audits and root-cause analysis painful; and (c) assuming a single repository must store everything, which tends to force lowest-common-denominator compromises. Instead, design for polyglot persistence with a graph-indexed fabric and enforce consistency at the link and metadata layer. If you get the semantics, configuration, and automation right, the rest becomes a tractable integration problem rather than an endless migration project.

Reference architecture for a resilient digital thread

Identity, configuration, and lineage

The foundation of the thread is identity. Every requirement, model element, and test needs a globally unique, versioned, resolvable identifier, commonly a URI. Without stable IDs, links degrade into guesses about which artifact was meant. Use patterns such as tool-scope IDs mapped to enterprise-scope URIs, with content addressing or semantic versioning as appropriate. Beyond identity, the architecture must make configuration first-class. That means configuration-aware links that carry variant options, serial effectivity, and baseline context. A 150% definition (the superset of all options) can be specialized by option filters to produce variant-specific truth; links obey those filters so dashboards and impact analysis are always scoped correctly. Coupling links to configuration allows two crucial operations: projecting a baseline state for a release and comparing baselines to quantify change impact.
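The two operations named above, projecting a baseline and comparing baselines, can be sketched with links that carry the options they require. The URI base `https://thread.example.com`, the option names `HV` and `LV`, and the `Link` shape are all hypothetical.

```python
from dataclasses import dataclass

BASE = "https://thread.example.com"  # hypothetical enterprise URI base


@dataclass(frozen=True)
class Link:
    source: str
    link_type: str
    target: str
    required_options: frozenset = frozenset()  # empty means "applies everywhere"


def project(links, selected_options):
    """Filter the 150% link set down to the links valid for one variant."""
    selected = frozenset(selected_options)
    return {link for link in links if link.required_options <= selected}


def diff_baselines(old_links, new_links):
    """Report which links appear or disappear between two projections."""
    return {"added": new_links - old_links, "removed": old_links - new_links}


superset = {
    Link(f"{BASE}/model/B-PowerModule", "satisfies", f"{BASE}/req/R-102"),
    Link(f"{BASE}/test/T-HV-StartUp", "verifies", f"{BASE}/req/R-102",
         frozenset({"HV"})),
}

lv = project(superset, {"LV"})
hv = project(superset, {"HV"})
delta = diff_baselines(lv, hv)
print(len(lv), len(hv), [l.link_type for l in delta["added"]])  # 1 2 ['verifies']
```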

Lineage is the third pillar. Model and link provenance should be explicit, using a schema such as W3C PROV to capture who, what, when, and why. This allows queries like: Which verification results were attached after calibration set C-27 changed? Which engineer approved the refinement of requirement R-310 into derived requirement R-310b? Combined with e-signatures, lineage enables high-assurance workflows in regulated domains. Practical tips include storing: the source and target IDs, link type, creator, policy that justified the link, creation and modification timestamps, and the change request ID that motivated the link. When lineage is modeled as data, you can simulate release audits, highlight stale verifications when upstream changes occur, and reconstruct decision rationale with precision.
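A provenance record holding the fields listed above might look like the following sketch. It is a plain data structure rather than a W3C PROV serialization, and the record IDs, names, policy label, and dates are invented for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LinkProvenance:
    source_id: str
    target_id: str
    link_type: str
    creator: str
    policy: str            # policy that justified the link
    created_at: datetime
    modified_at: datetime
    change_request: str    # change request that motivated the link


def created_after(records, link_type, cutoff):
    """Links of one type whose creation timestamp is later than the cutoff."""
    return [r for r in records if r.link_type == link_type and r.created_at > cutoff]


def utc(year, month, day):
    return datetime(year, month, day, tzinfo=timezone.utc)


records = [
    LinkProvenance("RES-1", "R-310", "verifies", "alice", "sim-evidence",
                   utc(2026, 1, 10), utc(2026, 1, 10), "CR-140"),
    LinkProvenance("RES-2", "R-310", "verifies", "bob", "sim-evidence",
                   utc(2026, 1, 20), utc(2026, 1, 20), "CR-142"),
]

# Assumed date on which calibration set C-27 changed.
c27_changed = utc(2026, 1, 15)
late = created_after(records, "verifies", c27_changed)
print([r.source_id for r in late])  # ['RES-2']
```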

Semantic linking and standards

The thread thrives when it speaks a common language. Adopt a minimal but powerful set of link types, ideally aligned with OSLC so tools can interoperate: satisfies, verifies, refines, implements, tracesTo, and tests. Standard semantics turn native tool actions into predictable events (e.g., a SysML «satisfy» relationship maps to an OSLC “satisfies” link). For requirements, SysML v2 provides modern requirement and model element identities, while ReqIF remains the workhorse for exchanging requirements across organizations. For CAD and MBD, STEP AP242 with PMI preserves geometry, tolerances, and annotations in a supplier-neutral form. For dynamic behavior, FMI/FMU packages enable model exchange and co-simulation across control and plant tools, and test result metadata can leverage JUnit/xUnit schemas or domain-specific extensions to express verdicts, environment, seed, and tolerances.

Standards become practical when paired with conventions. For instance: (a) never create an implements link from SysML to CAD without an accompanying allocation rationale attribute; (b) require that a verifies link from any test to a requirement declares the variant filter and the acceptance criteria reference; and (c) ensure every imported ReqIF artifact is immediately minted an enterprise URI and mapped to native identities. Embrace open identifiers and import/export contracts so that you can swap tools without losing meaning. This practice avoids tool lock-in, safeguards investment in semantics, and lets you iterate on tools without breaking the thread.
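Conventions (a) and (b) lend themselves to an automated check at link creation time. The sketch below assumes links arrive as dictionaries; the key names (`source_kind`, `allocation_rationale`, `variant_filter`, `acceptance_criteria_ref`) and the reference `AC-17` are hypothetical.

```python
def check_link(link):
    """Return a list of convention violations for one link (empty if clean)."""
    problems = []
    link_type = link.get("type")

    # Convention (a): SysML-to-CAD implements links need an allocation rationale.
    if (link_type == "implements"
            and link.get("source_kind") == "sysml"
            and link.get("target_kind") == "cad"
            and not link.get("allocation_rationale")):
        problems.append("implements link is missing an allocation rationale")

    # Convention (b): verifies links declare variant filter and acceptance criteria.
    if link_type == "verifies":
        if not link.get("variant_filter"):
            problems.append("verifies link is missing a variant filter")
        if not link.get("acceptance_criteria_ref"):
            problems.append("verifies link is missing an acceptance criteria reference")

    return problems


incomplete = {"type": "verifies", "source": "T-HV-StartUp", "target": "R-102"}
complete = dict(incomplete, variant_filter="V1", acceptance_criteria_ref="AC-17")

print(check_link(incomplete))
print(check_link(complete))  # []
```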

Data fabric and integration patterns

Centralize semantics, not domains. Keep domain data in its source-of-truth systems—PLM for product structures and CAD, ALM for software and test management, MBSE repositories for SysML, simulation vaults for CAE—while indexing links and critical metadata in an enterprise graph store. This graph-indexed fabric supports low-latency queries such as “show unverified safety-critical requirements in variant V2” without copying heavy models. Pair the fabric with event-driven integration: webhooks or Kafka topics publish changes such as new requirements, updated SysML ports, CAD revisions, or test verdicts. Consumers update the thread index, trigger recomputations, and refresh dashboards. An API gateway mediates access with enforceable service contracts, rate limits, and schema evolution. Backward-compatible schema changes and feature flags let you evolve integrations without disrupting engineering flow.

Effective integration patterns include: (a) change-data-capture from PLM to emit “part revision released” events that fan out to simulation reruns; (b) model linting services that consume SysML updates and produce actionable feedback and links to guidelines; and (c) a simulation orchestrator that subscribes to change requests, runs selected FMUs, and attaches evidence to the appropriate requirement links. Remember that retries, idempotency, and deduplication are critical in event-driven systems. Build adapters that translate native tool events into normalized link updates and provenance entries. The result is a resilient, loosely coupled system that propagates changes rapidly without forcing a monolithic repository.
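Idempotency and deduplication matter because brokers and webhooks commonly redeliver messages. A minimal sketch of a consumer that tolerates redelivery follows; the event fields (`event_id`, `action`, and so on) are assumptions, and a production consumer would persist the seen-ID set rather than hold it in memory.

```python
class ThreadIndex:
    """In-memory stand-in for the link index, tolerant of duplicate events."""

    def __init__(self):
        self.links = set()
        self._seen_event_ids = set()

    def apply(self, event):
        """Apply a normalized link event once; return False for a duplicate."""
        if event["event_id"] in self._seen_event_ids:
            return False  # redelivered event: ignore without side effects
        self._seen_event_ids.add(event["event_id"])

        link = (event["source"], event["link_type"], event["target"])
        if event["action"] == "add":
            self.links.add(link)       # set semantics make the add idempotent
        elif event["action"] == "remove":
            self.links.discard(link)   # discard does not fail if already absent
        return True


index = ThreadIndex()
event = {
    "event_id": "evt-001",
    "action": "add",
    "source": "T-HV-StartUp",
    "link_type": "verifies",
    "target": "R-102",
}
first = index.apply(event)
second = index.apply(event)  # simulated redelivery
print(first, second, len(index.links))  # True False 1
```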

Security, governance, and compliance

A thread without strong security becomes an audit liability. Use attribute-based access control at both artifact and link levels, so that sensitive relationships (e.g., a requirement that reveals a novel safety strategy) can be restricted even if the target model is broadly visible. For supplier collaboration, apply redaction and surrogate IDs to share only what is necessary, while preserving link integrity. In regulated domains (for example, work governed by DO-178C, ISO 26262, or FDA regulations), enforce e-signatures for critical transitions and maintain immutable change logs. Immutable does not mean inflexible: store reversible projections so that authorized reviewers can reconstruct any baseline and re-run verification suites with identical seeds and configurations.

Governance is the policy brain of the thread. Deploy policy engines that enforce link completeness, review states, and segregation-of-duties before release. Examples: block a release if any safety-critical requirement lacks a passing verification in the intended configuration; prevent deletion of links that are cited in compliance evidence; require dual review when a derived requirement is created to refine a stakeholder requirement. Finally, bake compliance into normal engineering. Instead of assembling binders near shipment, create living reports that read from the graph, include provenance, and are signed as work progresses. In this mode, compliance becomes a byproduct of everyday engineering rather than a last-minute scramble.
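A policy engine can start as a list of small functions that each inspect a release candidate and report violations. The sketch below covers two of the examples above; the baseline layout, field names, and reviewer name are assumptions.

```python
def unverified_safety_requirements(baseline):
    """Safety-critical requirements lacking a passing verification."""
    return [
        f"{r['id']}: no passing verification in {baseline['configuration']}"
        for r in baseline["requirements"]
        if r["criticality"] == "safety" and not r["verified"]
    ]


def derived_without_dual_review(baseline):
    """Derived requirements reviewed by fewer than two distinct people."""
    return [
        f"{r['id']}: derived requirement needs two distinct reviewers"
        for r in baseline["requirements"]
        if r.get("derived") and len(set(r.get("reviewers", []))) < 2
    ]


POLICIES = [unverified_safety_requirements, derived_without_dual_review]


def release_violations(baseline):
    """Run every policy; an empty result means the release may proceed."""
    violations = []
    for policy in POLICIES:
        violations.extend(policy(baseline))
    return violations


candidate = {
    "configuration": "V2",
    "requirements": [
        {"id": "R-201", "criticality": "safety", "verified": True},
        {"id": "R-310b", "criticality": "safety", "verified": False,
         "derived": True, "reviewers": ["alice"]},
    ],
}
violations = release_violations(candidate)
print(violations)
```

Keeping each rule as its own function makes it straightforward to add, retire, or audit policies individually.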

Implementation playbook: from pilot to scale

Start small with a high-leverage slice

Begin where the digital thread can prove value quickly and unmistakably. Choose a safety-critical or high-defect subsystem, ideally one with a mix of mechanical, electrical, and software elements so cross-domain links matter. Constrain initial scope to 10–20 canonical link types, such as satisfies, verifies, implements, refines, allocatesTo, tests, tracesTo, mitigates, dependsOn, and impacts. Establish a clear baseline of current-state metrics: the percent of requirements with implementing models and passing tests, and the average change-to-validated-baseline cycle time. Also record defect escape rates and the revalidation effort after changes. This baseline becomes the yardstick for progress.

Within the pilot, define crisp acceptance criteria. For example: 90% coverage for safety-related requirements; ability to answer the impact query “What tests verify requirements impacted by Change CR-142 across variants V1 and V2?” within 60 seconds; and automated revalidation triggered within 5 minutes of a requirement change. Socialize conventions early: naming patterns for IDs; rules for when to use refines vs. derives; and the minimum metadata for test evidence (seed, tool version, environment). Keep the team small but cross-functional—requirements, MBSE, CAD, controls, verification, and DevOps—so feedback cycles are tight. Success in this slice establishes patterns, adapters, and dashboards that you can replicate subsystem by subsystem.

Create links where value accrues most

Not all links are equal. Start at the fault lines where misunderstandings and rework typically arise. Create explicit links from requirements to SysML blocks, activities, and states that embody those intents. Then connect SysML elements to CAD assemblies and control models that implement them. The handoff to verification is next: map models to simulation and test cases, and feed test results back to the requirements with verification status. For example, requirement R-201 “Inverter shall limit dV/dt to X” might link to a SysML constraint block, to a control model FMU where the limit is enforced, to a CAD thermal design that ensures heat dissipation, and to a HIL test suite verifying transients under variant V-BEV with calibration set C-12.

Accelerate this web with automation. Heuristics and ML can propose links based on name and ID similarity (e.g., R-201 and block B-R201-Limiter), parameter matching (matching tolerance bands or units), and structural proximity (allocation paths that imply implementation). Keep a human-in-the-loop for approval, and record the policy that justified the link in provenance. Useful practices include: (a) auto-suggest links during model and test authoring; (b) nightly jobs that flag unlinked artifacts; and (c) variant-aware suggestions that only propose connections valid in the current option context. Focus here yields outsized returns: fewer disconnects between intent and embodiment, faster impact analysis, and immediate improvement in coverage metrics.
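The name-and-ID heuristic can be sketched as a token match: a requirement ID with its punctuation removed must equal one whole token of the artifact ID. This is one simple heuristic among many, not an ML approach, and the proposal fields are hypothetical. Proposals are emitted with status "proposed" so an engineer still approves them.

```python
import re


def tokens(identifier):
    """Split an ID on non-alphanumerics: 'B-R201-Limiter' -> {'B', 'R201', 'LIMITER'}."""
    return {t for t in re.split(r"[^A-Za-z0-9]+", identifier.upper()) if t}


def compact(identifier):
    """Drop punctuation from an ID: 'R-201' -> 'R201'."""
    return re.sub(r"[^A-Za-z0-9]", "", identifier.upper())


def suggest_links(requirement_ids, artifact_ids):
    """Propose satisfies links where a requirement ID appears as a whole token."""
    proposals = []
    for req in requirement_ids:
        key = compact(req)
        for art in artifact_ids:
            if key in tokens(art):
                proposals.append({
                    "source": art,
                    "type": "satisfies",
                    "target": req,
                    "reason": "id-token-match",  # recorded for provenance
                    "status": "proposed",        # awaits human approval
                })
    return proposals


proposals = suggest_links(["R-201", "R-20"], ["B-R201-Limiter", "B-Cooling"])
print([(p["source"], p["target"]) for p in proposals])
# [('B-R201-Limiter', 'R-201')]
```

Matching whole tokens rather than substrings keeps `R-20` from being proposed against `B-R201-Limiter`.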

Automate verification and reporting

With links in place, make verification flow automatically. Treat models as buildable artifacts in CI. Lint SysML (check ports, value types, and allocation consistency), compile FMUs, execute simulation suites, and run CAD checks for PMI completeness, interference, and mass properties. Publish results with resolvable IDs and attach them to verifying links with variant filters. A well-implemented pipeline turns every approved change into a cascade: affected models are diffed, relevant simulations re-run, and tests scheduled across HIL/SIL farms. If a previously passing requirement now fails in variant V2, dashboards should show it as at risk within minutes, and the release should block until verification debt is cleared.
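One way to decide which simulations are relevant after a change is to fingerprint each simulation's inputs and re-run only those whose fingerprint differs from the last recorded run. The sketch below assumes inputs are JSON-serializable dictionaries; the simulation names and input keys are invented.

```python
import hashlib
import json


def fingerprint(inputs):
    """Stable SHA-256 digest of a JSON-serializable input description."""
    canonical = json.dumps(inputs, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def simulations_to_rerun(current_inputs, last_fingerprints):
    """Names of simulations that are new or whose inputs have changed."""
    return sorted(
        name
        for name, inputs in current_inputs.items()
        if last_fingerprints.get(name) != fingerprint(inputs)
    )


previous_inputs = {
    "thermal": {"model": "fmu-2.1", "calibration": "C-12"},
    "startup": {"model": "fmu-2.1", "calibration": "C-12"},
}
last_run = {name: fingerprint(inp) for name, inp in previous_inputs.items()}

current_inputs = {
    "thermal": {"model": "fmu-2.1", "calibration": "C-27"},  # calibration changed
    "startup": {"calibration": "C-12", "model": "fmu-2.1"},  # same content, new key order
}
stale = simulations_to_rerun(current_inputs, last_run)
print(stale)  # ['thermal']
```

Sorting keys before hashing means a reordered but otherwise identical input description does not trigger a re-run.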

Reporting must be query-first. Coverage dashboards segmented by variant and baseline help engineering and quality leaders triage attention. For deep analysis, expose SPARQL or GraphQL over the graph index to enable questions like: “Which tests verify requirements impacted by Change CR-142 across variants V1 and V2?” or “Which derived requirements depend on parameter P3 that changed in calibration C-27?” Embed permalinks to evidence and provenance so auditors can drill down to signed results. Avoid manual report assembly: instead, generate review packets automatically from queries, including diffs, signatures, and test attachments. Over time, tune pipelines to be incremental—re-run only those simulations whose inputs changed—to keep cycle time low while maintaining confidence.

Manage configuration and variability

Products live as families, not singletons. Managing variability means anchoring links to effectivity conditions: part numbers and revisions, software versions, feature selections, and calibration sets. Use a 150% model that describes the superset of structures and behaviors, and apply feature models or option sets to derive variant-specific configurations. Then, generate variant-specific V&V plans automatically by filtering the link graph. Each link carries the variant predicate it applies to (e.g., Feature.EV && Region.EU && HW.Rev ≥ B). When a change lands, its impact analysis respects those predicates, showing the minimum set of models and tests to revalidate for each variant.
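The example predicate can be evaluated without resorting to `eval` by storing it as a list of conjuncts. This sketch makes two simplifying assumptions: predicates are pure conjunctions, and hardware revisions are single uppercase letters so that string comparison matches revision order.

```python
import operator

OPERATORS = {"==": operator.eq, ">=": operator.ge}


def link_applies(predicate, configuration):
    """True when every (key, operator, expected) conjunct holds for the configuration."""
    return all(
        key in configuration and OPERATORS[op](configuration[key], expected)
        for key, op, expected in predicate
    )


# Feature.EV && Region.EU && HW.Rev >= B
PREDICATE = [
    ("Feature.EV", "==", True),
    ("Region.EU", "==", True),
    ("HW.Rev", ">=", "B"),
]

eu_rev_c = {"Feature.EV": True, "Region.EU": True, "HW.Rev": "C"}
eu_rev_a = {"Feature.EV": True, "Region.EU": True, "HW.Rev": "A"}
no_region = {"Feature.EV": True, "HW.Rev": "C"}

print(link_applies(PREDICATE, eu_rev_c))   # True
print(link_applies(PREDICATE, eu_rev_a))   # False
print(link_applies(PREDICATE, no_region))  # False
```

A missing key counts as "does not apply", which errs on the side of not claiming coverage for an under-specified configuration.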

Practical steps include: (a) enforce that every requirement declares its applicability—no “global” assumptions; (b) represent calibration data and software builds as first-class, versioned artifacts with their own URIs; and (c) teach the CI to schedule verifications with matrix builds across variant selections and key calibrations. For large product lines, group variants into equivalence classes where behavior and verification are identical to reduce test explosion. Above all, ensure UI and reports keep configuration front-and-center. An apparently green requirement in one variant may be red in another; the thread should never blur those truths. When configuration-aware links are native, decisions become precise and defensible.
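Grouping variants into equivalence classes can be sketched by keying each variant on only the options that affect verification. Which options are verification-relevant is an engineering judgment; the option names and variants below are invented.

```python
from collections import defaultdict


def equivalence_classes(variants, relevant_options):
    """Group variant names that agree on every verification-relevant option."""
    groups = defaultdict(list)
    for name, options in variants.items():
        signature = tuple((key, options.get(key)) for key in sorted(relevant_options))
        groups[signature].append(name)
    return sorted(sorted(group) for group in groups.values())


variants = {
    "V1": {"motor": "A", "trim": "base"},
    "V2": {"motor": "A", "trim": "premium"},
    "V3": {"motor": "B", "trim": "base"},
}

# Assumption: only the motor option changes the behavior under test.
classes = equivalence_classes(variants, ["motor"])
print(classes)  # [['V1', 'V2'], ['V3']]
```

Here one representative from each class would be scheduled in the CI matrix, so three variants need two verification runs instead of three.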

Antipatterns and how to avoid them

Several antipatterns recur. Link rot arises when refactors, renames, and tool upgrades silently break relationships. Counter with scheduled link health checks, orphan detection, and repair workflows that suggest replacements based on heuristics. Another pitfall is the monolithic migration: attempting to move all artifacts into a single new system before you link anything. Prefer a strangler pattern with adapters—stand up the graph index and connectors, begin progressive linking, and retire legacy systems only after value is realized. Tool lock-in is a third danger. Avoid proprietary cul-de-sacs by favoring open IDs, standards-backed connectors (OSLC, ReqIF, STEP AP242, FMI/FMU), and bulk link export. When contracts require a vendor tool, insist on export guarantees and versioned schemas so your semantics remain portable.

Additional watch-outs include: (a) building bespoke link vocabularies that no integration understands; (b) letting CI pipelines become flaky, which erodes trust in dashboards; and (c) modeling provenance superficially, which hampers audits and root-cause analysis. To prevent these, appoint a small architecture council to steward link types and schemas, invest in CI observability (retries, test flakiness detection, historical trends), and treat provenance as a first-class data product. Finally, resist over-automation. Keep humans in the loop where judgment matters (accepting a link proposal, waiving a verification, or refining a requirement) and record those decisions with rationale. Good automation amplifies expertise; it never replaces accountability.

Metrics that matter

Metrics should illuminate risk and speed. Start with requirement verification coverage, sliced by criticality, variant, and baseline. It is not enough to know 85% overall; you need to see that safety-critical coverage is 98% in V1 but only 76% in V2. Track mean time to reflect a change across models and tests—from requirement approval to revalidated baseline—because it rewards modular designs and incremental revalidation strategies. Monitor escaped defect rate and correlate it with missing, stale, or low-confidence links; this ties quality outcomes to thread hygiene. Finally, measure the percentage of automated test evidence attached to releases. High automation doesn’t just save time; it reduces human error and strengthens auditability.

Complement outcome metrics with process health metrics: link creation and approval latency; orphan link count trend; flakiness rates for simulations and HIL; and policy violations caught pre-release. For leadership, visualize verification debt over time per program. For engineers, present actionable lists: “requirements at risk by next milestone,” “tests with high instability,” and “models needing reallocation after CR-142.” Close the loop by tying metrics to interventions—e.g., add a “coverage gap” retro every sprint where top gaps are assigned owners—and reward sustained improvements. With these measures, the digital thread becomes a compass, not merely a ledger.

Conclusion

A robust digital thread is a semantics-first, event-driven system that makes requirement intent computable, model relationships explicit, and test evidence queryable. Its strength flows from stable identities, configuration-aware links, and rich provenance—foundations that outlast any particular tool stack. Start with a focused pilot where traceability gaps are costly, automate link creation and verification with human oversight, and quantify impact with coverage and latency metrics. Avoid link rot, monolithic migrations, and proprietary cul-de-sacs by embracing open standards and a graph-indexed data fabric. When designed this way, the digital thread shortens change cycles, reduces escaped defects, and turns compliance from a scramble into the natural exhaust of everyday engineering. Most importantly, it restores confidence: engineers trust what the dashboards say because the links are meaningful, the evidence is current, and the configuration context is unambiguous.

The payoff compounds. As more subsystems join the thread, impact analysis grows sharper, model reuse increases, and verification becomes predictably incremental rather than all-or-nothing. Suppliers integrate faster with redacted yet resolvable links; auditors find signed, immutable lineages waiting for them; and leadership gains early warning on risk without micromanaging. The journey is iterative, but the principles are stable: invest in identity and provenance; encode semantics with open standards; drive updates through events; and keep humans in the decision loop. With those cornerstones, the digital thread ceases to be an aspirational slogan and becomes the operational backbone of modern engineering.
